@Alex-Kushleyev Sorry for the delay. Here is some data for you from different configurations:

| File | Recorder | Mean (ms) | Std dev (ms) | Total messages |
|---|---|---|---|---|
| log0000 | voxl-logger | 0.9766542020947352 | 0.0013052458028126252 | 61393 |
| data.bag | rosbag record | 3.230353079590143 | 2.992801413066607 | 18361 |
| log0001 | voxl-logger | 0.9766513801209619 | 0.0013052148029740315 | 61342 |
| data_nodelay.bag | rosbag record | 3.2565453276935123 | 3.0139953610144303 | 17860 |
| log0002 | voxl-logger | 0.976641095612789 | 0.001267007877929727 | 61384 |
| data_perf.bag | rosbag record | 3.73874577006071 | 3.513557787123189 | 15819 |
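The mean and std dev figures are presumably statistics of the time between consecutive IMU messages (1 / 0.9767 ms is about 1024 Hz for voxl-logger). For anyone wanting to reproduce them, here is a minimal sketch, assuming the per-message timestamps have already been pulled out of the log or bag into a list of integer nanoseconds. `interval_stats_ms` is a hypothetical helper, and it uses the population std dev, which may differ slightly from whatever produced the numbers above.

```python
import statistics


def interval_stats_ms(timestamps_ns):
    """Return (mean_ms, std_ms, count) for the gaps between consecutive timestamps.

    Hypothetical helper: timestamps_ns is assumed to be a list of integer
    nanosecond timestamps, one per IMU message, in recording order.
    The std dev is the population std dev (statistics.pstdev).
    """
    if len(timestamps_ns) < 2:
        raise ValueError("need at least two timestamps to form an interval")
    # Gap between each message and the previous one, converted from ns to ms.
    deltas_ms = [(b - a) / 1e6 for a, b in zip(timestamps_ns, timestamps_ns[1:])]
    return statistics.fmean(deltas_ms), statistics.pstdev(deltas_ms), len(timestamps_ns)


# Example: a 1 kHz stream with a single dropped sample (t = 2 ms is missing).
ts = [0, 1_000_000, 3_000_000, 4_000_000]
mean_ms, std_ms, n = interval_stats_ms(ts)
print(f"mean: {mean_ms} ms, std dev: {std_ms} ms, total messages: {n}")
```

Even one missing sample pushes both the mean and the std dev up, which is the pattern in the rosbag rows. For the bag, the timestamps could be collected with the ROS 1 Python API, e.g. `[t.to_nsec() for _, _, t in rosbag.Bag('data.bag').read_messages(topics=['/imu_apps'])]`. Note that this uses the time each message was recorded rather than the IMU sample time, so which of the two is used matters when comparing against voxl-logger.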

Note the large difference in total message count between the two recorders in every run, which indicates that a lot of messages are being dropped by the ROS node.
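To put numbers on the drop, the sketch below converts each mean interval into an average rate and computes what fraction of the voxl-logger message count made it into the bag. It only uses the figures reported for the three runs; the helper names are hypothetical.

```python
# Figures copied from the three runs: (mean interval in ms, total messages)
# for voxl-logger first, then rosbag record.
RUNS = {
    "log0000 / data.bag": ((0.9766542020947352, 61393), (3.230353079590143, 18361)),
    "log0001 / data_nodelay.bag": ((0.9766513801209619, 61342), (3.2565453276935123, 17860)),
    "log0002 / data_perf.bag": ((0.976641095612789, 61384), (3.73874577006071, 15819)),
}


def rate_hz(mean_interval_ms):
    """Average message rate implied by a mean inter-message interval."""
    return 1000.0 / mean_interval_ms


def retention(bag_count, logger_count):
    """Fraction of the voxl-logger message count that also reached the bag."""
    return bag_count / logger_count


for name, ((log_ms, log_n), (bag_ms, bag_n)) in RUNS.items():
    print(
        f"{name}: voxl-logger {rate_hz(log_ms):.0f} Hz, "
        f"rosbag {rate_hz(bag_ms):.0f} Hz, "
        f"rosbag kept {retention(bag_n, log_n):.0%} of messages"
    )
```

With these numbers, voxl-logger averages about 1024 Hz in every run, while rosbag gets roughly 267 to 310 Hz. That means the bag keeps only about 26 to 30% of the messages, and the perf run is the worst of the three.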

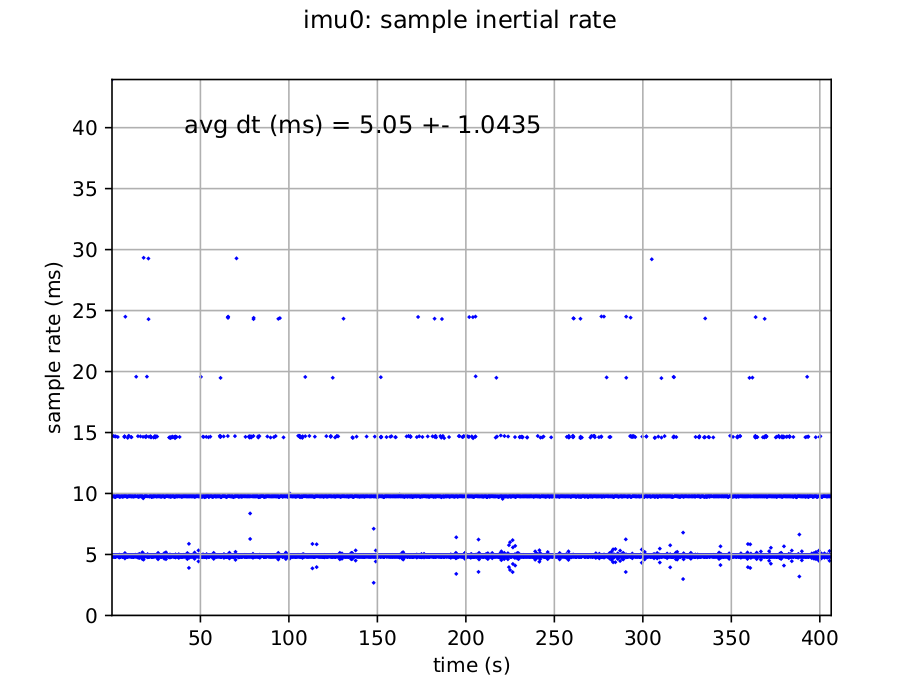

The plot above shows the sampling rate and standard deviation for the different configurations. Logging was done with `voxl-logger -t 60 -i imu_apps -d bags/imu_test/` and `rosbag record /imu_apps -O data --duration 60`. I also tried recording the bag with `--tcpnodelay`, but this seems to have had no effect. CPU usage during the performance run was as follows:

| Name | Freq (MHz) | Temp (C) | Util (%) |
|---|---|---|---|
| cpu0 | 1804.8 | 40.1 | 22.33 |
| cpu1 | 1804.8 | 39.7 | 18.10 |
| cpu2 | 1804.8 | 40.1 | 17.82 |
| cpu3 | 1804.8 | 39.7 | 16.16 |
| cpu4 | 2419.2 | 39.0 | 0.00 |
| cpu5 | 2419.2 | 38.6 | 0.00 |
| cpu6 | 2419.2 | 37.8 | 0.00 |
| cpu7 | 2841.6 | 39.0 | 0.00 |
| Total | | 40.1 | 9.30 |
| 10s avg | | | 12.26 |
| GPU | 305.0 | 37.4 | 0.00 |
| GPU 10s avg | | | 0.00 |

memory temp: 37.8 C

memory used: 734/7671 MB

Flags: CPU freq scaling mode: performance
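The per-core frequencies in the table can be cross-checked against each core's hardware maximum using the standard Linux cpufreq sysfs files `scaling_cur_freq` and `cpuinfo_max_freq`, both reported in kHz. Here is a minimal sketch, assuming the VOXL 2 kernel exposes them under `/sys/devices/system/cpu/cpuN/cpufreq/` the way mainline Linux does. I have not confirmed which files voxl-cpu-monitor itself reads, and `read_cpu_freqs_mhz` is a hypothetical helper.

```python
from pathlib import Path


def read_cpu_freqs_mhz(sysfs_root="/sys/devices/system/cpu"):
    """Return {cpu_name: (current_mhz, max_mhz)} from the cpufreq sysfs files.

    scaling_cur_freq and cpuinfo_max_freq are reported by the kernel in kHz.
    CPUs without readable cpufreq files (e.g. offline cores) are skipped.
    """
    result = {}
    root = Path(sysfs_root)
    # Match cpu0, cpu1, ... and sort numerically (so cpu10 comes after cpu9).
    for cpu_dir in sorted(root.glob("cpu[0-9]*"), key=lambda p: int(p.name[3:])):
        freq_dir = cpu_dir / "cpufreq"
        cur_file = freq_dir / "scaling_cur_freq"
        max_file = freq_dir / "cpuinfo_max_freq"
        if not (cur_file.is_file() and max_file.is_file()):
            continue
        cur_mhz = int(cur_file.read_text()) / 1000.0
        max_mhz = int(max_file.read_text()) / 1000.0
        result[cpu_dir.name] = (cur_mhz, max_mhz)
    return result


if __name__ == "__main__":
    for cpu, (cur, mx) in read_cpu_freqs_mhz().items():
        print(f"{cpu}: {cur:.1f} / {mx:.1f} MHz")
```

If a core's current frequency equals its `cpuinfo_max_freq`, it is already running as fast as its cluster allows. A lower value on some cores may then simply reflect a cluster with a lower maximum rather than throttling.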

The only thing that seems out of the ordinary here is that several CPUs (cpu0 to cpu3, at 1804.8 MHz) are consistently running at a lower frequency than the others. Do you have any idea why that could be? Otherwise, it seems that the transfer from voxl-imu-server to ROS loses quite a lot of messages. Here is the VOXL 2 Mini install information:

system-image: 1.7.8-M0104-14.1a-perf

kernel: #1 SMP PREEMPT Sat May 18 03:34:36 UTC 2024 4.15

hw platform: M0104

mach.var: 2.0.0

voxl-suite: 1.3.3

This was with the following IMU config:

"imu0_enable": true,

"imu0_sample_rate_hz": 1000,

"imu0_lp_cutoff_freq_hz": 92,

"imu0_rotate_common_frame": true,

"imu0_fifo_poll_rate_hz": 100,

"aux_imu1_enable": false,

"aux_imu1_bus": 1,

"aux_imu1_sample_rate_hz": 1000,

"aux_imu1_lp_cutoff_freq_hz": 92,

"aux_imu1_fifo_poll_rate_hz": 100,

"aux_imu2_enable": false,

"aux_imu2_spi_bus": 14,

"aux_imu2_sample_rate_hz": 1000,

"aux_imu2_lp_cutoff_freq_hz": 92,

"aux_imu2_fifo_poll_rate_hz": 100,

"aux_imu3_enable": false,

"aux_imu3_spi_bus": 5,

"aux_imu3_sample_rate_hz": 1000,

"aux_imu3_lp_cutoff_freq_hz": 92,

"aux_imu3_fifo_poll_rate_hz": 100