@Leo-Allesch ,

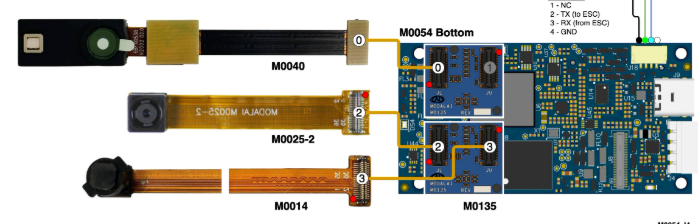

It seems that the TOF sensor and camera port 2 are not functional. I assume you tried both M0135 adapters when testing port 2? Since it was working before, this is more likely a hardware issue than a software one, although the exact cause is not clear.

All of your cameras are interchangeable in the camera ports, as long as the camera ports and sensors are working (and the sensormodule drivers are set up correctly).

By default, port 1 (camera slot 1) is reserved for use in a stereo configuration when used with M0076, like this one: https://docs.modalai.com/voxl2-camera-configs/#c10---front-stereo-only . So it will not work as a generic camera port when used with M0135. This behavior can be reconfigured in software (it requires a kernel change), but we don't have a ready-to-go kernel with just this change (we can revisit this later if needed).

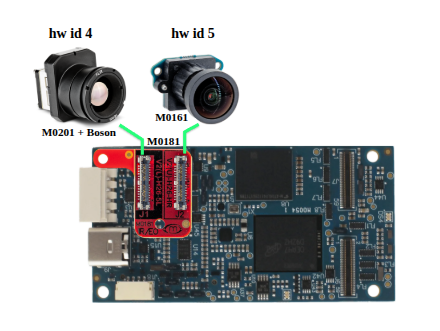

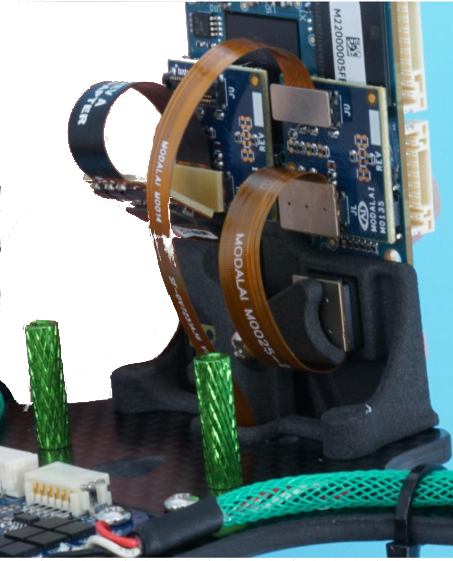

By the way, you can also test VOXL2 J8. J8 is set up in a similar way to J6: J8L can be used for any camera, but J8U is reserved (by default) for another stereo pair. Still, this gives you one more port to test as a double check. The camera slot IDs for J8 are 4 and 5. Please note that J8 is rotated compared to J6 and J7. You can see how a TOF sensor is attached to VOXL2 J8 via an M0076 adapter here: https://docs.modalai.com/voxl2-camera-configs/#cx---two-time-of-flights-tof . M0076 is a single-port version of the M0135 interposer (it provides only the lower camera port).

So, if you test VOXL2 J8, use the lower camera port 4 (J8L).
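To keep the slot details above straight, here is a rough lookup sketch in Python. It only encodes what is stated in this post; the `SLOTS` table and the `generic_slots` helper are made-up names for illustration, and the connector label for slot 1 is an assumption based on J8 mirroring J6. It is not part of any VOXL tooling.

```python
# Camera slot notes collected from this thread (default kernel behavior).
# The connector label for slot 1 is an assumption inferred from the
# statement that J8 is set up in a similar way to J6.
SLOTS = {
    1: {"connector": "J6 upper (assumed)", "generic": False,
        "note": "reserved for stereo by default"},
    4: {"connector": "J8L", "generic": True,
        "note": "usable for any camera"},
    5: {"connector": "J8U", "generic": False,
        "note": "reserved for stereo by default"},
}


def generic_slots(slots=SLOTS):
    """Return slot IDs that can host any single camera without a kernel change."""
    return sorted(slot for slot, info in slots.items() if info["generic"])


if __name__ == "__main__":
    for slot in generic_slots():
        info = SLOTS[slot]
        print(f"slot {slot}: {info['connector']} ({info['note']})")
```

Of the slots listed here, only slot 4 (J8L) comes out as a candidate for a single-camera test.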

Yes, M0040 is EOL, so replacements are not available. The upgraded version of the TOF sensor is here: https://docs.modalai.com/M0169/ ; however, it has different dimensions and connector requirements. We can discuss this further if needed.

What is your goal? Do you need the original configuration working, or are you potentially open to an updated setup?

Alex