Can anyone recommend a TFLite Colab notebook for VOXL2 training?

-

I successfully trained a YOLOv5 model using the following instructions:

https://docs.ultralytics.com/yolov5/

https://docs.ultralytics.com/yolov5/tutorials/train_custom_data/

https://docs.ultralytics.com/yolov5/tutorials/model_export/

I followed the directions to export the model to TFLite at FP16 half precision:

python export.py --weights best.pt --include tflite --half

ProTip: Add --half to export models at FP16 half precision for smaller file sizes
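Before copying an exported model to VOXL2, it can be useful to check its input and output tensor types on the desktop and compare them with a model that is known to work in voxl-tflite-server. A minimal sketch, assuming TensorFlow is installed on the host machine; it uses tf.lite.Interpreter with get_input_details() and get_output_details(), and the script name inspect_tflite.py is only a placeholder.

```python
"""Print a .tflite model's input/output tensor names, dtypes, and shapes.

Assumes TensorFlow is installed on the desktop machine (not on VOXL2).
Usage (script name is a placeholder): python inspect_tflite.py path/to/model.tflite
"""
import sys


def summarize(details):
    """Format tensor-detail dicts (as returned by tf.lite.Interpreter) as strings."""
    lines = []
    for d in details:
        # 'dtype' is a NumPy type class such as numpy.float32; show its short name.
        dtype = getattr(d["dtype"], "__name__", str(d["dtype"]))
        shape = "x".join(str(int(s)) for s in d["shape"])
        lines.append(f"{d['name']}: {dtype} [{shape}]")
    return lines


def main(model_path):
    # Imported here so summarize() can be reused without TensorFlow installed.
    import tensorflow as tf

    interpreter = tf.lite.Interpreter(model_path=model_path)
    interpreter.allocate_tensors()
    for label, details in (("inputs", interpreter.get_input_details()),
                           ("outputs", interpreter.get_output_details())):
        print(label + ":")
        for line in summarize(details):
            print("  " + line)


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("usage: python inspect_tflite.py MODEL.tflite")
    else:
        main(sys.argv[1])
```

Running it on both your model and a known-good model makes any dtype or shape difference easy to spot.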

When I bring the model over to VOXL2, I get the following error in /var/log/syslog:

Jan 7 18:19:21 m0054 systemd[1]: Started voxl-tflite-server.

Jan 7 18:19:21 m0054 bash[18587]: WARNING: Unknown model type provided! Defaulting post-process to object detection.

Jan 7 18:19:21 m0054 bash[18587]: INFO: Created TensorFlow Lite delegate for GPU.

Jan 7 18:19:29 m0054 bash[18587]: received SIGTERM

Jan 7 18:19:29 m0054 systemd[1]: Stopping voxl-tflite-server...

Jan 7 18:19:39 m0054 bash[18587]: INFO: Initialized OpenCL-based API.

Jan 7 18:19:39 m0054 bash[18587]: INFO: Created 1 GPU delegate kernels.

Jan 7 18:19:39 m0054 bash[18587]: ------VOXL TFLite Server------

Jan 7 18:19:40 m0054 bash[18587]: Error in TensorData<float>: should not reach here

Jan 7 18:19:40 m0054 bash[18587]: Segmentation fault:

Jan 7 18:19:40 m0054 bash[18587]: Fault thread: voxl-tflite-ser(tid: 18670)

Jan 7 18:19:40 m0054 systemd[1]: voxl-tflite-server.service: Main process exited, code=killed, status=11/SEGV

Jan 7 18:19:40 m0054 systemd[1]: voxl-tflite-server.service: Failed with result 'signal'.

Jan 7 18:19:40 m0054 systemd[1]: Stopped voxl-tflite-server.

Jan 7 18:19:40 m0054 systemd[1]: Started voxl-tflite-server.

Jan 7 18:19:40 m0054 bash[18674]: WARNING: Unknown model type provided! Defaulting post-process to object detection.

Jan 7 18:19:40 m0054 bash[18674]: INFO: Created TensorFlow Lite delegate for GPU.

Jan 7 18:19:41 m0054 bash[1425]: ERROR in pipe_client_init_channel opening request pipe: No such device or address

Jan 7 18:19:41 m0054 bash[1425]: Most likely the server stopped without cleaning up

Jan 7 18:19:41 m0054 bash[1425]: Client is cleaning up pipes for the server -

Hey, happy to try and help resolve this!

The first thing I notice is that voxl-tflite-server is defaulting your model to object detection, which is incorrect since you have a YOLO model. This is because the TFLite server does a string comparison to determine which model is being used, as seen here. This means you'll need to rename your model to

yolov5_float16_quant.tflite for the time being to get proper YOLO processing. Obviously this isn't ideal, and we're working on improving this functionality in a future software release.

However, I'm more curious about that "should not reach here" message. I've traced it back to

inference_helper.cpp, which is likely hitting this line or one of the similar ones near it. In other words, the TFLite server is trying to read your tensors in one expected format, but the type it actually receives is different.

What you should try first is the renaming suggestion from my first paragraph. It's possible that defaulting to an object detection model instead of a YOLO model is causing the server to read your tensors as the wrong data type. If that doesn't work, could you provide me with the output of

cat /etc/modalai/voxl-tflite-server.conf

That might help me better diagnose your issue. If I still can't help, I may need you to pass along your actual model file so that I can load your exact configuration and debug it. Sorry about this!
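To make the filename dependence concrete, here is a hypothetical Python illustration, assuming an exact-name comparison. It is not the server's code (which is C++ and may match names differently); only yolov5_float16_quant.tflite comes from this thread, and the lookup table and type labels are made up for the example.

```python
"""Hypothetical illustration of choosing post-processing from the model filename.

This is NOT voxl-tflite-server's actual code. Only the name
yolov5_float16_quant.tflite comes from this thread; the type labels are made up.
"""
import os

# Hypothetical exact-name table: filename -> post-processing type.
KNOWN_MODELS = {
    "yolov5_float16_quant.tflite": "yolov5",
}

FALLBACK = "object_detection"


def select_post_process(model_path):
    """Return (post_process_type, warning_or_None) based only on the file's basename."""
    name = os.path.basename(model_path)
    if name in KNOWN_MODELS:
        return KNOWN_MODELS[name], None
    # Any other name falls through to the default, similar to the
    # "Unknown model type provided!" warning in the syslog output.
    return FALLBACK, "Unknown model type provided! Defaulting post-process to object detection."


if __name__ == "__main__":
    for path in ("/usr/bin/dnn/yolov5_float16_quant.tflite", "/usr/bin/dnn/best.tflite"):
        print(path, "->", select_post_process(path))
```

With a table like this, any other exported name (for example best.tflite) silently takes the fallback path, which would be consistent with the WARNING line in the first log.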

Thomas Patton

thomas.patton@modalai.com -

@Thomas-Patton Thanks for your response. I didn't realize you were checking for exact names.

I renamed my YOLO model to yolov5_float16_quant.tflite and updated the yolov5_labels.txt file, but I'm still getting an error:

Jan 9 15:06:30 m0054 systemd[1]: Started voxl-tflite-server.

Jan 9 15:06:30 m0054 bash[5690]: INFO: Created TensorFlow Lite delegate for GPU.

Jan 9 15:06:47 m0054 bash[5690]: INFO: Initialized OpenCL-based API.

Jan 9 15:06:47 m0054 bash[5690]: INFO: Created 1 GPU delegate kernels.

Jan 9 15:06:47 m0054 bash[5690]: ------VOXL TFLite Server------

Jan 9 15:06:47 m0054 bash[5690]: Segmentation fault:

Jan 9 15:06:47 m0054 bash[5690]: Fault thread: voxl-tflite-ser(tid: 5770)

Jan 9 15:06:47 m0054 bash[5690]: Fault address: 0x656972623d3d206e

Jan 9 15:06:47 m0054 bash[5690]: Unknown reason.

Jan 9 15:06:47 m0054 bash[1410]: ERROR in pipe_client_init_channel opening request pipe: No such device or address

Jan 9 15:06:47 m0054 bash[1410]: Most likely the server stopped without cleaning up

Jan 9 15:06:47 m0054 bash[1410]: Client is cleaning up pipes for the server

Jan 9 15:06:47 m0054 systemd[1]: voxl-tflite-server.service: Main process exited, code=killed, status=11/SEGV

Jan 9 15:06:47 m0054 systemd[1]: voxl-tflite-server.service: Failed with result 'signal'.

Jan 9 15:06:48 m0054 systemd[1]: voxl-tflite-server.service: Service hold-off time over, scheduling restart.

Jan 9 15:06:48 m0054 systemd[1]: voxl-tflite-server.service: Scheduled restart job, restart counter is at 16.

Jan 9 15:06:48 m0054 systemd[1]: Stopped voxl-tflite-server.

Jan 9 15:06:48 m0054 systemd[1]: Started voxl-tflite-server.

Jan 9 15:06:48 m0054 bash[5774]: INFO: Created TensorFlow Lite delegate for GPU.

Here's my voxl-tflite-server.conf:

/**
 * This file contains configuration that's specific to voxl-tflite-server.
 *
 * skip_n_frames      - how many frames to skip between processed frames. For 30Hz
 *                      input frame rate, we recommend skipping 5 frame resulting
 *                      in 5hz model output. For 30Hz/maximum output, set to 0.
 * model              - which model to use. Currently support mobilenet, fastdepth,
 *                      posenet, deeplab, and yolov5.
 * input_pipe         - which camera to use (tracking, hires, or stereo).
 * delegate           - optional hardware acceleration: gpu, cpu, or nnapi. If
 *                      the selection is invalid for the current model/hardware,
 *                      will silently fall back to base cpu delegate.
 * allow_multiple     - remove process handling and allow multiple instances
 *                      of voxl-tflite-server to run. Enables the ability
 *                      to run multiples models simultaneously.
 * output_pipe_prefix - if allow_multiple is set, create output pipes using default
 *                      names (tflite, tflite_data) with added prefix.
 *                      ONLY USED IF allow_multiple is set to true.
 */
{
    "skip_n_frames": 0,
    "model": "/usr/bin/dnn/yolov5_float16_quant.tflite",
    "input_pipe": "/run/mpa/hires_color",
    "delegate": "gpu",
    "allow_multiple": false,
    "output_pipe_prefix": "mobilenet"
}

-
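The file is a C-style comment followed by JSON, so it can be sanity-checked with a short script. A sketch, assuming that layout; the checks (valid delegate names, a .tflite model path, output_pipe_prefix being unused without allow_multiple) come from the file's own comment, not from the server's source.

```python
"""Sketch: parse voxl-tflite-server.conf and flag a few obvious problems.

Assumes the file is a /* ... */ comment block followed by plain JSON. The
checks come from the file's own comment, not from the server's source code.
"""
import json
import re
import sys

DEFAULT_PATH = "/etc/modalai/voxl-tflite-server.conf"
VALID_DELEGATES = {"gpu", "cpu", "nnapi"}


def load_conf(text):
    """Remove /* ... */ comments, then parse the remaining text as JSON."""
    return json.loads(re.sub(r"/\*.*?\*/", "", text, flags=re.DOTALL))


def check_conf(conf):
    """Return a list of notes; an empty list means nothing was flagged."""
    notes = []
    skip = conf.get("skip_n_frames")
    if not isinstance(skip, int) or isinstance(skip, bool) or skip < 0:
        notes.append(f"skip_n_frames should be a non-negative integer, got {skip!r}")
    if not str(conf.get("model", "")).endswith(".tflite"):
        notes.append(f"model does not look like a .tflite file: {conf.get('model')!r}")
    if conf.get("delegate") not in VALID_DELEGATES:
        notes.append(f"delegate {conf.get('delegate')!r} is not gpu, cpu, or nnapi")
    # Harmless, but worth knowing: the prefix is only used with allow_multiple.
    if not conf.get("allow_multiple", False) and "output_pipe_prefix" in conf:
        notes.append("output_pipe_prefix is ignored because allow_multiple is false")
    return notes


def main(path):
    with open(path) as f:
        notes = check_conf(load_conf(f.read()))
    for note in notes:
        print("note:", note)
    if not notes:
        print("no problems flagged")


if __name__ == "__main__":
    conf_path = sys.argv[1] if len(sys.argv) > 1 else DEFAULT_PATH
    try:
        main(conf_path)
    except FileNotFoundError:
        print(f"{conf_path} not found (run this on the VOXL2 or pass a path)")
```

On the config above, the only expected note is the harmless one about output_pipe_prefix.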

Thanks for the informative response. One thing that confuses me is the message

"ERROR in pipe_client_init_channel", since the pipe_client_init_channel method is deprecated. Do you mind letting me know what version of the SDK you're on? If it isn't the most recent SDK, it's probably worth upgrading to see if that fixes anything; we've put out a lot of changes in libmodal-pipe. You can read how to flash the latest SDK here.

Unfortunately, I can't pin down the issue from these debug messages alone, so I might need you to provide a model file. I understand if you don't want to share your trained model, though. In that case, you could train for just a single epoch, purely to create a model through the same process. With a model file I can do more rigorous debugging to determine the issue.

Thanks and sorry about all of this!
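If you go the single-epoch route, here is a rough sketch of producing a throwaway model through the same train-then-export path. It assumes it runs from the root of a YOLOv5 checkout; custom.yaml is a hypothetical placeholder for your dataset config, and the run name one_epoch is arbitrary. The flags used are standard YOLOv5 train.py/export.py options.

```python
"""Sketch: build (and optionally run) a 1-epoch YOLOv5 train + TFLite FP16 export.

Assumes the current directory is a YOLOv5 checkout. "custom.yaml" is a
hypothetical placeholder for your own dataset config.
"""
import subprocess
import sys


def one_epoch_commands(data_yaml, weights="yolov5s.pt", img=640,
                       project="runs/train", name="one_epoch"):
    """Return (train_cmd, export_cmd) as argument lists."""
    train = [sys.executable, "train.py", "--img", str(img), "--epochs", "1",
             "--data", data_yaml, "--weights", weights,
             "--project", project, "--name", name, "--exist-ok"]
    # With --exist-ok the run directory is reused, so best.pt has a fixed path.
    best = f"{project}/{name}/weights/best.pt"
    # Same export as in the original post: TFLite at FP16 half precision.
    export = [sys.executable, "export.py", "--weights", best,
              "--include", "tflite", "--half"]
    return train, export


if __name__ == "__main__":
    run = "--run" in sys.argv[1:]  # print only, unless --run is given
    for cmd in one_epoch_commands("custom.yaml"):
        print(" ".join(cmd))
        if run:
            subprocess.run(cmd, check=True)
```

Printing the commands by default keeps the sketch safe to try; pass --run to execute them.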

Thomas Patton

thomas.patton@modalai.com -

@Thomas-Patton

voxl2:/$ voxl-version

system-image: 1.6.2-M0054-14.1a-perf

kernel: #1 SMP PREEMPT Fri May 19 22:19:33 UTC 2023 4.19.125

hw version: M0054

voxl-suite: 1.0.0

I will update to 1.0.1.

Can I email you my TFLite and saved model for review? I'm doing a training run right now that should be complete in a couple of hours.

Sabri -

@sansoy You should upgrade to the latest SDK (1.1.2)
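To check from a script whether a VOXL2 is still below that version, here is a small sketch that parses voxl-version output. It assumes the output contains a plain-text line like "voxl-suite: 1.0.0", as in the output quoted above; 1.1.2 is the version recommended here.

```python
"""Sketch: parse `voxl-version` output and compare voxl-suite against 1.1.2.

Assumes a plain-text line like "voxl-suite: 1.0.0" appears in the output.
"""
import re
import shutil
import subprocess

RECOMMENDED = (1, 1, 2)


def suite_version(text):
    """Extract the voxl-suite version as a tuple of ints, e.g. (1, 0, 0)."""
    match = re.search(r"voxl-suite:\s*(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError("no 'voxl-suite: X.Y.Z' line found")
    return tuple(int(part) for part in match.groups())


def needs_upgrade(text, recommended=RECOMMENDED):
    """True if the reported voxl-suite version is older than `recommended`."""
    return suite_version(text) < recommended


if __name__ == "__main__":
    if shutil.which("voxl-version") is None:
        print("voxl-version not found; run this on the VOXL2")
    else:
        out = subprocess.run(["voxl-version"], capture_output=True, text=True).stdout
        print("upgrade needed:", needs_upgrade(out))
```

Comparing tuples of ints avoids the string-comparison trap where "1.10.0" would sort before "1.9.0".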

-

@tom So I downloaded the upgrade and started it, but it's been stuck for about an hour. How long does it take to flash the upgrade?

Sabri

Flashing the following System Image:

Build Name: 1.7.1-M0054-14.1a-perf-nightly-20231025

Build Date: 2023-10-25

Platform: M0054

System Image Version: 1.7.1

Installing the following version of voxl-suite:

voxl-suite Version: 1.1.2

Would you like to continue with SDK install?

- Yes

- No

#? yes

[ERROR] invalid option

#? 1

[INFO] adb installed

[INFO] fastboot installed

---- Starting System Image Flash ----

----./flash-system-image.sh ----

Detected OS: Linux

Installer Version: 0.8

Image Version: 1.7.1

Please power off your VOXL, connect via USB,

then power on VOXL. We will keep searching for

an ADB or Fastboot device over USB

[INFO] Found ADB device

[INFO] Rebooting to fastboot

.

[INFO] Found fastboot device

[WARNING] This system image flash is intended only for the following

platform: VOXL2 (m0054)

Make sure that the device that will be flashed is correct. Flashing a device with an incorrect system image will lead the device to be stuck in fastboot.

Would you like to continue with the VOXL2 (m0054) system image flash?

- Yes

- No

#? 1

-

@sansoy It should start right away. I would power cycle your VOXL2 and try again.

-

@tom I did all that and it's still stuck. Could it be what the warning says about being stuck in fastboot? It is the VOXL2, not the VOXL2 Mini.

[WARNING] This system image flash is intended only for the following

platform: VOXL2 (m0054)

Make sure that the device that will be flashed is correct. Flashing a device with an incorrect system image will lead the device to be stuck in fastboot.

-

@sansoy As long as you are using the VOXL2 SDK and are indeed flashing VOXL2 hardware, that warning can be ignored.

-

@tom Hey Tom, I'm having absolutely no luck.

I've tried 3 times and it still just hangs at

Would you like to continue with the VOXL2 (m0054) system image flash?

- Yes

- No

#? 1

I then followed the unbrick instructions and reinstalled everything per

https://docs.modalai.com/voxl2-unbricking/#ubuntu-host

I got the system back up and running and tried to install the latest SDK again, with no luck. It just hangs.

-

UPDATE: Got it working by running the install with sudo. Normally a permissions problem would show errors, but I thought that might be the issue anyway, and sure enough it was. I recommend updating your docs to say

sudo ./install.sh

-

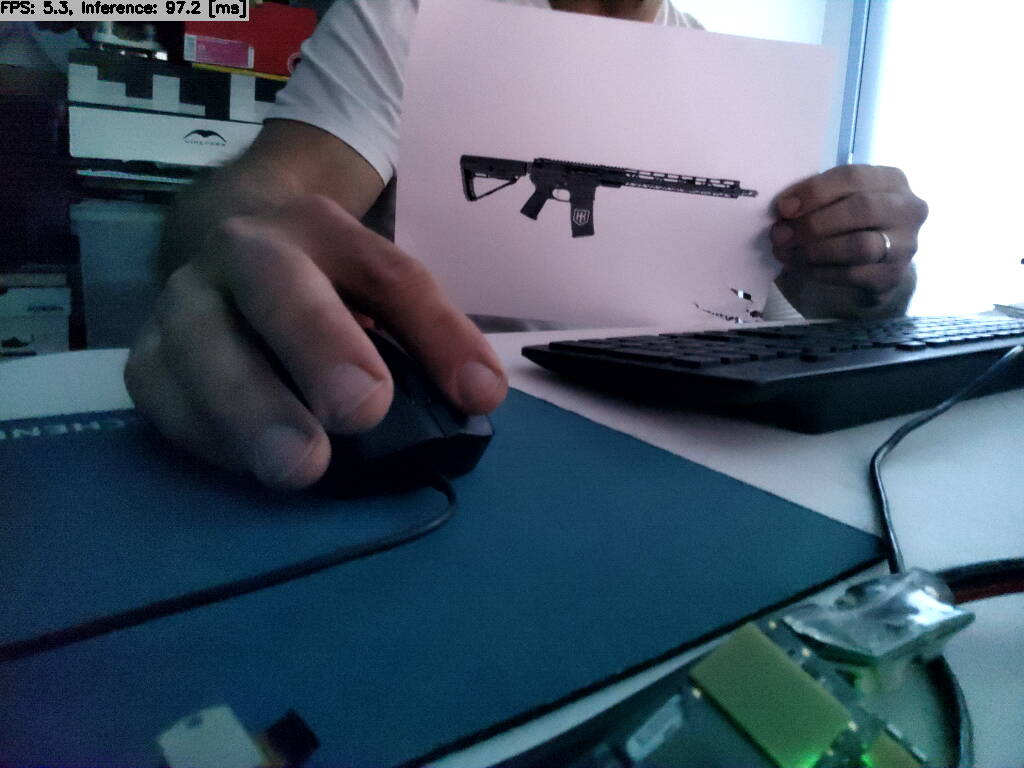

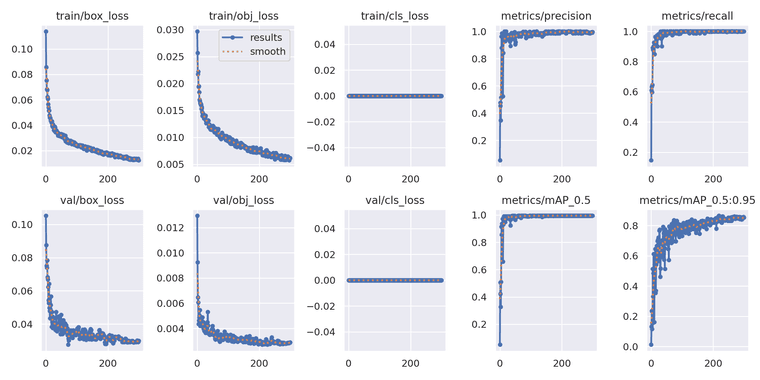

@tom So I trained on a new batch of AR15 images and got really good loss and mAP numbers. I ran both an unquantized and a quantized version in voxl-tflite-server, and again nothing is being recognized.

Here's a link to the TFLite files and saved_models, along with inference results on never-before-seen images:

https://drive.google.com/drive/folders/1N1pU0jMRTb3rODSfIuETPrBf66m4ody7?usp=drive_link

Any insight on how to make these models work in your environment would be greatly appreciated.

-

@sansoy Interesting, sudo isn't normally required. I'm curious, what Linux distro are you running?

-

@sansoy @Thomas-Patton is the ML expert here, and I'll let him comment on that front.

-

@tom Ubuntu 22.04.3 LTS

-

@sansoy Huh, okay, that's what I run as well.

What groups are your default user in? For example, here is mine:

~ groups

tom adm dialout cdrom sudo dip plugdev lpadmin lxd sambashare docker -

@tom eve@eve:~$ groups

eve adm cdrom sudo dip plugdev lpadmin lxd sambashare -

@sansoy Can you try adding your user to the dialout group and seeing if that fixes the issue?

sudo usermod -a -G dialout $USER -
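A quick way to confirm the change took effect: supplementary groups added with usermod only apply to new login sessions, so this sketch checks the groups held by the running process (via os.getgroups()) rather than /etc/group. Log out and back in before running it. It uses the Unix-only grp module, and treating dialout as the only required group is an assumption based on this thread.

```python
"""Sketch: check whether the current login session is in the dialout group.

Looks at the running process's groups, since `usermod -a -G` only takes
effect in a new login session. Unix/Linux only (uses the grp module).
"""
import grp
import os

REQUIRED = ("dialout",)  # assumption: the group suggested in this thread


def session_groups():
    """Return the names of the groups held by the current process."""
    names = set()
    for gid in set(os.getgroups()) | {os.getegid()}:
        try:
            names.add(grp.getgrgid(gid).gr_name)
        except KeyError:  # gid with no /etc/group entry
            names.add(str(gid))
    return names


def missing_groups(have, required=REQUIRED):
    """Return the required group names that are not in `have`."""
    return [g for g in required if g not in have]


if __name__ == "__main__":
    missing = missing_groups(session_groups())
    print("missing groups:", ", ".join(missing) if missing else "none")
```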

@tom Did that, and still no inference.