@modaltb Thank you for the response, despite the delay! I have one last question on this topic: is it possible to rotate the stereo ov7251 by 90 degrees, or is it currently only possible to flip them by 180 degrees?

This info would help me a lot. Thanks!

Posts made by afdrus

-

RE: Camera orientation parameter posted in Ask your questions right here!

-

RE: Bad DFS disparity posted in VOXL 2

@thomas Thanks a lot for the support! I'll dig into it and get back to you in case something is not clear!

-

RE: Bad DFS disparity posted in VOXL 2

@thomas Thank you for your response! That would be great. Could you please show me a bit more precisely where I can start editing the code to make these adjustments?

-

RE: Bad DFS disparity posted in VOXL 2

@Moderator thank you for your response!

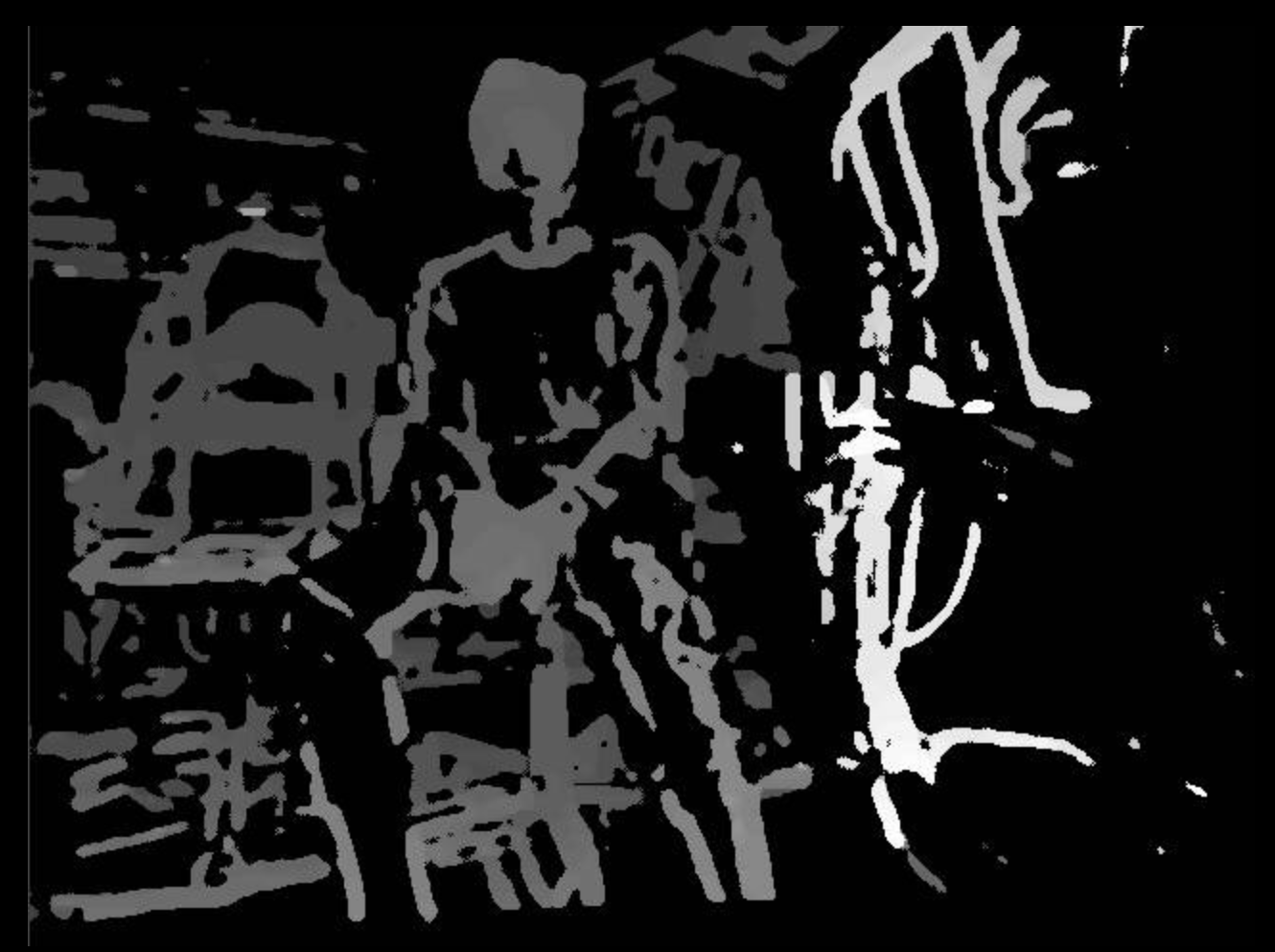

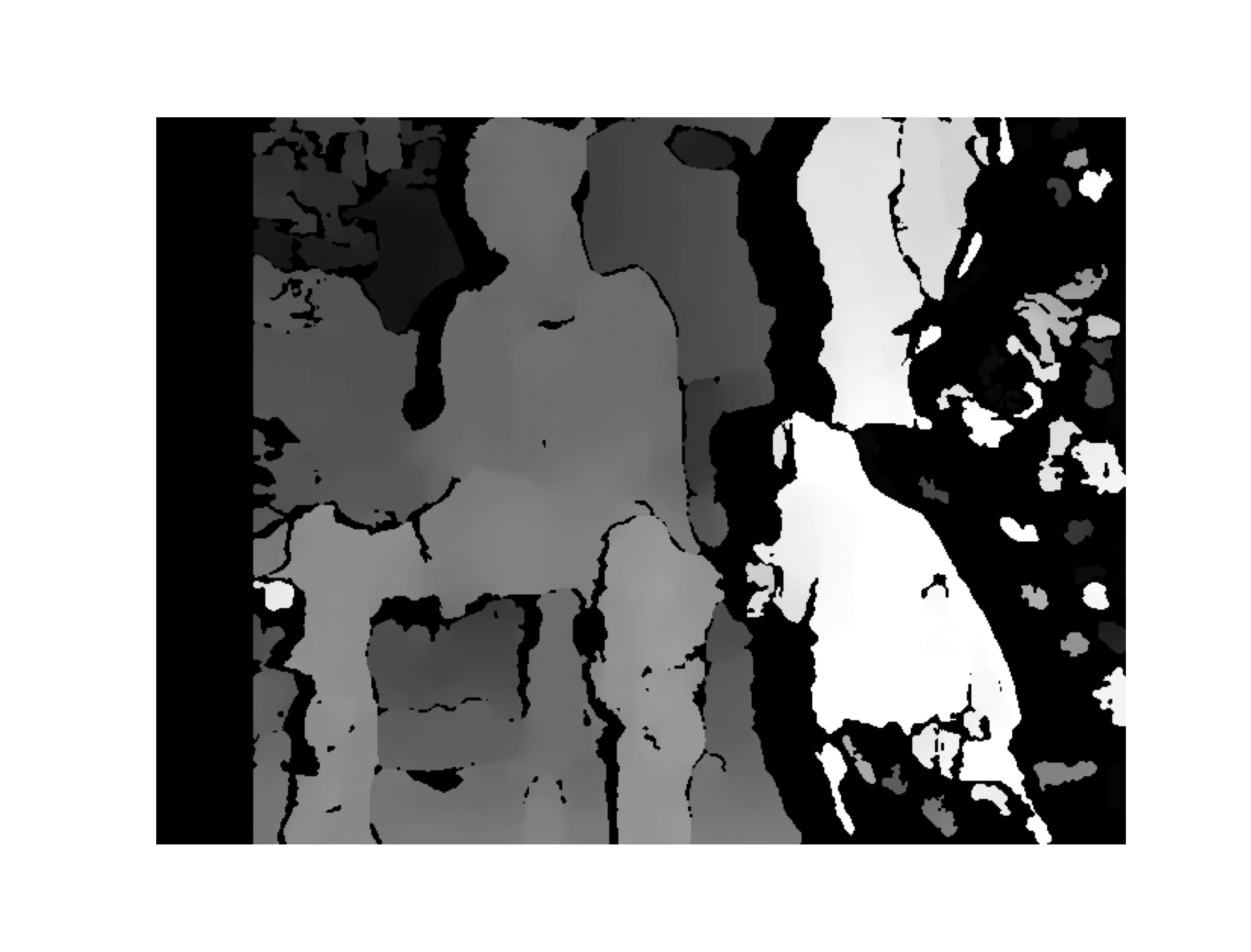

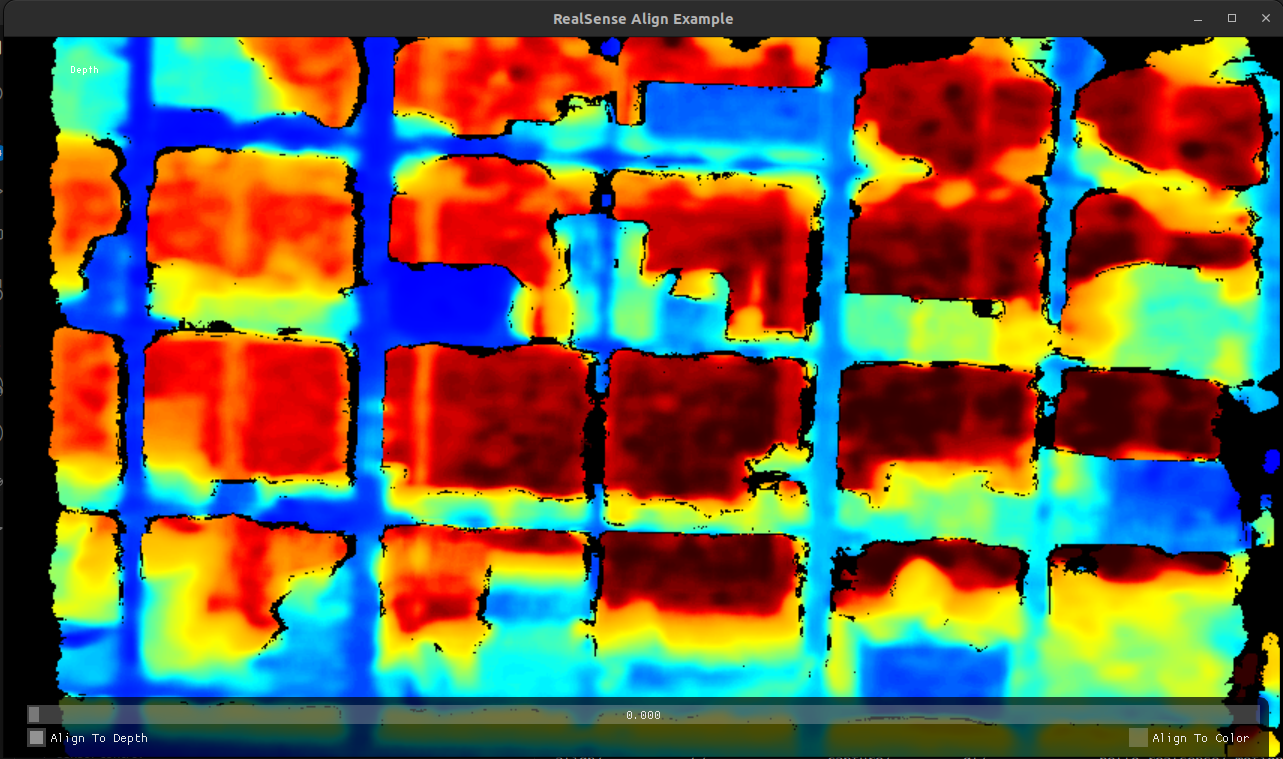

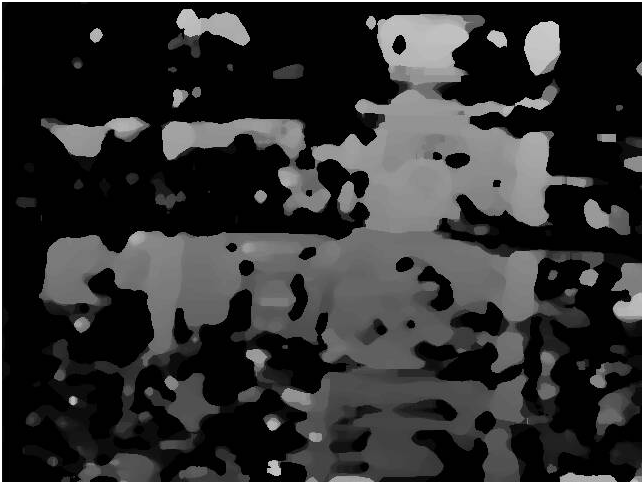

I agree with what you suggest, but we are not discussing the computation of the disparity in an edge case such as a white wall. I am just confused. Take the example below: why is the voxl-disparity (top picture) so sparse compared with the one computed via OpenCV (bottom picture)?

top-disparity

bottom-disparity

In both cases I am using the same grey images with the same extrinsic/intrinsic parameters, and yet the disparity from OpenCV is able to fill in the areas within the edges, whereas voxl-disparity is not. Are there any parameters that I can tune to make the top-disparity look more like the bottom-disparity?

-

RE: Bad DFS disparity posted in VOXL 2

@thomas Sure, the model that I am using is the D455.

I was just trying to understand whether the disparity that I am getting from voxl-dfs-server now is the best that I can get (or at least not too far from it). This is why I asked if you could post a good disparity taken with one of your stereo setups. The one on the website https://docs.modalai.com/voxl-dfs-server-0_9/ looks very similar to what I am getting at the moment: good disparity along the edges of the objects, but no information in uniform areas.

Thanks again for the reply!

-

RE: Bad DFS disparity posted in VOXL 2

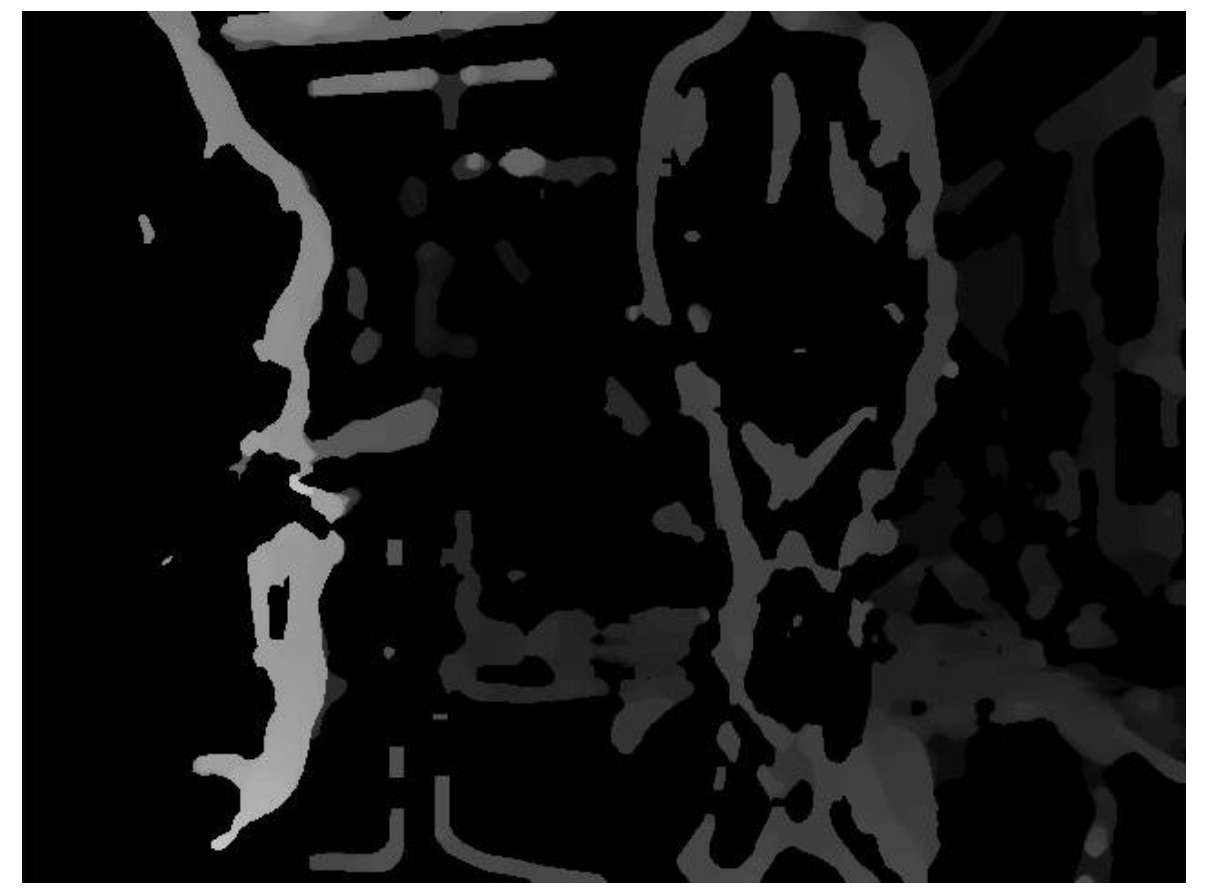

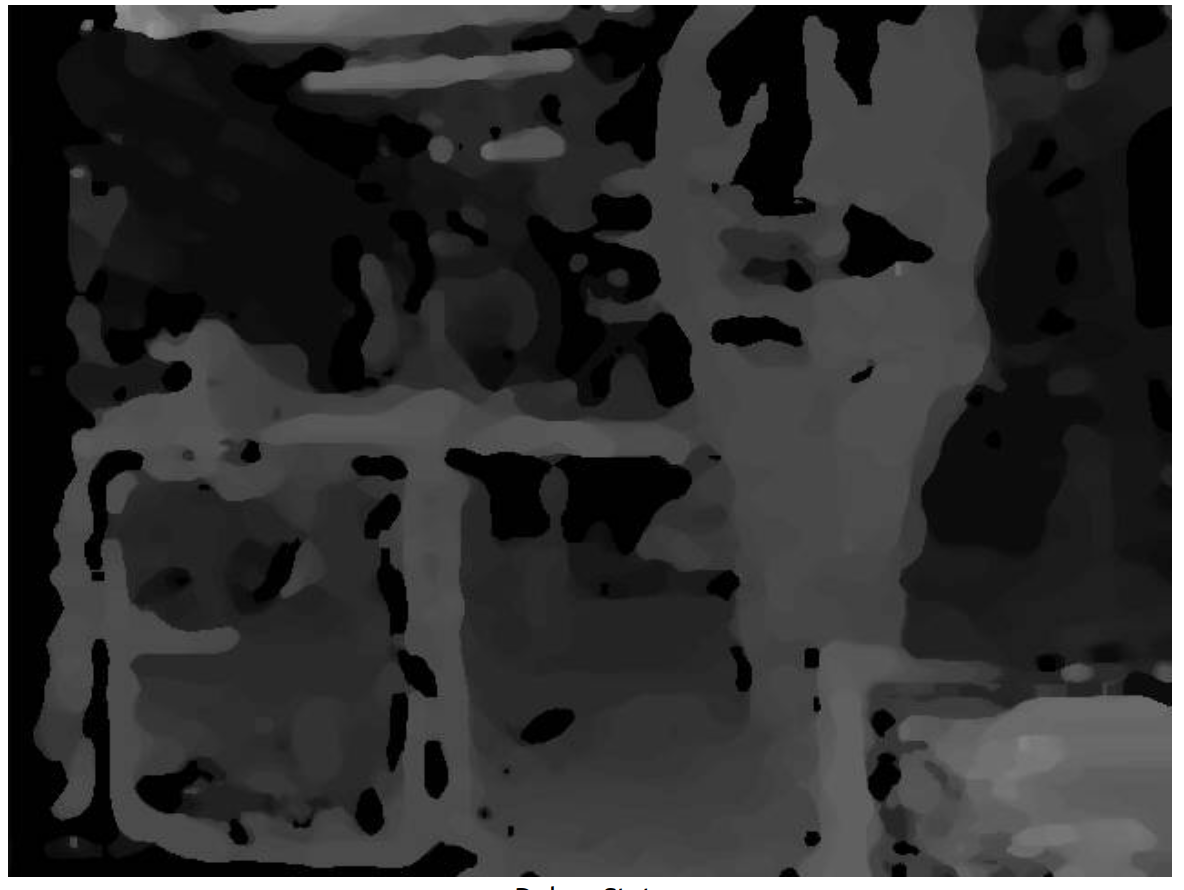

@thomas Thank you for your response. On SDK 0.9 I have the same issue. I tried to re-focus the cameras and re-calibrate them, and the DFS output has improved, though maybe only slightly:

no parameter tuning

post_median_filter=15

post_median_filter=15, blur_filter=5

post_median_filter=15, blur_filter=5, dfs_pair_0_cost_threshold=80

However, I am not able to get a uniform disparity like the one that I would get from the Intel sensor, for example; there are always a lot of holes over the objects in the scene.

Is there a way to fine-tune this? Maybe a parameter in voxl-dfs-server that I have not yet tweaked?

Could this be related to other macroscopic issues?

Thank you in advance for your response!
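To make concrete what a post-filter such as `post_median_filter` is meant to achieve, here is a minimal sketch in C of median-based hole filling on a disparity map. It is an illustration under stated assumptions, not the voxl-dfs-server implementation: the function name `fill_disparity_holes`, the 8-bit disparity format, and the convention that 0 marks an invalid pixel are all hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* qsort comparator for unsigned bytes */
static int cmp_u8(const void *a, const void *b)
{
    return (int)*(const uint8_t *)a - (int)*(const uint8_t *)b;
}

/*
 * Hypothetical helper: fill invalid (zero) disparity pixels with the median
 * of the valid pixels inside the surrounding k x k window. Valid pixels are
 * copied unchanged. Returns the number of filled pixels, or -1 on failure.
 */
int fill_disparity_holes(const uint8_t *in, uint8_t *out, int w, int h, int k)
{
    assert(k > 0 && k % 2 == 1); /* window size must be odd */

    int r = k / 2;
    int filled = 0;
    uint8_t *win = malloc((size_t)k * (size_t)k);
    if (win == NULL) return -1;

    memcpy(out, in, (size_t)w * (size_t)h);

    for (int y = 0; y < h; y++) {
        for (int x = 0; x < w; x++) {
            if (in[y * w + x] != 0) continue; /* already valid */

            /* collect the valid neighbours inside the window */
            int n = 0;
            for (int dy = -r; dy <= r; dy++) {
                for (int dx = -r; dx <= r; dx++) {
                    int yy = y + dy, xx = x + dx;
                    if (yy < 0 || yy >= h || xx < 0 || xx >= w) continue;
                    uint8_t v = in[yy * w + xx];
                    if (v != 0) win[n++] = v;
                }
            }
            if (n == 0) continue; /* nothing to borrow from, stays a hole */

            qsort(win, (size_t)n, sizeof(uint8_t), cmp_u8);
            out[y * w + x] = win[n / 2];
            filled++;
        }
    }

    free(win);
    return filled;
}
```

A larger window `k` can fill larger holes, at the cost of smearing depth edges. A hole whose whole window is invalid is left untouched, so large textureless regions stay empty with this kind of filter.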

-

Bad DFS disparity posted in VOXL 2

Hi everyone,

I am using a Sentinel (1) drone upgraded to SDK 1.1.0. I was trying to get the disparity out of the front stereo pair, but the results are quite bad. Please see below the output of the Sentinel (greyscale) vs. the output of an Intel RealSense (colored). For reference, the object in view is a library.

I tried to play around with the parameters in voxl-dfs-server, but I can't get a decent result.

- Do you have any suggestions? I have a feeling that it might be something macroscopic, like camera mounting, stereo out of focus, or bad calibration (although I repeated it many times).

- Could you please post a good disparity taken with one of your stereo setups, along with the respective greyscale stereo images? This would give me a benchmark to compare against.

Thank you in advance for your response.

-

RE: Poor VIO performance when tracking distant features posted in VOXL 2

@Alex-Kushleyev Thank you very much for the precise response! I noticed that `logDepthBootstrap` is hardcoded in `voxl-qvio-server/server/main.cpp` to be `log(1)` (i.e. 0) here: https://gitlab.com/voxl-public/voxl-sdk/services/voxl-qvio-server/-/blob/master/server/main.cpp?ref_type=heads#L360 but I will try to add it to the qvio server params so that I can tune it more easily.

Setting `useLogCameraHeight` to false should enable `logDepthBootstrap` instead of `logCameraHeightBootstrap`, right? Regarding these two "bootstraps":

- Could you please explain what the difference between the two is, and when one should be preferred over the other?

- From the comments in https://developer.qualcomm.com/sites/default/files/docs/machine-vision-sdk/api/v1.2.13/group__mvvislam.html#ga6f8af5b410006a44dbcf59f7bc6a6b38 it is not clear to me what the meaning of these two params is: are they expected to work only during the initialization phase? What influence would they have during the rest of the flight?

Thanks again in advance for your reply.

-

Poor VIO performance when tracking distant features posted in VOXL 2

Hi everyone,

I am using a Sentinel drone upgraded to SDK 1.1.0 to perform indoor autonomous missions.

- The vibrations are all green with voxl-inspect-vibration.

- The number of features is >10.

- Illumination conditions are good: the indoor environment is lit with artificial light that does not cause any flickering.

- I also tried another tracking cam pitched by 15 degrees instead of 45, but the results below were the same.

However, I experienced drifts of 3 m with respect to the ground truth over 10 m of flight. In this case the features were roughly 10-15 m away from the drone. As soon as the features were about 30 m away from the drone, it started to oscillate around the point sent by the mission with an amplitude of ~0.5 m. Consider the indoor environment as a room where most of the features are concentrated on only one of the walls.

1) Is this behavior expected with the current VIO? If not, what solutions do you propose?

2) What drift should I expect over 10 m in good environmental conditions?

3) Slightly unrelated question: is it possible to use the tracking cam and the stereo_rear cam at the same time as input to the qvio-server?

Thank you in advance for your response.

-

RE: Add mask file to QVIO algorithm posted in Feature Requests

@Alex-Kushleyev Thank you for the help! Can I place the pgm file anywhere I want on the VOXL 2 and specify its absolute path in `staticMaskFileName`, or do I have to put the file in a specific path in order for the mvSLAMinitialize function to find it?

Moreover, are there any parameters that can be tweaked to force the qvio to maximize the number of features across the whole image? When yawing, I noticed that the algorithm tends to stick to the features detected in the previous frames rather than picking new ones. As a result, at the end of the yaw the drone has about 20 features on one side of the image and none on the other side (despite the same lighting conditions), and the quality of the VIO can drop below 10% quite easily. Basically, I would like the algorithm to switch to new feature candidates earlier rather than keeping old ones in the buffer.
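For anyone who needs to produce such a mask, here is a minimal sketch in C that writes a binary (P5) 8-bit PGM file with one rectangular region set to a different value. Only the PGM layout itself is standard. The function name `write_pgm_mask`, the image size, the output path, and which pixel value marks the region to be ignored are assumptions that must be checked against the mvVISLAM documentation.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>

/*
 * Hypothetical helper: write a w x h binary PGM (P5, maxval 255).
 * Pixels inside [x0, x1) x [y0, y1) get `inside`, all others get `outside`.
 * Returns 0 on success, -1 on failure.
 */
int write_pgm_mask(const char *path, int w, int h,
                   int x0, int y0, int x1, int y1,
                   uint8_t inside, uint8_t outside)
{
    assert(w > 0 && h > 0);

    uint8_t *row = malloc((size_t)w);
    if (row == NULL) return -1;

    FILE *f = fopen(path, "wb");
    if (f == NULL) {
        free(row);
        return -1;
    }

    /* PGM header: magic number, width and height, maximum grey value */
    int ok = (fprintf(f, "P5\n%d %d\n255\n", w, h) > 0);

    /* raw pixel data, one byte per pixel, written row by row */
    for (int y = 0; y < h && ok; y++) {
        for (int x = 0; x < w; x++) {
            int in_rect = (x >= x0 && x < x1 && y >= y0 && y < y1);
            row[x] = in_rect ? inside : outside;
        }
        ok = (fwrite(row, 1, (size_t)w, f) == (size_t)w);
    }

    free(row);
    if (fclose(f) != 0) ok = 0;
    return ok ? 0 : -1;
}
```

For example, `write_pgm_mask("mask.pgm", 640, 480, 0, 400, 640, 480, 255, 0)` would mark the bottom 80 rows of a 640x480 image with 255 and everything else with 0. Both the resolution and the polarity in that call are placeholders.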

-

RE: Add mask file to QVIO algorithm posted in Feature Requests

@david-moro I am trying to do the same thing. Where did you place the .pgm file in the voxl-qvio-server package? In the GitHub example there is no pgm file.

Thank you in advance for your response.

-

RE: Stereo tag detection: WARNING, apriltag roll/pitch out of bounds posted in Ask your questions right here!

I did a very quick and dirty workaround to fix the problem. Most of the changes are in `geometry.c`, in the following snippet of code:

    int geometry_calc_R_T_tag_in_local_frame(int64_t frame_timestamp_ns,
            rc_matrix_t R_tag_to_cam, rc_vector_t T_tag_wrt_cam,
            rc_matrix_t *R_tag_to_local, rc_vector_t *T_tag_wrt_local,
            char* input_pipe)
    {
        // these "correct" values are from the past aligning to the timestamp
        static rc_matrix_t correct_R_imu_to_vio = RC_MATRIX_INITIALIZER;
        static rc_vector_t correct_T_imu_wrt_vio = RC_VECTOR_INITIALIZER;

        int ret = rc_tf_ringbuf_get_tf_at_time(&vio_ringbuf, frame_timestamp_ns,
                &correct_R_imu_to_vio, &correct_T_imu_wrt_vio);

        // fail silently, on catastrophic failure the previous function would have
        // printed a message. If ret==-2 that's a soft failure meaning we just don't
        // have enough vio data yet, so also return silently.
        if (ret < 0) return -1;

        pthread_mutex_lock(&tf_mutex);

        // Read extrinsics parameters
        int n;
        vcc_extrinsic_t t[VCC_MAX_EXTRINSICS_IN_CONFIG];
        vcc_extrinsic_t tmp;

        // now load in extrinsics
        if (vcc_read_extrinsic_conf_file(VCC_EXTRINSICS_PATH, t, &n, VCC_MAX_EXTRINSICS_IN_CONFIG)) {
            // release the lock before bailing out, otherwise the next call deadlocks
            pthread_mutex_unlock(&tf_mutex);
            return -1;
        }

        // Initialize camera matrices
        rc_matrix_t R_cam_to_imu_tmp = RC_MATRIX_INITIALIZER;
        rc_matrix_t R_cam_to_body = RC_MATRIX_INITIALIZER;
        rc_vector_t T_cam_wrt_imu_tmp = RC_VECTOR_INITIALIZER;
        rc_vector_t T_cam_wrt_body = RC_VECTOR_INITIALIZER;

        rc_vector_zeros(&T_cam_wrt_body, 3);
        rc_vector_zeros(&T_cam_wrt_imu_tmp, 3);
        rc_matrix_identity(&R_cam_to_imu_tmp, 3);
        rc_matrix_identity(&R_cam_to_body, 3);

        printf("input_pipe: %s \n", input_pipe);

        if (strcmp(input_pipe, "stereo_front") == 0) {
            // Pick out IMU to Body. config.c already set this up for imu1 so just leave it as is
            if (vcc_find_extrinsic_in_array("body", "stereo_front_l", t, n, &tmp)) {
                fprintf(stderr, "ERROR: %s missing body to stereo_front_l, sticking with identity for now\n", VCC_EXTRINSICS_PATH);
                pthread_mutex_unlock(&tf_mutex); // release the lock before bailing out
                return -1;
            }

            rc_rotation_matrix_from_tait_bryan(tmp.RPY_parent_to_child[0] * DEG_TO_RAD,
                    tmp.RPY_parent_to_child[1] * DEG_TO_RAD,
                    tmp.RPY_parent_to_child[2] * DEG_TO_RAD,
                    &R_cam_to_body);
            rc_vector_from_array(&T_cam_wrt_body, tmp.T_child_wrt_parent, 3);

            T_cam_wrt_imu_tmp.d[0] = T_cam_wrt_body.d[0] - T_imu_wrt_body.d[0];
            T_cam_wrt_imu_tmp.d[1] = T_cam_wrt_body.d[1] - T_imu_wrt_body.d[1];
            T_cam_wrt_imu_tmp.d[2] = T_cam_wrt_body.d[2] - T_imu_wrt_body.d[2];
            rc_matrix_multiply(R_body_to_imu, R_cam_to_body, &R_cam_to_imu_tmp);

            printf("RPY: \n%5.2f %5.2f %5.2f\n\n", tmp.RPY_parent_to_child[0], tmp.RPY_parent_to_child[1], tmp.RPY_parent_to_child[2]);
            printf("R_cam_to_imu_tmp: \n%5.2f %5.2f %5.2f\n %5.2f %5.2f %5.2f\n %5.2f %5.2f %5.2f\n\n",
                    R_cam_to_imu_tmp.d[0][0], R_cam_to_imu_tmp.d[0][1], R_cam_to_imu_tmp.d[0][2],
                    R_cam_to_imu_tmp.d[1][0], R_cam_to_imu_tmp.d[1][1], R_cam_to_imu_tmp.d[1][2],
                    R_cam_to_imu_tmp.d[2][0], R_cam_to_imu_tmp.d[2][1], R_cam_to_imu_tmp.d[2][2]);

            // calculate position of tag wrt local
            rc_matrix_times_col_vec(R_cam_to_imu_tmp, T_tag_wrt_cam, T_tag_wrt_local);
            rc_vector_sum_inplace(T_tag_wrt_local, T_cam_wrt_imu_tmp);
            rc_matrix_times_col_vec_inplace(correct_R_imu_to_vio, T_tag_wrt_local);
            rc_vector_sum_inplace(T_tag_wrt_local, correct_T_imu_wrt_vio);
            rc_matrix_times_col_vec_inplace(R_vio_to_local, T_tag_wrt_local);
            rc_vector_sum_inplace(T_tag_wrt_local, T_vio_ga_wrt_local);

            // calculate rotation tag to local
            rc_matrix_multiply(R_cam_to_imu_tmp, R_tag_to_cam, R_tag_to_local);
            rc_matrix_left_multiply_inplace(correct_R_imu_to_vio, R_tag_to_local);
            rc_matrix_left_multiply_inplace(R_vio_to_local, R_tag_to_local);
        }
        else if (strcmp(input_pipe, "stereo_rear") == 0) {
            // Pick out IMU to Body. config.c already set this up for imu1 so just leave it as is
            if (vcc_find_extrinsic_in_array("body", "stereo_rear_l", t, n, &tmp)) {
                fprintf(stderr, "ERROR: %s missing body to stereo_rear_l, sticking with identity for now\n", VCC_EXTRINSICS_PATH);
                pthread_mutex_unlock(&tf_mutex); // release the lock before bailing out
                return -1;
            }

            rc_rotation_matrix_from_tait_bryan(tmp.RPY_parent_to_child[0] * DEG_TO_RAD,
                    tmp.RPY_parent_to_child[1] * DEG_TO_RAD,
                    tmp.RPY_parent_to_child[2] * DEG_TO_RAD,
                    &R_cam_to_body);
            rc_vector_from_array(&T_cam_wrt_body, tmp.T_child_wrt_parent, 3);

            T_cam_wrt_imu_tmp.d[0] = T_cam_wrt_body.d[0] - T_imu_wrt_body.d[0];
            T_cam_wrt_imu_tmp.d[1] = T_cam_wrt_body.d[1] - T_imu_wrt_body.d[1];
            T_cam_wrt_imu_tmp.d[2] = T_cam_wrt_body.d[2] - T_imu_wrt_body.d[2];
            rc_matrix_multiply(R_body_to_imu, R_cam_to_body, &R_cam_to_imu_tmp);

            printf("R_cam_to_imu_tmp: \n%5.2f %5.2f %5.2f\n %5.2f %5.2f %5.2f\n %5.2f %5.2f %5.2f\n\n",
                    R_cam_to_imu_tmp.d[0][0], R_cam_to_imu_tmp.d[0][1], R_cam_to_imu_tmp.d[0][2],
                    R_cam_to_imu_tmp.d[1][0], R_cam_to_imu_tmp.d[1][1], R_cam_to_imu_tmp.d[1][2],
                    R_cam_to_imu_tmp.d[2][0], R_cam_to_imu_tmp.d[2][1], R_cam_to_imu_tmp.d[2][2]);

            // calculate position of tag wrt local
            rc_matrix_times_col_vec(R_cam_to_imu_tmp, T_tag_wrt_cam, T_tag_wrt_local);
            rc_vector_sum_inplace(T_tag_wrt_local, T_cam_wrt_imu_tmp);
            rc_matrix_times_col_vec_inplace(correct_R_imu_to_vio, T_tag_wrt_local);
            rc_vector_sum_inplace(T_tag_wrt_local, correct_T_imu_wrt_vio);
            rc_matrix_times_col_vec_inplace(R_vio_to_local, T_tag_wrt_local);
            rc_vector_sum_inplace(T_tag_wrt_local, T_vio_ga_wrt_local);

            // calculate rotation tag to local
            rc_matrix_multiply(R_cam_to_imu_tmp, R_tag_to_cam, R_tag_to_local);
            rc_matrix_left_multiply_inplace(correct_R_imu_to_vio, R_tag_to_local);
            rc_matrix_left_multiply_inplace(R_vio_to_local, R_tag_to_local);
        }
        else if (strcmp(input_pipe, "tracking") == 0) {
            printf("R_cam_to_imu: \n%5.2f %5.2f %5.2f\n %5.2f %5.2f %5.2f\n %5.2f %5.2f %5.2f\n\n",
                    R_cam_to_imu.d[0][0], R_cam_to_imu.d[0][1], R_cam_to_imu.d[0][2],
                    R_cam_to_imu.d[1][0], R_cam_to_imu.d[1][1], R_cam_to_imu.d[1][2],
                    R_cam_to_imu.d[2][0], R_cam_to_imu.d[2][1], R_cam_to_imu.d[2][2]);

            // calculate position of tag wrt local
            rc_matrix_times_col_vec(R_cam_to_imu, T_tag_wrt_cam, T_tag_wrt_local);
            rc_vector_sum_inplace(T_tag_wrt_local, T_cam_wrt_imu);
            rc_matrix_times_col_vec_inplace(correct_R_imu_to_vio, T_tag_wrt_local);
            rc_vector_sum_inplace(T_tag_wrt_local, correct_T_imu_wrt_vio);
            rc_matrix_times_col_vec_inplace(R_vio_to_local, T_tag_wrt_local);
            rc_vector_sum_inplace(T_tag_wrt_local, T_vio_ga_wrt_local);

            // calculate rotation tag to local
            rc_matrix_multiply(R_cam_to_imu, R_tag_to_cam, R_tag_to_local);
            rc_matrix_left_multiply_inplace(correct_R_imu_to_vio, R_tag_to_local);
            rc_matrix_left_multiply_inplace(R_vio_to_local, R_tag_to_local);
        }

        printf("\n__________________________________________________________________________\n");

        pthread_mutex_unlock(&tf_mutex);
        return 0;
    }

-

RE: Stereo tag detection: WARNING, apriltag roll/pitch out of bounds posted in Ask your questions right here!

@Moderator Thanks to some prints, I noticed that the issue comes from `R_cam_to_imu`, which always (and only) picks up the parameters of the tracking camera from extrinsics.conf, regardless of the pipe specified in voxl-tag-detector.conf. I hope this insight helps you solve the issue in future releases.

-

RE: Stereo tag detection: WARNING, apriltag roll/pitch out of bounds posted in Ask your questions right here!

@Moderator Thank you for your response!

I thought that, as the name of the transformation suggests, R_tag_to_fixed did not depend on the orientation of the camera but rather on the orientation between the tag and the fixed frame. I would expect to set the camera orientation in the extrinsics.conf file. However, if I run `voxl-vision-hub --debug_tag_local` I get the following:

    ID 0 T_tag_wrt_local -4.74 2.63 0.89 Roll 1.45 Pitch 0.05 Yaw -2.14
    WARNING, apriltag roll/pitch out of bounds
    ID 0 T_tag_wrt_local -4.74 2.63 0.89 Roll 1.39 Pitch 0.04 Yaw -2.16
    WARNING, apriltag roll/pitch out of bounds
    ID 0 T_tag_wrt_local -4.73 2.63 0.89 Roll 1.32 Pitch 0.06 Yaw -2.08
    ID 0 T_tag_wrt_local -4.74 2.63 0.89 Roll 1.40 Pitch 0.05 Yaw -2.13
    ID 0 T_tag_wrt_local -4.74 2.64 0.89 Roll 1.40 Pitch 0.04 Yaw -2.14

No matter what values I put in extrinsics.conf or in R_tag_to_fixed, the roll stays around 1.4 radians.
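To put the logged angles in more familiar units, here is a minimal sketch in C that converts radians to degrees and applies a generic roll/pitch bounds check. The helper names and the `limit_rad` parameter are hypothetical. The actual threshold used by voxl-vision-hub is not stated in this thread and is not assumed here.

```c
#include <assert.h>

#define RAD_TO_DEG_FACTOR (180.0 / 3.14159265358979323846)

/* Convert an angle from radians to degrees. */
double rad_to_deg(double rad)
{
    return rad * RAD_TO_DEG_FACTOR;
}

/*
 * Hypothetical check: returns 1 when the magnitude of roll or pitch
 * exceeds limit_rad, 0 otherwise. limit_rad is a placeholder parameter.
 */
int roll_pitch_out_of_bounds(double roll, double pitch, double limit_rad)
{
    assert(limit_rad >= 0.0);
    double r = roll < 0.0 ? -roll : roll;
    double p = pitch < 0.0 ? -pitch : pitch;
    return (r > limit_rad || p > limit_rad) ? 1 : 0;
}
```

A roll of 1.4 rad is about 80 degrees, so the logged tag orientation is close to a quarter turn away from level while the pitch stays within a few degrees.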

-

RE: Stereo tag detection: WARNING, apriltag roll/pitch out of bounds posted in Ask your questions right here!

Nothing changed on v1.1.0

-

RE: VOA with front and rear stereo pairs posted in VOXL 2

@Jetson-Nano You should also change some parameters on the autopilot's side, did you do that? Which autopilot are you using?

-

RE: VOXL2 dfs server crashing after update (stack smashing) posted in GPS-denied Navigation (VIO)

@Thomas-Patton Are there any updates? I am facing the exact same issue

-

RE: QVIO hard reset causing error with PX4 altitude for VIO posted in Ask your questions right here!

@Aaron-Porter I am facing the same issue, is it solved?