Using a custom tflite model in VOXL 2

-

Hello team,

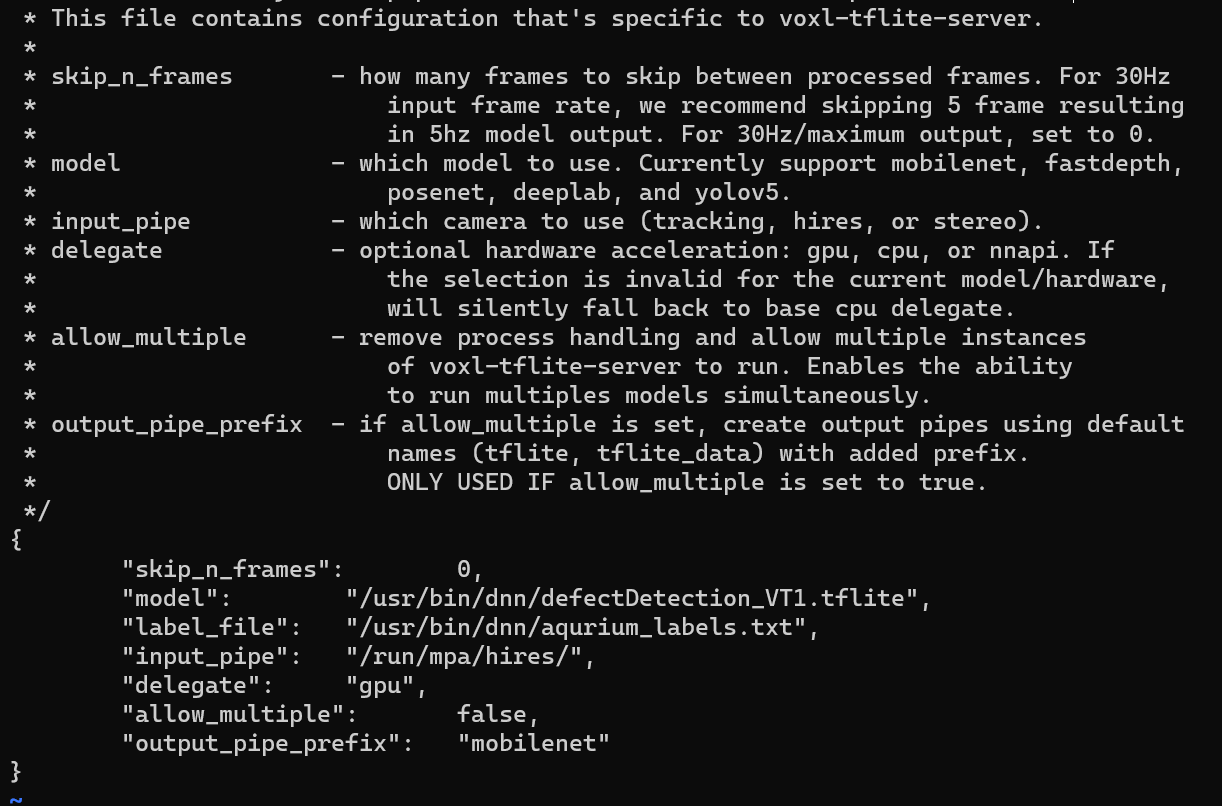

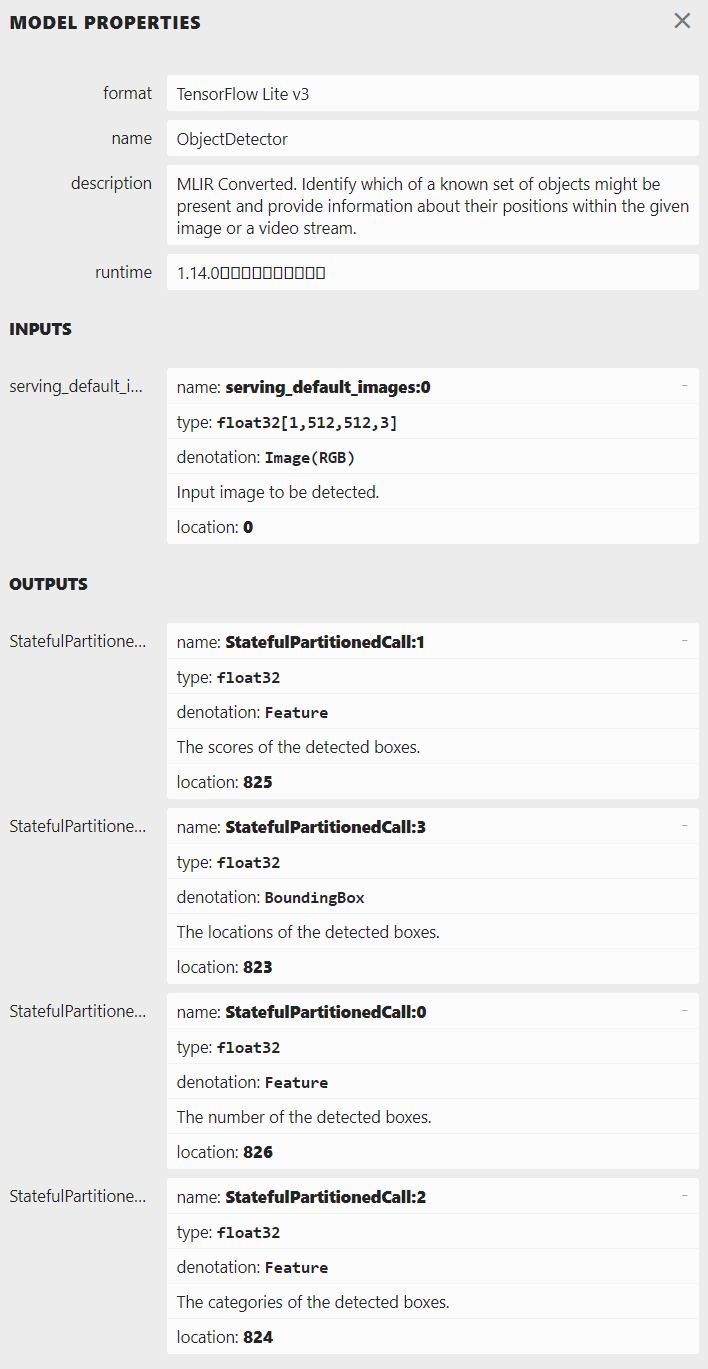

I would like to use a custom TFLite model on VOXL 2. The model architecture is EfficientDet-Lite3. I can't see any detection boxes in voxl-portal: the output looks almost the same as the hires stream, just with a slight delay. What I've done so far is push the custom .tflite model file and the label file to the VOXL 2 and set their locations in voxl-tflite-server.conf.

Could you let me know how to deal with this? The screenshots above show my voxl-tflite-server.conf configuration and the input and output tensors of the custom model.

Many thanks!

-

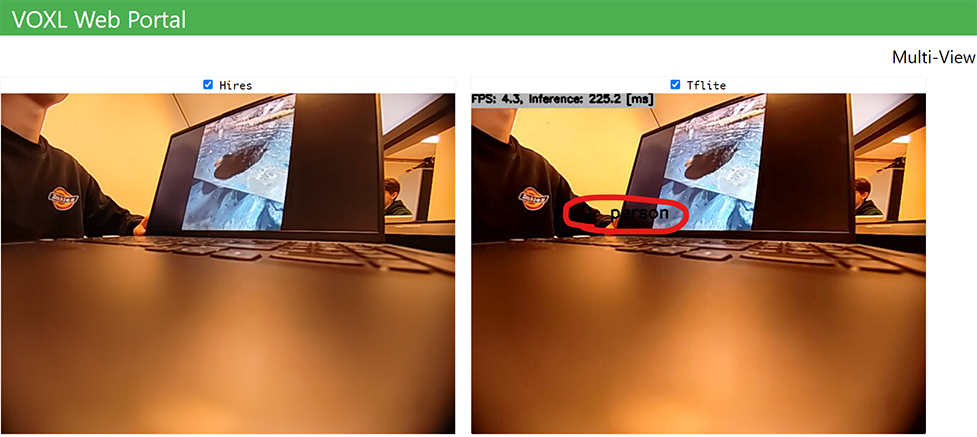

I should add that sometimes a label called "0 person" appears.

However, the label file I specified has no class called "0 person", which is very strange. It seems the server is still using coco_labels.txt as the label file.

-

You will need to modify the code to make use of your new model: the tflite-server has to both load your model and then post-process its results according to your model's inputs and outputs.

https://gitlab.com/voxl-public/voxl-sdk/services/voxl-tflite-server/-/blob/master/src/main.cpp#L196
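
As a rough illustration of what post-processing "based on the inputs and outputs of your model" involves for a YOLOv5-style detector (the architecture the later posts switch to), here is a minimal C++ sketch. It assumes the layout of a standard YOLOv5 TFLite export: one float output tensor of shape [1, num_rows, 5 + num_classes], where each row is cx, cy, w, h, objectness, then one score per class, all already passed through a sigmoid. That layout, the `Detection` struct and the `decode_yolov5` function are assumptions for illustration, not the actual voxl-tflite-server code, so check your own model's output shape first.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// One decoded detection; coordinates are in the same units as the model
// output (normalized to the model input in a standard YOLOv5 export).
struct Detection {
    float x1, y1, x2, y2;  // box corners
    float score;           // objectness * best class score
    int class_id;          // index into the label file
};

// Hypothetical helper: decodes num_rows rows of row_len floats laid out as
// cx, cy, w, h, objectness, class_0 ... class_{n-1} (row_len = 5 + n).
// Assumes all scores are already sigmoid-activated, i.e. in [0, 1].
std::vector<Detection> decode_yolov5(const float* data, std::size_t num_rows,
                                     std::size_t row_len, float conf_thresh) {
    assert(data != nullptr || num_rows == 0);
    if (row_len < 6) {
        throw std::invalid_argument("row_len must be 5 + num_classes, num_classes >= 1");
    }
    const std::size_t num_classes = row_len - 5;  // 1 for a single-class model
    std::vector<Detection> out;
    for (std::size_t i = 0; i < num_rows; ++i) {
        const float* row = data + i * row_len;
        // Class scores are <= 1, so a row whose objectness is already below
        // the threshold cannot pass; skip it cheaply.
        if (row[4] < conf_thresh) continue;
        std::size_t best = 0;
        for (std::size_t c = 1; c < num_classes; ++c) {
            if (row[5 + c] > row[5 + best]) best = c;
        }
        const float score = row[4] * row[5 + best];
        if (score < conf_thresh) continue;
        const float cx = row[0], cy = row[1], w = row[2], h = row[3];
        out.push_back({cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2, score,
                       static_cast<int>(best)});
    }
    return out;
}
```

For example, a single-class model exported at 640x640 would produce 25200 rows and be decoded with `decode_yolov5(output, 25200, 6, 0.25f)` (0.25 being YOLOv5's usual default confidence threshold), while an 80-class COCO model uses a row length of 85. The resulting boxes are still relative to the model input, so they need scaling back to the camera frame, and overlapping candidates still need non-maximum suppression.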

-

@Chad-Sweet Thank you for your reply! I trained a YOLOv5 model instead, so that I wouldn't need to change too much in main.cpp. So far I have only added my label file path and model path to main.cpp.

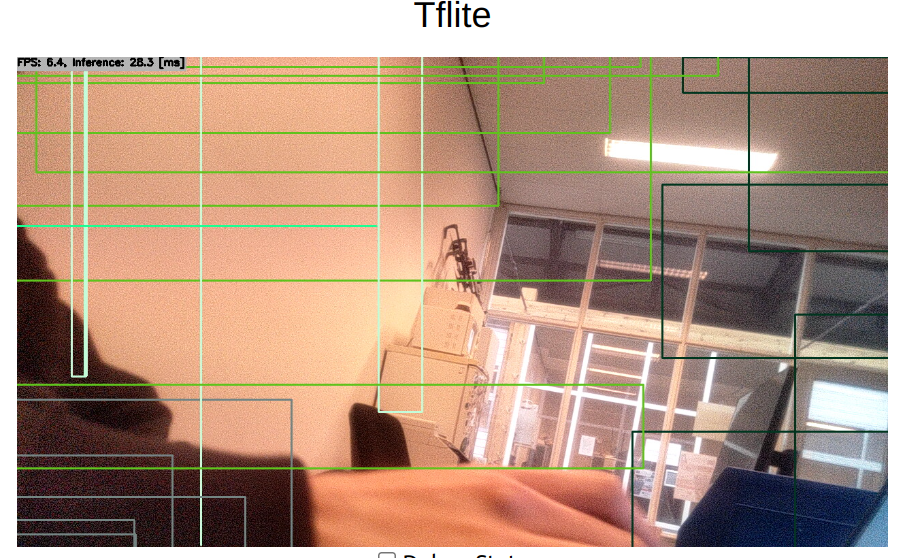

When I deploy voxl-tflite-server to the VOXL 2, the output looks wrong and sometimes freezes.

The difference between the prebuilt YOLOv5 model and my custom model is that mine has only one class. Could you please tell me what I am doing wrong?

Thank you very much for your attention to this matter!

-

Sorry, we can't help with YOLOv5 specifically. It might be easier to debug it with TensorFlow Lite on your PC.
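
One thing worth checking when comparing PC and VOXL 2 behavior is whether the on-device post-processing assumes COCO's 80 classes (85 floats per output row) while a single-class model produces only 6 floats per row. If it does, rows are read at the wrong offsets and reads can run past the end of the output buffer; that would fit garbled boxes or freezes, but it is a guess, not a confirmed diagnosis. The hypothetical helpers below (`infer_num_classes`, `read_labels`, `check_labels`) derive the class count from the output shape and fail loudly if the label file doesn't match, assuming the same [1, num_rows, 5 + num_classes] YOLOv5 layout.

```cpp
#include <cassert>
#include <cstddef>
#include <istream>
#include <sstream>  // std::istringstream, handy for testing read_labels
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical helper: derives the class count from a YOLOv5-style output
// shape {1, num_rows, 5 + num_classes} instead of hardcoding COCO's 80.
int infer_num_classes(const std::vector<int>& dims) {
    if (dims.size() != 3 || dims[2] < 6) {
        throw std::runtime_error("unexpected output shape for a YOLOv5-style model");
    }
    return dims[2] - 5;
}

// Hypothetical helper: reads one label per line, ignoring blank lines and a
// trailing '\r' from files saved with Windows line endings.
std::vector<std::string> read_labels(std::istream& in) {
    std::vector<std::string> labels;
    std::string line;
    while (std::getline(in, line)) {
        if (!line.empty() && line.back() == '\r') line.pop_back();
        if (!line.empty()) labels.push_back(line);
    }
    return labels;
}

// Hypothetical helper: fails loudly when the label file and the model
// disagree, instead of silently showing wrong names or indexing past the
// end of the label list.
void check_labels(int num_classes, const std::vector<std::string>& labels) {
    assert(num_classes > 0);
    if (labels.size() != static_cast<std::size_t>(num_classes)) {
        throw std::runtime_error("label file has " + std::to_string(labels.size()) +
                                 " entries, model has " + std::to_string(num_classes) +
                                 " classes");
    }
}
```

On the device, `dims` would be filled from the output tensor's dimensions (in the TFLite C++ API, the `dims` field of `TfLiteTensor`), and `read_labels` would be given an `std::ifstream` opened on your label file.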

-

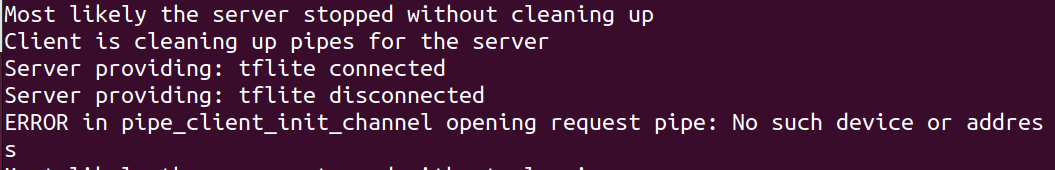

@Chad-Sweet Okay. Could you tell me which version of YOLOv5 you used for the prebuilt model? I'm not sure whether the problem is in my model, because it works fine on my computer. I'd like to train a model with exactly the same YOLOv5 version for testing, because when I post-process my model's output, most of the time voxl-portal shows the error above.

-

@Chad-Sweet said in Using a custom tflite model in VOXL 2:

> You will need to modify the code to make use of your new model. You will need to code the tflite-server to both read the model, and then post_process the model based on the inputs and outputs of your model
>
> https://gitlab.com/voxl-public/voxl-sdk/services/voxl-tflite-server/-/blob/master/src/main.cpp#L196

Problem solved: much more code needed to be changed than I expected. Thanks for your help!
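
The thread doesn't say which changes were needed. As a hedged illustration, one step a YOLOv5 post-processing path usually needs beyond decoding the output rows is non-maximum suppression (NMS), because the raw output contains many overlapping candidates for the same object. The sketch below is a plain greedy, per-class NMS; `Box`, `iou` and `nms` are hypothetical names, and the 0.45 IoU threshold mentioned after it is YOLOv5's usual default, not a value taken from voxl-tflite-server.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical candidate box: corners with x1 <= x2 and y1 <= y2.
struct Box {
    float x1, y1, x2, y2;
    float score;
    int class_id;
};

// Intersection-over-union of two axis-aligned boxes (0 if they don't overlap).
float iou(const Box& a, const Box& b) {
    const float iw = std::max(0.0f, std::min(a.x2, b.x2) - std::max(a.x1, b.x1));
    const float ih = std::max(0.0f, std::min(a.y2, b.y2) - std::max(a.y1, b.y1));
    const float inter = iw * ih;
    const float uni = (a.x2 - a.x1) * (a.y2 - a.y1) + (b.x2 - b.x1) * (b.y2 - b.y1) - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}

// Greedy per-class NMS: repeatedly keep the highest-scoring remaining box and
// discard same-class boxes that overlap it by more than iou_thresh.
std::vector<Box> nms(std::vector<Box> boxes, float iou_thresh) {
    assert(iou_thresh >= 0.0f && iou_thresh <= 1.0f);
    std::sort(boxes.begin(), boxes.end(),
              [](const Box& a, const Box& b) { return a.score > b.score; });
    std::vector<Box> kept;
    std::vector<bool> suppressed(boxes.size(), false);
    for (std::size_t i = 0; i < boxes.size(); ++i) {
        if (suppressed[i]) continue;
        kept.push_back(boxes[i]);
        for (std::size_t j = i + 1; j < boxes.size(); ++j) {
            if (!suppressed[j] && boxes[j].class_id == boxes[i].class_id &&
                iou(boxes[i], boxes[j]) > iou_thresh) {
                suppressed[j] = true;
            }
        }
    }
    return kept;
}
```

`nms(candidates, 0.45f)` returns the surviving boxes in descending score order. The nested loop is O(n²), which is acceptable once confidence thresholding has removed most of the raw output rows.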

-

Hello,

I am trying to get essentially the same thing working on the VOXL 2 with a single-class YOLOv5 model, and I see similar output when I deploy it to the drone. @Siyu-Chen, what steps did you take to get this working on your device? The model runs inference fine on my computer, so I suspect the pre-/post-processing. Thanks in advance for your help.
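
Since pre-processing is a suspect too, here is a minimal sketch of the input preparation YOLOv5's own inference scripts perform for a float TFLite model: letterbox the frame to the model's input size (keep the aspect ratio, pad with gray value 114), keep RGB channel order, lay the data out as NHWC, and scale pixel values to [0, 1]. It assumes the camera frame has already been converted to packed RGB888 and that the model has a float32 input; an int8-quantized export would instead need the input tensor's scale and zero point. `Letterbox` and `letterbox_rgb` are hypothetical names, and nearest-neighbour sampling is used only to keep the example short.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical result type: the model input plus what's needed to map boxes back.
struct Letterbox {
    std::vector<float> input;  // dst_h * dst_w * 3 floats, NHWC, RGB, in [0, 1]
    float scale;               // source pixels -> model pixels
    int pad_x, pad_y;          // left / top padding in model pixels
};

// Hypothetical helper: letterboxes a packed RGB888 image (src_w x src_h) into
// a dst_w x dst_h float buffer, padding with gray 114 like YOLOv5 does.
// Nearest-neighbour sampling keeps the sketch short; a real pipeline would
// typically use bilinear resizing.
Letterbox letterbox_rgb(const std::uint8_t* src, int src_w, int src_h, int dst_w, int dst_h) {
    assert(src != nullptr && src_w > 0 && src_h > 0 && dst_w > 0 && dst_h > 0);
    Letterbox lb;
    lb.scale = std::min(static_cast<float>(dst_w) / src_w, static_cast<float>(dst_h) / src_h);
    const int new_w = static_cast<int>(src_w * lb.scale + 0.5f);
    const int new_h = static_cast<int>(src_h * lb.scale + 0.5f);
    lb.pad_x = (dst_w - new_w) / 2;
    lb.pad_y = (dst_h - new_h) / 2;
    // Fill everything with the padding color first, then paste the resized image.
    lb.input.assign(static_cast<std::size_t>(dst_w) * dst_h * 3, 114.0f / 255.0f);
    for (int y = 0; y < new_h; ++y) {
        const int sy = std::min(src_h - 1, static_cast<int>(y / lb.scale));
        for (int x = 0; x < new_w; ++x) {
            const int sx = std::min(src_w - 1, static_cast<int>(x / lb.scale));
            const std::uint8_t* p = src + (static_cast<std::size_t>(sy) * src_w + sx) * 3;
            float* q = &lb.input[(static_cast<std::size_t>(y + lb.pad_y) * dst_w + (x + lb.pad_x)) * 3];
            for (int c = 0; c < 3; ++c) q[c] = p[c] / 255.0f;  // scale 0..255 to 0..1
        }
    }
    return lb;
}
```

A box predicted in model pixels maps back to the source frame with `x_src = (x_model - pad_x) / scale`, and likewise for y with `pad_y`. If the on-device code instead stretches the frame to the input size, or feeds BGR or 0-255 values, a model that works on the PC can give poor or nonsensical detections on the drone.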