VOXL2 tflite custom models

-

Hello!

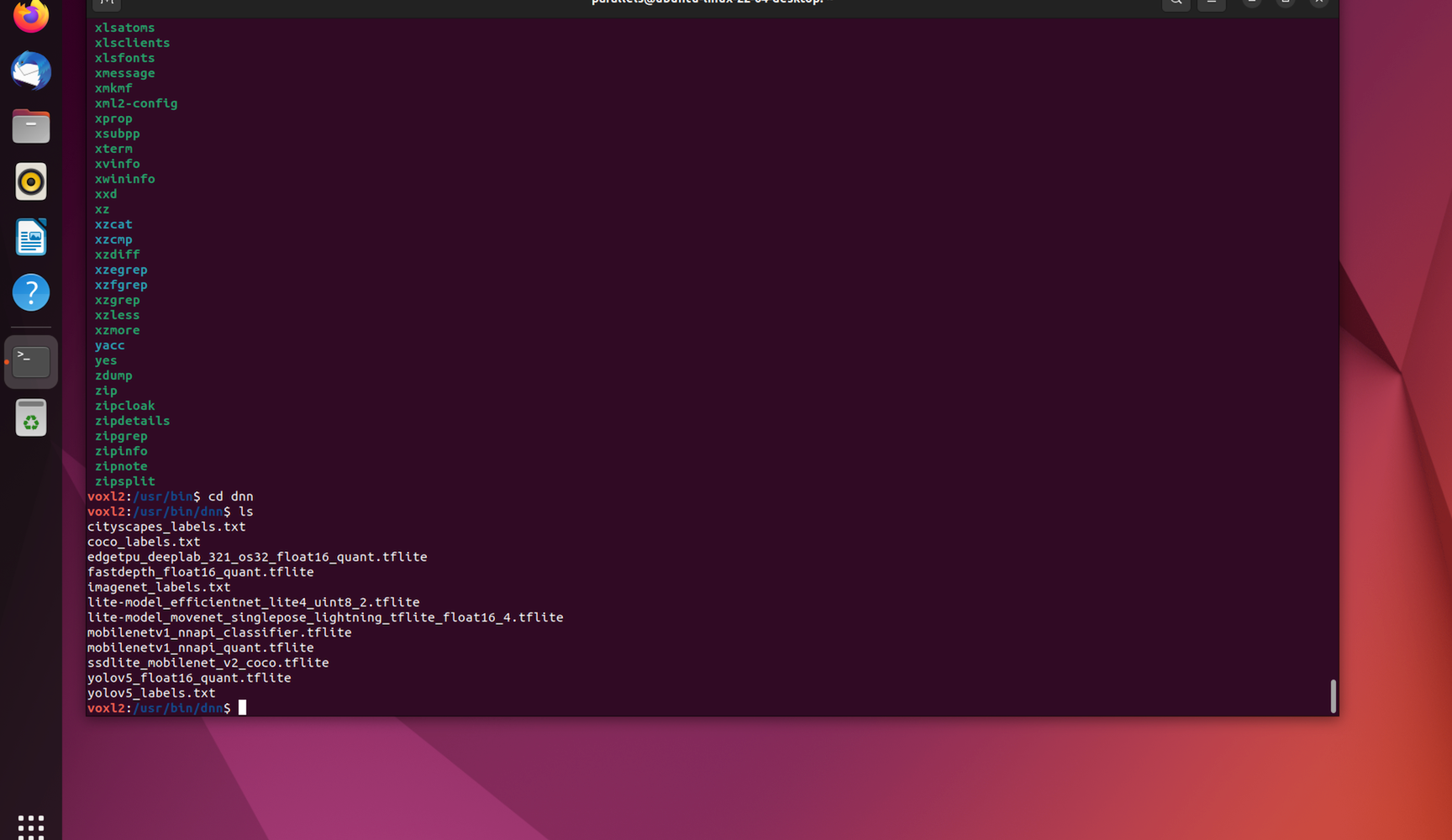

TL;DR: Does the VOXL2 include EdgeTPU hardware, or is "edgetpu" just part of the name of the DeepLab v3 model below? Also, how can I access the models on the VOXL2 in order to fine-tune or optimize them for my specific needs?

edgetpu_deeplab_321_os32_float16_quant.tflite

I am posting to ask whether anyone has done this before and can share any resources. I have seen forum posts where this was done successfully on VOXL, but none for the VOXL2 (QRB5165).

I found this article, which lists the performance of the models and links to the segmentation model on GitHub. That GitHub repository, PINTO_Model_Zoo, also links to the EdgeTPU-DeepLab models trained on the Cityscapes dataset.

My understanding is that I can use a DeepLab v3 segmentation model that is compatible with TensorFlow Lite, and that it then needs to be quantized for best performance and for compatibility with the NNAPI used by VOXL2. I am unsure what role EdgeTPU plays in this process. I hope the documentation on this is made public soon, since it would help with getting started.
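For reference, the float16 post-training quantization suggested by the model's filename can be sketched with the standard TensorFlow Lite converter. This is only a minimal sketch, not the procedure used to produce the model shipped on VOXL2: the function name and both paths are hypothetical, and it assumes a DeepLab v3 SavedModel has already been exported on the desktop.

```python
def convert_saved_model_to_float16(saved_model_dir, output_path):
    """Convert a TensorFlow SavedModel to a float16-quantized .tflite file.

    Both arguments are hypothetical paths chosen by the caller.
    Returns the size of the written model in bytes.
    """
    # Imported lazily so this file can be loaded without TensorFlow installed.
    import tensorflow as tf

    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    # Enable post-training quantization.
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    # Ask the converter to store weights as float16.
    converter.target_spec.supported_types = [tf.float16]
    tflite_model = converter.convert()  # serialized model as bytes

    with open(output_path, "wb") as f:
        f.write(tflite_model)
    return len(tflite_model)


# Example call (hypothetical paths, not from this thread):
# convert_saved_model_to_float16("deeplab_saved_model", "deeplab_float16.tflite")
```

Whether a float16 model is the best fit for a given delegate on the QRB5165 is not settled here, so it is worth comparing against the stock model's latency after conversion.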

Thank you as always!

-

@Jgaucin voxl-tflite-server is just a wrapper for standard TensorFlow Lite that has been compiled with the proper configurations to take advantage of QRB5165.

voxl-tflite-server code

So, if you can achieve what you are trying to do using TensorFlow Lite on the desktop, then you should be able to bring it over to VOXL 2 in a straightforward manner.
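A desktop check along these lines could look like the sketch below, which uses the standard `tf.lite.Interpreter` API. It is an illustration only: the helper names are hypothetical, the caller is assumed to supply an input batch already resized and normalized the way the model expects, and the output is assumed to be either per-class scores of shape `[1, H, W, classes]` or a label map of shape `[1, H, W]`.

```python
import numpy as np


def to_label_map(output):
    """Turn a segmentation output tensor into an [H, W] array of class ids."""
    out = np.asarray(output)
    if out.ndim == 4:
        # [1, H, W, num_classes] scores: pick the best class per pixel.
        return np.argmax(out[0], axis=-1)
    if out.ndim == 3:
        # [1, H, W]: the model already emits class ids.
        return out[0].astype(np.int64)
    raise ValueError(f"unexpected output shape {out.shape}")


def run_segmentation(model_path, input_batch):
    """Run one inference on the desktop and return the label map.

    input_batch must already match the model's input shape; preprocessing
    is model-specific and is left to the caller.
    """
    # Imported lazily so to_label_map can be used without TensorFlow.
    import tensorflow as tf

    interpreter = tf.lite.Interpreter(model_path=model_path)
    interpreter.allocate_tensors()
    inp = interpreter.get_input_details()[0]
    out = interpreter.get_output_details()[0]

    if tuple(input_batch.shape) != tuple(inp["shape"]):
        raise ValueError(f"expected input shape {tuple(inp['shape'])}")

    interpreter.set_tensor(inp["index"], input_batch.astype(inp["dtype"]))
    interpreter.invoke()
    return to_label_map(interpreter.get_tensor(out["index"]))
```

If the label map looks right on the desktop, the same `.tflite` file is the one to try on VOXL 2.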

The inference_worker function here is where the models are processed.