@Moderator said in Hires_snapshot snapshot-no-save is saving ?:

voxl-camera-server

What is the easiest way to update it? It looks like apt update is not doing the job right.

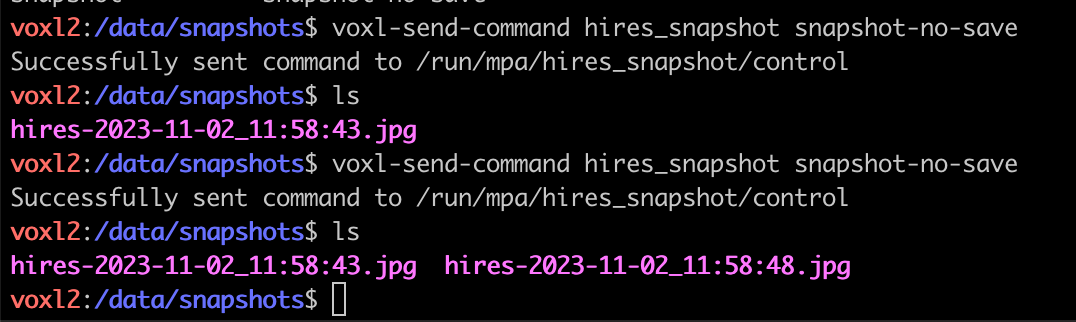

Okay, I found the problem: the command is snapshot_no_save (with underscores), not snapshot-no-save. I believe the website docs and the built-in terminal help need to be updated. Thanks. I'm leaving the topic up for people who might have a similar issue.

I'm using a Starling drone, and I recently updated it.

I believe there is a bug with the command voxl-send-command hires_snapshot snapshot-no-save. When I use it, it still saves to the folder /data/snapshots. Is this the expected behaviour?

In my current project I need a very accurate position estimate. I wonder whether the voxl-mapper service provides a more accurate position estimate than the VIO, since it probably runs SLAM on top of the VIO. If so, how can I acquire this data? Thank you.

@Darshit-Desai Then I believe you cannot do anything. The TOF is limited to certain surfaces and textures.

@Darshit-Desai If you have the 3D point cloud and you need to project it to a camera, you need the intrinsics of the camera and the extrinsics. That's where I used the intrinsics of the RGB camera. On the image I sent you, each point is an XYZ point projected to the RGB image, so with that you have the location in 3D space of the objects the camera is seeing.

@Caio-Licínio-Vasconcelos-Pantarotto Does the point cloud have lower resolution than the depth view given by the TOF? I haven't really explored it.

What do you mean by "running an object detector on a different resolution image and matching it with a sparse point cloud for getting 3d coordinates is inaccurate"? You want to run object detection on the RGB image, right? Why do you say that using the sparse point cloud is inaccurate? Sorry, I don't know much about this.

@Caio-Licínio-Vasconcelos-Pantarotto You also need to permute the coordinates (axis order 1,0,2) as they come from the sensor, so that they are laid out the way OpenCV expects.

There is one thing about the VIO reference system that confused me. We have T_imu_wrt_vio, which is "Translation of the IMU with respect to VIO frame in meters", and we have T_cam_wrt_imu, which is "Location of the optical center of the camera with respect to the IMU." I just want to clarify.

I would think that T_cam_wrt_imu (and the corresponding rotation) is a fixed quantity, since the vector between the camera and the IMU should be constant in the body frame of the drone. When I measured it on the Starling it was indeed something like (0.026 0.007 0.024) most of the time, but the T and the R (0 45 90) change slightly during movement. Is this because the IMU itself moves internally a bit?

Is T_imu_wrt_vio the global XYZ position of the IMU as given by the VIO algorithm? If so, it should be interpreted as the position of the drone in space. Sorry, the phrase "with respect to VIO frame" confused me a bit.
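To make the question concrete, here is a minimal sketch of how I would expect the two translations to combine, assuming the usual rigid-body convention (camera position in the VIO frame = IMU position + IMU-to-VIO rotation applied to the camera offset). The rotation argument R_imu_to_vio and the example numbers are my assumptions for illustration, not confirmed behaviour of the VIO service.

```python
def mat_vec(R, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(R[i][j] * v[j] for j in range(3)) for i in range(3)]


def camera_position_in_vio(T_imu_wrt_vio, R_imu_to_vio, T_cam_wrt_imu):
    """Camera position in the VIO frame under the assumed convention:
    p_cam_vio = T_imu_wrt_vio + R_imu_to_vio * T_cam_wrt_imu
    """
    offset = mat_vec(R_imu_to_vio, T_cam_wrt_imu)
    return [T_imu_wrt_vio[i] + offset[i] for i in range(3)]


if __name__ == "__main__":
    # Hypothetical values: IMU at (1, 2, -0.5) m, no rotation yet.
    identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    print(camera_position_in_vio([1.0, 2.0, -0.5], identity,
                                 [0.026, 0.007, 0.024]))
```

If this convention is right, T_cam_wrt_imu stays constant while T_imu_wrt_vio changes as the drone moves.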

@Darshit-Desai I'm using the point cloud data of the TOF and projecting it to the RGB frame. I calculated the RGB intrinsics using OpenCV. You need to use the rotation matrix diag[-1,1,1], and you have to adjust the translation manually because the TOF sensor on the Starling is mounted at an XYZ offset with respect to the RGB camera. It's working more or less OK. I believe this way is better because with the 3D point cloud you don't have to deal with the intrinsics of two cameras.
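Roughly, the projection looks like the sketch below. It assumes a plain pinhole model with no lens distortion. The function name, the intrinsics (fx, fy, cx, cy) and the zero translation are placeholders for illustration, not calibrated Starling values; only the diag[-1,1,1] rotation comes from my setup.

```python
def project_points(points_xyz, K, R, t):
    """Project 3D points into pixel coordinates with a pinhole model.

    points_xyz: iterable of (x, y, z) in the TOF frame, in meters
    K: (fx, fy, cx, cy) intrinsics of the RGB camera, in pixels
    R: 3x3 rotation (list of rows) from the TOF frame to the RGB frame
    t: 3-vector translation from the TOF frame to the RGB frame, in meters
    Returns a list of (u, v, depth) for points in front of the camera.
    """
    fx, fy, cx, cy = K
    pixels = []
    for p in points_xyz:
        # Move the point into the RGB camera frame: p_cam = R * p + t
        x, y, z = (sum(R[i][j] * p[j] for j in range(3)) + t[i]
                   for i in range(3))
        if z <= 0:
            continue  # behind the camera, cannot be projected
        pixels.append((fx * x / z + cx, fy * y / z + cy, z))
    return pixels


if __name__ == "__main__":
    R = [[-1, 0, 0], [0, 1, 0], [0, 0, 1]]  # diag[-1,1,1]
    t = [0.0, 0.0, 0.0]                     # placeholder, tune by hand
    K = (500.0, 500.0, 320.0, 240.0)        # placeholder intrinsics
    cloud = [(0.0, 0.0, 2.0), (1.0, 0.0, 2.0), (0.0, 0.0, -1.0)]
    print(project_points(cloud, K, R, t))
```

Each returned depth can then be drawn at its (u, v) pixel on the RGB image, after discarding pixels that fall outside the image bounds.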

@Moderator On the Starling, is the TOF point cloud expressed relative to the high-res camera or to the center of the body? I believe that to project from the point cloud to the high-res frame I would need only the intrinsics of the high-res camera and its position relative to where the (0,0,0) of the point cloud really is, no?

@Moderator I'm using a straight-out-of-the-box Starling with updated software.

On the Portal we can see the images Tof_LR, TOF_Depth and TOF_conf. TOF_LR looks like it is projecting the TOF point cloud into the camera. Is that what is happening? I would like to know how I can do that. I believe I would need the precise extrinsics of the TOF and the high-res camera. Is there any source code that shows how this is done? I need to superimpose the TOF depth data onto the camera frame.

@Caio-Licínio-Vasconcelos-Pantarotto

Unrelated to this, I also noticed a dark ring border effect at the bottom of the image. Is this a common effect for this camera?

I believe the high resolution snapshot is delivering a corrupted image...

I run voxl-send-command hires_snapshot snapshot and then pull the snapshot with adb pull. My macOS native photo viewer can open the picture with no problem, but when I open it in VS Code I can see two lines of black and white pixels at the end of the picture. When I tried to open it with Python libraries, PIL could not open it and Matplotlib could not open it. Only OpenCV could, but it printed the message "Premature end of JPEG file". Is this a known problem with the snapshot service, or is this corruption happening during adb pull?
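One quick check I can do on my side is whether the pulled file is truncated. A complete JPEG starts with the SOI marker (FF D8) and normally ends with the EOI marker (FF D9), so a missing EOI would match the "Premature end of JPEG file" message. This is only a heuristic sketch: the function names and the file path are placeholders, and some writers append extra bytes after EOI, which this check would flag as incomplete.

```python
def jpeg_looks_complete(data: bytes) -> bool:
    """Return True if the bytes start with SOI (FF D8) and end with EOI (FF D9)."""
    return (len(data) >= 4
            and data.startswith(b"\xff\xd8")
            and data.endswith(b"\xff\xd9"))


def check_file(path: str) -> bool:
    """Read a file from disk and run the marker check on its contents."""
    with open(path, "rb") as f:
        return jpeg_looks_complete(f.read())


if __name__ == "__main__":
    import sys
    # Usage: python check_jpeg.py <path to a pulled snapshot>
    for path in sys.argv[1:]:
        print(path, "complete" if check_file(path) else "truncated or not a JPEG")
```

Comparing the file size on the drone with the size of the pulled copy would also help tell whether the truncation happens in the snapshot service or during adb pull.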

Are the intrinsic matrices of the TOF and high-resolution cameras available anywhere?