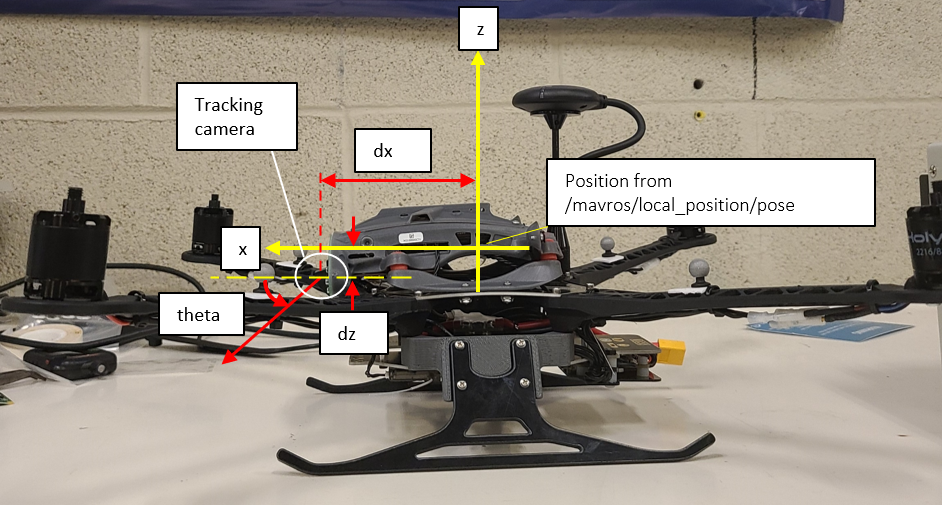

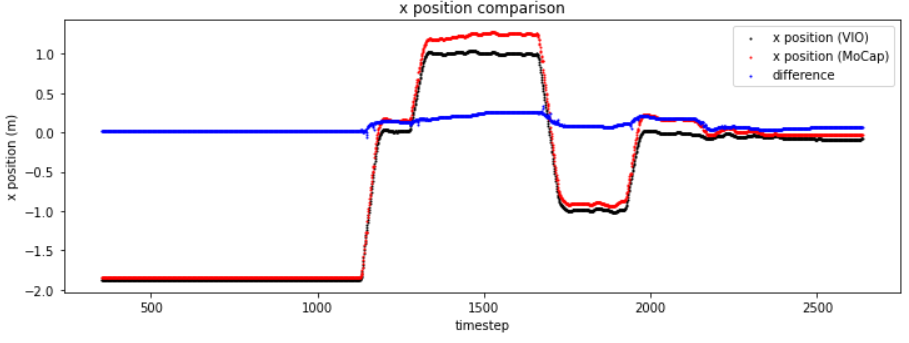

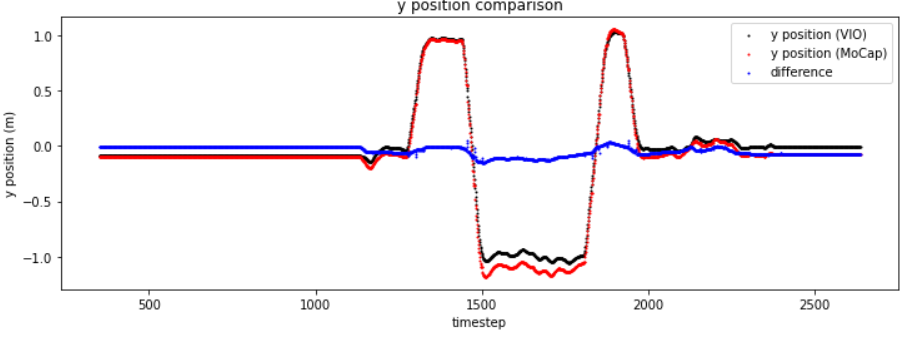

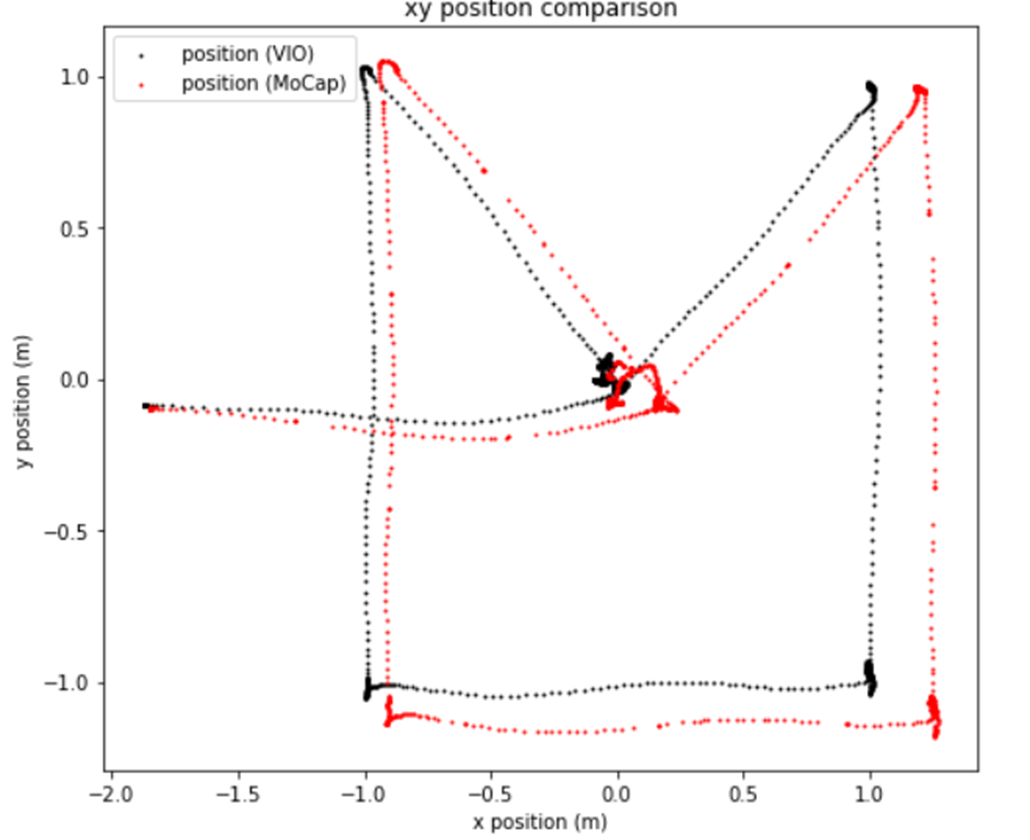

I've been working with the RB5 drone for autonomous flight and computer vision applications, and I recently ran a basic test to quantify the accuracy of its VIO. Starting from its initial location, the drone flew to the positions (0,0,0), (1,1,1), (1,-1,1), (-1,-1,1) and (-1,1,1), then returned to (0,0,0). These positions are given in the global frame established by a motion capture system.

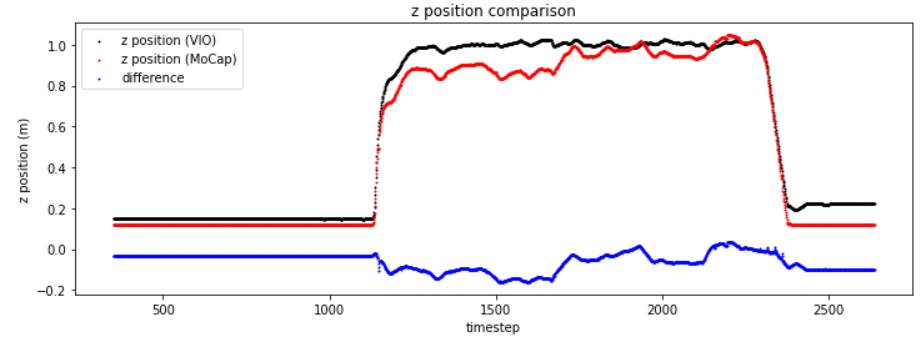

At power-up, I computed a homogeneous transformation from the initial motion capture pose estimate and applied it to the drone's VIO pose estimate. The drone then compared this transformed VIO pose against my set point inputs for flight, while motion capture provided the ground-truth position. I plotted the resulting trajectories: in each plot, black is the VIO pose estimate, red is the motion capture pose estimate, and blue is the difference between them.
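
The transformation step can be sketched as follows. This is a minimal illustration in plain Python, not the code used in the test. It assumes the VIO frame's origin coincides with the drone's pose at power-up, and that the initial motion capture pose is available as a unit quaternion in (w, x, y, z) order plus a translation (note that ROS `geometry_msgs/Quaternion` stores the fields as x, y, z, w). The helper names `make_transform`, `apply_transform` and `position_error` are hypothetical.

```python
import math

def make_transform(q, t):
    """Build a 4x4 homogeneous transform from a unit quaternion q = (w, x, y, z)
    and a translation t = (tx, ty, tz). Hypothetical helper for illustration."""
    w, x, y, z = q
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w),     2 * (x * z + y * w),     t[0]],
        [2 * (x * y + z * w),     1 - 2 * (x * x + z * z), 2 * (y * z - x * w),     t[1]],
        [2 * (x * z - y * w),     2 * (y * z + x * w),     1 - 2 * (x * x + y * y), t[2]],
        [0.0, 0.0, 0.0, 1.0],
    ]

def apply_transform(T, p):
    """Map a VIO-frame position p into the mocap (world) frame using homogeneous coordinates."""
    ph = (p[0], p[1], p[2], 1.0)
    return tuple(sum(T[i][j] * ph[j] for j in range(4)) for i in range(3))

def position_error(p_vio_world, p_mocap):
    """Per-axis difference (VIO minus mocap, i.e. the 'blue' curve) and its Euclidean norm."""
    d = tuple(a - b for a, b in zip(p_vio_world, p_mocap))
    return d, math.sqrt(sum(v * v for v in d))

# Hypothetical example: drone starts at (1, 0, 0) in the mocap frame, yawed 90 degrees.
q0 = (math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4))
T = make_transform(q0, (1.0, 0.0, 0.0))
print(apply_transform(T, (1.0, 0.0, 0.0)))  # approximately (1.0, 1.0, 0.0)
```

Only the position is transformed here; the orientation part of the VIO pose would be composed with the same rotation in a full implementation.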

Overall, the VIO is clearly working, and I don't expect it to be perfect, but I'd like to explore ways to improve its accuracy. I am also confident this is not a PID control error: the estimated (VIO) pose converges onto the set points I provide, so the drone clearly thinks it is in the right position when, according to the motion capture estimate, it is not.
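
The argument that this is an estimation problem rather than a control problem can be made quantitative by splitting the error into two parts. A minimal sketch, assuming time-aligned samples of the set point, the transformed VIO position and the mocap position are available; the function names and the sample numbers are hypothetical, not data from the test.

```python
import math

def dist(a, b):
    """Euclidean distance between two 3D points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def split_error(setpoint, vio, mocap):
    """Return (tracking, estimation, total) error for one time-aligned sample.

    tracking:   set point vs VIO estimate (what the controller drives to zero)
    estimation: VIO estimate vs mocap ground truth (VIO drift)
    total:      set point vs mocap (what an outside observer measures)
    """
    return dist(setpoint, vio), dist(vio, mocap), dist(setpoint, mocap)

def rmse(values):
    """Root-mean-square of a list of scalar errors."""
    return math.sqrt(sum(v * v for v in values) / len(values))

# Hypothetical sample: the controller has converged on the VIO estimate,
# but ground truth is offset, so all of the total error comes from estimation.
tracking, estimation, total = split_error((1.0, 1.0, 1.0), (1.0, 1.0, 1.0), (1.2, 0.9, 1.0))
print(tracking, round(estimation, 3), round(total, 3))
```

Because total ≤ tracking + estimation (triangle inequality), a small tracking error together with a large total error points at the state estimate rather than the PID gains. Applying `rmse` to each term over all samples gives one number per flight, which makes it easier to compare runs before and after a change.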

Some options I'm considering:

- changing PX4 parameters associated with VIO

- building one or more ROS nodes to improve the pose estimate. Currently I only use the ROS topic /mavros/local_position/pose; I could also use other information, such as /mavros/local_position/velocity_body, and fuse the two, for example.
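
The fusion idea in the second option can be illustrated with a small constant-velocity Kalman filter along one axis that combines position and velocity measurements. This is a hedged sketch in plain Python, not a drop-in ROS node. The class name and noise values are hypothetical placeholders to be tuned, and it assumes the body-frame velocity from /mavros/local_position/velocity_body has already been rotated into the same frame as the pose. It also assumes the two measurements carry at least partly independent information; if both topics are in fact outputs of PX4's onboard estimator, fusing them again downstream may add little.

```python
class PosVelKF1D:
    """Constant-velocity Kalman filter along one axis (hypothetical sketch).

    State x = [position, velocity]; fuses scalar position and velocity measurements.
    """

    def __init__(self, accel_var=0.5, pos_var=0.01, vel_var=0.05):
        # Noise variances are hypothetical placeholders; tune them for the real sensors.
        self.x = [0.0, 0.0]                  # state estimate: [position, velocity]
        self.P = [[1.0, 0.0], [0.0, 1.0]]    # state covariance
        self.q = accel_var                   # process noise (acceleration variance)
        self.r = [pos_var, vel_var]          # measurement variances: index 0 pos, 1 vel

    def predict(self, dt):
        """Propagate state and covariance with F = [[1, dt], [0, 1]]."""
        p, v = self.x
        self.x = [p + v * dt, v]
        P = self.P
        # Entries of F P F^T, expanded by hand for the 2x2 case.
        a = P[0][0] + dt * (P[1][0] + P[0][1]) + dt * dt * P[1][1]
        b = P[0][1] + dt * P[1][1]
        c = P[1][0] + dt * P[1][1]
        d = P[1][1]
        q = self.q
        # Add discrete white-acceleration process noise Q.
        self.P = [[a + q * dt ** 4 / 4, b + q * dt ** 3 / 2],
                  [c + q * dt ** 3 / 2, d + q * dt ** 2]]

    def _update(self, i, z):
        """Scalar measurement update for state component i (H selects x[i])."""
        P = self.P
        s = P[i][i] + self.r[i]              # innovation variance
        k = [P[0][i] / s, P[1][i] / s]       # Kalman gain
        y = z - self.x[i]                    # innovation
        self.x = [self.x[0] + k[0] * y, self.x[1] + k[1] * y]
        # P <- (I - K H) P
        self.P = [[P[r][c] - k[r] * P[i][c] for c in range(2)] for r in range(2)]

    def update_position(self, z):
        self._update(0, z)

    def update_velocity(self, z):
        self._update(1, z)


# Hypothetical usage: noiseless measurements of motion at 1 m/s, 10 Hz for 10 s.
kf = PosVelKF1D()
for k in range(1, 101):
    kf.predict(0.1)
    kf.update_position(k * 0.1)
    kf.update_velocity(1.0)
print(kf.x)  # close to [10.0, 1.0]
```

In a ROS node, `predict` would run with the time elapsed since the previous message, followed by `update_position` on each pose message and `update_velocity` on each velocity message, with one filter per axis.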