Inconsistent IMU sampling

-

Hi,

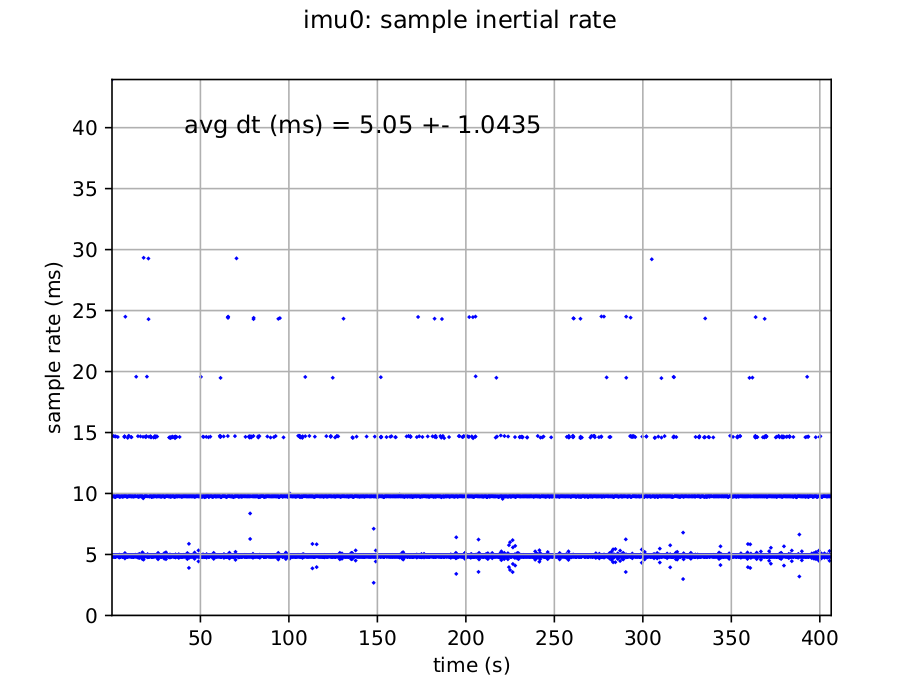

We've lately been noticing a lot of inconsistency in the publishing frequency and sample rate (from time stamp) of the IMU when publishing to ROS with the voxl-mpa-to-ros tool using SDK 1.1.3. This error is showing similar symptoms as other people (like this but doesn't seem to be fixed by setting CPU to performance mode. Using voxl-inspect-imu shows a dt of about 1ms, corresponding well to 1kHz (with the default IMU config).

We diagnose this problem primarily using

rostopic hzas well as with the following plot of IMU sampling rate we noticed while doing VIO calibrations. We tried also setting the sampling rate to 200z instead, to see if this would alleviate some pressure, but still there is quite a lot of inconsistency (of course worse with 1kHz).

This has been tested with very few other services running in the background, so compute usage when this occurs is minimal. The only thing running with any noticeable compute usage is

voxl-px4I was curious if this is expected behavior or if you had any suggestions for how to troubleshoot/debug/solve this problem?

-

Can you please try to use voxl-logger to log the IMU data concurrently with mpa-to-ros pipeline?

voxl-logger will save imu data with human readable timestamps, so you could easily plot that and double check if the IMU samples produced by

qrb5165-imu-serverare indeed jumping around. It is possible that something related to ROS is introducing a timestamp issue.Alex

-

@Alex-Kushleyev Thanks! I'll try this, note I should have mentioned this is with a voxl2 mini computer and on the ROS side the only thing happening is recording to rosbag.

Morten

-

@Morten-Nissov yes that makes sense.

so the data flow is as follows:

qrb5165-imu-server ->(mpa_msg) -> voxl-mpa-ros -> (ros_msg) -> rosbag recordSo, yeah it should be good to confirm whether voxl logger is also experiencing the issue, where the flow would be:

qrb5165-imu-server ->(mpa_msg) -> voxl_loggerAlso, please check if there is any difference when you set the cpu mode to perf:

voxl-set-cpu-mode perf -

@Alex-Kushleyev Sorry for the delay, some data for you in different configurations:

Getting data from log0000 and data.bag voxl-logger mean: 0.9766542020947352 ms std dev: 0.0013052458028126252 ms total messages: 61393 rosbag record mean: 3.230353079590143 ms std dev: 2.992801413066607 ms total messages: 18361 ---------- Getting data from log0001 and data_nodelay.bag voxl-logger mean: 0.9766513801209619 ms std dev: 0.0013052148029740315 ms total messages: 61342 rosbag record mean: 3.2565453276935123 ms std dev: 3.0139953610144303 ms total messages: 17860 ---------- Getting data from log0002 and data_perf.bag voxl-logger mean: 0.976641095612789 ms std dev: 0.001267007877929727 ms total messages: 61384 rosbag record mean: 3.73874577006071 ms std dev: 3.513557787123189 ms total messages: 15819 ----------Note significant differences in messages for each, indicating lots of message drop by the ros node.

Considering the sampling rate and standard deviation for different configurations. Logging was done with

voxl-logger -t 60 -i imu_apps -d bags/imu_test/androsbag record /imu_apps -O data --duration 60. I tried setting the rosbag to record with--tcpnodelay, but this seems to have no effect. During the performance run, the cpu usage was as follows:Name Freq (MHz) Temp (C) Util (%) ----------------------------------- cpu0 1804.8 40.1 22.33 cpu1 1804.8 39.7 18.10 cpu2 1804.8 40.1 17.82 cpu3 1804.8 39.7 16.16 cpu4 2419.2 39.0 0.00 cpu5 2419.2 38.6 0.00 cpu6 2419.2 37.8 0.00 cpu7 2841.6 39.0 0.00 Total 40.1 9.30 10s avg 12.26 ----------------------------------- GPU 305.0 37.4 0.00 GPU 10s avg 0.00 ----------------------------------- memory temp: 37.8 C memory used: 734/7671 MB ----------------------------------- Flags CPU freq scaling mode: performanceThe only thing which seems out of the ordinary here is that many CPUs are operating consistently at low frequency. Maybe you have a comment on why that could be? Otherwise, it seems the transfer from the voxl-imu-server to ros causes quite a bit of loss in messages. Here is some voxl 2 mini install information:

-------------------------------------------------------------- system-image: 1.7.8-M0104-14.1a-perf kernel: #1 SMP PREEMPT Sat May 18 03:34:36 UTC 2024 4.15 -------------------------------------------------------------- hw platform: M0104 mach.var: 2.0.0 -------------------------------------------------------------- voxl-suite: 1.3.3 --------------------------------------------------------------with this IMU config:

"imu0_enable": true, "imu0_sample_rate_hz": 1000, "imu0_lp_cutoff_freq_hz": 92, "imu0_rotate_common_frame": true, "imu0_fifo_poll_rate_hz": 100, "aux_imu1_enable": false, "aux_imu1_bus": 1, "aux_imu1_sample_rate_hz": 1000, "aux_imu1_lp_cutoff_freq_hz": 92, "aux_imu1_fifo_poll_rate_hz": 100, "aux_imu2_enable": false, "aux_imu2_spi_bus": 14, "aux_imu2_sample_rate_hz": 1000, "aux_imu2_lp_cutoff_freq_hz": 92, "aux_imu2_fifo_poll_rate_hz": 100, "aux_imu3_enable": false, "aux_imu3_spi_bus": 5, "aux_imu3_sample_rate_hz": 1000, "aux_imu3_lp_cutoff_freq_hz": 92, "aux_imu3_fifo_poll_rate_hz": 100 -

@Alex-Kushleyev Followup, I can change the

fifo_poll_rate_hzparameter and this has significantly increased the performance at the cost of significantly increase CPU load on the slow cores. Maybe you can advise how we should proceed."imu0_enable": true, "imu0_sample_rate_hz": 1000, "imu0_lp_cutoff_freq_hz": 92, "imu0_rotate_common_frame": true, "imu0_fifo_poll_rate_hz": 500,Name Freq (MHz) Temp (C) Util (%) ----------------------------------- cpu0 1804.8 41.7 44.95 cpu1 1804.8 41.7 33.94 cpu2 1804.8 42.0 46.88 cpu3 1804.8 42.4 44.00 cpu4 2419.2 40.5 0.00 cpu5 2419.2 40.5 0.00 cpu6 2419.2 39.7 0.00 cpu7 2841.6 40.1 0.00 Total 42.4 21.22 10s avg 15.10 ----------------------------------- GPU 305.0 38.6 0.00 GPU 10s avg 0.00 ----------------------------------- memory temp: 39.0 C memory used: 746/7671 MB ----------------------------------- Flags CPU freq scaling mode: performance Standby Not Active -----------------------------------Note even with this change some IMU messages are still dropped:

Getting data from log0003 and data_perf_fifo500.bag voxl-logger mean: 0.9766390443514245 ms std dev: 0.001688066415815054 ms total messages: 61397 rosbag record mean: 1.0005186426507142 ms std dev: 0.15095098578685434 ms total messages: 59599 -

@Morten-Nissov Note, although this does make things look better, there still is a pretty significant difference between the voxl logger and recording a rosbag, with a relatively non-insignificant performance difference between the two.

Also, not really sure why the voxl-logger doesn't manage a clean 1kHz, following the configuration. Seems to be consistently off the commanded value.

-

@Alex-Kushleyev Hi again, Sorry to keep spamming. I just wanted to make sure this is up-to-date as I progress.

A bit silly I didn't realize before, but I think the problem lies in the FIFO buffer reading vs IMU queue size in the

voxl-mpa-to-ros, that being said the conversion from pipe to ros also costs some latency it seems.In

voxl-mpa-to-ros, there is the followingm_rosPublisher = m_rosNodeHandle.advertise<sensor_msgs::Imu>(topicName, 1);Given that the FIFO buffer (with default settings) reads 10 IMU messages at a time, I think this should be changed to

m_rosPublisher = m_rosNodeHandle.advertise<sensor_msgs::Imu>(topicName, 25);so it has a little extra depending on what happens. This results in a very large improvement:

Getting data from log0004 and data_incr_queue.bag voxl-logger mean: 0.9766417070988479 ms std dev: 0.0014018018261035131 ms total messages: 61363 rosbag record mean: 0.9766411567432235 ms std dev: 0.02937541759378401 ms total messages: 59305although you can see there are still some dropped measurements and still a worsening of the standard deviation. I realized the IMU reading can also directly publish to ros from the

voxl-imu-server, and this seems a little more benefit as wellrosbag record mean: 0.9768075697417741 ms std dev: 0.012558722853297727 ms total messages: 61147still not totally without message dropping. This also still has some worsening about the standard deviation, I wonder if that's simply due to the conversion in

_clock_monotonic_to_ros_time. -

@Morten-Nissov , Sorry about the delay. Let me answer your questions / comments in order..

- the 8 cpu frequencies ranging from 1.8Ghz to 2.8Ghz are correct for the performance mode. The VOXL2 cpu has 3 types of cores and what you see are the maximum frequencies for those cores. Performance mode pegs the frequencies to max unless the cpu overheats, when frequency throttling will occur.

- the IMU frequency is not exactly 1Khz because the IMU is running using its own internal oscillator, which is does not perfectly align with 1000Hz output frequency, so the closest frequency the IMU can achieve is about 976Hz or so.

- Finally, you are absolutely right that the ROS publisher queue size being set to 1 is causing the messages to be dropped because they are published too quickly and are simply discarded when queue overflows.

I really don't see the down side of increasing the buffer size and i agree it should be set to at least the sample_rate / fifo_poll_rate , which is the number of samples that would be published at a time.

I will discuss this with the team.. thank you for pointing out the issue and good job figuring it out!

Alex

-

@Alex-Kushleyev Thanks for getting back to me, I must've missed reading the part regarding the CPU frequencies, now that you say it seems obvious.

the IMU frequency is not exactly 1Khz because the IMU is running using its own internal oscillator, which is does not perfectly align with 1000Hz output frequency, so the closest frequency the IMU can achieve is about 976Hz or so.

This is interesting actually, because the dt and sampling rate seems to consistently be slightly higher than 1kHz, approximately 1.03kHz. Can this make sense for the IMU?

Finally, you are absolutely right that the ROS publisher queue size being set to 1 is causing the messages to be dropped because they are published too quickly and are simply discarded when queue overflows.I really don't see the down side of increasing the buffer size and i agree it should be set to at least the sample_rate / fifo_poll_rate , which is the number of samples that would be published at a time.

I will discuss this with the team.. thank you for pointing out the issue and good job figuring it out!I didn't see a major change in CPU consumption, at least of the two solutions increasing the FIFO queue cost much more CPU than changing the queue size did. I would offer to make a PR if the change was more complicated than just changing a number

Anyway thanks. I'm a bit curious about the IMU, but otherwise I think this is solved.

Morten

-

About the IMU dt, sorry, i got it backwards, the dt is 0.976ms, so that would be slightly above 1khz. So we are on the same page.

Yes, reducing the FIFO size results in more SPI read transactions, which means that the CPU / Kernel has to do more work. There is a per transaction overhead that is much more significant than just getting more data per single transaction.

Let's not bother with the PR because it is a 2-character change

. I will get to it and test it soon.

. I will get to it and test it soon.Alex