@nickyblanch For anyone else working with ToF over voxl-mpa-to-ros, I'll document what I ended up doing. Also consider this my application to ModalAI's dev team

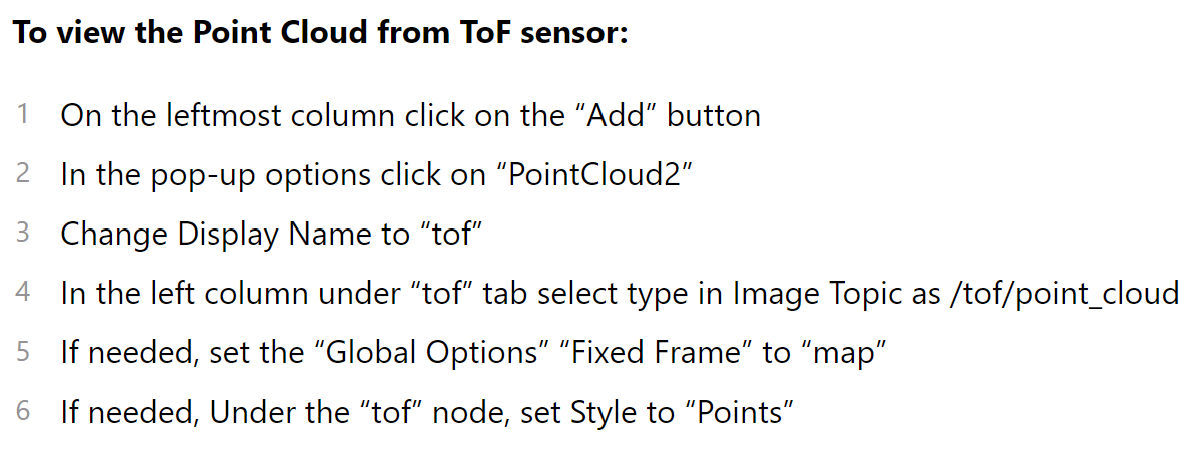

1.) One of the first things you will notice when retrieving ToF data over vox-mpa-to-ros is that the ToF pointcloud2 message is stamped with the frame world in the header. However, this frame will not exist by default in most ROS ecosystems. Therefore, to even visualize the data to start with, you will need to create a world frame. The most basic way to do this is with a static TF on either the ROS host or a remote computer connected to it (a laptop running some version of ROS1). This is simply a debug step to make sure you're getting data, and this won't be necessary later.

rosrun tf static_transform_publisher 0 0 0 0 0 0 1 map world 10

The frame name world is confusing here, because the world frame generally refers to a global frame in a ROS ecosystem. However, in this case, the world frame is local to the ToF sensor.

2.) Another thing you'll notice is that the timestamp in the header of each message published by voxl-mpa-to-ros is relative to boot time on the VOXL, not the standard Unix epoch time that ROS expects. Be careful to avoid timing errors by either:

-

(a) Republishing incoming messages with a new timestamp on a laptop (not recommended, may cause slight timing mismatches) (discussed later)

-

(b) Use the timestamps from the ROS messages originating from the VOXL for all transforms and additional ROS operations needed. Do not use

ros::Time::now()

because it will cause messages to be ignored by the message filter.

3.) If you're interested in stabilizing the ToF messages into a global frame to create a 3D map (which was my goal), then there is a series of transforms that you will need to publish. The transform tree looks like:

[ToF Frame] -> [IMU Frame] -> [Map Frame] -> [NED Frame]

The NED frame is optional, but neither the ToF frame nor the IMU frame are in NED, so it makes it easier to interpret the data if we standardize it to Z down, Y to the right, and X forward at the end.

4.) The first transform takes the ToF frame (world) to the IMU frame. This is a static transform. This transform will vary based on the specific setup of your VOXL and FC. For my Seeker drones, I pulled the extrinsics file and saw that the IMU and ToF frames have a 180 degree rotation in Yaw relative to each other. Therefore, a ROS transform like this is required:

void ToF_IMU_static_broadcaster(void) {

// SETUP ////////////////////////////////////////////////////////////////////////////////

static tf2_ros::StaticTransformBroadcaster static_broadcaster;

geometry_msgs::TransformStamped static_transformStamped;

// HEADER TIMESTAMP AND TF FRAMES ///////////////////////////////////////////////////////

static_transformStamped.header.stamp.sec = 0;

static_transformStamped.header.stamp.nsec = 0;

static_transformStamped.header.frame_id = [INSERT ToF FRAME NAME];

static_transformStamped.child_frame_id = [INSERT IMU FRAME NAME]; // CAN BE ANYTHING; I USED BASE_LINK

// TRANSLATION; NO TRANSLATION //////////////////////////////////////////////////////////

static_transformStamped.transform.translation.x = 0;

static_transformStamped.transform.translation.y = 0;

static_transformStamped.transform.translation.z = 0;

// ORIENTATION //////////////////////////////////////////////////////////////////////////

// See ModalAI VOXL SEEKER CAM V1 extrinsics for more information on the relationship

// between the ToF and the VIO coordinate frames. Essentially, the yaw axes are offset

// by 180 degrees (1 pi rads).

tf2::Quaternion quat;

quat.setRPY(0, 0, 3.14);

quat.normalize();

static_transformStamped.transform.rotation.x = quat.x();

static_transformStamped.transform.rotation.y = quat.y();

static_transformStamped.transform.rotation.z = quat.z();

static_transformStamped.transform.rotation.w = quat.w();

// SEND //////////////////////////////////////////////////////////////////////////////////

static_broadcaster.sendTransform(static_transformStamped);

}

5.) Next, you will need a transfrom from the IMU frame to a global frame. Although this global frame can have any name, you should not use world because it conflicts with the frame the ToF data is provided in. I used map; note that this matches the frame the VIO data is provided in, so we get the added benefit of being able to directly plot VIO data in this frame.

This transform is not static like the previous one. Rather, we need to dynamically change the transform to reflect the changing relationship between the frames attached to the VOXL itself and the unmoving world frame. VIO data allows us to do that. Essentially, the odometry xyz and rpy values are used as translations and rotations, respectively.

It is critical that we do rotation BEFORE translation. Therefore, this transform is technically split up to two distinct transforms in ROS in order to ensure rotation is handled before translation.

void poseCallback(const nav_msgs::Odometry::ConstPtr& msg) {

// RETRIEVE POSE DATA ///////////////////////////////////////////////////////////////////

float x = msg->pose.pose.position.x;

float y = msg->pose.pose.position.y;

float z = msg->pose.pose.position.z;

float x_o = msg->pose.pose.orientation.x;

float y_o = msg->pose.pose.orientation.y;

float z_o = msg->pose.pose.orientation.z;

float w_o = msg->pose.pose.orientation.w;

// TRANSFORM HANDLERS ////////////////////////////////////////////////////////////////////

static tf2_ros::TransformBroadcaster br;

geometry_msgs::TransformStamped transform_rotation;

geometry_msgs::TransformStamped transform_translation;

// ROTATION //////////////////////////////////////////////////////////////////////////////

tf2::Quaternion q(-1.0 * x_o, -1.0 * y_o, -1.0 * z_o, 1.0 * w_o);

// SEND TRANSFORMS ////////////////////////////////////////////////////////////////////////

// ROTATION

transform_rotation.header.stamp = msg->header.stamp;

transform_rotation.header.frame_id = [INSERT IMU FRAME NAME];

transform_rotation.child_frame_id = [INSERT INTERMEDIATE FRAME NAME];

transform_rotation.transform.translation.x = 0 * x;

transform_rotation.transform.translation.y = 0 * y;

transform_rotation.transform.translation.z = 0 * z;

transform_rotation.transform.rotation.x = q.x();

transform_rotation.transform.rotation.y = q.y();

transform_rotation.transform.rotation.z = q.z();

transform_rotation.transform.rotation.w = q.w();

br.sendTransform(transform);

// TRANSLATION

transform_translation.header.stamp = msg->header.stamp;

transform_translation.header.frame_id = [INSERT INTERMEDIATE FRAME NAME];

transform_translation.child_frame_id = [INSERT MAP FRAME NAME];

transform_translation.transform.translation.x = -1.0 * x;

transform_translation.transform.translation.y = -1.0 * y;

transform_translation.transform.translation.z = -1.0 * z;

transform_translation.transform.rotation.x = 0;

transform_translation.transform.rotation.y = 0;

transform_translation.transform.rotation.z = 0;

transform_translation.transform.rotation.w = 1;

br.sendTransform(transform_translation);

}

6.) Lastly, we should make a transform to take our current frame into a more standard NED frame. This is similar to the first transform because it is static.

void map_NED_static_broadcaster(void) {

// SETUP ////////////////////////////////////////////////////////////////////////////////

static tf2_ros::StaticTransformBroadcaster static_broadcaster;

geometry_msgs::TransformStamped static_transformStamped_NED;

// HEADER TIMESTAMP AND TF FRAMES ///////////////////////////////////////////////////////

static_transformStamped_NED.header.stamp.sec = 0;

static_transformStamped_NED.header.stamp.nsec = 0;

static_transformStamped_NED.header.frame_id = [INSERT MAP FRAME NAME];

static_transformStamped_NED.child_frame_id = [INSERT NED FRAME NAME];

// TRANSLATION; NO TRANSLATION //////////////////////////////////////////////////////////

static_transformStamped_NED.transform.translation.x = 0;

static_transformStamped_NED.transform.translation.y = 0;

static_transformStamped_NED.transform.translation.z = 0;

// ORIENTATION //////////////////////////////////////////////////////////////////////////

static_transformStamped_NED.transform.rotation.x = -0.5;

static_transformStamped_NED.transform.rotation.y = 0.5;

static_transformStamped_NED.transform.rotation.z = 0.5;

static_transformStamped_NED.transform.rotation.w = 0.5;

// SEND //////////////////////////////////////////////////////////////////////////////////

static_broadcaster.sendTransform(static_transformStamped_NED);

}

7.) If you find yourself wanting to mess around with the names of the frames that each message is published in, or with the timestamps of each message, there is an alternative to editing and re-building the voxl-mpa-to-ros package. Using a custom class like the one below, you can republish messages with a new timestamp or new frame, while keeping the same data.

class pc_subscribe_and_publish {

public:

pc_subscribe_and_publish() {

// TOPIC WE ARE SUBSCRIBING TO //////////////////////////////////////////////////////////

sub = node.subscribe("/tof_pc", 10, &pc_subscribe_and_publish::pc_callback, this);

// TOPIC WE ARE PUBLISHING //////////////////////////////////////////////////////////////

pub = node.advertise<sensor_msgs::PointCloud2>("[INSERT NEW TOPIC NAME]", 100);

}

void pc_callback(const sensor_msgs::PointCloud2ConstPtr& msg) {

// KEEP ALL DATA THE SAME, ONLY CHANGE TIMESTAMP ////////////////////////////////////////

sensor_msgs::PointCloud2 msg_out;

msg_out.header = std_msgs::Header();

msg_out.header.frame_id = [INSERT NEW FRAME NAME];

msg_out.header.stamp = msg->header.stamp;

msg_out.height = msg->height;

msg_out.width = msg->width;

msg_out.fields = msg->fields;

msg_out.is_bigendian = msg->is_bigendian;

msg_out.point_step = msg->point_step;

msg_out.row_step = msg->row_step;

msg_out.data = msg->data;

msg_out.is_dense = msg->is_dense;

pub.publish(msg_out);

}

private:

ros::NodeHandle node;

ros::Publisher pub;

ros::Subscriber sub;

};

8.) The last step to get everything running is to add some ROS requirements. In your main function, you'll need to make sure the above transform publishers are subscribing to the odom messages. You'll also need to create an instance of the custom class if you want to do any republishing.

int main(int argc, char** argv) {

// SETUP ////////////////////////////////////////////////////////////////////////////////

ros::init(argc, argv, "tof_tf_broadcaster");

ros::NodeHandle node;

// BASE LINK TF BROADCASTER /////////////////////////////////////////////////////////////

ros::Subscriber sub = node.subscribe("/qvio/odometry", 10, &poseCallback);

// SENSOR FRAME TF BROADCASTER //////////////////////////////////////////////////////////

map_NED_static_broadcaster();

ToF_IMU_static_broadcaster();

// PC2 SUBSCRIBER/PUBLISHER /////////////////////////////////////////////////////////////

pc_subscribe_and_publish sapobject;

// RUN

ros::spin();

return 0;

}

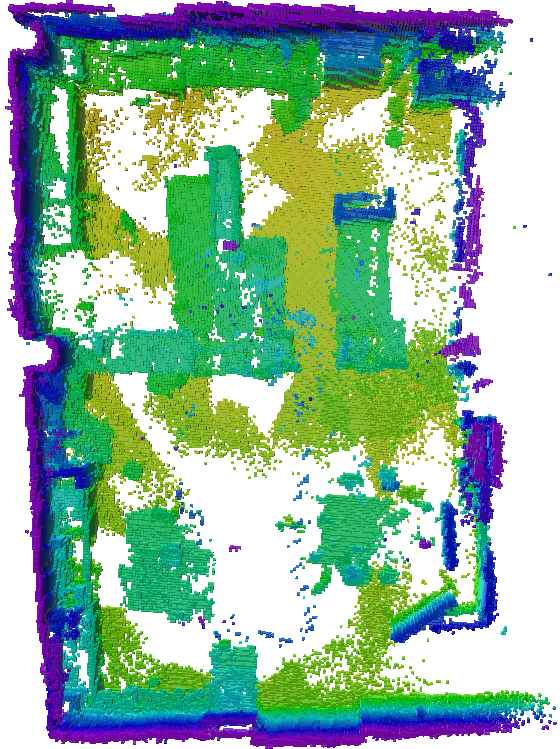

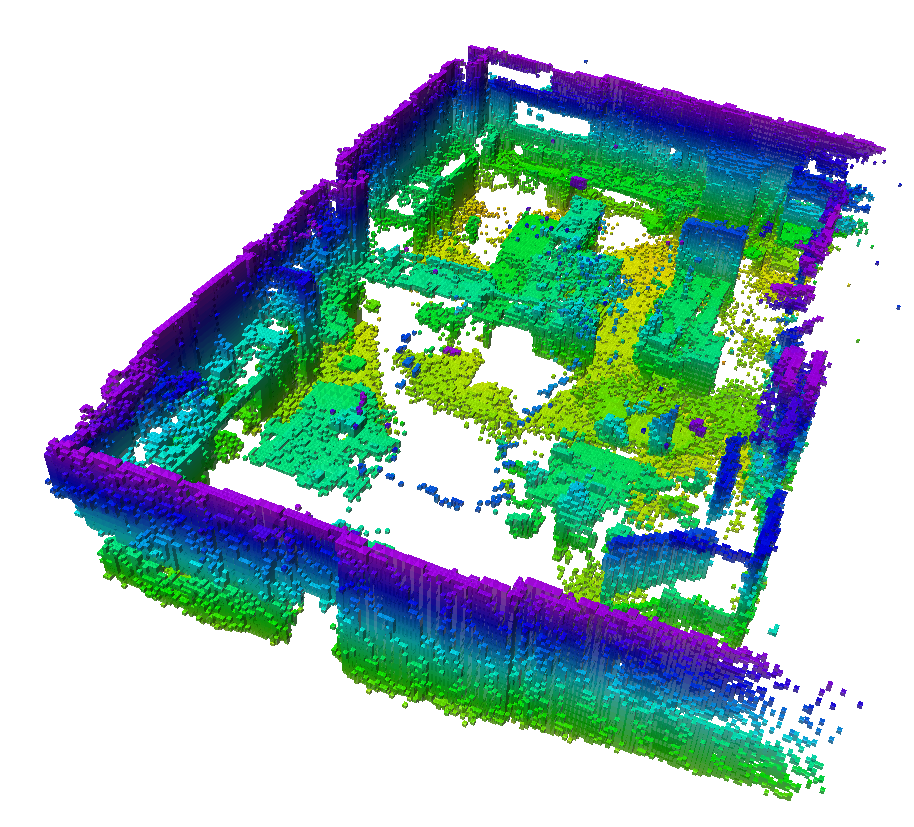

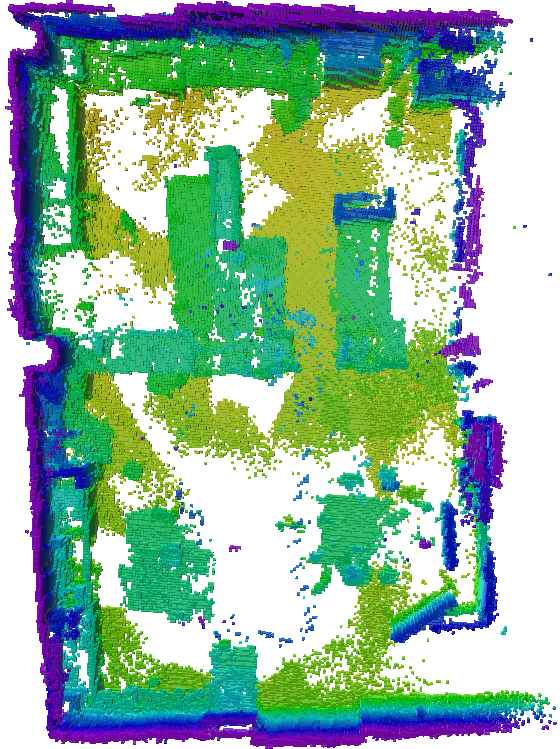

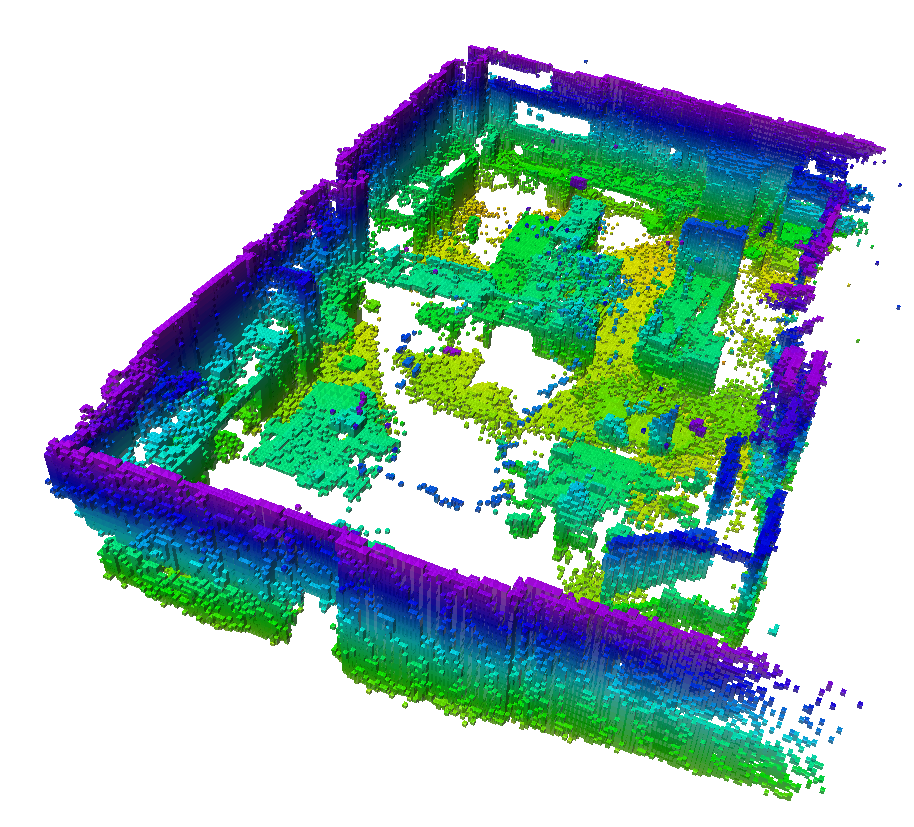

9.) Create a catkin workspace and setup your custom ROS package like any tutorial online will show you. Build the package, create a launch file, and run it. By visualizing the ToF data in the NED frame in RVIZ, you should see that objects are stabilized and the pointcloud moves around with the drone. A mapping package such as octomap_server can then create an occupancy map based on all of the ToF pointcloud data that is coming in.

This code is edited slightly from the exact code running in my ROS ecosystem, so it's not tested as-is, but let me know if you try it out and something isn't working. Good luck!