Implementing PX4 avoidance in mission mode using the voxl and QGC

-

I have set up the collision prevention system using the documentation given. Still, I see that there is no documentation for obstacle avoidance in mission mode, particularly the local planner node PX4 created. I have been able to fly a drone with PX4 avoidance using the Jetson TX2, but I do not know how to set this up on the voxl and get all the necessary dependencies installed for the local planner to function. For example, I wasn't able to install the pcl-ros package on the voxl. Is this possible to implement the mission mode version of obstacle avoidance using the voxl as the companion computer and the stereo pair, tracking camera, and the flight core? If not, are there some workarounds or different nodes I could use for the stereo camera pair, at least? I'd still like to use the algorithm implemented in the PX4 avoidance repo if possible.

-

You should be able to use the open source PX4 avoidance, but it may require a bit of an atypical architecture. When trying to use big ROS projects we recommended running Ubuntu on VOXL in Docker

The base Yocto layer should be ROS core and expose the VIO and depth from stereo modules:

Then the Ubuntu OS in the Docker should subscribe to the appropriate hardware layer topics to execute the PX4 Autonomy

We should have this better documented, but keep asking on this thread and we'll try to get you going. Shouldn't be any major blockers, but a few tweaks are probably necessary.

-

Thank you, I'll follow this and will let you know if I do it successfully or if there are any roadblocks in the way!

-

Alright, my first roadblock! I was able to set up a docker image running ubuntu and I have installed voxl-cam-ros, snap_vio, and the dfs node and have built the catkin workspace with no problems seemingly. My problem comes with what to do next exactly: I could continue with the PX4 avoidance README and try SITL but I'm not sure about that because that involves installing PX4's firmware and running a gazebo simulation, which seems too cumbersome both in space and graphics for the VOXL to do. The second option would be to run in hardware. Part of the process of running the PX4 local planner requires specifying the camera serial number along with the company name before running "generate_launchfile.sh" which adds several parameters to launch a specific launch file for the camera specified. The second option seems like the more likely option but I'm not sure.

So my question is two-fold: Should I try to run this in SITL at all or just run it in hardware and if so, what parameters/launch files do I need to include so I could run with this in hardware? Do I even need to set up the node for the stereo camera in the same way it is done for the Realsense or Occipital cameras (For reference, I'm specifically referring to the Run on Hardware section of the PX4 avoidance repo).

Thanks for the help already! Hopefully, I won't have too many more issues that I'll need more insight on.

-

We haven't tried the Avoidance stack in a while, so it's probably going to come down to what you are more comfortable with. If you are comfortable with Gazebo, SITL might be a good way to go.

The trick with ROS always comes down to making sure all of the nodes are talking to each other. There is a good tutorial on debugging ROS here

You'll want to have a list of the ROS topics the Ubuntu PX4 Avoidance stack requires, and then a list of the ROS topics the yocto base layer is providing. Then you'll need to map the two together.

You should only have one roscore, which should likely be in the Yocto layer. Your PC implementation should be able to see that if you have ROS_IP, etc configured properly.

The most valuable tool right now will probably be rostopic list and rostopic echo. Run ros topic on each of the layers:

- yocto base layer

- Ubuntu Docker

- PC workstation

Make sure you see the proper topics in each location and that they are publishing data.

Regarding stereo, I don't think that PX4 docs section is applicable. That RosDfsExample node should already be publishing a disparity map and point cloud. You can see it is publishing the disparity map here and point cloud here

-

Sounds easy enough, thanks for the quick reply!

-

Another roadblock! I decided to go straight to trying to run it in hardware as opposed to the simulator and I have been running into issues setting up the serial connection between the flight controller and VOXL. How do I specify the fcu_url on a serial port, specifically UART_J10 if I cannot talk to the ports with read and writes? I would not like to use udp and just use a serial connection but I'm confused about how to set up the fcu_url for a serial connection.

-

Not exactly familiar with that parameter. Have you seen how we implement mavros here https://docs.modalai.com/mavros? That might help. You might be able to use our voxl-vision-px4 to communicate with the flight controller, vio and mavros code: https://gitlab.com/voxl-public/ros/mavros_test

-

I've been working on this more but I'm still stuck at this point. I've tried using a stripped-down version of voxl-vision-px4 to essential just initiate the UART connection but this still doesn't help set up the serial connection for the avoidance repo. I have this running on the yocto base layer and I want to try running the voxl-vision-px4 or libvoxl_io functions in the docker image to initiate the UART connection and see if that does the trick. I've been having tons of problems trying to build these libraries on the roskinetic docker image, is their anyway to build the libvoxl_io library or voxl-vision-px4 on the roskinetic-xenial docker image?

-

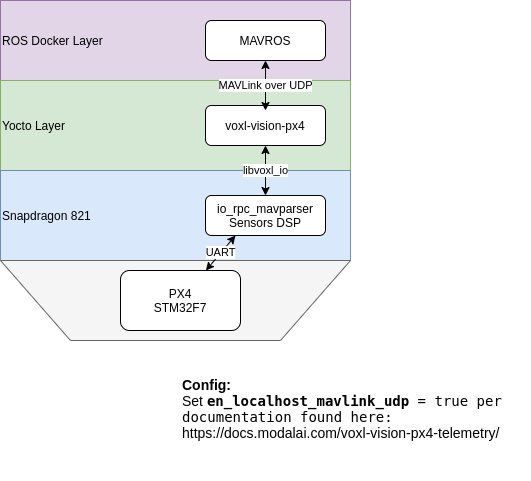

There is not a way for libvoxl_io to work inside of the Docker. It seems you have succesfully separated VIO from voxl-vision-px4, but you'll still need to use the MAVLink routing inside of voxl-vision-px4 which accepts MAVLink over UDP. Here's a diagram below to try and draw it out. You won't be able to use the ROS serial node directly in the docker. You'll need to pass the MAVLink over UDP, or run the ROS serial node in the Yocto layer and have it use libvoxl_io.

FWIW, the MPA architecture with the voxl-mpa-qvio-server will help separate VIO from vox-vision-px4, but that's still a month away from being stable.

-

Thank you for your help, the UDP connection seems to be working for the most part! The connection is initiated and the ROS connections seem to be connected but it seems like the pointcloud isn't being updated fast enough. I checked the pointcloud topic made by the snap_dfs manager and that was being updated at around 2Hz at most and the avoidance algorithm requires the pointcloud to updated at least 10Hz. I also opened up the pointcloud in rviz and saw that the pointcloud was updating at that rate. Is this normal behavior or is something else going on here? I apologize that I keep encountering roadblocks but I do think I am close to getting this!

-

Check out the bottom of this readme where it recommends different parameters to increase frame rate. https://github.com/ATLFlight/dfs-ros-example

Basically, in any computer vision task it's a tradeoff of frame rate and resolution

-

And FWIW, you don't need high resolution for obstacle avoidance. Just need to see things not to run in to!

-

@scottesicdrone curious to see if you were you able to get things working?

-

@Chad-Sweet

I had a look the link you shared: snap_vio

But I think it is not working correctly with the voxl module I have.

Currently, I am runningROS noeticon the voxl module, and try to run the VIO with downward camer.

By using snap_camera_ros (I edited it a little bit to use the downward camera), I can see the downward camera is correctly set up forsnap_vio. Also, I can seesnap_imuis working correctly, so I can see the output ofimu(angular_velocityandlinear_acceleration).But I cannot see any output of

/downward/vio/odometry.Could you please help me to run

snap_viowith the voxl module?

What should I check first? -

The running file is similar with this.

<!-- camera nodelet --> <!-- VIO nodelet --> <!-- fisheye camera info spoofer --> <!-- imu nodelet --> <!-- cpa nodelet --> -

@Chad-Sweet

Update.I have output

/downward/vio/odometrywith some error messages[ INFO] [1648150781.892213161]: [VISLAM] Got uav1/imu to uav1/dfc transform; Initializing VISLAM [ INFO] [1648150781.892816862]: [VISLAM] (from tf) tbc: x: 0.2 y: 0 z: -0.025 [ INFO] [1648150781.893485304]: [VISLAM] (from tf) ombc: X: 0 Y: 2.356 Z: 0 MachineVision is licensed as community user LNX_8074 supported? 1 LNX_8096 supported? 1 LNX_IA64 supported? 1 WINDOWS supported? 0 AR ERROR: arFileOpen(): Failed to open file: /data/.ros/vislam/Configuration.SF.xml FASTCV: fcvAvailableHardware Linux mempool cur block size 307200, new block size 307200 AR ERROR: arFileOpen(): Failed to open file: /data/.ros/na Error: TF_NAN_INPUT: Ignoring transform for child_frame_id "grav" from authority "unknown_publisher" because of a nan value in the transform (0.000000 0.000000 0.000000) (-nan -nan -nan nan) at line 244 in ~/geometry2/tf2/src/buffer_core.cpp Error: TF_DENORMALIZED_QUATERNION: Ignoring transform for child_frame_id "grav" from authority "unknown_publisher" because of an invalid quaternion in the transform (-nan -nan -nan nan) at line 257 in ~/geometry2/tf2/src/buffer_core.cpp [ERROR] [1648150782.073463584]: Ignoring transform for child_frame_id "grav" from authority "unknown_publisher" because of a nan value in the transform (0.000000 0.000000 0.000000) (-nan -nan -nan nan) [ERROR] [1648150782.077556213]: Ignoring transform for child_frame_id "grav" from authority "unknown_publisher" because of an invalid quaternion in the transform (-nan -nan -nan nan) [ERROR] [1648150782.094956631]: Ignoring transform for child_frame_id "grav" from authority "unknown_publisher" because of a nan value in the transform (0.000000 0.000000 0.000000) (-nan -nan -nan nan) [ERROR] [1648150782.095484499]: Ignoring transform for child_frame_id "grav" from authority "unknown_publisher" because of an invalid quaternion in the transform (-nan -nan -nan nan) Error: TF_NAN_INPUT: Ignoring transform for child_frame_id "grav" from authority "unknown_publisher" because of a nan value in the transform (0.000000 0.000000 0.000000) (-nan -nan -nan nan) at line 244 in ~/tf2/src/buffer_core.cpp Error: TF_DENORMALIZED_QUATERNION: Ignoring transform for child_frame_id "grav" from authority "unknown_publisher" because of an invalid quaternion in the transform (-nan -nan -nan nan) at line 257 in ~/geometry2/tf2/src/buffer_core.cppand repeated error message of

[ERROR] [1648150919.088496536]: [VISLAM] ERROR CODE = 2048, Stamp: 1648150919.022946523I am wondering how urdf should be set. Should there be any rotations of IMU or camera in urdf file?

urdf file I used has some rotations in y-axis for IMU and cameras. -

@Chad-Sweet

I am using customized urdf forsnap_viowhich has different numbers (positions and orientations) of sdf_pro.urdf.Is there any urdf file for VOXL?

-

@Chad-Sweet , No matter the tf setting in the urdf file is, the ros topic outputs are shown in the followings

rostopic echo mavros/imu/data(inbase_linkframe):xis backward,yis right,zis downrostopic echo downward/imu(inimuframe):xis right,yis backward,zis down

-

@modalab Hello, can you help me to resolve this problem?