M0149 camera refocusing and tuning parameters

-

@Alex-Kushleyev Thanks a lot, Alex, for such a detailed and insightful response. I will keep this thread starred for my own reference.

1. We are working on some helper tools for our customer use to aid with evaluating the quality of focus. The tool will display an augmented image in voxl-portal, showing the results of the analysis (how sharp the image is). In general, you want to take a high contrast object (checkerboard), place it in the area where you want to focus and adjust the focus until the image is sharpest. The depth of field of a fisheye camera should be very large (everything beyond a small distance (a few inches) should be in focus). This means that placing an object 1-2 feet away from the camera can be easily used to calibrate the focus. I hope to share an initial version of the tool by the end of this week. Meanwhile, you may try to focus the camera by eye while having the camera pointed at a high contrast object.

Yes, please share the helper tool with me at the earliest so I can focus the camera in the best possible way. Until then I will do it manually.

2. This "shadow" artifact happens for two reasons: the fisheye lens blocks more light at extreme angles, and the camera sensor CRA (chief ray angle) also contributes to reduced sensitivity at large angles. The standard way to fix this in the camera pipeline is using a lens-specific tuning file, which contains a Lens Shading Correction table, and the ISP can do it without any CPU overhead. However, for tracking cameras, we use RAW images (not going through ISP image processing) to avoid any image processing artifacts, so this lens shading correction feature is not available. Nevertheless, the image can be corrected on the CPU and it should not be too expensive to do that. The best place to do that would be during the 10->8 bit conversion that happens on the CPU (camera sends 10 bit data and we convert to 8 bit to use for feature tracking). Using 10 bit data will provide a better quality image and will save CPU cycles because the pixels will already be in memory. The 10->8 bit conversion is happening in the voxl-camera-server application. This feature is something we discussed internally, but we have not implemented it yet. The corners of a fisheye camera are usually of lesser value because the features there are going to be stretched, lower quality (due to optics) and may not appear in the frame for a long time. Nevertheless, with corrected image brightness in the corners, the performance of VIO could be even better.

Great. Looking forward to image brightness enhancement at the corners in a future voxl-camera-server.
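As an illustration of point 2, here is a minimal pure-Python sketch of applying a brightness correction during the 10->8 bit conversion. It is not the voxl-camera-server implementation (the thread says that feature does not exist yet). The radial gain model `1 + strength * r^2`, the `strength` value, and the function name are all assumptions for illustration; a real correction would use a gain table measured for the specific lens.

```python
def correct_and_convert(raw10, strength=0.6):
    """Brighten the corners of a 10-bit image and convert it to 8 bits.

    raw10: list of rows, each a list of ints in 0..1023.
    strength: hypothetical extra gain applied at the extreme corners.
    Returns a list of rows of ints in 0..255.
    """
    height, width = len(raw10), len(raw10[0])
    cy, cx = (height - 1) / 2.0, (width - 1) / 2.0
    r2_max = (cx * cx + cy * cy) or 1.0  # avoid dividing by zero for 1x1 input

    out = []
    for y, row in enumerate(raw10):
        out_row = []
        for x, value in enumerate(row):
            # Normalized squared distance from the image center (0 center, 1 corner).
            r2 = ((x - cx) ** 2 + (y - cy) ** 2) / r2_max
            gain = 1.0 + strength * r2
            # Apply the gain on the 10-bit value, then drop 2 bits and clip.
            out_row.append(min(255, int(value * gain) >> 2))
        out.append(out_row)
    return out
```

Applying the gain before dropping the two low bits is the point made above: the correction works on the higher-precision 10-bit data while the pixel is already being touched for the conversion.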

3. The exposure control algorithm that we implemented attempts to achieve a certain mean pixel (sample) value (MSV). It takes the average of all pixels and, if it is below the desired one, it increases gain and exposure to achieve the higher MSV. Gain and exposure both contribute to increasing image brightness, but they have their own side effects: long exposure will cause blur during motion and high gain will increase pixel noise. The QVIO algorithm applies a gaussian blur before doing feature detection / tracking, so it would be preferred to use higher gain than higher exposure and this is what we tuned for ov7251. I think going with those settings is a good start. However, the previous issue of dark corners can throw off the exposure control, driving the image in the center to be too bright. The image you provided seems a bit too dark to my eye, perhaps you can increase the target MSV and see if that helps? Were you able to track good features with QVIO using AR0144?

Thanks for providing insights into the preprocessing steps of QVIO. Yes, I am able to track features with QVIO using AR0144, but I have certain observations. I knew about the desired MSV and the exposure control algorithm. In my indoor scenario I tried setting ae_desired_msv to 80 from the default 60. QVIO worked well in a few cases, but I also saw drift as soon as the UAV landed back on the ground. In my last observation with OV7251, an MSV of 80 helped in indoor scenarios where lighting is low, so I was trying the same setup on AR0144. Outdoors with MSV 80, the tracked features are always on the horizon, which ultimately reduces the QVIO quality to 1%, probably leading to accumulated error in odometry, since the UAV showed wrong XYZ coordinates relative to the origin compared to reality (I was flying in GPS mode and only observing QVIO). After changing MSV back to 60 outdoors, it worked again. So I believe there is a need for separate profiles for indoor and outdoor scenarios. Let me know your thoughts.
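The gain-versus-exposure trade-off in point 3 can be sketched as a single control step. This is only an illustration of a "gain first" policy, not the libmodal-exposure algorithm. The gain limits (54 to 8000) are the ones printed by voxl-camera-server later in this thread; the exposure limits, the `max_step` clamp and the function names are assumptions.

```python
def clamp(value, low, high):
    return max(low, min(high, value))


def next_gain_and_exposure(msv, target_msv, gain, exposure_us,
                           gain_limits=(54, 8000),
                           exposure_limits_us=(20, 33000),  # assumed limits
                           max_step=2.0):
    """One auto-exposure step that moves the image toward target_msv.

    Brightness is treated as proportional to gain * exposure. The required
    change is applied to gain first; exposure only absorbs what gain cannot
    provide because it hit a limit.
    """
    # Limit how far brightness may change in a single frame.
    ratio = max_step if msv <= 0 else clamp(target_msv / msv, 1.0 / max_step, max_step)

    wanted_gain = gain * ratio
    new_gain = clamp(wanted_gain, *gain_limits)

    # Whatever gain could not deliver is handed to exposure.
    leftover = wanted_gain / new_gain
    new_exposure_us = clamp(exposure_us * leftover, *exposure_limits_us)
    return new_gain, new_exposure_us
```

For example, `next_gain_and_exposure(30, 60, 100, 5000)` doubles the gain and leaves exposure alone, while starting from a gain of 6000 saturates the gain at 8000 and lengthens the exposure to make up the difference.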

4. We are slowly switching over to using AR0144 cameras for tracking (VIO). In general M0149 camera module is a better camera module all around compared to ov7251 - better and higher resolution image sensor, better lens, so we expect better QVIO performance. I will double check with the team about any specific tuning for better QVIO performance using AR0144.

Yes, my thought process was the same: AR0144 should perform better. Let me know if any specific tuning would help QVIO further.

I have also observed, as I mentioned, that there are multiple (probably) good features near the UAV, but it still picks features that are extremely far away, which degrades quality and might eventually lead to failure. How can we improve feature detection close to the UAV?

Also, when the UAV rotates suddenly, I have seen features being detected only after some time in the center part of the image, sometimes leading to no features at all. Can this be related to camera focus?

On a separate note, is there any tool which can visualize voxl-logger data csv files in a better way? Maybe running voxl-portal and loading the qvio_extended csv file to view the trajectory and tracked features in 3D instead of live?

-

@Alex-Kushleyev Any update over here?

-

@Aaky , thanks for the follow up.

I should be ready to share a test version of a focus helper tool early next week. Then I can try the lens shading correction inside voxl-camera-server.

Regarding tuning target MSV, yes, it is not straightforward, especially when you are transitioning between indoor and outdoor environments. Those environments are so different that you may need to switch parameters for auto exposure control. For example, the auto exposure control in phone cameras also detects the scenario (indoor, outdoor, daylight, cloudy, even type of light indoors) and switches processing params according to the scenario. This is something we do not currently support. If you want to investigate this yourself, you should collect data logs (using voxl-logger) using different MSV in the different types of environments and find the MSV that works best (detects most good features). You can also change the way MSV is computed -- for example if you are seeing a lot of bright sky outdoors, many pixels can be extremely bright, so using them in MSV computation is going to make the ground completely dark. We already have a param for ignoring a certain amount of saturated pixels: https://gitlab.com/voxl-public/voxl-sdk/services/voxl-camera-server/-/blob/master/src/config_defaults.cpp?ref_type=heads#L162 -- this is the fraction of all pixels that will be ignored for MSV computation if saturated to max value. If you increase this value (from 0.2 to 0.3) then more saturated pixels will be ignored, if present, putting more weight on non-saturated pixels.

Regarding features that are far away, you should double check your intrinsic calibration of the lens. If the lens is not calibrated well, then features that are far away will create large errors in state estimation. Have you done the intrinsic camera calibration for AR0144?
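To make the saturated-pixel parameter more concrete, here is a minimal sketch of an MSV computation that ignores up to a given fraction of saturated pixels. It follows the description above, not the actual code behind the linked config default; the function name, argument names and the 8-bit saturation value are assumptions.

```python
def msv_ignoring_saturated(pixels, max_ignore_fraction=0.2, saturated_value=255):
    """Mean sample value over a flat sequence of 8-bit pixels.

    At most max_ignore_fraction of all pixels are dropped from the mean,
    and only if they sit at saturated_value.
    """
    n = len(pixels)
    if n == 0:
        raise ValueError("empty image")

    n_saturated = sum(1 for p in pixels if p >= saturated_value)
    n_ignored = min(n_saturated, int(max_ignore_fraction * n))

    # Remove the ignored saturated pixels from both the sum and the count.
    total = sum(pixels) - n_ignored * saturated_value
    count = n - n_ignored
    return total / count if count else float(saturated_value)
```

With half of a frame saturated by sky and the other half at 50, raising the fraction lowers the computed MSV (152.5 with nothing ignored, 126.875 with 0.2, 50.0 with 0.5), so the controller would brighten the ground more.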

@Aaky said in M0149 camera refocusing and tuning parameters:

Is there any tool which can visualize voxl-logger data csv files in better way

Can you specify what data you might want to visualize?

-

@Alex-Kushleyev said in M0149 camera refocusing and tuning parameters:

I should be ready to share a test version of a focus helper tool early next week. Then I can try the lens shading correction inside voxl-camera-server.

Sure. Will be waiting for this tool.

Regarding MSV tuning, yes, I will investigate this further.

@Alex-Kushleyev said in M0149 camera refocusing and tuning parameters:

Regarding features that are far away, you should double check your intrinsic calibration of the lens. If the lens is not calibrated well, then feature that are far away will create large errors in state estimation. Have you done the intrinsic camera calibration for AR0144?

Yes, I have done the intrinsic calibration with AR0144. What is the ideal reprojection error from camera calibration, and is there any way to validate that the intrinsics are set up correctly for the camera?

@Alex-Kushleyev said in M0149 camera refocusing and tuning parameters:

Can you specify what data you might want to visualize?

I want to visualize the 3D VIO trajectory as shown in voxl-portal, but offline, after collecting data with voxl-logger.

Also, is changing MSV effective with voxl-replay, since it operates on the logged camera frames directly? Which parameters can't be tuned with voxl-replay?

-

@Alex-Kushleyev I need one more input regarding QVIO tuning. I recorded some datasets for QVIO with "voxl-logger --preset_odometry", which were saved successfully in the /data/voxl-logger directory.

Now if I try to replay them with, say, "voxl-replay -p /data/voxl-logger/log009/", I get the following error.

    voxl2:~$ voxl-replay -p /data/voxl-logger/log0009/ -d
    enabling debug mode
    opening json info file: /data/voxl-logger/log0009/info.json
    using log_format_version=1
    log contains 7 channels
    log started at 1878796637772ns
    log channel 0:
    type: imu
    out path: /run/mpa/imu0/
    log path: /data/voxl-logger/log0009//run/mpa/imu0/
    total samples: 0
    log channel 1:
    type: imu
    out path: /run/mpa/imu1/
    log path: /data/voxl-logger/log0009//run/mpa/imu1/
    total samples: 0
    log channel 2:
    type: imu
    out path: /run/mpa/imu_apps/
    log path: /data/voxl-logger/log0009//run/mpa/imu_apps/
    total samples: 54491
    log channel 3:
    type: cam
    out path: /run/mpa/tracking/
    log path: /data/voxl-logger/log0009//run/mpa/tracking/
    total samples: 1612
    log channel 4:
    type: cam
    out path: /run/mpa/qvio_overlay/
    log path: /data/voxl-logger/log0009//run/mpa/qvio_overlay/
    total samples: 268
    log channel 5:
    type: vio
    out path: /run/mpa/qvio/
    log path: /data/voxl-logger/log0009//run/mpa/qvio/
    total samples: 1594
    log channel 6:
    type: qvio
    out path: /run/mpa/qvio_extended/
    log path: /data/voxl-logger/log0009//run/mpa/qvio_extended/
    total samples: 1595
    opening csv file: /data/voxl-logger/log0009//run/mpa/imu0/data.csv
    failed to open csv file: /data/voxl-logger/log0009//run/mpa/imu0/data.csv
    error: No such file or directory

Do I need to include more pipes while logging? I am actually unable to replay any of my collected data. Also, will I be able to visualize the results on voxl-portal once voxl-replay works?

-

@Aaky said in M0149 camera refocusing and tuning parameters:

/data/voxl-logger/log0009//run/mpa/imu0/data.csv

can you check if this file exists?

/data/voxl-logger/log0009//run/mpa/imu0/data.csv

-

@Alex-Kushleyev This file doesn't exist. So in order to record this file, which parameter is essential in voxl-logger?

-

@Aaky oh, I believe imu0 is not accessible directly by the CPU (the DSP is connected to it), so it cannot be logged. Can you please double check imu_apps to make sure it has data? I think it is possible that the logging preset was inherited from VOXL1, which could access IMU0.

I believe imu_apps is used for VIO, so other IMUs are not needed. You can modify your logging preset to remove imu0 and imu1, and you should be able to modify the existing log to get rid of the imu0, imu1 entries (modify the info.json in the log).
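Editing the existing log's info.json could be scripted along these lines. This is only a sketch: the layout of info.json is not shown in this thread, so the `channels` list key and the `n_channels` count key are hypothetical names that must be checked against the real file before use. Keep a backup copy of the original info.json.

```python
import json


def drop_channels(info, unwanted_pipes=("imu0", "imu1"),
                  list_key="channels", count_key="n_channels"):
    """Return a copy of the parsed info.json without the unwanted channels.

    list_key and count_key are assumed names, not confirmed voxl-logger
    fields. A channel is dropped when any of its string fields contains
    "/<pipe>/", for example "/run/mpa/imu0/".
    """
    def mentions_unwanted(channel):
        return any(
            isinstance(value, str) and f"/{pipe}/" in value
            for value in channel.values()
            for pipe in unwanted_pipes
        )

    cleaned = dict(info)
    cleaned[list_key] = [c for c in info[list_key] if not mentions_unwanted(c)]
    if count_key in cleaned:
        cleaned[count_key] = len(cleaned[list_key])
    return cleaned


def rewrite_info_file(path):
    """Load info.json from path, drop imu0/imu1, and write it back in place."""
    with open(path) as f:
        info = json.load(f)
    with open(path, "w") as f:
        json.dump(drop_channels(info), f, indent=4)
```

Matching on "/imu0/" rather than "imu" keeps the imu_apps channel, which is the one the reply above says is used for VIO.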

-

@Alex-Kushleyev Thanks Alex. Will check this out.

Any update on optimized voxl-camera-server parameters for the AR0144 camera?

Also, I have one doubt. Let's say I navigate indoors very slowly, at a maximum velocity of 0.5 m/sec, and my altitude and yaw movements are also slow. Can I then expect that the motion blur problem (caused by the exposure parameters) won't come up?

If this is true, I can focus more on the gain, MSV and ae_slope parameters.

Also, does increasing the ae_slope parameter make more sense outdoors, where we can see a bright horizon, than indoors?

-

@Alex-Kushleyev One more query,

Where can I visualize the output of voxl-replay? On voxl-portal? Also, I saw a few people plotting the estimated x, y and z values of VIO. Is this plotting available somewhere?

Also, I am seeing a very high gain value in the qvio_overlay image compared to OV7251. On OV7251 the gain used to be around 100-150, but in the same environment with AR0144 it's around 400-500. Is this normal? Does this mean the granularity of the image is good?

Sorry I am throwing multiple queries to you at a time.
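For the offline x, y, z plotting asked about above, a small script over the voxl-logger csv is one option. The sketch below is an illustration, not an existing ModalAI tool. The default column names are an assumption about the qvio csv header and must be replaced with whatever the first line of your data.csv actually contains; the plotting helper needs matplotlib installed on the machine where you run it.

```python
import csv
import math

# Assumed column names; check the header line of your data.csv.
DEFAULT_COLUMNS = ("T_imu_wrt_vio_x(m)", "T_imu_wrt_vio_y(m)", "T_imu_wrt_vio_z(m)")


def load_trajectory(lines, columns=DEFAULT_COLUMNS):
    """Parse csv text lines (an open file works) into a list of (x, y, z)."""
    reader = csv.DictReader(lines, skipinitialspace=True)
    return [tuple(float(row[c]) for c in columns) for row in reader]


def path_length(points):
    """Total distance travelled along the trajectory, in the csv's units."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def plot_trajectory(points):
    """Show the trajectory in 3D; matplotlib is imported only when needed."""
    import matplotlib.pyplot as plt

    xs, ys, zs = zip(*points)
    ax = plt.figure().add_subplot(projection="3d")
    ax.plot(xs, ys, zs)
    ax.set_xlabel("x")
    ax.set_ylabel("y")
    ax.set_zlabel("z")
    plt.show()
```

Typical use would be `with open(".../run/mpa/qvio/data.csv") as f: plot_trajectory(load_trajectory(f))` on a laptop after copying the log off the drone.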

-

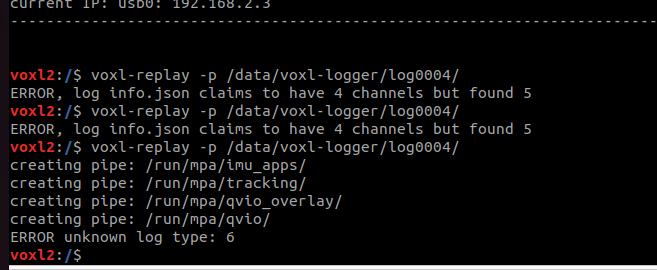

@Alex-Kushleyev I noticed one more problem in voxl-replay.

After removing imu0 and imu1 from my info.json, I keep getting the problem below.

After digging in the code, I found out that the 'qvio_extended' channel type isn't handled in the code over here, so this "unknown log type: 6" error comes up.

I also removed this channel and tried playing the tracking, imu and qvio_overlay channels. Now, even if I change MSV in the voxl-camera-server config file in the logged data folder, I am unable to see this change on the camera streams in voxl-portal. How can I ensure that changes in voxl-camera-server.conf are applied to the replayed camera frames in order to fetch qvio results?

-

@Aaky ,

I will double check with the team to see if we need to fix the log playback. Perhaps you should just try to collect a new log that does not have those extra imu channels which do not exist. Have you tried that? Also, if you don't collect the qvio extended log, the log playback should not complain. But I believe you are correct that there is an issue that the log replay cannot play back the extended qvio data. Normally that is not needed, since you want to play back the raw data but not any of the results of qvio. That is, if you want to run qvio again using a log, you don't want to play back the old qvio results, because two processes (log replay and the actual qvio process) would then attempt to publish qvio results during replay.

Regarding the MSV tuning, please understand that by replaying the log with images and IMU, you are bypassing the whole camera pipeline, so the camera exposure and gain cannot be changed. Perhaps I was not clear in my original suggestion, so let me clarify.

By logging data sets with different MSV target values (or different exposure control strategies), you can feed the data into the same VIO algorithm and see which one performs better. Ideally, you would collect exactly the same data set with different MSV parameters. That is impossible in real life, but you can do something that is pretty close. For indoor environments, you can hand carry the drone along the same path and record the data sets with different MSV target values. Indoor environments are simpler and it is easier to reproduce the same conditions. Outdoors could be a little bit trickier but still possible.

Additionally, what you can do is after the log is collected, you can process the individual images using some scripts (opencv?) and see how different processing affects the behavior of VIO. Please keep in mind that that will not be exactly the same as changing gain and exposure on the camera (because changing exposure will affect blur and changing gain will affect how noisy the pixels are), but if you are trying to find good starting points for the MSV, you could try post processing the images before the playback. Another approach is to apply some kind of gamma correction but that can also affect the image noise.
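As a concrete example of the post-processing idea above, gamma correction on an 8-bit grayscale frame can be done with a 256-entry lookup table. This is a generic sketch, not part of voxl-replay; how the logged frames are read and written back is left out because the log image format is not described in this thread. As noted above, this changes brightness only and will not reproduce the blur or noise effects of real exposure and gain changes.

```python
def gamma_lut(gamma):
    """Build a 256-entry lookup table; gamma > 1 brightens mid-tones."""
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    return bytes(
        min(255, round(255.0 * (i / 255.0) ** (1.0 / gamma)))
        for i in range(256)
    )


def apply_gamma(pixels, gamma):
    """Apply gamma correction to raw 8-bit grayscale pixel data (bytes)."""
    return pixels.translate(gamma_lut(gamma))
```

For example, `apply_gamma(frame_bytes, 1.5)` lifts dark regions while leaving pure black and pure white unchanged, which can serve as a rough stand-in for a higher MSV target when comparing VIO behavior on the same log.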

-

@Alex-Kushleyev Thanks for the explanation. Yes will follow your guidelines for MSV tuning.

I have a query about the libmodal-exposure codebase.

Check the code at this link . It says OV7251 needs a few frames to be skipped for gain calculation. Is this valid for AR0144 also? I am assuming the same MSV calculation codebase is being used for AR0144 as well, on the libmodal-exposure v0.1.0 branch.

-

@Aaky , yes there was originally an issue related to ov7251 configuration that resulted in some flashing behavior if gain was updated every frame. However, this has been fixed: we have updated the actual ov7251 sensor driver, and voxl-camera-server is also setting an updated register on the camera to allow gain update every frame : https://gitlab.com/voxl-public/voxl-sdk/services/voxl-camera-server/-/blob/master/src/cci_direct_helpers.cpp?ref_type=heads#L44

Here is the actual auto exposure configuration : https://gitlab.com/voxl-public/voxl-sdk/services/voxl-camera-server/-/blob/master/src/config_defaults.cpp?ref_type=heads#L166 (both gain and exposure update period is set to 1)

AR0144 does not have this issue, the default params are here : https://gitlab.com/voxl-public/voxl-sdk/services/voxl-camera-server/-/blob/master/src/config_defaults.cpp?ref_type=heads#L194

-

@Alex-Kushleyev Thanks for the input Alex. Much appreciated.

-

@Alex-Kushleyev By any chance, is the camera focus application ready for testing? I am actually struggling to get proper focus on AR0144. Initially I was able to get very good focus, but now the image just looks very blurry. Have a look at the images below. These images were captured by voxl-logger.

First time focus :

Current focus :

The current image appears blurry, and I am also having a hard time getting the camera calibration right (reprojection error below 0.5). Previously it was good and my camera calibration converged very quickly. Let me know.

-

@Aaky , yes there is something you can test.

First, you will need to install an updated version of opencv with python3 bindings. I built the package and it can be downloaded here : . Source is here :

Second, I have some experimental tools that let you subscribe and publish images via mpa. (source) . You can build the package yourself or grab it here. Install it on voxl2.

After installing the two packages and starting camera server, execute this:

    cd /usr/share/modalai/voxl-mpa-tools
    python3 pympa-focus-helper.py -i tracking

If all goes well, it will receive images and print dots to show that it's running (note that I used hires camera for testing):

    voxl2:/usr/share/modalai/voxl-mpa-tools$ python3 pympa-focus-helper.py
    output image dimensions : [1920, 1080]
    connecting to camera pipe hires_color
    subscribed to camera pipe /run/mpa/hires_color/, channel 0
    created output pipe hires_debug, channel 0, flags 0
    waiting for the first image
    .width=3840, height=2160, format=1, size=12441600
    .............................

Then you can open voxl-portal and look at the new image hires_debug. The image will be monochrome for now (but I found that mono image is better for focusing).

Focus helper (source here https://gitlab.com/voxl-public/voxl-sdk/utilities/voxl-mpa-tools/-/blob/pympa-experimental/tools/python/pympa-focus-helper.py) has options for input image pipe name, so you can change that to your tracking camera (python3 pympa-focus-helper.py -i <camera_name>) and other options.

You will see the original image with overlay of zoomed in window (top-left) and output of edge detector (top-right). You can use the zoomed in ROI to tune the lens focus by hand. I suggest pointing the camera at something like a checkerboard at the distance that you want to focus at. Hold the camera steady and turn the lens to see the sharpness of the edges (you can also check the edge detector output). For a wide FOV camera, which has a large depth of field, I think everything beyond several inches away from the camera should be in focus. You can experiment a bit. Currently we do not output any metric, but it is actually pretty easy to tune by eye using the zoomed in ROI.
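Since the helper does not output a metric yet, one simple number to watch while turning the lens is the gradient energy inside the ROI. The sketch below is a generic sharpness measure written in pure Python for clarity; it is not taken from pympa-focus-helper.py, and the function name and ROI arguments are made up for illustration.

```python
def roi_sharpness(image, x0, y0, width, height):
    """Mean squared gradient inside a region of interest.

    image: grayscale frame as a list of rows of ints.
    (x0, y0): top-left corner of the ROI; width and height must be >= 2.
    Higher values mean stronger edges, which suggests better focus.
    """
    if width < 2 or height < 2:
        raise ValueError("ROI must be at least 2x2 pixels")

    total = 0
    count = 0
    for y in range(y0, y0 + height - 1):
        for x in range(x0, x0 + width - 1):
            dx = image[y][x + 1] - image[y][x]  # horizontal difference
            dy = image[y + 1][x] - image[y][x]  # vertical difference
            total += dx * dx + dy * dy
            count += 1
    return total / count
```

The absolute value depends on the scene and lighting, so it is only meaningful as a relative number: keep the camera and checkerboard fixed, turn the lens, and stop where the value peaks.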

Please try it out and let me know if you run into any issues.

-

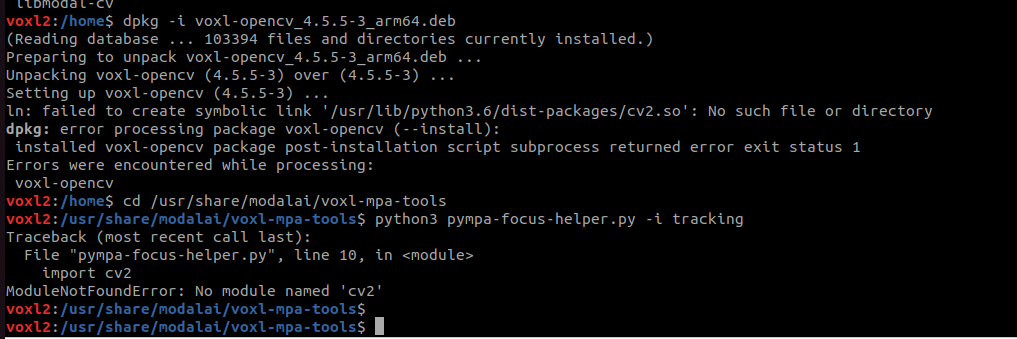

@Alex-Kushleyev Thank you Alex for providing this package. I am facing one problem in installing opencv with python3 bindings as shown below.

This issue is preventing me from running the command "python3 pympa-focus-helper.py -i tracking"

It isn't able to find cv2. I think the symlink to /usr/lib/python3.6/dist-packages/cv2.so isn't working.

Please let me know where to look next.

-

@Aaky ,

I will check this a bit later, but can you verify that the "/usr/lib/python3.6/dist-packages/" path exists on voxl2? If not, just create it with "mkdir -p /usr/lib/python3.6/dist-packages/" and then install the package again. Thanks!

-

@Alex-Kushleyev Thanks Alex. This fix worked. Now I can see hires_debug over voxl-portal.

On a separate note, I need some hardware help with the AR0144 camera. While we were assembling this camera on our drone, the black lens holder shown in the picture below came off on one side.

In order to fix this, we applied some Fevikwik (instant adhesive) to it. This made it sturdy, but now voxl isn't able to find this camera. Even voxl-camera-server -l says 1 camera detected, which is the TOF.

I am aware this must be some hardware problem, but can you advise me on anything else I can check?

Logs:

    voxl2:~$ voxl-camera-server -d 0
    =================================================================
    configuration for 2 cameras:
    cam #0
    name: tracking
    sensor type: ar0144
    isEnabled: 1
    camId: 0
    camId2: -1
    fps: 30
    en_rotate: 0
    en_rotate2: 0
    en_preview: 1
    pre_width: 1280
    pre_height: 800
    en_raw_preview: 1
    en_small_video: 0
    small_video_width: -1
    small_video_height: -1
    en_large_video: 0
    large_video_width: -1
    large_video_height: -1
    en_snapshot: 0
    snap_width: -1
    snap_height: -1
    ae_mode: lme_msv
    standby_enabled: 0
    decimator: 1
    independent_exposure:0
    cam #1
    name: tof
    sensor type: pmd-tof
    isEnabled: 1
    camId: 1
    camId2: -1
    fps: 5
    en_rotate: 0
    en_rotate2: 0
    en_preview: 1
    pre_width: 224
    pre_height: 1557
    en_raw_preview: 1
    en_small_video: 0
    small_video_width: -1
    small_video_height: -1
    en_large_video: 0
    large_video_width: -1
    large_video_height: -1
    en_snapshot: 0
    snap_width: -1
    snap_height: -1
    ae_mode: off
    standby_enabled: 0
    decimator: 5
    independent_exposure:0
    =================================================================
    DEBUG: Attempting to open the hal module
    DEBUG: SUCCESS: Camera module opened on attempt 0
    DEBUG: ----------- Number of cameras: 1
    DEBUG: Cam idx: 0, Cam slot: 3, Slave Address: 0x007A, Sensor Id: 0x003D
    GPS server Connected
    DEBUG: Connected to cpu-monitor
    DEBUG: ------ voxl-camera-server: Starting 2 cameras
    Starting Camera: tracking (id #0)
    DEBUG: Checking Gain limits for Camera: tracking
    Using gain limits min: 54 max: 8000
    DEBUG: cam ID 0 checking for fmt: 37 w: 1280 h: 800 o: 0
    DEBUG: i: 0 fmt: 34 w: 176 h: 144 o:0
    DEBUG: i: 4 fmt: 34 w: 176 h: 144 o:1
    DEBUG: i: 8 fmt: 35 w: 176 h: 144 o:0
    DEBUG: i: 12 fmt: 35 w: 176 h: 144 o:1
    DEBUG: i: 16 fmt: 33 w: 176 h: 144 o:0
    DEBUG: i: 20 fmt: 37 w: 224 h:1557 o:0
    DEBUG: i: 24 fmt: 38 w: 224 h:1557 o:0
    DEBUG: i: 28 fmt: 32 w: 224 h:1557 o:0
    DEBUG: i: 32 fmt: 36 w: 224 h:1557 o:0
    ERROR: Camera 0 failed to find supported preview config: 1280x800
    WARNING: Failed to start cam tracking due to invalid resolution
    WARNING:
assuming cam is missing and trying to compensate Starting Camera: tof (originally id #1) with id offset: 1 DEBUG: Checking Gain limits for Camera: tof Using gain limits min: 54 max: 8000 DEBUG: cam ID 0 checking for fmt: 38 w: 224 h: 1557 o: 0 DEBUG: i: 0 fmt: 34 w: 176 h: 144 o:0 DEBUG: i: 4 fmt: 34 w: 176 h: 144 o:1 DEBUG: i: 8 fmt: 35 w: 176 h: 144 o:0 DEBUG: i: 12 fmt: 35 w: 176 h: 144 o:1 DEBUG: i: 16 fmt: 33 w: 176 h: 144 o:0 DEBUG: i: 20 fmt: 37 w: 224 h:1557 o:0 DEBUG: i: 24 fmt: 38 w: 224 h:1557 o:0 DEBUG: i: 28 fmt: 32 w: 224 h:1557 o:0 DEBUG: i: 32 fmt: 36 w: 224 h:1557 o:0 VERBOSE: Successfully found configuration match for camera 0: 224x1557 VERBOSE: Adding preview stream for camera: 0 DEBUG: Converted gralloc flags 0x20900 to GBM flags 0x1400000 VERBOSE: Dumping GBM flags DEBUG: Found flag GBM_BO_USAGE_CAMERA_WRITE_QTI DEBUG: Found flag GBM_BO_USAGE_HW_COMPOSER_QTI VERBOSE: Opened GBM fd gbm_create_device(156): Info: backend name is: msm_drm VERBOSE: Created GBM device DEBUG: Allocated BO with width=224 height=1557 stride=336 aligned_w=336 aligned_h=1557 size=524288 flags=0x20900 format=GBM_FORMAT_RAW12 DEBUG: Converted gralloc flags 0x20900 to GBM flags 0x1400000 VERBOSE: Dumping GBM flags DEBUG: Found flag GBM_BO_USAGE_CAMERA_WRITE_QTI DEBUG: Found flag GBM_BO_USAGE_HW_COMPOSER_QTI DEBUG: Allocated BO with width=224 height=1557 stride=336 aligned_w=336 aligned_h=1557 size=524288 flags=0x20900 format=GBM_FORMAT_RAW12 DEBUG: Converted gralloc flags 0x20900 to GBM flags 0x1400000 VERBOSE: Dumping GBM flags DEBUG: Found flag GBM_BO_USAGE_CAMERA_WRITE_QTI DEBUG: Found flag GBM_BO_USAGE_HW_COMPOSER_QTI DEBUG: Allocated BO with width=224 height=1557 stride=336 aligned_w=336 aligned_h=1557 size=524288 flags=0x20900 format=GBM_FORMAT_RAW12 DEBUG: Converted gralloc flags 0x20900 to GBM flags 0x1400000 VERBOSE: Dumping GBM flags DEBUG: Found flag GBM_BO_USAGE_CAMERA_WRITE_QTI DEBUG: Found flag GBM_BO_USAGE_HW_COMPOSER_QTI DEBUG: Allocated BO with width=224 
height=1557 stride=336 aligned_w=336 aligned_h=1557 size=524288 flags=0x20900 format=GBM_FORMAT_RAW12 DEBUG: Converted gralloc flags 0x20900 to GBM flags 0x1400000 VERBOSE: Dumping GBM flags DEBUG: Found flag GBM_BO_USAGE_CAMERA_WRITE_QTI DEBUG: Found flag GBM_BO_USAGE_HW_COMPOSER_QTI DEBUG: Allocated BO with width=224 height=1557 stride=336 aligned_w=336 aligned_h=1557 size=524288 flags=0x20900 format=GBM_FORMAT_RAW12 DEBUG: Converted gralloc flags 0x20900 to GBM flags 0x1400000 VERBOSE: Dumping GBM flags DEBUG: Found flag GBM_BO_USAGE_CAMERA_WRITE_QTI DEBUG: Found flag GBM_BO_USAGE_HW_COMPOSER_QTI DEBUG: Allocated BO with width=224 height=1557 stride=336 aligned_w=336 aligned_h=1557 size=524288 flags=0x20900 format=GBM_FORMAT_RAW12 DEBUG: Converted gralloc flags 0x20900 to GBM flags 0x1400000 VERBOSE: Dumping GBM flags DEBUG: Found flag GBM_BO_USAGE_CAMERA_WRITE_QTI DEBUG: Found flag GBM_BO_USAGE_HW_COMPOSER_QTI DEBUG: Allocated BO with width=224 height=1557 stride=336 aligned_w=336 aligned_h=1557 size=524288 flags=0x20900 format=GBM_FORMAT_RAW12 DEBUG: Converted gralloc flags 0x20900 to GBM flags 0x1400000 VERBOSE: Dumping GBM flags DEBUG: Found flag GBM_BO_USAGE_CAMERA_WRITE_QTI DEBUG: Found flag GBM_BO_USAGE_HW_COMPOSER_QTI DEBUG: Allocated BO with width=224 height=1557 stride=336 aligned_w=336 aligned_h=1557 size=524288 flags=0x20900 format=GBM_FORMAT_RAW12 DEBUG: Converted gralloc flags 0x20900 to GBM flags 0x1400000 VERBOSE: Dumping GBM flags DEBUG: Found flag GBM_BO_USAGE_CAMERA_WRITE_QTI DEBUG: Found flag GBM_BO_USAGE_HW_COMPOSER_QTI DEBUG: Allocated BO with width=224 height=1557 stride=336 aligned_w=336 aligned_h=1557 size=524288 flags=0x20900 format=GBM_FORMAT_RAW12 DEBUG: Converted gralloc flags 0x20900 to GBM flags 0x1400000 VERBOSE: Dumping GBM flags DEBUG: Found flag GBM_BO_USAGE_CAMERA_WRITE_QTI DEBUG: Found flag GBM_BO_USAGE_HW_COMPOSER_QTI DEBUG: Allocated BO with width=224 height=1557 stride=336 aligned_w=336 aligned_h=1557 size=524288 
flags=0x20900 format=GBM_FORMAT_RAW12 DEBUG: Converted gralloc flags 0x20900 to GBM flags 0x1400000 VERBOSE: Dumping GBM flags DEBUG: Found flag GBM_BO_USAGE_CAMERA_WRITE_QTI DEBUG: Found flag GBM_BO_USAGE_HW_COMPOSER_QTI DEBUG: Allocated BO with width=224 height=1557 stride=336 aligned_w=336 aligned_h=1557 size=524288 flags=0x20900 format=GBM_FORMAT_RAW12 DEBUG: Converted gralloc flags 0x20900 to GBM flags 0x1400000 VERBOSE: Dumping GBM flags DEBUG: Found flag GBM_BO_USAGE_CAMERA_WRITE_QTI DEBUG: Found flag GBM_BO_USAGE_HW_COMPOSER_QTI DEBUG: Allocated BO with width=224 height=1557 stride=336 aligned_w=336 aligned_h=1557 size=524288 flags=0x20900 format=GBM_FORMAT_RAW12 DEBUG: Converted gralloc flags 0x20900 to GBM flags 0x1400000 VERBOSE: Dumping GBM flags DEBUG: Found flag GBM_BO_USAGE_CAMERA_WRITE_QTI DEBUG: Found flag GBM_BO_USAGE_HW_COMPOSER_QTI DEBUG: Allocated BO with width=224 height=1557 stride=336 aligned_w=336 aligned_h=1557 size=524288 flags=0x20900 format=GBM_FORMAT_RAW12 DEBUG: Converted gralloc flags 0x20900 to GBM flags 0x1400000 VERBOSE: Dumping GBM flags DEBUG: Found flag GBM_BO_USAGE_CAMERA_WRITE_QTI DEBUG: Found flag GBM_BO_USAGE_HW_COMPOSER_QTI DEBUG: Allocated BO with width=224 height=1557 stride=336 aligned_w=336 aligned_h=1557 size=524288 flags=0x20900 format=GBM_FORMAT_RAW12 DEBUG: Converted gralloc flags 0x20900 to GBM flags 0x1400000 VERBOSE: Dumping GBM flags DEBUG: Found flag GBM_BO_USAGE_CAMERA_WRITE_QTI DEBUG: Found flag GBM_BO_USAGE_HW_COMPOSER_QTI DEBUG: Allocated BO with width=224 height=1557 stride=336 aligned_w=336 aligned_h=1557 size=524288 flags=0x20900 format=GBM_FORMAT_RAW12 DEBUG: Converted gralloc flags 0x20900 to GBM flags 0x1400000 VERBOSE: Dumping GBM flags DEBUG: Found flag GBM_BO_USAGE_CAMERA_WRITE_QTI DEBUG: Found flag GBM_BO_USAGE_HW_COMPOSER_QTI DEBUG: Allocated BO with width=224 height=1557 stride=336 aligned_w=336 aligned_h=1557 size=524288 flags=0x20900 format=GBM_FORMAT_RAW12 DEBUG: Successfully set up pipeline 
for stream: PREVIEW VERBOSE: Entered thread: cam0-request(tid: 2856) DEBUG: Started Camera: tof ------ voxl-camera-server: Started 1 of 2 cameras ------ voxl-camera-server: Camera server is now running VERBOSE: Entered thread: cam0-result(tid: 2857) VERBOSE: Found Royale module config: imagerType 2, illuminationConfig.dutyCycle: 4 temp_sensor_type: 5 VERBOSE: Found Royale module config: maxImgW 224 maxImgH 172 frameTxMode 1 camName X1.1_850nm_2W VERBOSE: Found Royale module config: tempLimitSoft 60.000000 tempLimitHard 65.000000 autoExpoSupported yes VERBOSE: Found Royale usecase: MODE_9_5FPS - phases: 9 fps: 5 VERBOSE: Found Royale usecase: MODE_9_5FPS - exposure group[0] = gray VERBOSE: Found Royale usecase: MODE_9_5FPS - exposure group[1] = mod1 VERBOSE: Found Royale usecase: MODE_9_5FPS - exposure group[2] = mod2 VERBOSE: Found Royale usecase: MODE_9_5FPS - exp_limit[0] = (8 2200) VERBOSE: Found Royale usecase: MODE_9_5FPS - exp_limit[1] = (8 2200) VERBOSE: Found Royale usecase: MODE_9_5FPS - exp_limit[2] = (8 2200) VERBOSE: Found Royale usecase: MODE_9_5FPS - exp_time[0] = 200 VERBOSE: Found Royale usecase: MODE_9_5FPS - exp_time[1] = 2200 VERBOSE: Found Royale usecase: MODE_9_5FPS - exp_time[2] = 2200 VERBOSE: Found Royale usecase: MODE_9_10FPS - phases: 9 fps: 10 VERBOSE: Found Royale usecase: MODE_9_10FPS - exposure group[0] = gray VERBOSE: Found Royale usecase: MODE_9_10FPS - exposure group[1] = mod1 VERBOSE: Found Royale usecase: MODE_9_10FPS - exposure group[2] = mod2 VERBOSE: Found Royale usecase: MODE_9_10FPS - exp_limit[0] = (8 1100) VERBOSE: Found Royale usecase: MODE_9_10FPS - exp_limit[1] = (8 1100) VERBOSE: Found Royale usecase: MODE_9_10FPS - exp_limit[2] = (8 1100) VERBOSE: Found Royale usecase: MODE_9_10FPS - exp_time[0] = 200 VERBOSE: Found Royale usecase: MODE_9_10FPS - exp_time[1] = 1100 VERBOSE: Found Royale usecase: MODE_9_10FPS - exp_time[2] = 1100 VERBOSE: Found Royale usecase: MODE_9_15FPS - phases: 9 fps: 15 VERBOSE: Found Royale 
usecase: MODE_9_15FPS - exposure group[0] = gray VERBOSE: Found Royale usecase: MODE_9_15FPS - exposure group[1] = mod1 VERBOSE: Found Royale usecase: MODE_9_15FPS - exposure group[2] = mod2 VERBOSE: Found Royale usecase: MODE_9_15FPS - exp_limit[0] = (8 750) VERBOSE: Found Royale usecase: MODE_9_15FPS - exp_limit[1] = (8 750) VERBOSE: Found Royale usecase: MODE_9_15FPS - exp_limit[2] = (8 750) VERBOSE: Found Royale usecase: MODE_9_15FPS - exp_time[0] = 200 VERBOSE: Found Royale usecase: MODE_9_15FPS - exp_time[1] = 750 VERBOSE: Found Royale usecase: MODE_9_15FPS - exp_time[2] = 750 VERBOSE: Found Royale usecase: MODE_9_20FPS - phases: 9 fps: 20 VERBOSE: Found Royale usecase: MODE_9_20FPS - exposure group[0] = gray VERBOSE: Found Royale usecase: MODE_9_20FPS - exposure group[1] = mod1 VERBOSE: Found Royale usecase: MODE_9_20FPS - exposure group[2] = mod2 VERBOSE: Found Royale usecase: MODE_9_20FPS - exp_limit[0] = (8 560) VERBOSE: Found Royale usecase: MODE_9_20FPS - exp_limit[1] = (8 560) VERBOSE: Found Royale usecase: MODE_9_20FPS - exp_limit[2] = (8 560) VERBOSE: Found Royale usecase: MODE_9_20FPS - exp_time[0] = 200 VERBOSE: Found Royale usecase: MODE_9_20FPS - exp_time[1] = 560 VERBOSE: Found Royale usecase: MODE_9_20FPS - exp_time[2] = 560 VERBOSE: Found Royale usecase: MODE_9_30FPS - phases: 9 fps: 30 VERBOSE: Found Royale usecase: MODE_9_30FPS - exposure group[0] = gray VERBOSE: Found Royale usecase: MODE_9_30FPS - exposure group[1] = mod1 VERBOSE: Found Royale usecase: MODE_9_30FPS - exposure group[2] = mod2 VERBOSE: Found Royale usecase: MODE_9_30FPS - exp_limit[0] = (8 370) VERBOSE: Found Royale usecase: MODE_9_30FPS - exp_limit[1] = (8 370) VERBOSE: Found Royale usecase: MODE_9_30FPS - exp_limit[2] = (8 370) VERBOSE: Found Royale usecase: MODE_9_30FPS - exp_time[0] = 200 VERBOSE: Found Royale usecase: MODE_9_30FPS - exp_time[1] = 370 VERBOSE: Found Royale usecase: MODE_9_30FPS - exp_time[2] = 370 VERBOSE: Found Royale usecase: MODE_5_15FPS - phases: 5 
fps: 15 VERBOSE: Found Royale usecase: MODE_5_15FPS - exposure group[0] = gray VERBOSE: Found Royale usecase: MODE_5_15FPS - exposure group[1] = mod VERBOSE: Found Royale usecase: MODE_5_15FPS - exp_limit[0] = (8 1500) VERBOSE: Found Royale usecase: MODE_5_15FPS - exp_limit[1] = (8 1500) VERBOSE: Found Royale usecase: MODE_5_15FPS - exp_time[0] = 200 VERBOSE: Found Royale usecase: MODE_5_15FPS - exp_time[1] = 1500 VERBOSE: Found Royale usecase: MODE_5_30FPS - phases: 5 fps: 30 VERBOSE: Found Royale usecase: MODE_5_30FPS - exposure group[0] = gray VERBOSE: Found Royale usecase: MODE_5_30FPS - exposure group[1] = mod VERBOSE: Found Royale usecase: MODE_5_30FPS - exp_limit[0] = (8 750) VERBOSE: Found Royale usecase: MODE_5_30FPS - exp_limit[1] = (8 750) VERBOSE: Found Royale usecase: MODE_5_30FPS - exp_time[0] = 200 VERBOSE: Found Royale usecase: MODE_5_30FPS - exp_time[1] = 750 VERBOSE: Found Royale usecase: MODE_5_45FPS - phases: 5 fps: 45 VERBOSE: Found Royale usecase: MODE_5_45FPS - exposure group[0] = gray VERBOSE: Found Royale usecase: MODE_5_45FPS - exposure group[1] = mod VERBOSE: Found Royale usecase: MODE_5_45FPS - exp_limit[0] = (8 500) VERBOSE: Found Royale usecase: MODE_5_45FPS - exp_limit[1] = (8 500) VERBOSE: Found Royale usecase: MODE_5_45FPS - exp_time[0] = 200 VERBOSE: Found Royale usecase: MODE_5_45FPS - exp_time[1] = 500 VERBOSE: Found Royale usecase: MODE_5_60FPS - phases: 5 fps: 60 VERBOSE: Found Royale usecase: MODE_5_60FPS - exposure group[0] = gray VERBOSE: Found Royale usecase: MODE_5_60FPS - exposure group[1] = mod VERBOSE: Found Royale usecase: MODE_5_60FPS - exp_limit[0] = (8 370) VERBOSE: Found Royale usecase: MODE_5_60FPS - exp_limit[1] = (8 370) VERBOSE: Found Royale usecase: MODE_5_60FPS - exp_time[0] = 200 VERBOSE: Found Royale usecase: MODE_5_60FPS - exp_time[1] = 370 VERBOSE: sid: 0x00AC, addr: 0x0000, data: 0x0050 VERBOSE: sid: 0x00AC, addr: 0x0001, data: 0x004D VERBOSE: sid: 0x00AC, addr: 0x0002, data: 0x0044 VERBOSE: sid: 0x00AC, 
addr: 0x0003, data: 0x0054
    VERBOSE: sid: 0x00AC, addr: 0x0004, data: 0x0045
    VERBOSE: sid: 0x00AC, addr: 0x0005, data: 0x0043
    VERBOSE: sid: 0x00AC, addr: 0x0006, data: 0x0007
    VERBOSE: sid: 0x00AC, addr: 0x0007, data: 0x0000

I don't know if we can recover this camera. It would be really helpful if someone could guide me on how to get some information about this camera. Also, if this holder comes off again in the future, what should be used to fix it? My observation was that, because the lens holder was coming off, our image became completely blurry even after focusing, and the image quality also dropped a lot. What are better ways to fix this kind of lens? Also, is there a better way to know whether this camera has gone completely bad or can be recovered?