TOF and High Res Intrinsics

-

@Caio-Licínio-Vasconcelos-Pantarotto You also need to permute the coordinates with the order (1,0,2) as they come from the sensor, so that they are laid out the way OpenCV expects.
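A minimal sketch of that permutation, assuming the point cloud has already been read into an N x 3 NumPy array. The function name `permute_axes` and the sample values are made up for illustration, and whether any axis also needs a sign flip is not stated in the post, so none is applied here:

```python
import numpy as np

def permute_axes(points, order=(1, 0, 2)):
    """Reorder the columns of an N x 3 point array.

    With order=(1, 0, 2) the first two axes are swapped and the third
    is left where it is.
    """
    return points[:, list(order)]

# Made-up sample points, only to show the effect of the reordering.
points = np.array([[1.0, 2.0, 3.0],
                   [4.0, 5.0, 6.0]])
permuted = permute_axes(points)
```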

-

@Caio-Licínio-Vasconcelos-Pantarotto Thank you for replying. When you say you translated the point cloud to the RGB frame, where did you use the RGB intrinsics?

As far as I understand, you only need the extrinsics of the ToF with respect to the RGB camera, and then you can translate/rotate the point cloud to the RGB's POV.

My question is a little more specific than the representation you showed above. I want to get the ToF intrinsic matrix. Since ModalAI is already constructing a depth image in the topic /tof_depth, they must have this matrix buried in their code somewhere. (I did calibrate the intrinsics myself, though.)

Once I have the intrinsics and the depth image, I can use the depth image itself for 3D mapping and other tasks.
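For reference, this is roughly the back-projection I have in mind, as a sketch under the pinhole model with no lens distortion. The intrinsics `fx`, `fy`, `cx`, `cy` and the tiny depth image below are placeholders, not the real ToF calibration, and I am assuming depth is in metres with 0 marking invalid pixels:

```python
import numpy as np

def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image into an N x 3 array of camera-frame points.

    Pixels with depth <= 0 are treated as invalid and skipped.
    """
    h, w = depth.shape
    # u is the column index, v is the row index of every pixel.
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    valid = depth > 0
    z = depth[valid]
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    return np.stack([x, y, z], axis=1)

# Placeholder intrinsics and a 3 x 4 depth image with one valid pixel.
fx = fy = 100.0
cx, cy = 2.0, 1.5
depth = np.zeros((3, 4), dtype=np.float32)
depth[1, 2] = 2.0  # row 1, column 2, two metres away
points = depth_to_points(depth, fx, fy, cx, cy)
```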

Another way to see it: running an object detector on an image of one resolution and matching the detections against a sparse point cloud to get 3D coordinates is inaccurate. If I can get depth and RGB images of similar resolution, the accuracy improves.

-

What do you mean by "running an object detector on a different resolution image and matching it with a sparse point cloud for getting 3d coordinates is inaccurate"? You want to run object detection on the RGB image, right? Why do you say that using the sparse point cloud is inaccurate? Sorry, I don't know much about this.

-

@Caio-Licínio-Vasconcelos-Pantarotto Does the point cloud have lower resolution than the depth view given by the TOF? I haven't really explored it.

-

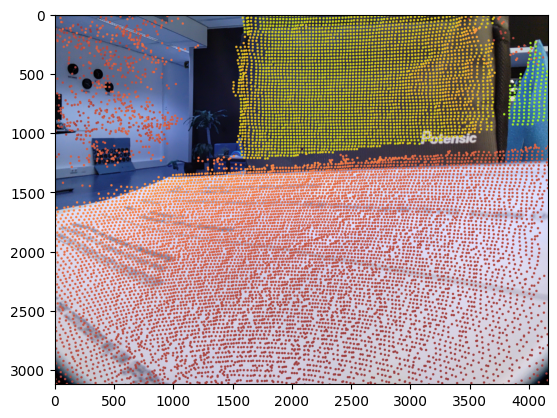

@Darshit-Desai If you have the 3D point cloud and you need to project it onto a camera, you need both the intrinsics and the extrinsics of that camera. That's where I used the intrinsics of the RGB camera. In the image I sent you, each point is an XYZ point projected onto the RGB image, so with that you have the location in 3D space of the objects the camera is seeing.
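A rough sketch of that projection, assuming a pinhole model with no lens distortion. `R` and `t` stand for the ToF-to-RGB extrinsics and `K` for the RGB intrinsic matrix. The function name and all the numbers below are placeholders for illustration, not values from the actual cameras:

```python
import numpy as np

def project_to_rgb(points_tof, R, t, K, width, height):
    """Project N x 3 ToF-frame points into the RGB image.

    Returns (pixels, points_rgb): the pixel coordinates and the matching
    RGB-frame XYZ for the points that land inside the image.
    """
    # Extrinsics: move the points from the ToF frame to the RGB frame.
    points_rgb = points_tof @ R.T + t
    # Drop points behind the camera.
    points_rgb = points_rgb[points_rgb[:, 2] > 0]
    # Intrinsics: perspective projection onto the image plane.
    uvw = points_rgb @ K.T
    pixels = uvw[:, :2] / uvw[:, 2:3]
    inside = ((pixels[:, 0] >= 0) & (pixels[:, 0] < width) &
              (pixels[:, 1] >= 0) & (pixels[:, 1] < height))
    return pixels[inside], points_rgb[inside]

# Placeholder calibration values.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.array([0.1, 0.0, 0.0])
points_tof = np.array([[0.0, 0.0, 2.0],    # lands inside the image
                       [0.0, 0.0, -1.0],   # behind the camera
                       [10.0, 0.0, 1.0]])  # far outside the image
pixels, points_rgb = project_to_rgb(points_tof, R, t, K, 640, 480)
```

Each row of `pixels` pairs with the same row of `points_rgb`, which is what gives you the 3D location behind a pixel. If the lens has noticeable distortion, OpenCV's `cv2.projectPoints` takes distortion coefficients as well.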

-

@Caio-Licínio-Vasconcelos-Pantarotto Thank you, I got it now. So you use the extrinsics between the ToF and RGB to transform the point cloud from the ToF's POV to the RGB's POV, and then you trace rays from those 3D points back to the image plane of the RGB camera using the RGB intrinsics, to find the corresponding pixel in the RGB image.

I was actually asking: what if you want to do the reverse? You have a region of interest containing pixel coordinates in the RGB image (from a deep learning detector like YOLO) and want to find the 3D position of those RGB pixels. In the picture you shared earlier (below), if a region of interest falls on an area of the RGB image where there are no ToF points from the point cloud, what would you do?

@Caio-Licínio-Vasconcelos-Pantarotto said in TOF and High Res Intrinsics:

-

@Darshit-Desai Then I believe you cannot do anything. The TOF is limited to certain surfaces and textures.

-

Can the @Moderator answer this question?

-

@Darshit-Desai The TOF uses an IR VCSEL, so if the surface is IR absorbent, the TOF sensor will not receive a reflection back to measure.

-

@Moderator That's fair, but can we have an aligned depth image? Even where the ToF doesn't have valid depth, an aligned RGB+Depth image would go a long way.
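To make the request concrete, here is a sketch of what I mean by an aligned depth image: ToF points are projected into the RGB image grid, and pixels with no ToF return stay at 0. It assumes a pinhole model with no distortion. `R`, `t`, `K` and all the numbers are placeholders, not real calibration values, and the function is my own illustration rather than anything from the ModalAI code:

```python
import numpy as np

def aligned_depth(points_tof, R, t, K, width, height):
    """Build a depth image in the RGB camera's pixel grid from ToF points.

    Pixels that receive no point are left at 0 (invalid).
    """
    pts = points_tof @ R.T + t          # ToF frame -> RGB frame
    pts = pts[pts[:, 2] > 0]            # keep points in front of the camera
    uvw = pts @ K.T
    u = np.round(uvw[:, 0] / uvw[:, 2]).astype(int)
    v = np.round(uvw[:, 1] / uvw[:, 2]).astype(int)
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    u, v, z = u[inside], v[inside], pts[inside, 2]
    depth = np.full((height, width), np.inf)
    # When several points fall on one pixel, keep the nearest one.
    np.minimum.at(depth, (v, u), z)
    depth[np.isinf(depth)] = 0.0
    return depth

# Placeholder calibration and a 4 x 3 "RGB" grid to keep the example small.
K = np.array([[10.0, 0.0, 2.0],
              [0.0, 10.0, 1.0],
              [0.0, 0.0, 1.0]])
R, t = np.eye(3), np.zeros(3)
points_tof = np.array([[0.0, 0.0, 2.0],   # pixel (u=2, v=1), 2 m
                       [0.0, 0.0, 1.0],   # same pixel, nearer, wins
                       [0.1, 0.0, 1.0]])  # pixel (u=3, v=1), 1 m
depth = aligned_depth(points_tof, R, t, K, width=4, height=3)
```

Since the ToF has far fewer pixels than the high-res camera, the result would be sparse at RGB resolution, so some hole filling or interpolation would still be needed on top of this.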