@Eric-Katzfey @Alex-Kushleyev that's not exactly what I was looking for. I already implemented it using the ffmpeg library. Basically, I wanted to use the voxl-send-command utility to send start/stop recording commands while keeping the ability to save snapshots. I didn't like the idea of using GStreamer pipes for this, so I chose ffmpeg. I think my solution is good enough for now: I can start/stop recordings, take snapshots, and embed XMP metadata into the snapshots. I might come back to the OMX library if I decide I need hardware encoding.

Posts made by AndriiHlyvko

-

RE: UVC camera video recording and encoding implementation (posted in VOXL SDK)

-

UVC camera video recording and encoding implementation (posted in VOXL SDK)

Hi, I am currently trying to implement video recording for a UVC camera. My initial approach was to use OMX in voxl-uvc-server to encode the raw NV12 frames to H.264/H.265 and then pass them to a modal pipe, so that voxl-mavcam-manager could read from the encoded pipe and save the frames to a file. I looked at how voxl-camera-server uses OMX and the HAL3 library for encoding, but it seems pretty complex. As an alternative, I was also considering ffmpeg for the encoding, since it's a simpler, higher-level library, but I am worried that ffmpeg won't be able to use hardware acceleration. Could any of the developers give me some pointers or point me to documentation that would help with the implementation?

Thanks.

-

RE: Sentinel segmentation fault after running voxl-uvc-server -v [camera id] -d (posted in Sentinel)

@Alex-Kushleyev sure. Should I make a merge request for it and tag you in it?

-

RE: Sentinel segmentation fault after running voxl-uvc-server -v [camera id] -d (posted in Sentinel)

@Alex-Kushleyev figured it out. It was a bug I introduced: I broke the mapping between the UVC_FRAME_FORMAT enum and the voxl IMAGE_FORMAT enum in my fork of the uvc-server. UVC streaming is now working well with the Boson+.
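A mapping like this is easiest to keep correct as an explicit `switch` over named values, rather than a cast or an array indexed by the enum's integer value. That matters if the numbering of libuvc's `uvc_frame_format` differs between the ModalAI fork and upstream master. Below is a minimal sketch under that assumption. Both enums are stand-ins with made-up members and values, not the real libuvc or libmodal-pipe definitions:

```cpp
// Stand-in for libuvc's enum uvc_frame_format (illustrative subset only;
// the real enum lives in libuvc/libuvc.h and its numbering may differ).
enum class UvcFrameFormat { Unknown, Yuyv, Uyvy, Nv12, Mjpeg, Gray8, Gray16 };

// Stand-in for the voxl IMAGE_FORMAT_* constants (hypothetical values).
enum class VoxlImageFormat : int {
    Unsupported = -1, Raw8, Nv12, Raw16, Jpg, Yuv422, Yuv422Uyvy
};

// Map by name, never by numeric value, so reordering either enum
// cannot silently produce the wrong output format.
VoxlImageFormat ToVoxlFormat(UvcFrameFormat f) {
    switch (f) {
        case UvcFrameFormat::Yuyv:   return VoxlImageFormat::Yuv422;
        case UvcFrameFormat::Uyvy:   return VoxlImageFormat::Yuv422Uyvy;
        case UvcFrameFormat::Nv12:   return VoxlImageFormat::Nv12;
        case UvcFrameFormat::Mjpeg:  return VoxlImageFormat::Jpg;
        case UvcFrameFormat::Gray8:  return VoxlImageFormat::Raw8;
        case UvcFrameFormat::Gray16: return VoxlImageFormat::Raw16;
        default:                     return VoxlImageFormat::Unsupported;
    }
}
```

Any format without an explicit case falls through to `Unsupported`, so an unexpected camera format is rejected rather than silently mislabeled.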

-

RE: Sentinel segmentation fault after running voxl-uvc-server -v [camera id] -d (posted in Sentinel)

@Alex-Kushleyev just tested with the latest master. The segfault is gone and it seems to be working fine. One new problem after moving to libuvc master is that the video stream from the Boson+ looks wrong (the video is green). It's only wrong for the Boson+ and not the Boson. I'll keep debugging the issue. I was thinking maybe the Boson+ sends incomplete frames in the UVC callback, but I'm not sure.
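One way to test the incomplete-frame theory is to compare each frame's byte count in the callback against the size an NV12 frame should have. That is a full-resolution Y plane plus a half-size interleaved UV plane, i.e. width x height x 3/2 bytes. For 640x512 that is 491520 bytes, which matches the `dwMaxVideoFrameSize` libuvc negotiated in the gdb log in the segfault thread. A related symptom check: chroma bytes left at 0 instead of the neutral 128 decode as green. This is a minimal sketch, and the function names are hypothetical:

```cpp
#include <cstddef>
#include <cstdint>

// Expected byte count of one NV12 frame: a full-resolution Y plane followed
// by an interleaved UV plane subsampled 2x in each direction.
constexpr size_t Nv12FrameBytes(size_t width, size_t height) {
    return width * height + 2 * ((width / 2) * (height / 2));
}

// True if the callback delivered exactly one full NV12 frame.
bool IsCompleteNv12Frame(size_t data_bytes, size_t width, size_t height) {
    return data_bytes == Nv12FrameBytes(width, height);
}

// True if every chroma byte is zero. Neutral chroma is 128; chroma left
// at 0 (e.g. part of a buffer that was never filled) renders green.
bool Nv12ChromaAllZero(const uint8_t* data, size_t width, size_t height) {
    const uint8_t* uv = data + width * height;
    const size_t uv_bytes = Nv12FrameBytes(width, height) - width * height;
    for (size_t i = 0; i < uv_bytes; ++i) {
        if (uv[i] != 0) return false;
    }
    return true;
}
```

In a libuvc frame callback, the check could be called as `IsCompleteNv12Frame(frame->data_bytes, frame->width, frame->height)` on the `uvc_frame_t` it receives.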

-

RE: Sentinel segmentation fault after running voxl-uvc-server -v [camera id] -d (posted in Sentinel)

Thanks @Alex-Kushleyev. Do you know if there are any differences between using the voxl fork of libuvc and compiling libuvc master? I was thinking of just building libuvc master, but I am not sure whether ModalAI made any changes to it.

-

RE: Sentinel segmentation fault after running voxl-uvc-server -v [camera id] -d (posted in Sentinel)

@Róbert-Dévényi @Eric-Katzfey @Alex-Kushleyev I am getting the same segfault with a Boson+ camera. I determined that the segfault occurs when the uvc_start_streaming function is called (I am running a modified version of voxl-uvc-server). Here is the stack trace from gdb. If I figure out the cause of the problem, I will post it here.

(gdb) run
Starting program: /usr/bin/voxl-uvc-server
[Thread debugging using libthread_db enabled]
Using host libthread_db library "/lib/aarch64-linux-gnu/libthread_db.so.1".
VOXL UVC Server loading config file
VOXL UVC Server parsed argument
voxl-uvc-server starting
Image resolution 640x512, 30 fps chosen
[New Thread 0x7ff7a7e1c0 (LWP 2189)]
UVC initialized
Device found
[New Thread 0x7ff727d1c0 (LWP 2190)]
Device opened
Found desired frame format: 1
uvc_get_stream_ctrl_format_size succeeded for format NV12
bmHint: 0001
bFormatIndex: 2
bFrameIndex: 1
dwFrameInterval: 333333
wKeyFrameRate: 1
wPFrameRate: 0
wCompQuality: 0
wCompWindowSize: 0
wDelay: 0
dwMaxVideoFrameSize: 491520
dwMaxPayloadTransferSize: 983044
bInterfaceNumber: 1
[New Thread 0x7ff6a7c1c0 (LWP 2191)]
[New Thread 0x7ff627b1c0 (LWP 2192)]
[New Thread 0x7ff5a7a1c0 (LWP 2193)]
Mavlink Onboard server Connected
[New Thread 0x7fe70ef1c0 (LWP 2194)]
Streaming starting
[New Thread 0x7feffff1c0 (LWP 2195)]

Thread 3 "voxl-uvc-server" received signal SIGSEGV, Segmentation fault.
[Switching to Thread 0x7ff727d1c0 (LWP 2190)]
__memcpy_generic () at ../sysdeps/aarch64/multiarch/../memcpy.S:185
185 ../sysdeps/aarch64/multiarch/../memcpy.S: No such file or directory.
(gdb) bt
#0 __memcpy_generic () at ../sysdeps/aarch64/multiarch/../memcpy.S:185
#1 0x0000007ff7ed32a0 in _uvc_process_payload () from /usr/lib/libuvc.so.0
Backtrace stopped: previous frame identical to this frame (corrupt stack?)

-

RE: VOXL-MAVCAM-MANAGER crashes on MAV_CMD_VIDEO_START_CAPTURE mavlink command when trying to record video (posted in Software Development)

@Eric-Katzfey I figured out the problem. I had changed the mavlink pipe to mavlink_onboard while keeping the channel number at 1. Setting the channel number to 2 seems to fix the problem. I haven't looked into the implementation of the modal-pipe architecture, but I thought the channel number was only used by the pipe client (kind of like an open file descriptor number?). Either way, it looks like using channel 1 breaks the mavlink pipe somehow.

-

VOXL-MAVCAM-MANAGER crashes on MAV_CMD_VIDEO_START_CAPTURE mavlink command when trying to record video (posted in Software Development)

Hi, I am trying to use voxl-mavcam-manager to record videos on VOXL by sending it the mavlink MAV_CMD_VIDEO_START_CAPTURE command. I had already successfully sent MAV_CMD_IMAGE_START_CAPTURE commands and was able to capture snapshots. However, when I send MAV_CMD_VIDEO_START_CAPTURE, voxl-mavcam-manager crashes with a segfault. Has anyone experienced this before?

Here is the debug output from voxl-mavcam-manager:

Connected to video pipe hires_large_encoded ERROR channel 0 helper tried to read into NULL buffer ERROR channel 0 helper tried to read into NULL buffer ERROR channel 0 helper tried to read into NULL buffer starting new video: /data/video/video_2024-05-14_19:33:14.h265 ERROR validating mavlink_message_t data received through pipe: read partial packet read 85 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: 3 of 3 packets failed last magic number received was 128, expected MAVLINK_STX=253 ERROR validating mavlink_message_t data received through pipe: read partial packet read 1896 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 1734 bytes, but it should be a multiple of 44 ERROR: invalid metadata, magic number=-805240319, expected 1448040524 most likely client fell behind and pipe overflowed ERROR validating mavlink_message_t data received through pipe: read partial packet read 1682 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 1494 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet ........ 
read 832 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 731 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 705 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 682 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 656 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 681 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 650 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 687 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 675 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 642 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 889 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 813 bytes, but it should be a multiple of 44 lMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array 
is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsg array is nullMsgERROR validating mavlink_message_t data received through pipe: read partial packet read 781 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 774 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 769 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 805 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 740 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 679 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 615 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 634 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 667 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 561 bytes, but it should be a multiple of 44 ERROR validating mavlink_message_t data received through pipe: read partial packet read 635 bytes, but it should be a multiple of 44 
ERROR validating mavlink_message_t data received through pipe: read partial packet read 4570 bytes, but it should be a multiple of 44 ERROR: invalid metadata, magic number=-1912241965, expected 1448040524 most likely client fell behind and pipe overflowed ERROR validating mavlink_message_t data received through pipe: read partial packet read 192 bytes, but it should be a multiple of 44 Segmentation fault: Fault thread: voxl-mavcam-man(tid: 2626) Fault address: 0x4fd00000399 Address not mapped. Segmentation fault -

voxl-camera-server default exposure results in black snapshots + snapshot filename aliasing (posted in VOXL SDK)

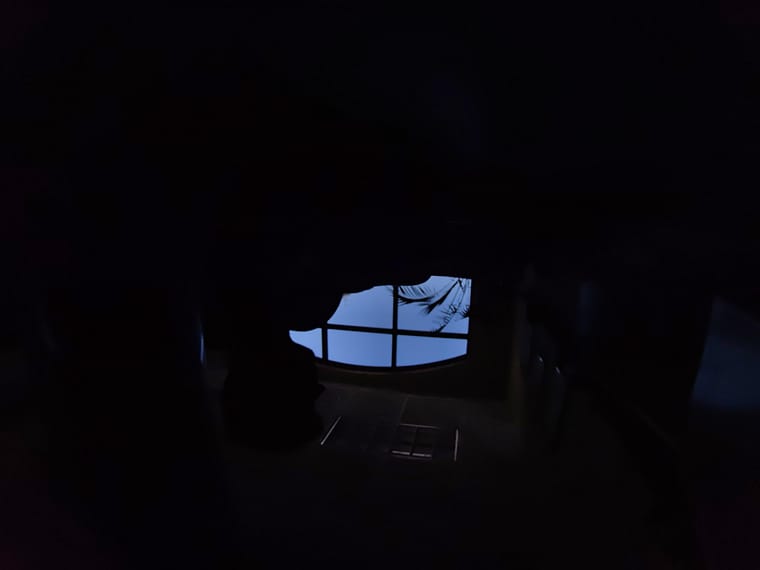

Hi dev-team, I found a few minor bugs in voxl-camera-server related to snapshot capture. The first bug: if you use the default exposure setting (AE_ISP), have no video streams open, and capture camera snapshots, the images come out very underexposed. Here is an example taken with an IMX412 camera using the default settings, in our office in the middle of the day.

The fix I found is to explicitly tell the Camera3 HAL not to use auto exposure by setting the default ae_mode to AE_LME_MSV.

The other bug is that you cannot capture snapshots faster than 1 Hz. The snapshot filenames are timestamps with 1 s resolution, so when multiple snapshot commands arrive within the same second, they get the same filename and overwrite each other on disk. The solution is to add milliseconds to the timestamp in the filename.
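Below is a minimal sketch of a millisecond-resolution snapshot filename built with `std::chrono`. The directory, the `snapshot_` prefix, and the exact timestamp layout are illustrative assumptions, not the format voxl-camera-server actually uses. The timestamp is formatted in UTC with POSIX `gmtime_r`:

```cpp
#include <chrono>
#include <cstdio>
#include <ctime>
#include <string>

// Build a snapshot filename with millisecond resolution, e.g.
// "<dir>/snapshot_2024-05-14_19-33-14-123.jpg" (hypothetical naming scheme).
std::string SnapshotFilename(const std::string& dir,
                             std::chrono::system_clock::time_point now) {
    using namespace std::chrono;
    const auto since_epoch = now.time_since_epoch();
    const auto secs = duration_cast<seconds>(since_epoch);
    const auto ms = duration_cast<milliseconds>(since_epoch - secs);  // 0..999

    const std::time_t tt = static_cast<std::time_t>(secs.count());
    std::tm tm_utc{};
    gmtime_r(&tt, &tm_utc);  // POSIX, thread-safe

    char stamp[32];
    std::strftime(stamp, sizeof(stamp), "%Y-%m-%d_%H-%M-%S", &tm_utc);
    char name[64];
    std::snprintf(name, sizeof(name), "snapshot_%s-%03lld.jpg", stamp,
                  static_cast<long long>(ms.count()));
    return dir + "/" + name;
}
```

Usage would be `SnapshotFilename("/data/snapshots", std::chrono::system_clock::now())`. Two requests in the same second now differ in the last three digits. Requests within the same millisecond could still collide, so a per-process counter suffix would be the next step if that rate ever matters.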

I made a merge request for this https://gitlab.com/voxl-public/voxl-sdk/services/voxl-camera-server/-/merge_requests/45

On a separate note, I would like to add a feature to voxl-camera-server that writes XMP metadata into the snapshot images. This would be helpful for embedding UAV state information, such as attitude, in the JPEG snapshots so they can later be used for mapping. For example, DJI uses XMP to save its attitude and gimbal information. Would the ModalAI team be interested in me bringing in this feature?
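Here is a minimal sketch of what writing XMP into a JPEG involves, following the layout in the XMP specification (Part 3). The XMP lives in an APP1 segment whose payload is the namespace string `http://ns.adobe.com/xap/1.0/`, then a NUL byte, then the XMP packet. The `uav` namespace and its `Roll`/`Pitch`/`Yaw` properties are hypothetical placeholders, and the function names are made up for illustration. The segment is placed after any existing APP0/APP1 segments so an Exif block stays first:

```cpp
#include <cstdint>
#include <cstdio>
#include <stdexcept>
#include <string>
#include <vector>

// Build a JPEG APP1 segment holding an XMP packet: FF E1, a 2-byte
// big-endian length that counts itself, the namespace with its NUL, the packet.
std::vector<uint8_t> MakeXmpApp1(const std::string& xmp_packet) {
    static const char kXmpNs[] = "http://ns.adobe.com/xap/1.0/";  // sizeof includes NUL
    const size_t length = 2 + sizeof(kXmpNs) + xmp_packet.size();
    if (length > 0xFFFF) throw std::length_error("XMP packet too large for one APP1 segment");
    std::vector<uint8_t> seg = {0xFF, 0xE1, static_cast<uint8_t>(length >> 8),
                                static_cast<uint8_t>(length & 0xFF)};
    seg.insert(seg.end(), kXmpNs, kXmpNs + sizeof(kXmpNs));
    seg.insert(seg.end(), xmp_packet.begin(), xmp_packet.end());
    return seg;
}

// Minimal XMP packet with attitude in a hypothetical "uav" namespace.
std::string MakeAttitudeXmp(double roll_deg, double pitch_deg, double yaw_deg) {
    char buf[512];
    std::snprintf(buf, sizeof(buf),
        "<x:xmpmeta xmlns:x=\"adobe:ns:meta/\">"
        "<rdf:RDF xmlns:rdf=\"http://www.w3.org/1999/02/22-rdf-syntax-ns#\">"
        "<rdf:Description rdf:about=\"\" xmlns:uav=\"http://example.com/uav/1.0/\""
        " uav:Roll=\"%.2f\" uav:Pitch=\"%.2f\" uav:Yaw=\"%.2f\"/>"
        "</rdf:RDF></x:xmpmeta>",
        roll_deg, pitch_deg, yaw_deg);
    return buf;
}

// Insert a segment after SOI and any leading APP0/APP1 segments.
std::vector<uint8_t> InsertAfterAppSegments(const std::vector<uint8_t>& jpeg,
                                            const std::vector<uint8_t>& segment) {
    if (jpeg.size() < 2 || jpeg[0] != 0xFF || jpeg[1] != 0xD8)
        throw std::invalid_argument("not a JPEG (missing SOI)");
    size_t pos = 2;
    while (pos + 4 <= jpeg.size() && jpeg[pos] == 0xFF &&
           (jpeg[pos + 1] == 0xE0 || jpeg[pos + 1] == 0xE1)) {
        const size_t seg_len = (size_t(jpeg[pos + 2]) << 8) | jpeg[pos + 3];
        if (seg_len < 2) throw std::runtime_error("malformed APP segment length");
        pos += 2 + seg_len;
    }
    if (pos > jpeg.size()) throw std::runtime_error("truncated APP segment");
    std::vector<uint8_t> out(jpeg.begin(), jpeg.begin() + pos);
    out.insert(out.end(), segment.begin(), segment.end());
    out.insert(out.end(), jpeg.begin() + pos, jpeg.end());
    return out;
}
```

A single APP1 segment caps the packet at just under 64 KB, which is plenty for a handful of attitude and gimbal fields.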

Thanks.

-

RE: voxl-camera-server more exif metadata bugs (posted in VOXL SDK)

@Eric-Katzfey Here is the MR I made for this issue https://gitlab.com/voxl-public/voxl-sdk/services/voxl-camera-server/-/merge_requests/44

I also made a few related changes that should allow handling of additional UAV metadata in the images.
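The coordinate conversion that GPS tagging needs can be sketched on its own. Per the Exif specification, GPSLatitude and GPSLongitude are three RATIONALs (degrees, minutes, seconds). The sign is carried by a separate ASCII reference (`N`/`S` or `E`/`W`, count 2 including the NUL). GPSAltitude is an unsigned RATIONAL, with GPSAltitudeRef set to 0 above sea level and 1 below. The `Rational`, `GpsDms`, and `GpsAltitude` types are hypothetical stand-ins for libexif's `ExifRational`. Seconds are kept to a millionth, as in the snippet posted in this thread. This is a minimal sketch that takes its inputs by value, so the caller's state is never modified:

```cpp
#include <cmath>
#include <cstdint>

// One Exif RATIONAL: unsigned 32-bit numerator / denominator.
struct Rational { uint32_t num; uint32_t den; };

// GPSLatitude / GPSLongitude value plus its reference letter.
struct GpsDms { Rational d, m, s; char ref; };

// Convert signed decimal degrees to Exif degrees/minutes/seconds.
GpsDms ToExifDms(double deg, bool is_latitude) {
    const char ref = is_latitude ? (deg >= 0 ? 'N' : 'S') : (deg >= 0 ? 'E' : 'W');
    const double a = std::fabs(deg);
    uint32_t d = static_cast<uint32_t>(a);
    const double minutes = (a - d) * 60.0;
    uint32_t m = static_cast<uint32_t>(minutes);
    const double sec = (minutes - m) * 60.0;
    // Seconds in millionths, rounded; carry into minutes/degrees if rounding hits 60.
    uint32_t s_micro = static_cast<uint32_t>(std::lround(sec * 1e6));
    if (s_micro >= 60000000u) { s_micro -= 60000000u; ++m; }
    if (m >= 60) { m -= 60; ++d; }
    return {{d, 1}, {m, 1}, {s_micro, 1000000u}, ref};
}

// GPSAltitude in millimeter precision plus GPSAltitudeRef (0 above, 1 below sea level).
struct GpsAltitude { Rational alt; uint8_t ref; };

GpsAltitude ToExifAltitude(double meters_msl) {
    return {{static_cast<uint32_t>(std::lround(std::fabs(meters_msl) * 1000.0)), 1000u},
            static_cast<uint8_t>(meters_msl < 0 ? 1 : 0)};
}
```

Each field would then be written with libexif's `exif_set_rational()` in the image's byte order, three rationals back to back for the DMS triple.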

-

RE: voxl-camera-server more exif metadata bugs (posted in VOXL SDK)

@Zachary-Lowell-0 I managed to fix the code. Essentially, it now updates the already existing JPEG Exif block. Here is my solution. It's a little messy because I haven't had time to clean it up, but I tested it and it works.

Would you guys be interested in pulling in my changes?

// Create a new tag, or update an existing one in place
static ExifEntry* create_or_update_tag(ExifData* exif, ExifIfd ifd, ExifTag tag,
                                       ExifFormat fmt, unsigned int size,
                                       unsigned int components)
{
    ExifEntry* entry = exif_content_get_entry(exif->ifd[ifd], tag);
    if (!entry) {
        // Create a new entry if it doesn't exist
        printf("Creating entry: %d\n", (int)tag);
        entry = create_tag(exif, ifd, tag, size);
        entry->tag = tag;
        entry->components = components;
        entry->format = fmt;
    } else {
        // Update the existing entry, growing its buffer if it is too small
        if (entry->size < size) {
            entry->data = (unsigned char*)realloc(entry->data, size);
            entry->size = size;
        }
        entry->components = components;
        entry->format = fmt;
    }
    return entry;
}

void PerCameraMgr::ProcessSnapshotFrame(image_result result)
{
    BufferBlock* bufferBlockInfo = bufferGetBufferInfo(&snap_bufferGroup, result.second.buffer);

    // first write to pipe if subscribed
    camera_image_metadata_t meta;
    if (getMeta(result.first, &meta)) {
        M_WARN("Trying to process encode buffer without metadata\n");
        return;
    }

    int start_index = 0;
    uint8_t* src_data = (uint8_t*)bufferBlockInfo->vaddress;
    int extractJpgSize = find_jpeg_buffer_size(src_data, bufferBlockInfo->size, &start_index);
    assert(start_index == 0);

    // find the start index and size of the existing APP1 (Exif) block
    size_t prev_exif_block_start_idx = 0;
    size_t prev_exif_block_size = find_exif_start(src_data, bufferBlockInfo->size, &prev_exif_block_start_idx);

    if (extractJpgSize == 1) {
        M_ERROR("Real Size of JPEG is incorrect");
        return;
    }
    printf("Snapshot jpeg start: %6d len %8d\n", start_index, extractJpgSize);

#ifndef APQ8096
    // Load the Exif data already embedded in the jpeg
    unsigned char* exif_data;
    unsigned int exif_data_len;
    ExifEntry* entry;
    ExifData* exif = exif_data_new_from_data(src_data, extractJpgSize);
    if (exif == nullptr) {
        printf("Issue getting exif data from origin\n");
        return;
    }
    exif_data_fix(exif);

    // Good description of the Exif format:
    // https://www.media.mit.edu/pia/Research/deepview/exif.html
    // Each camera saves its Exif data in its own byte order, and every
    // value written below must use that same order.
    ExifByteOrder imageByteOrder = exif_data_get_byte_order(exif);

    // Work on local copies so the shared UAV state is never modified
    double lat_deg = _uav_state.lat_deg;
    double lon_deg = _uav_state.lon_deg;

    // Latitude reference: "N" or "S" plus the NUL terminator (2 ASCII components)
    entry = create_or_update_tag(exif, EXIF_IFD_GPS, (ExifTag)EXIF_TAG_GPS_LATITUDE_REF,
                                 EXIF_FORMAT_ASCII, 2 * sizeof(char), 2);
    if (lat_deg >= 0) {
        memcpy(entry->data, "N", 2);
    } else {
        memcpy(entry->data, "S", 2);
        lat_deg = -lat_deg;
    }

    // Latitude: three RATIONALs (degrees, minutes, seconds)
    entry = create_or_update_tag(exif, EXIF_IFD_GPS, (ExifTag)EXIF_TAG_GPS_LATITUDE,
                                 EXIF_FORMAT_RATIONAL, 3 * sizeof(ExifRational), 3);
    ExifLong degrees_lat = static_cast<ExifLong>(lat_deg);
    double fractional = lat_deg - degrees_lat;
    ExifLong minutes_lat = static_cast<ExifLong>(fractional * 60);
    fractional = (fractional * 60) - minutes_lat;
    double seconds_lat = fractional * 60;
    ExifRational degrees_r = { degrees_lat, 1 };
    ExifRational minutes_r = { minutes_lat, 1 };
    ExifRational seconds_r = { static_cast<ExifLong>(seconds_lat * 1000000), 1000000 }; // increased precision for seconds
    exif_set_rational(entry->data, imageByteOrder, degrees_r);
    exif_set_rational(entry->data + sizeof(ExifRational), imageByteOrder, minutes_r);
    exif_set_rational(entry->data + 2 * sizeof(ExifRational), imageByteOrder, seconds_r);

    // Longitude reference and value, same layout as latitude
    entry = create_or_update_tag(exif, EXIF_IFD_GPS, (ExifTag)EXIF_TAG_GPS_LONGITUDE_REF,
                                 EXIF_FORMAT_ASCII, 2 * sizeof(char), 2);
    if (lon_deg >= 0) {
        memcpy(entry->data, "E", 2);
    } else {
        memcpy(entry->data, "W", 2);
        lon_deg = -lon_deg;
    }
    entry = create_or_update_tag(exif, EXIF_IFD_GPS, (ExifTag)EXIF_TAG_GPS_LONGITUDE,
                                 EXIF_FORMAT_RATIONAL, 3 * sizeof(ExifRational), 3);
    ExifLong degrees_lon = static_cast<ExifLong>(lon_deg);
    fractional = lon_deg - degrees_lon;
    ExifLong minutes_lon = static_cast<ExifLong>(fractional * 60);
    fractional = (fractional * 60) - minutes_lon;
    double seconds_lon = fractional * 60;
    degrees_r = { degrees_lon, 1 };
    minutes_r = { minutes_lon, 1 };
    seconds_r = { static_cast<ExifLong>(seconds_lon * 1000000), 1000000 }; // increased precision for seconds
    exif_set_rational(entry->data, imageByteOrder, degrees_r);
    exif_set_rational(entry->data + sizeof(ExifRational), imageByteOrder, minutes_r);
    exif_set_rational(entry->data + 2 * sizeof(ExifRational), imageByteOrder, seconds_r);

    // Altitude: unsigned RATIONAL in meters; the sign goes into GPSAltitudeRef
    double alt_m = _uav_state.alt_msl_meters;
    entry = create_or_update_tag(exif, EXIF_IFD_GPS, (ExifTag)EXIF_TAG_GPS_ALTITUDE,
                                 EXIF_FORMAT_RATIONAL, sizeof(ExifRational), 1);
    ExifRational alt_r = { static_cast<ExifLong>((alt_m < 0 ? -alt_m : alt_m) * 1000), 1000 };
    exif_set_rational(entry->data, imageByteOrder, alt_r);
    entry = create_or_update_tag(exif, EXIF_IFD_GPS, (ExifTag)EXIF_TAG_GPS_ALTITUDE_REF,
                                 EXIF_FORMAT_BYTE, sizeof(uint8_t), 1);
    entry->data[0] = (alt_m < 0) ? 1 : 0; // 0 = above sea level, 1 = below

    // Lens information (hard-coded values)
    entry = create_or_update_tag(exif, EXIF_IFD_EXIF, (ExifTag)EXIF_TAG_FOCAL_LENGTH,
                                 EXIF_FORMAT_RATIONAL, sizeof(ExifRational), 1);
    ExifRational focal_len_r = { static_cast<ExifLong>(9.99 * 1000000), 1000000 };
    exif_set_rational(entry->data, imageByteOrder, focal_len_r);
    entry = create_or_update_tag(exif, EXIF_IFD_EXIF, (ExifTag)EXIF_TAG_FNUMBER,
                                 EXIF_FORMAT_RATIONAL, sizeof(ExifRational), 1);
    ExifRational f_number_r = { static_cast<ExifLong>(13.6 * 1000000), 1000000 };
    exif_set_rational(entry->data, imageByteOrder, f_number_r);
    entry = create_or_update_tag(exif, EXIF_IFD_EXIF, (ExifTag)EXIF_TAG_FOCAL_LENGTH_IN_35MM_FILM,
                                 EXIF_FORMAT_SHORT, sizeof(ExifShort), 1);
    ExifShort f_35_mm_r = 17;
    exif_set_short(entry->data, imageByteOrder, f_35_mm_r);

    exif_data_save_data(exif, &exif_data, &exif_data_len);
    assert(exif_data != NULL);
#endif

    meta.magic_number = CAMERA_MAGIC_NUMBER;
    meta.width = snap_width;
    meta.height = snap_height;
    meta.format = IMAGE_FORMAT_JPG;
    meta.size_bytes = extractJpgSize;
    pipe_server_write_camera_frame(snapshotPipe, meta, &src_data[start_index]);

    // now, if there is a filename in the queue, write it too
    if (snapshotQueue.size() != 0) {
        char* filename = snapshotQueue.front();
        snapshotQueue.pop();
        M_PRINT("Camera: %s writing snapshot to :\"%s\"\n", name, filename);

        FILE* file_descriptor = fopen(filename, "wb");
        if (!file_descriptor) {
            // Check to see if we were just missing parent directories
            CreateParentDirs(filename);
            file_descriptor = fopen(filename, "wb");
            if (!file_descriptor) {
                M_ERROR("failed to open file descriptor for snapshot save to: %s\n", filename);
                return;
            }
        }
#ifndef APQ8096
        // first write the beginning of the jpeg, stopping where the previous
        // Exif APP1 block started; this includes the FFD8 (SOI) marker
        printf("Start: 0, end:%zu\n", prev_exif_block_start_idx);
        if (fwrite(src_data, prev_exif_block_start_idx, 1, file_descriptor) != 1) {
            fprintf(stderr, "Error writing to file with jpeg %s\n", filename);
        }
        // new APP1 header, then its 2-byte big-endian length (payload + 2)
        if (fwrite(exif_header, exif_header_len, 1, file_descriptor) != 1) {
            fprintf(stderr, "Error writing to file in exif header %s\n", filename);
        }
        if (fputc((exif_data_len + 2) >> 8, file_descriptor) < 0) {
            fprintf(stderr, "Error writing to file in big endian order %s\n", filename);
        }
        if (fputc((exif_data_len + 2) & 0xff, file_descriptor) < 0) {
            fprintf(stderr, "Error writing to file with fputc %s\n", filename);
        }
        if (fwrite(exif_data, exif_data_len, 1, file_descriptor) != 1) {
            fprintf(stderr, "Error writing to file with data block %s\n", filename);
        }

        // next write the rest of the jpeg, starting right after the old APP1 block;
        // the remaining size is measured from that offset to the end of the jpeg
        size_t jpeg_start_idx = prev_exif_block_start_idx + prev_exif_block_size;
        size_t jpeg_size = extractJpgSize - jpeg_start_idx;
        printf("Start: %zu, size:%zu\n", jpeg_start_idx, jpeg_size);
        if (fwrite(src_data + jpeg_start_idx, jpeg_size, 1, file_descriptor) != 1) {
            fprintf(stderr, "Error writing to file with jpeg %s\n", filename);
        }
        free(exif_data);
        exif_data_unref(exif);

-

RE: voxl-camera-server more exif metadata bugs (posted in VOXL SDK)

@AndriiHlyvko an example of jpeg image structure:

FFD8 (SOI)
... (optional markers like APP0/APP1)
FFDB (DQT)
FFC2 (SOF2) // Progressive DCT
FFC4 (DHT) // Huffman table
FFDA (SOS) // First scan
... (compressed image data for first scan)
FFDA (SOS) // Second scan
... (compressed image data for second scan)
...
FFD9 (EOI)

-

RE: voxl-camera-server more exif metadata bugs (posted in VOXL SDK)

@Zachary-Lowell-0 what I did find out is that inside ProcessSnapshotFrame, a second Exif block gets created by createExifData and written to the image, even though the JPEG already contains an Exif block (I assume it was created by the Android library).

The correct approach is to edit the Exif block that already exists inside the JPEG. Also, the find_jpeg_buffer function is wrong. I assume it is meant to find the start of the JPEG data stream, but instead it looks for the SOI marker. The SOI marker and the start of the data section are not the same: the SOI only marks the file itself as a JPEG.

-

RE: voxl-camera-server more exif metadata bugs (posted in VOXL SDK)

@Zachary-Lowell-0 thanks!

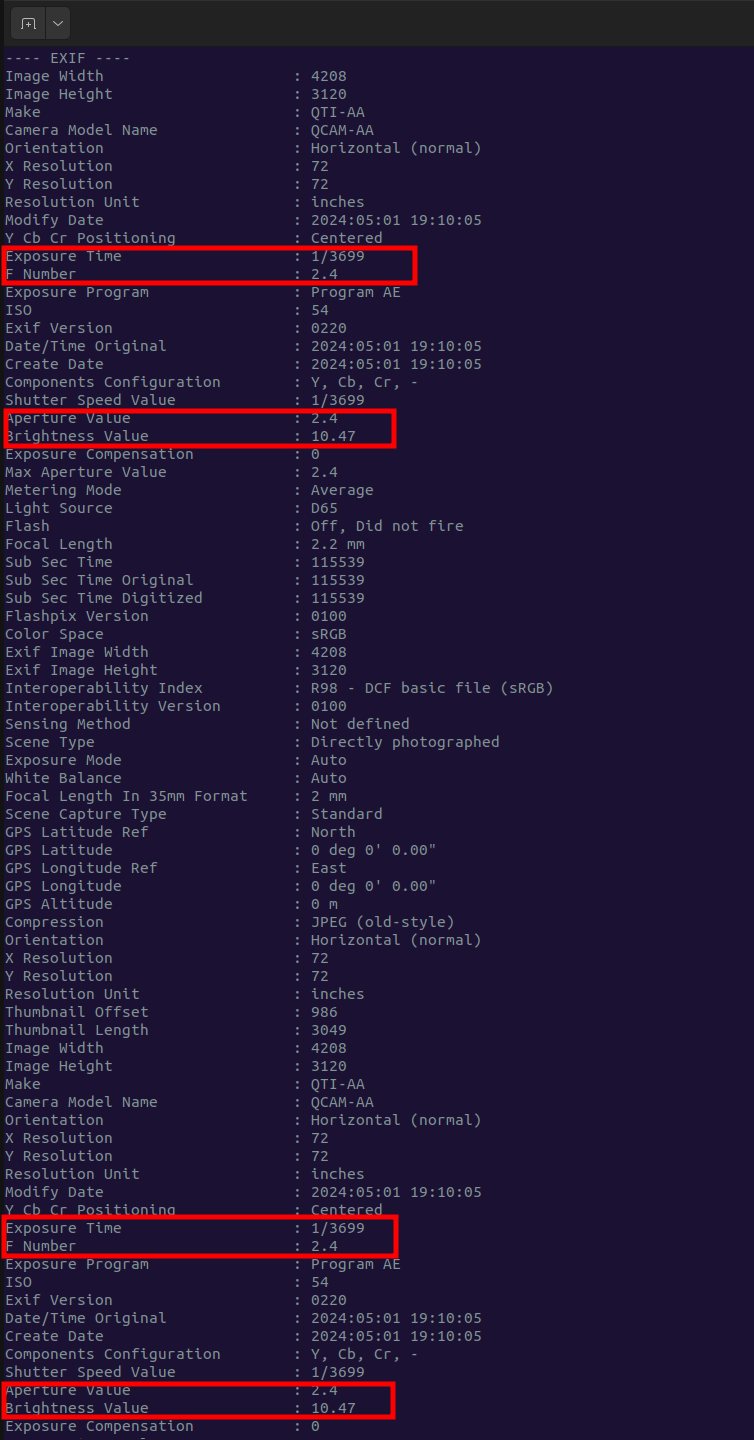

Just to give some context: I am trying to change the camera focal length in the Exif data of the JPEG. But because there are somehow two Exif blocks in the JPEG, only one of them is changed and not the other. There should only be one Exif data block in the JPEG.

Here is an example of the exiftool output.
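Keeping a single Exif block means rewriting the header segments so the existing Exif APP1 is replaced, rather than a second one being added next to it. Below is a minimal sketch over an in-memory buffer. The function name is hypothetical, and this is not the voxl-camera-server code. It assumes the new payload is the `Exif\0\0`-prefixed blob that libexif's `exif_data_save_data()` produces, and it parses only the header segments before SOS:

```cpp
#include <cstdint>
#include <cstring>
#include <stdexcept>
#include <vector>

// Rebuild a JPEG so that it carries exactly one Exif APP1 segment.
// Every existing Exif APP1 is dropped; the new one goes where the first
// old one was, or right after SOI if there was none. Everything from SOS
// onward is copied unchanged.
std::vector<uint8_t> ReplaceExifApp1(const std::vector<uint8_t>& jpeg,
                                     const std::vector<uint8_t>& new_exif) {
    if (jpeg.size() < 4 || jpeg[0] != 0xFF || jpeg[1] != 0xD8)
        throw std::invalid_argument("missing SOI");
    if (new_exif.size() + 2 > 0xFFFF)
        throw std::length_error("Exif payload too large for one APP1 segment");

    // New APP1: marker, big-endian length (payload + 2 length bytes), payload.
    std::vector<uint8_t> app1 = {0xFF, 0xE1, uint8_t((new_exif.size() + 2) >> 8),
                                 uint8_t((new_exif.size() + 2) & 0xFF)};
    app1.insert(app1.end(), new_exif.begin(), new_exif.end());

    std::vector<uint8_t> out = {0xFF, 0xD8};
    bool inserted = false;
    size_t pos = 2;
    while (pos + 4 <= jpeg.size() && jpeg[pos] == 0xFF) {
        const uint8_t marker = jpeg[pos + 1];
        if (marker == 0xDA) break;  // SOS: entropy-coded data follows
        const size_t seg_len = (size_t(jpeg[pos + 2]) << 8) | jpeg[pos + 3];
        const size_t seg_end = pos + 2 + seg_len;
        if (seg_len < 2 || seg_end > jpeg.size())
            throw std::runtime_error("malformed segment length");
        const bool is_exif = marker == 0xE1 && seg_len >= 8 &&
                             std::memcmp(&jpeg[pos + 4], "Exif\0\0", 6) == 0;
        if (is_exif) {
            if (!inserted) {  // first Exif block: replace it with the new one
                out.insert(out.end(), app1.begin(), app1.end());
                inserted = true;
            }                 // later Exif blocks: drop them
        } else {
            out.insert(out.end(), jpeg.begin() + pos, jpeg.begin() + seg_end);
        }
        pos = seg_end;
    }
    if (!inserted) out.insert(out.begin() + 2, app1.begin(), app1.end());
    out.insert(out.end(), jpeg.begin() + pos, jpeg.end());
    return out;
}
```

Working on a buffer like this and then doing a single `fwrite` also sidesteps the offset bookkeeping of writing the file in pieces.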

-

voxl-camera-server more exif metadata bugs (posted in VOXL SDK)

Hi, I found more bugs in voxl-camera-server. The final JPEG images captured with the snapshot command contain two APP1 Exif sections instead of one. This is essentially because the ProcessSnapshotFrame function processes the JPEG metadata incorrectly (inspect the binary headers of the snapshots to see the double APP1 headers). I am currently working on a bug fix for that. Could anyone point me to some information on how the JPEG data actually gets piped into voxl-camera-server? I found that it's using some Android "hardware" libraries to set up frame callbacks, so I am wondering if I can just get the raw image data instead of moving JPEG data around.
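The binary header inspection can be done with a short walk over the marker segments. After SOI (`FFD8`), each segment is `FF`, a marker byte, and a 2-byte big-endian length that counts itself. This continues up to SOS (`FFDA`), after which compressed data follows. Below is a minimal sketch that lists those segments and counts the APP1 segments carrying Exif data; the struct and function names are hypothetical. A correctly written snapshot should report at most one:

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

struct JpegSegment {
    uint8_t marker;  // second marker byte, e.g. 0xE1 for APP1
    size_t offset;   // file offset of the segment's 0xFF byte
    size_t length;   // value of the 2-byte length field (includes itself)
};

// Walk the header segments from SOI up to and including SOS.
// Stops early, returning what was parsed so far, on malformed input.
std::vector<JpegSegment> ListHeaderSegments(const uint8_t* data, size_t size) {
    std::vector<JpegSegment> segs;
    if (size < 2 || data[0] != 0xFF || data[1] != 0xD8) return segs;
    size_t pos = 2;
    while (pos + 4 <= size && data[pos] == 0xFF) {
        const uint8_t marker = data[pos + 1];
        const size_t len = (size_t(data[pos + 2]) << 8) | data[pos + 3];
        if (len < 2 || pos + 2 + len > size) break;
        segs.push_back({marker, pos, len});
        if (marker == 0xDA) break;  // compressed scan data follows SOS
        pos += 2 + len;
    }
    return segs;
}

// Number of APP1 segments whose payload starts with "Exif\0\0".
size_t CountExifApp1(const uint8_t* data, size_t size) {
    size_t n = 0;
    for (const JpegSegment& s : ListHeaderSegments(data, size)) {
        if (s.marker == 0xE1 && s.length >= 8 &&
            std::memcmp(data + s.offset + 4, "Exif\0\0", 6) == 0) {
            ++n;
        }
    }
    return n;
}
```

Reading a snapshot file into a `std::vector<uint8_t>` and passing `buf.data(), buf.size()` is enough to check a file written by the camera server.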

Thanks.

Best, Andrii. -

RE: voxl-camera-server bugs with snapshot capture for mapping (posted in VOXL SDK)

@tom cool. I created an MR with the wrong source branch by mistake, so just delete that one. This one is the right one: https://gitlab.com/voxl-public/voxl-sdk/services/voxl-camera-server/-/merge_requests/42

-

RE: voxl-camera-server bugs with snapshot capture for mapping (posted in VOXL SDK)

@Moderator What's the procedure for creating merge requests from someone outside of ModalAI? The repo doesn't allow pushing a new branch. I also tried sending an email to the link generated by GitLab, and that didn't work.

-

RE: voxl-camera-server bugs with snapshot capture for mapping (posted in VOXL SDK)

@Moderator sounds good I will try to create a merge request for this sometime early next week