Upgrade TensorFlow Version in tflite-server

-

@Matt-Turi So I tried the new tflite-server 0.2.0, first with my own model. It loaded the model successfully but gave the following error:

    CUSTOM TFLite_Detection_PostProcess: Operation is not supported.

So I thought my model had the wrong post-processing. But in Netron everything looked fine, and quantization also completed without errors. Then I tried your ssdlite_mobilenet_v2_coco.tflite and got the same error as above. It still worked for about half a minute, then it suddenly hung and SSH reported a broken pipe. Do you know if I installed the dev tflite-server wrong, or could it be something else? Full output with the ssdlite_mobilenet_v2_coco.tflite model:

    voxl:~$ voxl-tflite-server -t -d
    Enabling timing mode
    Enabling debug mode
    =================================================================
    skip_n_frames: 5
    =================================================================
    model: /usr/bin/dnn/ssdlite_mobilenet_v2_coco.tflite
    =================================================================
    input_pipe: /run/mpa/uvc/
    =================================================================
    delegate: gpu
    =================================================================
    Loaded model /usr/bin/dnn/ssdlite_mobilenet_v2_coco.tflite
    Resolved reporter
    INFO: Created TensorFlow Lite delegate for GPU.
    ERROR: Next operations are not supported by GPU delegate:
    CUSTOM TFLite_Detection_PostProcess: Operation is not supported.
    First 114 operations will run on the GPU, and the remaining 1 on the CPU.
    INFO: Initialized OpenCL-based API.
    ------VOXL TFLite Server------
    Connected to camera server
    Detected: 0 person, Confidence: 0.81
    Detected: 0 person, Confidence: 0.79
    Detected: 0 person, Confidence: 0.78
    Detected: 0 person, Confidence: 0.79
    Detected: 0 person, Confidence: 0.76
    Detected: 0 person, Confidence: 0.75
    Detected: 0 person, Confidence: 0.71
    Detected: 0 person, Confidence: 0.70
    Detected: 0 person, Confidence: 0.72
    Detected: 0 person, Confidence: 0.75
    Detected: 0 person, Confidence: 0.74
    Detected: 0 person, Confidence: 0.79
    Detected: 0 person, Confidence: 0.78
    Detected: 0 person, Confidence: 0.78
    Detected: 0 person, Confidence: 0.78
    Detected: 0 person, Confidence: 0.78
    Detected: 0 person, Confidence: 0.77
    Detected: 0 person, Confidence: 0.78
    client_loop: send disconnect: Broken pipe
    ubuntu@user:~$

Installation of the dev tflite-server:

    voxl-configure-opkg dev
    opkg update
    opkg install tflite-server

It throws some errors about the existing installations, but when I repeat the steps above it works.

-

OK, so it looks like it crashes when I switch to the tflite camera stream in voxl-portal. It works for two or three seconds and I can see detections, then the VOXL completely aborts. I can still reconnect over SSH afterwards, though, so it's not shutting down.

Details:

System Image: voxl_platform_3-3-0-0.5.0-a

voxl-suite: stable release

Services Used:

voxl-uvc-server -r 640x512 -f 60 -d : Flir Boson Camera : dev release

voxl-portal : Avg(GPU): 35%, Avg(CPU): 15% : stable release

voxl-tflite-server -t -d : dev release

Help would be really appreciated. For now I will fall back to the stable tflite-server release, but I would really like to use the new one because it's much better for custom models.

When I use my new model with the stable tflite-server, I get a segmentation fault.

-

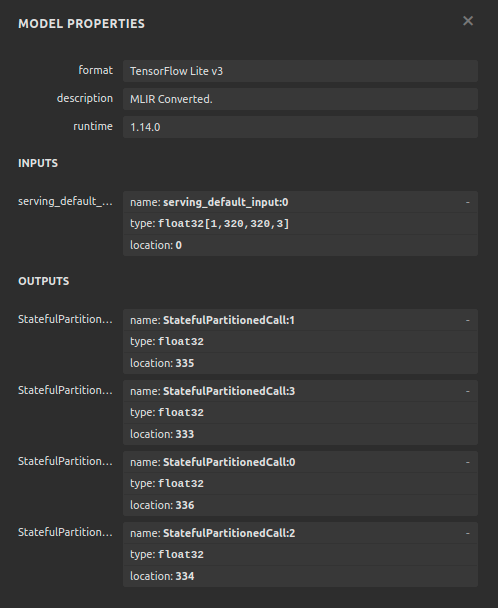

Netron view of the TFLite model's input/output formats:

-

Output of installing tflite-server 0.2.0 from the dev branch:

Output:

    voxl:~$ opkg install voxl-tflite-server
    Upgrading voxl-tflite-server from 0.1.6 to 0.2.0 on root.
    Downloading http://voxl-packages.modalai.com/dev/voxl-tflite-server_0.2.0_202202202255.ipk.
    Installing libmodal-pipe (2.1.4) on root.
    Downloading http://voxl-packages.modalai.com/dev/libmodal-pipe_2.1.4_202202040134.ipk.
    Installing libmodal-json (0.4.0) on root.
    Downloading http://voxl-packages.modalai.com/dev/libmodal-json_0.4.0_202202080557.ipk.
    To remove package debris, try `opkg remove libmodal-json`.
    To re-attempt the install, try `opkg install libmodal-json`.
    Collected errors:
     * check_data_file_clashes: Package libmodal-json wants to install file /usr/lib64/libmodal_json.so
        But that file is already provided by package * libmodal_json
     * check_data_file_clashes: Package libmodal-json wants to install file /usr/include/cJSON.h
        But that file is already provided by package * libmodal_json
     * check_data_file_clashes: Package libmodal-json wants to install file /usr/include/modal_json.h
        But that file is already provided by package * libmodal_json
     * check_data_file_clashes: Package libmodal-json wants to install file /usr/lib/libmodal_json.so
        But that file is already provided by package * libmodal_json
     * check_data_file_clashes: Package libmodal-json wants to install file /usr/bin/modal-test-json
        But that file is already provided by package * libmodal_json
     * opkg_install_cmd: Cannot install package voxl-tflite-server.

This does not work:

    opkg remove libmodal-json
    opkg install libmodal-json

But when I try to install again:

    voxl:~$ opkg install voxl-tflite-server
    Upgrading voxl-tflite-server from 0.1.6 to 0.2.0 on root.
    Installing voxl-opencv (4.5.5) on root.

It installed successfully.

-

I have been trying some other models and got some strange confidence values:

    Detected: 0 person, Confidence: 10.00
    Detected: 0 person, Confidence: 0.68
    Detected: 0 person, Confidence: 0.73
    Detected: 0 person, Confidence: 2.63
    Detected: 0 person, Confidence: 1.24
    Detected: 0 person, Confidence: 0.98
    Detected: 0 person, Confidence: 2.23
    Detected: 0 person, Confidence: 0.87
    Detected: 0 person, Confidence: 1.26
    Detected: 0 person, Confidence: 0.74
    Detected: 0 person, Confidence: 0.72
    Detected: 0 person, Confidence: 1.34
    Detected: 0 person, Confidence: 1.82
    Detected: 0 person, Confidence: 0.67
    Detected: 0 person, Confidence: 1.50
    Detected: 0 person, Confidence: 0.74
    Detected: 0 person, Confidence: 1.37
    Detected: 0 person, Confidence: 0.89
    Detected: 0 person, Confidence: 1.56
    Fault address: 0x20
    Unknown reason.
    Segmentation fault

I didn't know you could have a confidence of 1000%.

Model: SSD ResNet101 V1 FPN 640x640

-

Let me try to address these individually!

- The "error" complaining

    CUSTOM TFLite_Detection_PostProcess: Operation is not supported.

is simply due to the very last operation of the model not being supported for GPU delegation. Because it is a custom op rather than a standard one, it just gets delegated to the CPU with no issues. Your log shows exactly that split: "First 114 operations will run on the GPU, and the remaining 1 on the CPU."

-

The tflite-server hang is not due to an improper install. I was able to replicate it on my setup using a bad APM, and swapping it for a new one seemed to alleviate the issue. voxl-tflite-server is a fairly power-hungry application, so I recommend checking for frayed cables and similar problems. If you can send a picture of your current setup, with cables and batteries included, that may help debug. In the meantime, if you have another APM or power supply you can quickly swap in, please give that a try.

-

I personally have not had much luck with the ResNet architectures, but I would double-check the data types and output tensor lengths, because those confidence values are way off: a confidence should never exceed 1.0.
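A minimal sketch of that check follows, written in Python for readability. The function names are hypothetical and not part of voxl-tflite-server.

The sketch assumes the raw values have already been read from the model's output tensors. TFLite's affine quantization maps a stored integer q to real = scale * (q - zero_point), using the tensor's scale and zero_point (Netron shows both). The TensorFlow Lite object detection docs list the TFLite_Detection_PostProcess outputs in this order:

1. locations
2. classes
3. scores
4. number of detections

It is still worth confirming that order for your own model in Netron. A "score" outside [0, 1] usually means the wrong tensor, or the wrong data type, is being read.

```python
from typing import List, Sequence, Tuple


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """TFLite affine dequantization: real_value = scale * (q - zero_point)."""
    return scale * (q - zero_point)


def filter_scores(scores: Sequence[float], threshold: float = 0.5) -> List[Tuple[int, float]]:
    """Return (index, score) pairs at or above `threshold`.

    Detection scores are probabilities, so a value outside [0, 1] (such as
    "Confidence: 10.00") is treated as a sign that the wrong output tensor,
    or the wrong data type, is being read as the score array.
    """
    for i, s in enumerate(scores):
        if not 0.0 <= s <= 1.0:
            raise ValueError(
                f"score[{i}] = {s:.2f} is not a probability; "
                "check output tensor order and dtype"
            )
    return [(i, s) for i, s in enumerate(scores) if s >= threshold]


if __name__ == "__main__":
    # A uint8 score of 200 with hypothetical params scale=1/256, zero_point=0.
    print(dequantize(200, 1 / 256, 0))
    print(filter_scores([0.81, 0.2, 0.55]))
```

Raising an error, instead of silently clamping, makes a tensor-order or dtype mix-up fail loudly on the first frame rather than producing plausible-looking detections.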

-

One last note: the dev release does not directly support every image format that voxl-uvc-server can output, only IMAGE_FORMAT_NV12 as of now. So if your camera input arrives as either IMAGE_FORMAT_YUV422 or IMAGE_FORMAT_YUV422_UYVY, you may need to add some extra handling for it.
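A minimal sketch of that extra handling follows, written in Python for readability (the VOXL services themselves are C/C++). The function names are hypothetical. The sketch makes these assumptions:

- IMAGE_FORMAT_YUV422 uses YUYV byte order and IMAGE_FORMAT_YUV422_UYVY uses UYVY.
- Frames have even dimensions and no row padding.
- Averaging the chroma of each pair of rows is an acceptable way to go from 4:2:2 down to NV12's 4:2:0 subsampling.

```python
def nv12_frame_size(width: int, height: int) -> int:
    """NV12 is 12 bits per pixel: a full Y plane plus a half-size interleaved UV plane."""
    return width * height * 3 // 2


def yuv422_to_nv12(src: bytes, width: int, height: int, uyvy: bool = False) -> bytes:
    """Convert a packed 4:2:2 frame (YUYV, or UYVY when uyvy=True) to NV12.

    Assumes even width/height and no row padding (stride == 2 * width).
    """
    if width % 2 or height % 2:
        raise ValueError("width and height must be even")
    if len(src) != width * height * 2:
        raise ValueError("unexpected 4:2:2 buffer size")
    # Byte offsets of Y0, U, Y1, V inside each 4-byte macropixel (two pixels).
    y0, u, y1, v = (1, 0, 3, 2) if uyvy else (0, 1, 2, 3)
    stride = width * 2
    y_plane = bytearray(width * height)
    uv_plane = bytearray(width * height // 2)
    for row in range(height):
        for px in range(0, width, 2):
            base = row * stride + px * 2
            y_plane[row * width + px] = src[base + y0]
            y_plane[row * width + px + 1] = src[base + y1]
    # 4:2:2 carries chroma on every row; NV12 (4:2:0) keeps one U/V pair per
    # 2x2 block, so average the chroma of each pair of rows.
    for row in range(0, height, 2):
        for px in range(0, width, 2):
            top = row * stride + px * 2
            bottom = top + stride
            out = (row // 2) * width + px
            uv_plane[out] = (src[top + u] + src[bottom + u] + 1) // 2
            uv_plane[out + 1] = (src[top + v] + src[bottom + v] + 1) // 2
    return bytes(y_plane + uv_plane)
```

For a 640x512 frame, `nv12_frame_size(640, 512)` gives 491520 bytes. That matches the `max frame size: 491520` that the Boson reports for its 12-bit formats further down this thread.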

-

@Matt-Turi Thank you again for the fast reply. I will check the APM and the camera server output format tomorrow, and I will also send you a picture of the setup.

-

@Matt-Turi I got it working by changing the APM. Thank you for the suggestion. I also looked up which formats my camera supports. It should support NV12, and voxl-uvc-server shows the following:

    uvc_get_stream_ctrl_format_size succeeded for format 2

After looking at the voxl-uvc-server source:

https://gitlab.com/voxl-public/modal-pipe-architecture/voxl-uvc-server/-/blob/dev/src/main.c

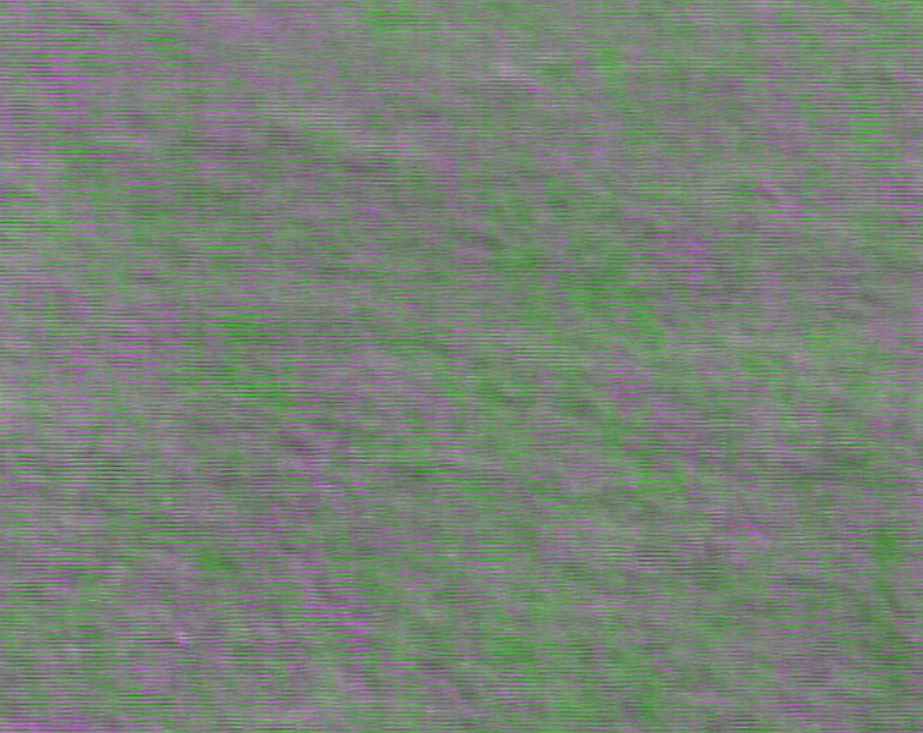

Line 307: there is a for loop that picks a format, and format 2 should be NV12. But for some reason, when I look at the stream in voxl-portal, I get green stripes in the output. Do you know what the problem could be? The camera is a Boson 640 black-and-white thermal camera.
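For reference, here is a minimal sketch of how one NV12 pixel decodes to RGB, written in Python for readability. The function names are hypothetical. The sketch assumes full-range BT.601 coefficients and a tightly packed frame (stride equal to width); voxl-portal or voxl-tflite-server may convert differently.

It shows one known way YUV data turns green. For a grayscale source such as a thermal camera, the U and V bytes should sit at the neutral value 128. If they are 0 instead (for example, a chroma plane that is zeroed or read from the wrong offset), the pixel decodes to a strong green tint.

```python
from typing import Tuple

RGB = Tuple[int, int, int]


def clamp_u8(value: float) -> int:
    """Round to the nearest integer and clamp to the 0..255 range of one channel."""
    return max(0, min(255, int(round(value))))


def yuv_to_rgb(y: int, u: int, v: int) -> RGB:
    """Convert one YUV sample to RGB using full-range BT.601 coefficients (assumed)."""
    d = u - 128  # chroma is stored with a +128 offset; 128 means "no color"
    e = v - 128
    r = y + 1.402 * e
    g = y - 0.344136 * d - 0.714136 * e
    b = y + 1.772 * d
    return clamp_u8(r), clamp_u8(g), clamp_u8(b)


def nv12_pixel_to_rgb(frame: bytes, width: int, height: int, x: int, y: int) -> RGB:
    """Decode pixel (x, y) of a tightly packed NV12 frame (stride == width assumed)."""
    luma = frame[y * width + x]
    # The interleaved U/V plane starts right after the full-resolution Y plane,
    # and each U/V pair is shared by one 2x2 block of pixels.
    uv = width * height + (y // 2) * width + (x // 2) * 2
    return yuv_to_rgb(luma, frame[uv], frame[uv + 1])


if __name__ == "__main__":
    w, h = 4, 2
    neutral = bytes([100] * (w * h)) + bytes([128] * (w * h // 2))
    zeroed = bytes([100] * (w * h)) + bytes([0] * (w * h // 2))
    print(nv12_pixel_to_rgb(neutral, w, h, 1, 1))  # (100, 100, 100): gray
    print(nv12_pixel_to_rgb(zeroed, w, h, 1, 1))   # (0, 235, 0): green
```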

-

Here is a picture of the green stripes:

-

@Philemon-Benner Can you post the output of

lsusb, voxl-uvc-server -s, and voxl-uvc-server -d -m -r 640x512?

-

@Eric-Katzfey

lsusb:

    Bus 004 Device 001: ID 1d6b:0003 Linux Foundation 3.0 root hub
    Bus 002 Device 001: ID 1d6b:0003 Linux Foundation 3.0 root hub
    Bus 001 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub
    Bus 003 Device 002: ID 09cb:4007
    Bus 003 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

voxl-uvc-server -s:

    *** START DEVICE LIST ***
    Found device 1
    Got device descriptor for 09cb:4007 134275
    Found device 09cb:4007
    DEVICE CONFIGURATION (09cb:4007/134275) ---
    Status: idle
    VideoControl:
        bcdUVC: 0x0100
    VideoStreaming(1):
        bEndpointAddress: 129
        Formats:
        UncompressedFormat(1)
            bits per pixel: 12
            GUID: 4934323000001000800000aa00389b71 (I420)
            default frame: 1
            aspect ratio: 0x0
            interlace flags: 00
            copy protect: 00
                FrameDescriptor(1)
                    capabilities: 02
                    size: 640x512
                    bit rate: 10592000-235929600
                    max frame size: 491520
                    default interval: 1/60
                    interval[0]: 1/60
                    interval[1]: 1/30
        UncompressedFormat(2)
            bits per pixel: 16
            GUID: 5931362000001000800000aa00389b71 (Y16 )
            default frame: 1
            aspect ratio: 0x0
            interlace flags: 00
            copy protect: 00
                FrameDescriptor(1)
                    capabilities: 02
                    size: 640x512
                    bit rate: 10592000-629145600
                    max frame size: 655360
                    default interval: 1/60
                    interval[0]: 1/60
                    interval[1]: 1/30
        UncompressedFormat(3)
            bits per pixel: 12
            GUID: 4e56313200001000800000aa00389b71 (NV12)
            default frame: 1
            aspect ratio: 0x0
            interlace flags: 00
            copy protect: 00
                FrameDescriptor(1)
                    capabilities: 02
                    size: 640x512
                    bit rate: 10592000-235929600
                    max frame size: 491520
                    default interval: 1/60
                    interval[0]: 1/60
                    interval[1]: 1/30
        UncompressedFormat(4)
            bits per pixel: 12
            GUID: 4e56323100001000800000aa00389b71 (NV21)
            default frame: 1
            aspect ratio: 0x0
            interlace flags: 00
            copy protect: 00
                FrameDescriptor(1)
                    capabilities: 02
                    size: 640x512
                    bit rate: 10592000-235929600
                    max frame size: 491520
                    default interval: 1/60
                    interval[0]: 1/60
                    interval[1]: 1/30
    END DEVICE CONFIGURATION
    *** END DEVICE LIST ***

voxl-uvc-server -d -m -r 640x512:

Enabling debug messages Enabling MPA debug messages voxl-uvc-server starting Image resolution 640x512, 30 fps chosen UVC initialized Device found Device opened uvc_get_stream_ctrl_format_size succeeded for format 2 Streaming starting Got frame callback! frame_format = 17, width = 640, height = 512, length = 491520, ptr = (nil) making new fifo /run/mpa/uvc/voxl-tflite-server0 opened new pipe for writing after 3 attempt(s) write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 1 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 
0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 * got image 30 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to 
ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0

-

I just checked the camera on my laptop. The camera itself is working fine.

It also works fine in NV12 format, without the green lines:

    ffplay /dev/video4 -pixel_format nv12

Output:

    Input #0, video4linux2,v4l2, from '/dev/video4':
      Duration: N/A, start: 33575.082223, bitrate: 235929 kb/s
        Stream #0:0: Video: rawvideo (NV12 / 0x3231564E), nv12, 640x512, 235929 kb/s, 60 fps, 60 tbr, 1000k tbn, 1000k tbc

-

@Philemon-Benner It looks like voxl-uvc-server is working fine. The error messages seem to be related to voxl-tflite-server and not voxl-uvc-server. Can you use voxl-streamer to stream the output of voxl-uvc-server to VLC and see if there are still green lines? What are you currently using to stream the output of voxl-uvc-server?

-

@Philemon-Benner I'm guessing that there may be a version mismatch somewhere in there.

-

@Eric-Katzfey Yes, I will try that today. I'm currently viewing the stream in voxl-portal, but through the voxl-tflite-server pipe: voxl-uvc-server --> voxl-tflite-server --> voxl-portal (the output has green lines).

-

@Eric-Katzfey What exactly do you mean by a version mismatch, and where? The only thing I can think of is tflite-server 0.2.0. As shown above, my first installation attempt produced errors because of existing dependencies.

-

@Eric-Katzfey OK, so I tried it with voxl-streamer, and it works completely fine. Any suggestions?

-

I also tried a different model, but got the same result.

-

Update:

So I stepped back to TF1 and trained ssdlite_mobilenet_v2. It's working great on the drone with tflite-server 0.1.8. Thanks for all the suggestions, @Matt-Turi. I am still looking forward to using tflite-server 0.2.0, though. If you have any suggestions for fixing the green stripes in the new version, please let me know: the new tflite-server makes custom models much easier to integrate, and its code is easier for me to understand.

-

@Philemon-Benner Thanks for the follow up on this! We'll take a look.