Tflite Server Issue

-

I've been working with tflite server and encountered an issue when trying to run inference with my models.

When using version v0.3.1, the server works correctly with a normal model. However, when switching to a custom model with only two labels, the server shuts down due to a segmentation fault.

Upon testing with version v0.3.5, the server shuts down regardless of whether I use a custom model or a regular model. I've tried enabling debug mode, and it reveals that the issue occurs in the InferenceHelper::postprocess_yolov5 function. Specifically, the error arises in the getbbox function where it attempts to access an invalid memory location in the output_tensor float pointer.

Has anyone else encountered this issue or have suggestions for debugging this further? Is it a bug in the latest version, or could it be related to how the models are exported? Any guidance would be appreciated.

-

Hi @Karl-pan, no, I cannot say we have come across this error before, but we will have a solution soon. Just to confirm, can you paste your conf file, /etc/modalai/voxl-tflite-server.conf, and also run voxl-inspect-cam -a to ensure the camera is outputting frames? It sounds more like an issue with the camera server than with tflite if you are trying to access a nullptr that is the frame. Will look into this.

Zach

-

Hi @Zachary-Lowell-0. We have confirmed that the camera is functioning correctly, so it seems unlikely that the issue is camera-related. Since the problem occurs at the output_tensor float pointer rather than at a frame nullptr, the root cause appears to be elsewhere. Additionally, upon further inspection, I noticed that the label_in_use parameter no longer exists as of v0.3.5. Could you clarify where the label file is defined in v0.3.5 and later? For now, we are using v0.3.4, which works as expected.

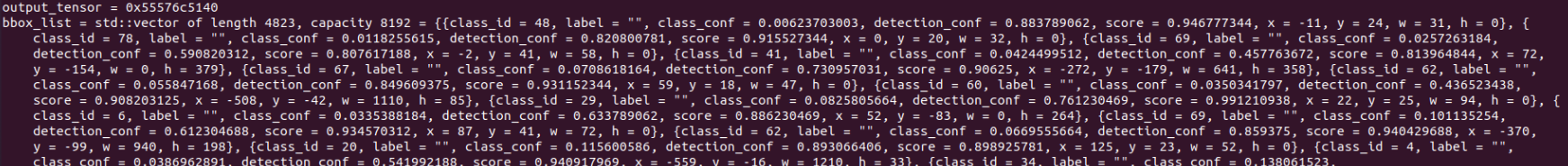

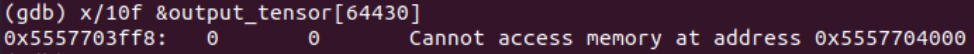

The picture above is a debugger result showing the output_tensor state. Data is present in bbox_list, which confirms that frames are arriving correctly. The code attempts to access data[index+4] in inference_helper.cpp on line 770, as shown in the picture below.
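One possible cause, not confirmed anywhere in this thread, is a mismatch between the row stride the postprocessing assumes and the stride the model actually outputs. The sketch below assumes the common YOLOv5 export layout, where the output tensor has shape [1, rows, 5 + num_classes] and offset 4 in each row is the objectness score. The names YoloLayout, layout_from_dims, and objectness_at are hypothetical and are not part of voxl-tflite-server. It needs C++17 for std::optional.

```cpp
#include <cstddef>
#include <optional>
#include <vector>

// Hypothetical helper types, NOT taken from voxl-tflite-server.
// Assumed layout: output shape [1, rows, 5 + num_classes], each row being
// x, y, w, h, objectness, followed by one score per class.
struct YoloLayout {
    std::size_t rows;         // number of candidate boxes
    std::size_t stride;       // floats per row (5 + num_classes)
    std::size_t num_classes;  // stride - 5
};

// Derive the layout from the tensor's own dimensions instead of a
// hardcoded class count. Returns nullopt if the shape is not as assumed.
std::optional<YoloLayout> layout_from_dims(const std::vector<int>& dims) {
    if (dims.size() != 3 || dims[0] != 1 || dims[1] <= 0 || dims[2] <= 5) {
        return std::nullopt;
    }
    YoloLayout layout;
    layout.rows = static_cast<std::size_t>(dims[1]);
    layout.stride = static_cast<std::size_t>(dims[2]);
    layout.num_classes = layout.stride - 5;
    return layout;
}

// Read the objectness value (offset 4) of one row, but only if the
// computed index lies inside the buffer of num_floats elements.
std::optional<float> objectness_at(const float* data, std::size_t num_floats,
                                   const YoloLayout& layout, std::size_t row) {
    if (data == nullptr || row >= layout.rows) {
        return std::nullopt;
    }
    const std::size_t index = row * layout.stride;
    if (index + 4 >= num_floats) {
        return std::nullopt;  // would read past the end of the tensor
    }
    return data[index + 4];
}
```

With a two-label model the stride under this assumption would be 7. If the code instead stepped through rows with a stride sized for a larger label set, index+4 would run past the end of the buffer after a few rows, which would look like the invalid read described above. Comparing the tensor dimensions reported by the interpreter against the number of lines in the label file is a cheap way to check this.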

-

Hi @Karl-pan. There was a big refactor done on voxl-tflite-server not too long ago. Would you be able to test this on the dev branch?

Also please share your /etc/modalai/voxl-tflite-server.conf. I found a bug where the model path wasn't getting updated for custom models. I just made a PR to fix this, but you can manually edit it until that gets merged in.

Lastly, I'm not getting any seg fault with my custom models, so if you're able to share an example model, that could help determine whether the problem comes from the way you're exporting it.

Best,

Ted