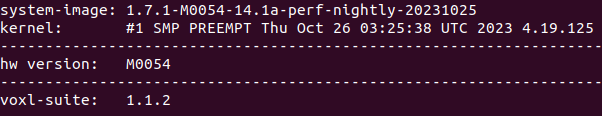

I've been working with tflite server and ran into an issue when running inference with my models.

With v0.3.1, the server works correctly with a standard model. However, when I switch to a custom model with only two labels, the server crashes with a segmentation fault.

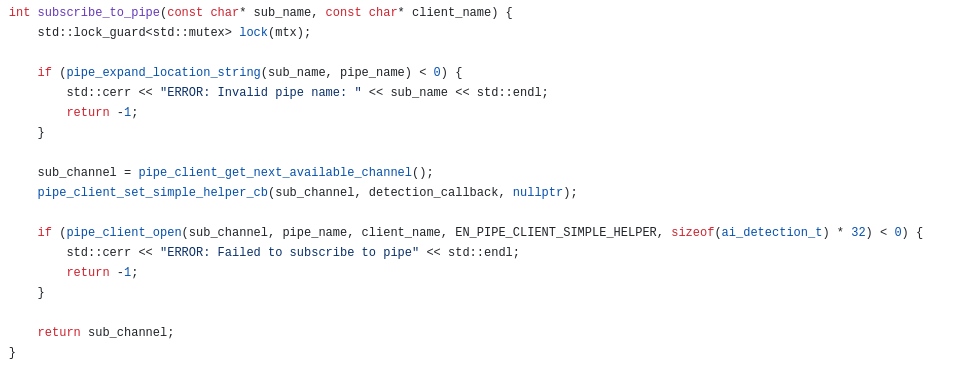

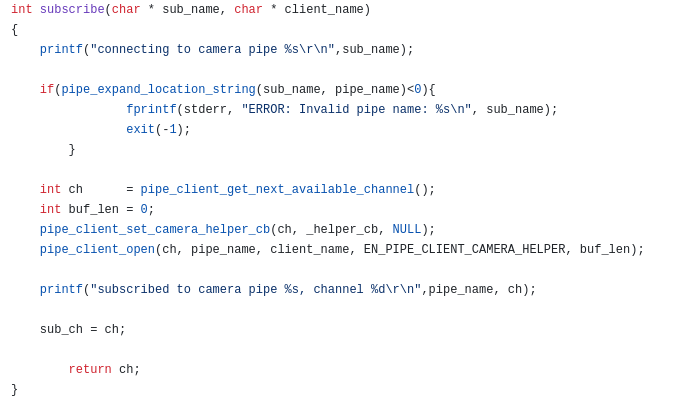

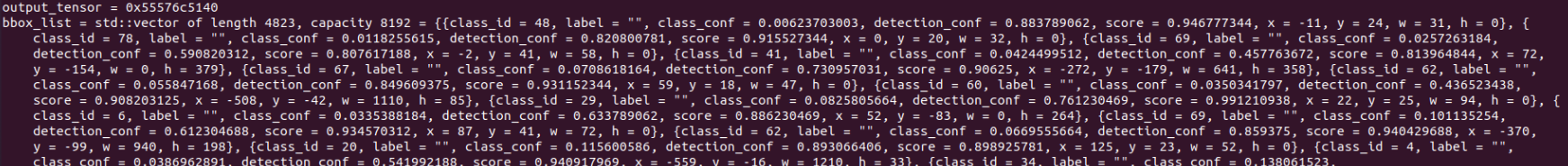

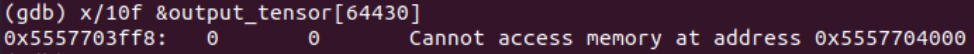

With v0.3.5, the server crashes regardless of whether I use the custom model or a standard one. With debug mode enabled, the crash occurs in `InferenceHelper::postprocess_yolov5`, specifically in `getbbox`, which attempts to read an invalid memory location through the `output_tensor` float pointer.
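One hypothesis I haven't been able to confirm: if the postprocessing assumes a fixed number of classes (for example the 80 COCO classes) instead of deriving it from the output tensor's shape, a two-label model would make `getbbox` read past the end of the buffer. Below is a minimal Python sketch of what I'd expect a bounds-checked decoder to look like, assuming the usual YOLOv5 export layout of `[1, num_anchors, 5 + num_classes]` (box center, box size, objectness, then one score per class). `decode_yolov5` and `Detection` are hypothetical names for illustration, not tflite server's actual code.

```python
# Hypothetical sketch, not tflite server's implementation.
# Assumes a flat YOLOv5 output buffer laid out as [1, num_anchors, 5 + num_classes].
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Detection:
    x: float  # top-left x
    y: float  # top-left y
    w: float
    h: float
    score: float
    class_id: int


def decode_yolov5(output: Sequence[float], num_anchors: int, num_classes: int,
                  conf_threshold: float = 0.25) -> List[Detection]:
    if num_classes < 1:
        raise ValueError("num_classes must be at least 1")
    # Each row holds cx, cy, w, h, objectness, then one score per class.
    stride = 5 + num_classes
    expected = num_anchors * stride
    if len(output) < expected:
        # A raw float* in C++ cannot detect this on its own; reading past the
        # end of the buffer is undefined behaviour (often a segfault).
        raise ValueError(f"output has {len(output)} floats, expected {expected} "
                         f"({num_anchors} anchors x {stride} values)")
    detections: List[Detection] = []
    for i in range(num_anchors):
        row = output[i * stride:(i + 1) * stride]
        cx, cy, w, h, obj = row[:5]
        class_scores = row[5:]
        # Pick the highest-scoring class for this anchor.
        class_id = max(range(num_classes), key=lambda c: class_scores[c])
        score = obj * class_scores[class_id]
        if score >= conf_threshold:
            detections.append(Detection(cx - w / 2, cy - h / 2, w, h, score, class_id))
    return detections
```

For scale, with the default 640×640 input YOLOv5 produces 3 × (80² + 40² + 20²) = 25,200 candidate rows, so a two-class model's output holds 25,200 × 7 = 176,400 floats, whereas a decoder assuming 80 classes would try to read 25,200 × 85 = 2,142,000. That would fit the v0.3.1 behaviour, but it wouldn't by itself explain v0.3.5 crashing with the standard model too, so there may be a separate layout or shape assumption involved there.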

Has anyone else encountered this issue, or does anyone have suggestions for debugging it further? Is this a bug in the latest version, or could it be related to how the models were exported? Any guidance would be appreciated.