EIS merge

-

@m-costa, this is ModalAI EIS 1.0. The computations are done within voxl-camera-server by processing the raw Bayer data directly (CPU and GPU). I actually have some updates and will share new videos shortly.

I have also started testing on a flying platform (Starling 2 Max) and will share initial dual-camera EIS results (front-facing and down-facing).

I am curious what platforms folks would be using for testing EIS. One of the ModalAI drones or something custom? If you are using a standard drone, I can document how to set up EIS for that type of drone, since there are a few things that need to be set up / verified in order for EIS to work properly.

Regarding rolling shutter correction (RSC), we have started implementing it and will probably have some initial results in 1-2 weeks.

Alex

-

@Alex-Kushleyev So this is a custom implementation, not using any of Qualcomm's built-in tools? If so, how are you planning on implementing the RSC? Reading through some of the QC documentation, it seems like the supported QC EIS versions (1.0, 2.6) for the RB5 only correct rolling shutter for linear motion on a per-frame basis. That doesn't help much on a high-vibration platform where the intra-frame motion is non-linear.

Are you planning on implementing X and Y rolling shutter corrections on a per-line basis? That would be sweet.

I'm looking to use this on a custom quadcopter that has pretty poor vibration isolation. Eager to see your results so far with the EIS.

-

@m-costa, yes, it is a completely custom implementation.

For rolling shutter compensation, we are working on per-line compensation (not just X and Y, but full 3D rotation, because the vibration can occur along all three axes x, y, and z). However, for this approach to work well in an environment with lots of high-frequency vibration, an IMU would need to be mounted directly on the camera (with very rigid mechanical coupling, so that the IMU picks up the exact vibrations that the camera is experiencing).
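A minimal sketch of the per-line idea, in Python for illustration only. It assumes gyro samples of (t, wx, wy, wz) in rad/s that are already rotated into the camera frame, a known frame start time, and a constant line readout time; all function names and parameters here are hypothetical and not part of voxl-camera-server. Each row gets its own capture time, and its full 3D rotation relative to a reference row is obtained by chaining the gyro samples between the two times.

```python
import math

IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


def mat_mul(a, b):
    """3x3 matrix product a @ b (matrices stored as lists of rows)."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose(a):
    """Transpose of a 3x3 matrix; for a rotation this is its inverse."""
    return [[a[j][i] for j in range(3)] for i in range(3)]


def rodrigues(wx, wy, wz, dt):
    """Rotation produced by a constant body rate (rad/s) held for dt seconds."""
    rate = math.sqrt(wx * wx + wy * wy + wz * wz)
    theta = rate * dt
    if theta < 1e-12:
        return [row[:] for row in IDENTITY]
    kx, ky, kz = wx / rate, wy / rate, wz / rate  # unit rotation axis
    c, s, v = math.cos(theta), math.sin(theta), 1.0 - math.cos(theta)
    return [
        [c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s],
        [ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s],
        [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v],
    ]


def integrate_rotation(gyro, t_start, t_end):
    """Orientation change from t_start to t_end (t_end >= t_start).

    gyro is a time-sorted list of (t, wx, wy, wz); each rate is held until the
    next sample (zero-order hold)."""
    R = [row[:] for row in IDENTITY]
    for i, (t, wx, wy, wz) in enumerate(gyro):
        t_next = gyro[i + 1][0] if i + 1 < len(gyro) else float("inf")
        lo, hi = max(t, t_start), min(t_next, t_end)
        if hi > lo:
            # body-frame rates compose by right-multiplication
            R = mat_mul(R, rodrigues(wx, wy, wz, hi - lo))
    return R


def row_rotations(gyro, frame_start, n_rows, line_time, ref_row):
    """Rotation of every row relative to ref_row, given a constant line readout time."""
    t_ref = frame_start + ref_row * line_time
    rotations = []
    for r in range(n_rows):
        t_r = frame_start + r * line_time
        if t_r >= t_ref:
            rotations.append(integrate_rotation(gyro, t_ref, t_r))
        else:
            # integrate forward in time, then invert
            rotations.append(transpose(integrate_rotation(gyro, t_r, t_ref)))
    return rotations
```

The per-row loop is deliberately naive; a real pipeline would more likely accumulate the rotation in a single pass over the gyro samples per frame and then use each row's rotation to warp that row.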

Unfortunately, we do not currently offer any cameras with a built-in IMU. However, VOXL2 / VOXL2 mini both have an additional SPI port which can be used for an IMU. The current approach is to use an external IMU in addition to the IMU on VOXL2 and compare the EIS results. voxl-imu-server already supports an external IMU over SPI.

I should also note that we are unlikely to test on an RB5-based platform (because it is EOL in terms of our support). I am not sure whether you were implying that you are using an RB5.

You should also investigate your camera mount. Vibration reduction is a whole separate subject, but make sure that the camera mount is very rigid and rigidly attached to the frame. Sometimes a little flex in the mount can amplify the existing vibrations, in which case the solution is actually to make the mount more rigid instead of isolating it with a soft material. The dual-IMU approach can also reveal whether the camera is vibrating more than the main board (VOXL2), by comparing the data from the two IMUs.
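To make the dual-IMU comparison concrete, here is a small Python sketch. It assumes both IMU streams have already been resampled to common timestamps and rotated into a common frame, and it compares the vibration content on one axis by removing slow motion with a moving-average high-pass and taking the RMS. The window length and the function names are illustrative choices, not voxl-imu-server features.

```python
import math


def highpass(samples, window):
    """Crude high-pass: subtract a centered moving average to remove slow motion."""
    n = len(samples)
    half = window // 2
    out = []
    for i in range(n):
        lo, hi = max(0, i - half), min(n, i + half + 1)
        out.append(samples[i] - sum(samples[lo:hi]) / (hi - lo))
    return out


def rms(values):
    return math.sqrt(sum(v * v for v in values) / len(values))


def vibration_ratio(cam_axis, board_axis, window=41):
    """RMS of the high-passed camera IMU axis over that of the board IMU axis.

    window=41 samples is about 10 ms at 4 kHz, an arbitrary choice for this sketch."""
    board = rms(highpass(board_axis, window))
    cam = rms(highpass(cam_axis, window))
    return cam / board if board > 0 else float("inf")
```

A ratio well above 1 on any axis would suggest that the camera (or its mount) is shaking more than the board, which points at mount flex rather than at the airframe itself.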

Speaking of the camera - what camera are you using on your quadcopter?

Finally, some new videos. No RSC yet.

- dual-camera EIS on Starling 2 Max (hand-carried): https://www.youtube.com/watch?v=-BA_nU4kjQs

- down-facing camera EIS on Starling 2 Max (flight): https://www.youtube.com/watch?v=-B5xKmBBCAc

Notes about the videos:

- the ROI tracking still needs some work (it has not been tuned for the down-facing use case)

- the down-facing camera picked up almost no vibration. Some rolling shutter artifacts can be observed in a few cases.

- the front camera was also tested, but due to vibration + rolling shutter, the video did not turn out great, so I did not post it. The camera mount needs a little bracing to remove flex and will be tested again. Once I fix the camera mount bracing issue, I can post the before and after videos.

- in the dual cam video, you can see some rolling shutter artifacts which appear as slanted vertical features and a little motion due to shaking. This type of distortion is the "easy" one to fix using RSC.
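Why a constant rotation is the "easy" case can be seen with a little arithmetic. Under a small-angle, pinhole-camera approximation, a constant yaw rate during readout shifts row r horizontally by roughly focal_px * yaw_rate * r * line_time. That shift grows linearly with r, so vertical edges become straight slanted lines that a single shear can undo. The numbers below (focal length in pixels, line time, yaw rate) are hypothetical and are not IMX412 specifications.

```python
def row_shift_px(row, yaw_rate, line_time, focal_px):
    """Approximate horizontal shift (px) of `row` relative to row 0 for a constant
    yaw rate (rad/s); small-angle pinhole approximation."""
    return focal_px * yaw_rate * row * line_time


def shear_slope(yaw_rate, line_time, focal_px):
    """Pixels of horizontal shift per row; constant, hence a straight slant."""
    return focal_px * yaw_rate * line_time


# Hypothetical example: 3000 px focal length, 10 us per line, 0.5 rad/s yaw.
total = row_shift_px(3040, 0.5, 10e-6, 3000.0)  # shift of the last row, about 45.6 px
```

With vibration, the rate changes within the frame, the shift is no longer linear in the row index, and a single shear is not enough, which is where per-line compensation comes in.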

Alex

-

@Alex-Kushleyev Sounds like we are pursuing the same sort of hardware setup. We have been using the SPI port connected to a 42688 IMU mounted directly on the rear of an OV64B sensor.

If you aren't targeting VOXL2/VOXL2 mini (both RB5-based), what hardware are you targeting?

-

@m-costa, the target platform is indeed VOXL2 / VOXL2 mini. Just to clarify, RB5 and VOXL2 both use the same SoC, the QRB5165. RB5 is the name of Qualcomm's development board. One of our EOL dev kits used RB5 boards.

Is your OV64B part of a Hadron module or standalone? We do have support for Hadron, and I was planning to test EIS with that camera as well.

Alex

-

@Alex-Kushleyev Ahh I see. I was just using RB5 as an abbreviation for qrb5165.

It is a standalone module, but since the Hadron just passes through the OV64B MIPI/I2C, it's quite similar.

Qualcomm advises using the SSC QUPs to interface with IMUs for EIS (I'm guessing for lower latency). Have you seen any differences in latency when comparing the SSC-connected 42688 onboard the VOXL2 vs the one connected to the generic QUP? Additionally, are you utilizing the 42688 interrupts to synchronize the data with the frames from the camera?

-

@m-costa,

The SSC can certainly achieve lower latency uncertainty (jitter) in the time overhead of doing an SPI FIFO read from the IMU sensor. This is because the SSC is typically doing a lot less than the CPU, but the SSC is still not a real-time processor (compared to a "bare metal" microcontroller implementation). However, I believe the IMU server on the CPU runs at high priority, and we also recently (6 months ago) made an update in the Linux kernel to increase the priority of the SPI interrupts. Here is the change that enables RT SPI message queue priority, if that is of interest: https://gitlab.com/voxl-public/system-image-build/meta-voxl2-bsp/-/commit/b5c3189536a80e8ea55846e7705dadbc5a597ff8

There is some logic in voxl-imu-server to estimate the timestamp of each sample, with a fudge factor that has been verified against running VIO (which estimates the time offset between the IMU and the global shutter camera). Here is a link to the icm42688 driver and the note about time sync: https://gitlab.com/voxl-public/voxl-sdk/services/qrb5165-imu-server/-/blob/master/server/src/icm42688.c#L68

There is also a timestamp filter which helps remove the timestamp jitter coming from the individual FIFO reads. Since the IMU sensor is running at a constant rate (in FIFO mode), there will not be any short-term fluctuations in the sample times on the sensor itself. The timestamp filter + fudge factor should do a good job of quickly latching on to the time sync.
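The idea behind such a timestamp filter can be illustrated with a simple first-order filter. This is only an assumption about how a filter of this kind could work, not a copy of the logic in icm42688.c. Because the sensor samples at a fixed period, the newest sample's time can be predicted from the previous estimate, and each jittery FIFO read time only nudges that prediction. The `fudge` parameter stands in for the constant latency offset mentioned above; the class name and the gain are hypothetical.

```python
class FifoTimestampFilter:
    """Assigns timestamps to constant-rate FIFO samples (illustrative sketch)."""

    def __init__(self, sample_period, alpha=0.05, fudge=0.0):
        self.dt = sample_period  # nominal IMU output period (s)
        self.alpha = alpha       # filter gain: smaller = smoother but slower to converge
        self.fudge = fudge       # constant latency offset (s), e.g. tuned against VIO
        self.t_last = None       # filtered timestamp of the newest sample

    def update(self, read_time, n_samples):
        """read_time: CPU time when the FIFO read completed. Returns n timestamps."""
        measured = read_time - self.fudge
        if self.t_last is None:
            self.t_last = measured
        else:
            # the sensor clock advanced exactly n periods since the last read
            predicted = self.t_last + n_samples * self.dt
            self.t_last = predicted + self.alpha * (measured - predicted)
        # newest sample gets t_last; older ones are spaced by the nominal period
        return [self.t_last - (n_samples - 1 - k) * self.dt for k in range(n_samples)]
```

A small gain rejects read jitter well but converges slowly. If the sensor's actual rate differs from the nominal period, this simple filter also settles with a constant lag, which is one reason a real implementation may estimate the period as well.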

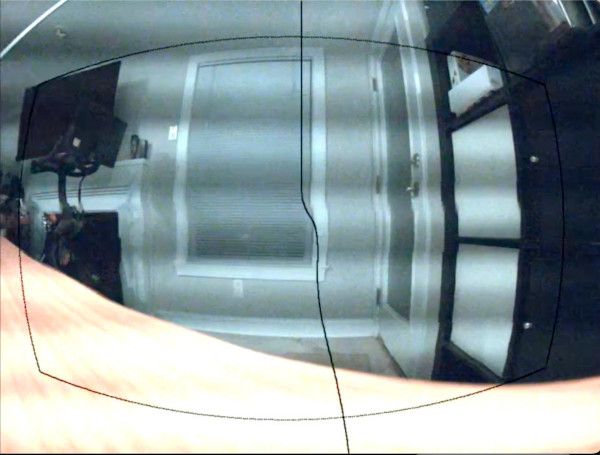

I have also recently overlaid IMU data at 4 kHz on a camera frame with a rolling shutter artifact (well, this is actually the change in the yaw estimate since the start of the frame, shown as the vertical line running down the middle). The vertical line shows deflection when the IMU / camera start rotating. It is not perfect, because in reality the rotation is 3D but only yaw is shown.

Please ignore the light "waves" here; they are from the LED lighting and the very low exposure. I reduced the exposure to 1 or 2 ms to avoid motion blur.
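An overlay like this can be reproduced roughly as follows: integrate the yaw-axis rate from the frame start time and map each IMU sample time to the image row being read out at that moment. This sketch assumes the yaw-axis rate has already been extracted in rad/s, a constant line readout time, and a pinhole focal length in pixels for converting angle to a horizontal offset. The function names are hypothetical, and the sign of the offset depends on the axis conventions.

```python
import math


def yaw_since(samples, t0):
    """Trapezoidal integral of yaw rate from t0 to each sample time >= t0.

    samples: time-sorted list of (t, rate_rad_s). Returns [(t, yaw_rad), ...]."""
    out = []
    yaw = 0.0
    prev_t = prev_w = None
    for i, (t, w) in enumerate(samples):
        if t < t0:
            continue
        if prev_t is None:
            # rate at t0, linearly interpolated from the preceding sample if there is one
            if i > 0:
                tb, wb = samples[i - 1]
                prev_w = wb + (w - wb) * (t0 - tb) / (t - tb)
            else:
                prev_w = w
            prev_t = t0
        yaw += 0.5 * (prev_w + w) * (t - prev_t)
        out.append((t, yaw))
        prev_t, prev_w = t, w
    return out


def overlay_points(yaw_curve, t0, line_time, n_rows, focal_px, center_x):
    """(x, row) points for drawing the deflection line over the frame."""
    pts = []
    for t, yaw in yaw_curve:
        row = (t - t0) / line_time  # row being read out at time t
        if row < n_rows:
            pts.append((center_x + focal_px * math.tan(yaw), row))
    return pts
```

As noted above, a single yaw curve only approximates what happens in the image; the full 3D rotation would be needed for an exact overlay.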

I do realize that the IMU sync fudge factor was tuned at 1 kHz (per the note in the link above) and I changed the frequency to 4 kHz, so I will definitely double-check this, but it looks OK based on the image. It is good enough for initial testing, at least.

The plan for now is to use the SPI IMU connected to the regular QUP, accessed from the CPU. Once the rolling shutter compensation pipeline is up and running, the sync can be tested. I am also planning to have a way to run the pipeline offline using logged RAW images and IMU data, replicating the exact real-time processing pipeline (so that different IMU time offsets can be tested for dev purposes).
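For the offline replay, one simple way to compare candidate IMU time offsets is a brute-force search: sample the gyro at shifted times and keep the offset that best matches a reference rotation-rate signal. The reference signal (for example, a rate estimated from image motion in the logged frames) is an assumption here, as are the function names; this is a generic technique, not necessarily what the planned pipeline will do.

```python
import bisect


def interp(ts, vs, t):
    """Linear interpolation of samples (ts sorted ascending); clamps at the ends."""
    if t <= ts[0]:
        return vs[0]
    if t >= ts[-1]:
        return vs[-1]
    i = bisect.bisect_right(ts, t)
    t0, t1 = ts[i - 1], ts[i]
    return vs[i - 1] + (vs[i] - vs[i - 1]) * (t - t0) / (t1 - t0)


def best_offset(gyro_t, gyro_w, ref_t, ref_w, candidates):
    """Return the candidate offset (s) minimizing the squared error between the
    reference rate and the gyro rate sampled at ref_t + offset."""
    best, best_err = None, float("inf")
    for off in candidates:
        err = sum((interp(gyro_t, gyro_w, t + off) - r) ** 2 for t, r in zip(ref_t, ref_w))
        if err < best_err:
            best, best_err = off, err
    return best
```

With real data this would be run per axis (or on the rate magnitude) over a window with plenty of motion, since a nearly static window makes every offset look equally good.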

Alex

-

@Alex-Kushleyev said in EIS merge:

> You will need to build the voxl-camera-server and voxl-portal packages from the appropriate branches. Hopefully you can do that by yourself. If not, I can post the test debs.

Hi @Alex-Kushleyev, I want to use the EIS features. Would you be able to point me in the right direction on how to set up a build environment and build from the voxl-camera-server eis branch? Thank you.

-

I am working on merging the current EIS features into our main branch; this should be done sometime next week.

Meanwhile, it would help if you briefly describe your use case for EIS, so I can make sure it is supported in the release. Are you using IMX412 camera?

Rolling shutter correction won't be supported yet, but I wanted to release what we have so far, which works pretty well under low-vibration conditions.

If you don't have the build environment set up for building voxl-camera-server, I can provide the deb package for you to test what is on the EIS branch right now.

Alex

-

@Alex-Kushleyev thank you so much. I can wait till the changes are merged and available to update directly on the voxl2.

Yes, I am using the IMX412 camera.

I can start off with whatever you have got for now and hope for a good video feed on my drone without any gimbal. As new changes come along, I will update.

I have never set up a build environment for voxl repos. I am used to building PX4 etc. with some of my custom changes. I would love to learn how to set up the build environment for the voxl repos, so if you can point me in the right direction, that would be great! I see in the docs, under "voxl SDK > custom voxl applications", there are some instructions.

Thank you!

-

Hi @h3robotics, thanks for your patience.

As of right now, the EIS branch has been updated with all the latest features from dev.

One big improvement over the prior EIS version is that each camera now supports up to 4 MISP outputs with different resolutions, frame rates, and encoder parameters, and EIS can be turned on or off for each stream.

Please hang tight; I just need to tie up some loose ends and add documentation. I will post an update, most likely tomorrow, and I will also share a voxl-camera-server.conf with usage examples.

Meanwhile, please make sure you can build the eis branch from here: https://gitlab.com/voxl-public/voxl-sdk/services/voxl-camera-server/-/tree/eis , using the voxl-cross:4.0 docker image, which is required.

Alex

-

Hi All,

Sorry for the delay again. We were chasing down a vibration issue that was causing significant rolling shutter artifacts on the front camera in my tests. Apparently, some of the lenses for IMX412 cameras have loose internal components, which vibrate in a way that is impossible to correct. On my test platform (Starling 2 Max), I had this issue, and no modifications to the frame improved anything. The last thing to try was to swap out the lens, and the issue was completely gone.

We will investigate the cause of the loose lenses and the solution. In my case, shaking the lens produced an audible rattle inside it. If anyone has a "shaky" lens, we will send a replacement.

I will post some sample videos of what a bad lens might look like.

The good news is that there is actually no major vibration issue on the Starling 2 Max, and I can move forward with the EIS software release. Stay tuned for another day or so!

Alex

-

Hi @Alex-Kushleyev

Some of the videos and photos taken with the IMX412 I have on hand were blurry due to vibration.

I tried various things but couldn’t resolve the issue; however, it was resolved after I replaced the lens with a different one.

Would it be possible to include a replacement lens with my current order?

Also, I have a few units in stock, and I’m concerned there might be similar issues with them.

-

@JP-Drone, I will double-check, but we should be able to send replacements. However, we first need to figure out what's going on with these lenses and make sure the replacements are good.

Here is an example of EIS running with bad and good lenses (just a short test):

bad: https://drive.google.com/file/d/1g3dCVs64bBuDhSWJ-ZiKMbyHUBmR7AgB/view?usp=drive_link

good: https://drive.google.com/file/d/1R6GCH3l2d6CbwRPCrMRbvD21e2yg3vfq/view?usp=drive_link

Please note that when testing indoors (low-light conditions), the rolling shutter "jello" may not be as obvious, since it gets smoothed out by the long exposure times.

Alex

-

The EIS branch is ready for initial testing. Please see the doc file:

https://gitlab.com/voxl-public/voxl-sdk/services/voxl-camera-server/-/blob/eis/voxl2-eis.md

You will need to build the camera server from this branch (and it's best to be able to do that anyway if you are experimenting with EIS). Please note that you will need the latest voxl-cross:V4.0 docker image to build the camera server.

The eis branch is synced to the latest dev as of today. I added basic params for specifying EIS behavior (eis_mode, eis_view, eis_follow_rate). Parameter usage is documented, and a basic voxl-camera-server.conf example is provided. Parameter names may change in the future, but the changes will be documented.

Please use the latest IMX412 driver as instructed. For best results, use an input resolution of 4040x3040; the output resolution can be anything, including 3840x2160. 4K60 output is supported (h265 strongly recommended), but you will not be able to view it via voxl-portal.

This release also supports EIS on 3 additional MISP channels (which can have different resolutions).

We will be focusing next on improving stabilized ROI behavior for smoother output and experimenting more with rolling shutter compensation.

Please try it out; I would be excited to get initial feedback.

Alex

-

Hi @Alex-Kushleyev

Happy to test it.

My IMX412 is mounted upside-down on J7-Lower (cam ID 2).

Could you provide a flipped version of the EIS driver, e.g. com.qti.sensormodule.imx412_fpv_flip_eis_2.bin?

Thanks a lot for all the support!

-

@JP-Drone, if your camera is flipped (rotated 180 degrees), you just need to update your extrinsics to tell EIS the orientation of the camera w.r.t. the IMU.

Instead of the right-side-up orientation

`"RPY_parent_to_child": [0, 90, 90]`

your extrinsics file should contain

`"RPY_parent_to_child": [0, 90, -90]`

In other words, your `/etc/modalai/extrinsics.conf` should contain the following entry:

`{ "parent": "imu_apps", "child": "<camera_name>", "T_child_wrt_parent": [0.0, 0.0, 0.0], "RPY_parent_to_child": [0, 90, -90] }`

(I am assuming VOXL2 is mounted in the normal orientation, right side up.)
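A quick way to check that the two extrinsics differ only by the camera being upside down is to compare their rotation matrices. This sketch assumes RPY_parent_to_child is applied as intrinsic X-Y-Z Tait-Bryan angles in degrees, i.e. R = Rx(roll)·Ry(pitch)·Rz(yaw); please verify that convention against the extrinsics documentation before relying on it. Under that assumption, the relative rotation between the two child frames is 180 degrees about the child z axis, which, with the usual camera convention, is the optical axis.

```python
import math


def mat_mul(a, b):
    """3x3 matrix product a @ b (matrices stored as lists of rows)."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose(a):
    return [[a[j][i] for j in range(3)] for i in range(3)]


def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def rpy_to_matrix(roll_deg, pitch_deg, yaw_deg):
    """Assumed convention: intrinsic X-Y-Z, R = Rx(roll) @ Ry(pitch) @ Rz(yaw)."""
    r, p, y = (math.radians(v) for v in (roll_deg, pitch_deg, yaw_deg))
    return mat_mul(mat_mul(rot_x(r), rot_y(p)), rot_z(y))


upright = rpy_to_matrix(0, 90, 90)
flipped = rpy_to_matrix(0, 90, -90)
# Rotation from the upright camera frame to the flipped one, in child coordinates;
# expected to be (numerically) diag(-1, -1, 1), i.e. 180 degrees about child z.
relative = mat_mul(transpose(upright), flipped)
```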

Please try.

Also, we recently merged the EIS feature into the dev branch of the camera server; we are still working on better documentation.

Alex

-

Initial docs are up : https://docs.modalai.com/camera-video/electronic-image-stabilization/

-

Hi @Alex-Kushleyev! Where can I find the IMX214 drivers for EIS? Thanks!