@Philemon-Benner It seems someone else had the same issue, but there was no further investigation of the problem: https://forum.modalai.com/topic/2125/voxl-mavlink-server-external-fc-bus-error?_=1688565579723

Posts made by Philemon Benner

RE: Voxl 1 voxl-mavlink-server bus error (posted in Software Development)

Voxl 1 voxl-mavlink-server bus error (posted in Software Development)

Hey,

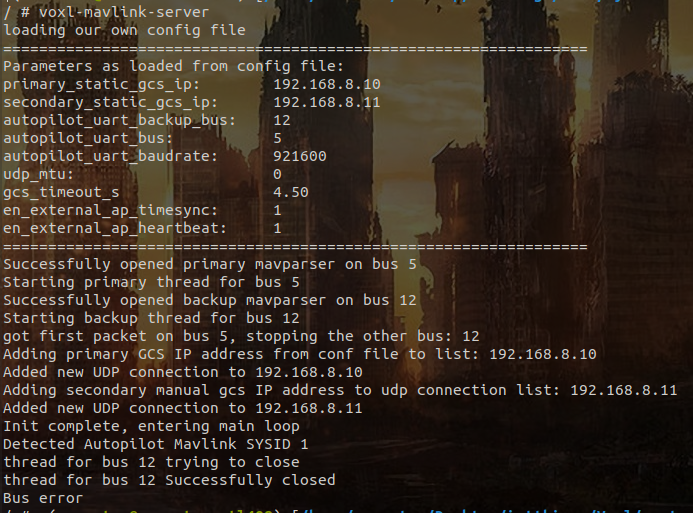

i am trying to move to new voxl-mavlink-server. After upgrading, when running voxl-mavlink-server i get the following error:

After that error the device goes offline, and i have to powercycle the Voxl in order to access it again. I configured voxl-mavlink-server with voxl-configure*. I am migrating from voxl-mavlink-server v0.3.0 wich runs perfectly fine, i also tried using a different Voxl but same issue persists.voxl-mavlink-server: v1.2.0

libapq8096-io: 0.6.0

system image: 3.8.0 / 4.0.0

hw version: VOXL

Missing newest apq8096 and qrb5165 proprietary (posted in Software Development)

Hey,

I want to upgrade voxl-cross to 2.5 with voxl-docker, but unfortunately the new proprietary ipk and deb packages are missing from the ModalAI protected downloads:

apq8096-proprietary_0.0.4.ipk

qrb5165-proprietary_0.0.3_arm64.deb

Are they stored somewhere other than the old location?

If not, it would be nice if you could add them.

RE: M0025-1 Hires Camera capture with opencv (posted in Ask your questions right here!)

@Chad-Sweet What is the reason for using the Gralloc allocator for buffer allocation on apq8096 instead of the ION memory allocator used in the qrb5165 implementation?

RE: M0025-1 Hires Camera capture with opencv (posted in Ask your questions right here!)

Thanks for the fast reply

M0025-1 Hires Camera capture with opencv (posted in Ask your questions right here!)

Hey,

I want to integrate your M0025 camera (https://docs.modalai.com/M0025/) into my application. I'm currently using OpenCV to capture video frames.

Is it required that I use the HAL3 module from https://gitlab.com/voxl-public/voxl-sdk/services/voxl-camera-server/-/tree/master/ ?

If yes, which libraries do I have to link into my application in order to use the HAL3 module?

And can I build these on my own, or are they built alongside the system image?

Platform: Voxl 1 apq8096

RE: voxl-modem-start.sh issue (posted in Software Development)

@tom Now it's working again, but it might be worth a look, since it's important that this always works. The parsing seems fine:

    apq8096 detected...
    'v2' len: 2
    Initializing v2 modem...
    Waiting for ttyUSB2...
    ttyUSB2

voxl-modem-start.sh issue (posted in Software Development)

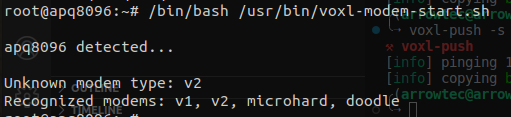

Hey,

i'm currently trying to do something similiar to modallink_relink.py, but i need the return value of voxl-modem-start.sh and it should be blocking, so i wanted to directly run that instead of using:systemctl restart voxl-modemBut when i'm running voxl-modem-start.sh from voxl terminal i get the following error:

The modem is configured, and it works when started through the service file. I also tried playing around with the if conditions in voxl-modem-start.sh, but without success.
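A blocking call that also returns the script's exit code can be sketched in Python with the standard-library `subprocess.run`, which waits for the child process to exit and exposes its `returncode`. This is a minimal sketch under assumptions, not how modallink_relink.py works: the install path in `MODEM_SCRIPT` and the helper names are hypothetical, and running the script this way does not by itself change the environment it runs in, so the error above may still occur.

```python
import subprocess
import sys

# Hypothetical install location; adjust to where voxl-modem-start.sh actually lives.
MODEM_SCRIPT = "/usr/bin/voxl-modem-start.sh"


def run_blocking(cmd, timeout_s=120):
    """Run cmd, block until it exits, and return (exit_code, combined_output).

    Raises subprocess.TimeoutExpired (after killing the child) if the
    process runs longer than timeout_s seconds.
    """
    result = subprocess.run(
        cmd,
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,   # merge stderr into stdout
        universal_newlines=True,    # return output as str (Python 3.5+)
        timeout=timeout_s,
    )
    return result.returncode, result.stdout


def main():
    """Start the modem script directly and propagate its exit code."""
    code, output = run_blocking(["bash", MODEM_SCRIPT])
    sys.stdout.write(output)
    return code
```

Calling `sys.exit(main())` from a wrapper script hands the caller the same exit status that voxl-modem-start.sh returned; the timeout guards against the script hanging, for example while waiting for ttyUSB2.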

System Image: 3.8.0

voxl-modem Version: 0.16.1

Fork Modal Pipe & Modal Json (posted in FAQs)

Hey,

I am using modal pipe and modal json in my project as external projects, but sadly the CMake install path is always /usr/lib64. So I wanted to ask: am I allowed to fork modal pipe and modal json and create a public GitLab project including them, in which I only change the CMake files?

RE: voxl-wifi softap issues (posted in Software Development)

Also, a list of the ISO/IEC 3166-1 country codes used in hostapd.conf could be added to print_usage:

    echo "List of available Country Codes: https://en.wikipedia.org/wiki/List_of_ISO_3166_country_codes"

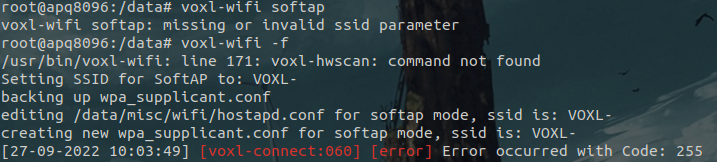

voxl-wifi softap issues (posted in Software Development)

Hey,

I'm currently working on setting my Voxl to softap mode. While doing that, I encountered some issues that I wanted to let you know about.

Voxl: Voxl 1

Voxl System Image: 3.8.0

Voxl Suite: 0.7.0

When I try to set the Voxl into softap factory mode, I get the following error:

    voxl-wifi -f

Output:

It seems like voxl-hwscan doesn't exist anymore in the new system image?

Also, when I tried editing the default password in /usr/bin/voxl-wifi (lines 5-7):

    DEFAULT_SSID="VOXL-BLA"
    DEFAULT_PASS="somesecretpassword"
    DEFAULT_COUNTRY_CODE="mycountrycode"

it still used the standard password 1234567890. So I had a look at hostapd.conf, and it seems there is also a wpa_passphrase variable at line 997 and a country code at line 99. Modifying voxl-wifi at line 54 to:

    function create_softap_conf_file () {
        echo "editing ${hostapd_conf_file} for softap mode, ssid is: ${ssid}"
        sed -i "/^ssid=/c\ssid=${ssid}" ${hostapd_conf_file}
        sed -i "/^wpa_passphrase=/c\wpa_passphrase=${DEFAULT_PASS}" ${hostapd_conf_file}
        sed -i "/^country_code=/c\country_code=${DEFAULT_COUNTRY_CODE}" ${hostapd_conf_file}
        echo "creating new $conf_file for softap mode, ssid is: ${ssid}"
        cat > ${conf_file} << EOF1
    country=${country_code}

seems to work. Maybe you could include that in future releases, or let me know if I did something nasty.
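The three `sed -i "/^key=/c\key=value"` calls in that function replace every hostapd.conf line that starts with a given key. The same idea as a small Python helper, for illustration only: the function names are hypothetical and not part of voxl-wifi, and the example values are the placeholders from the post. Like the sed version, it leaves commented-out entries such as `#ssid=` alone and does not add keys that are missing from the file.

```python
def set_conf_values(conf_text, values):
    """Return conf_text with each line starting with 'key=' replaced by 'key=value'.

    Mirrors sed -i "/^key=/c\\key=value": matching is a prefix test at the
    start of the line, so '#ssid=...' or 'ssid2=...' are not touched.
    """
    out_lines = []
    for line in conf_text.splitlines():
        for key, value in values.items():
            if line.startswith(key + "="):
                line = "{}={}".format(key, value)
                break
        out_lines.append(line)
    return "\n".join(out_lines) + "\n"


def update_conf_file(path, values):
    """Apply set_conf_values to a file in place (like sed -i)."""
    with open(path) as f:
        text = f.read()
    with open(path, "w") as f:
        f.write(set_conf_values(text, values))
```

For example, `update_conf_file(hostapd_conf_path, {"ssid": "VOXL-BLA", "wpa_passphrase": "somesecretpassword", "country_code": "DE"})`, where `hostapd_conf_path` is whatever path voxl-wifi uses for `hostapd_conf_file`.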

RE: voxl-tflite-server v0.3.0 apq8096 yolov5 (posted in FAQs)

@Matt-Turi Ah, OK, that sounds interesting and might be worth a try. Good to know. Thanks for the fast answer, as always.

Voxl-Wifi set softap password (posted in FAQs)

Hey,

As mentioned above, I want to change the default password of softap mode. Is the only way to do this to manually change the default password in the shell script?

Something like:

    echo DEFAULT_PASS="bla" >> /usr/bin/voxl-wifi
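Appending with `>>` puts the new assignment at the end of the script, after the code that already read DEFAULT_PASS, so it would not take effect when voxl-wifi runs. A sketch of the alternative, rewriting the existing `DEFAULT_PASS=` line in place, in Python. The function names are hypothetical; it assumes the assignment starts at the beginning of a line, as in the voxl-wifi excerpt above, and it enforces hostapd's limit of 8 to 63 printable ASCII characters for a WPA passphrase.

```python
import re

# Matches the whole DEFAULT_PASS=... assignment line (assumed to start at column 0).
_DEFAULT_PASS_LINE = re.compile(r"^DEFAULT_PASS=.*$", re.MULTILINE)
# Characters that would need escaping inside a double-quoted shell string.
_SHELL_SPECIAL = set('"\\$`')


def validate_wpa_passphrase(passphrase):
    """Raise ValueError unless passphrase is 8..63 printable ASCII characters."""
    if not 8 <= len(passphrase) <= 63:
        raise ValueError("WPA passphrase must be 8 to 63 characters long")
    if any(not 32 <= ord(c) <= 126 for c in passphrase):
        raise ValueError("WPA passphrase must be printable ASCII")


def set_default_pass(script_text, new_pass):
    """Return script_text with the existing DEFAULT_PASS line replaced in place."""
    validate_wpa_passphrase(new_pass)
    if any(c in _SHELL_SPECIAL for c in new_pass):
        raise ValueError("passphrase contains characters this sketch does not escape")
    new_line = 'DEFAULT_PASS="{}"'.format(new_pass)
    # A function replacement stops re from interpreting backslashes in new_line.
    new_text, count = _DEFAULT_PASS_LINE.subn(lambda _m: new_line, script_text, count=1)
    if count == 0:
        raise ValueError("no DEFAULT_PASS= line found")
    return new_text
```

Reading /usr/bin/voxl-wifi, passing its text through `set_default_pass`, and writing it back has the same effect as editing lines 5-7 by hand; a later voxl-wifi package upgrade may overwrite the change either way.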

RE: voxl-vision-px4 1.* not working on apq8096 (posted in Ask your questions right here!)

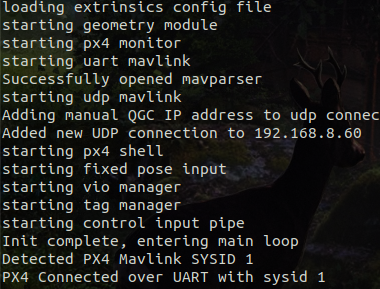

OK, I resolved the issue. You actually need to run both voxl-mavlink-server and voxl-vision-px4 to get the autopilot enabled for MAVSDK.

RE: voxl-tflite-server v0.3.0 apq8096 yolov5 (posted in FAQs)

@Matt-Turi Also, is there any particular reason for having a custom resize function instead of OpenCV's resize function?

RE: voxl-tflite-server v0.3.0 apq8096 yolov5 (posted in FAQs)

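@Matt-Turi Also, slightly off topic: you could add the following to InferenceHelper.h, inside the InferenceHelper class:

    // GPU delegate options, for more info see:
    // https://github.com/tensorflow/tensorflow/blob/v2.8.0/tensorflow/lite/delegates/gpu/delegate.h
    static constexpr TfLiteGpuDelegateOptionsV2 gpuOptions = {
        -1,                                               // allow precision loss (is_precision_loss_allowed)
        TFLITE_GPU_INFERENCE_PREFERENCE_SUSTAINED_SPEED,  // inference_preference
        TFLITE_GPU_INFERENCE_PRIORITY_MIN_LATENCY,        // inference_priority1
        TFLITE_GPU_INFERENCE_PRIORITY_AUTO,               // inference_priority2
        TFLITE_GPU_INFERENCE_PRIORITY_AUTO,               // inference_priority3
        TFLITE_GPU_EXPERIMENTAL_FLAGS_ENABLE_QUANT,       // experimental_flags
        1,                                                // max_delegated_partitions
        nullptr,                                          // serialization_dir (serialization stuff, probably don't touch)
        nullptr                                           // model_token (serialization stuff, probably don't touch)
    };

And maybe this in InferenceHelper.cpp:

    constexpr TfLiteGpuDelegateOptionsV2 InferenceHelper::gpuOptions;

(This out-of-class definition is needed with C++11/14, where an odr-used static constexpr data member requires one; since C++17 such members are implicitly inline.)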

With that, the GPU options are created at compile time and always stay the same, even if the default configuration changes in a future TensorFlow version.

Here is the function that you are calling when you are creating the options:

https://github.com/tensorflow/tensorflow/blob/v2.8.0/tensorflow/lite/delegates/gpu/delegate.cc#:~:text=TfLiteGpuDelegateOptionsV2-,TfLiteGpuDelegateOptionsV2Default,-() {

RE: voxl-tflite-server v0.3.0 apq8096 yolov5 (posted in FAQs)

@Matt-Turi Thanks for the answer. What do you mean by implementing a tracker that selectively feeds different portions of the frame? Something like running inference only on the ROI of the image?
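One possible reading of "running inference on the ROI": keep a model-sized window centred on the last tracked detection, run the detector only on that crop, and shift the resulting boxes back into full-frame coordinates. A minimal sketch of just the window arithmetic (not the tracker); the function names are hypothetical. With an OpenCV/NumPy image in Python, the crop itself would be `frame[y0:y1, x0:x1]`.

```python
def roi_around(center_x, center_y, roi_w, roi_h, frame_w, frame_h):
    """Return (x0, y0, x1, y1) of a roi_w x roi_h window centred on a tracked point.

    The window is shifted (not shrunk) so it stays fully inside the frame;
    if the frame is smaller than the ROI, the ROI is clamped to the frame size.
    """
    roi_w = min(roi_w, frame_w)
    roi_h = min(roi_h, frame_h)
    x0 = int(round(center_x - roi_w / 2.0))
    y0 = int(round(center_y - roi_h / 2.0))
    x0 = max(0, min(x0, frame_w - roi_w))
    y0 = max(0, min(y0, frame_h - roi_h))
    return x0, y0, x0 + roi_w, y0 + roi_h


def to_frame_coords(box, roi):
    """Map a detection box (x0, y0, x1, y1) in ROI pixels back to frame pixels."""
    dx, dy = roi[0], roi[1]
    return box[0] + dx, box[1] + dy, box[2] + dx, box[3] + dy
```

Because the window is shifted rather than shrunk, the crop always has exactly the model's input size, so no resize is needed and small, distant objects keep their native resolution.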

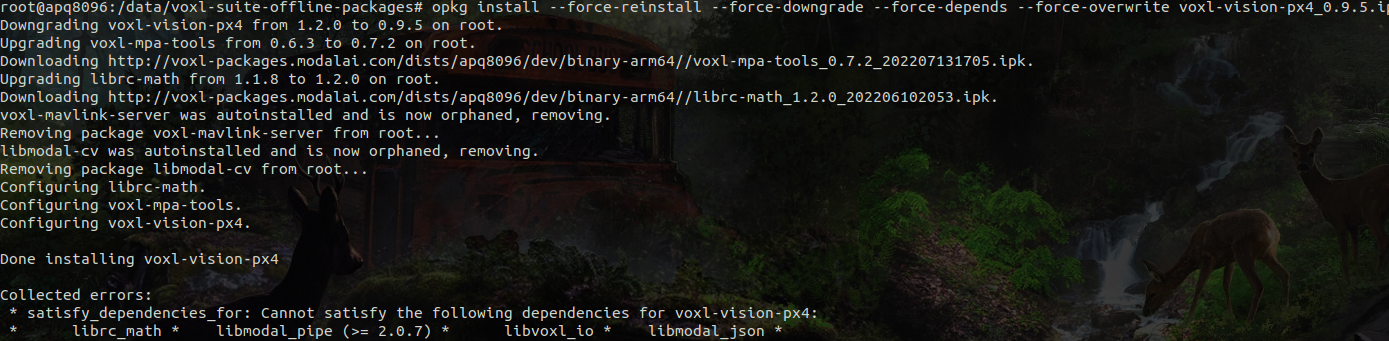

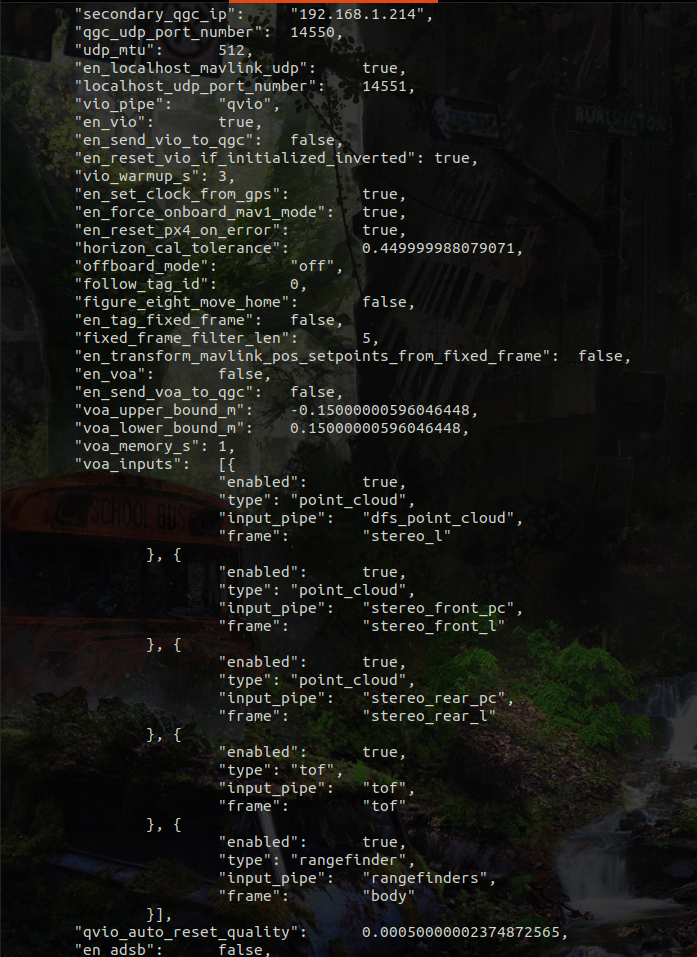

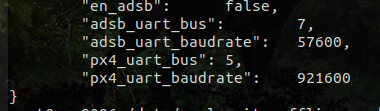

voxl-vision-px4 1.* not working on apq8096 (posted in Ask your questions right here!)

Hey,

I have recently moved to the new system image. Now I am having issues: the PX4 is not recognized by voxl-vision-px4:

voxl-vision-px4 (0.9.5):

With voxl-vision-px4 > 0.9.5, it's not detecting the PX4?

The old version works on the new system image, but I am getting the following errors:

Can I just ignore these?

Additional info:

System Image: 3.8.0

Voxl: apq8096

voxl-vision-px4 versions tried: 1.2.0, 1.0.5.

Config File:

RE: voxl-tflite-server v0.3.0 apq8096 yolov5 (posted in FAQs)

@Matt-Turi Is an integer-quantized model faster than a floating-point model? Yeah, the problem is that we are using a higher-resolution camera and flying at high altitudes, so cropping images down to a smaller resolution drastically changes the precision. Also, which input dimensions (camera resolution) are you using for testing, and have you also tested the model outside of your office?
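For reference, TensorFlow Lite's integer quantization stores each real value r as an 8-bit integer q together with a scale and zero point, so that r = scale * (q - zero_point). Whether the int8 model actually runs faster than the float (or fp16) one depends on the hardware and delegate executing it. Below is a minimal pure-Python sketch of that affine mapping; the function names and the example range are made up for illustration and are not TFLite APIs.

```python
def params_for_range(rmin, rmax, qmin=-128, qmax=127):
    """Choose (scale, zero_point) so that [rmin, rmax] maps onto [qmin, qmax].

    The range is widened to include 0 so that real 0.0 is exactly representable.
    """
    rmin, rmax = min(rmin, 0.0), max(rmax, 0.0)
    scale = (rmax - rmin) / float(qmax - qmin)
    if scale == 0.0:
        scale = 1.0  # degenerate all-zero range
    zero_point = int(round(qmin - rmin / scale))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """q = clamp(round(r / scale) + zero_point, qmin, qmax)."""
    return [max(qmin, min(qmax, int(round(r / scale)) + zero_point)) for r in values]


def dequantize(qvalues, scale, zero_point):
    """r ~= scale * (q - zero_point); off by at most scale / 2 for unclamped values."""
    return [scale * (q - zero_point) for q in qvalues]
```

Values outside the calibrated range are clamped (100.0 in the test below ends up as 127), which is one reason int8 models need representative calibration data.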

RE: voxl-tflite-server v0.3.0 apq8096 yolov5 (posted in FAQs)

Also, the model that I trained is slower than yours. Is that because of the bigger model input size? I use 640x640 and yours is 320x320; with your model I get around 41 ms and with mine around 110 ms per image.

Edit: I also used yolov5s, trained from scratch, and exported with the tflite and half options.
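A rough back-of-the-envelope check: YOLOv5 is fully convolutional, so its convolution work grows roughly in proportion to the number of input pixels. The sketch below only does that arithmetic for the numbers in this post; it is an approximation that ignores fixed per-image costs (pre-processing, NMS, delegate overhead) and any differences between the two models other than input size. The helper names are illustrative.

```python
def pixel_ratio(side_a, side_b):
    """Ratio of pixel counts for two square model inputs (side lengths in pixels)."""
    return float(side_a * side_a) / float(side_b * side_b)


def latency_ratio(ms_a, ms_b):
    """Ratio of two per-image latencies."""
    return float(ms_a) / float(ms_b)


# Numbers from the post: 640x640 custom model vs. 320x320 reference model.
pixels = pixel_ratio(640, 320)        # 4.0x as many input pixels
latency = latency_ratio(110.0, 41.0)  # about 2.68x the latency
# Fraction of the pixel growth that showed up as latency growth (about 0.67).
efficiency = latency / pixels
```

The measured slowdown (about 2.7x) is smaller than the 4x increase in pixels, which would fit input size being the main factor, with part of the per-image time not scaling with resolution.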