@thomas Hey there Thomas, thank you for your reply!

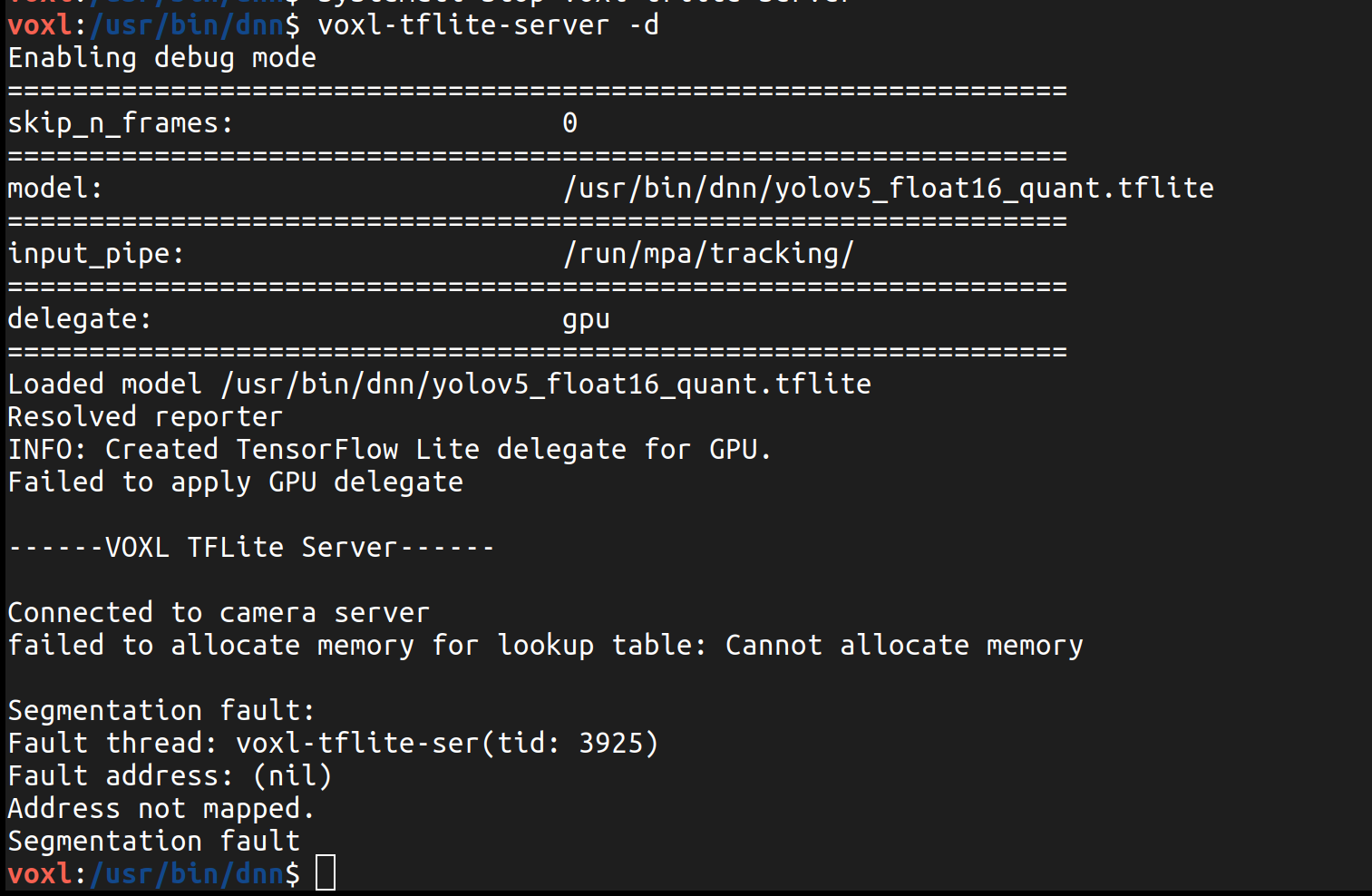

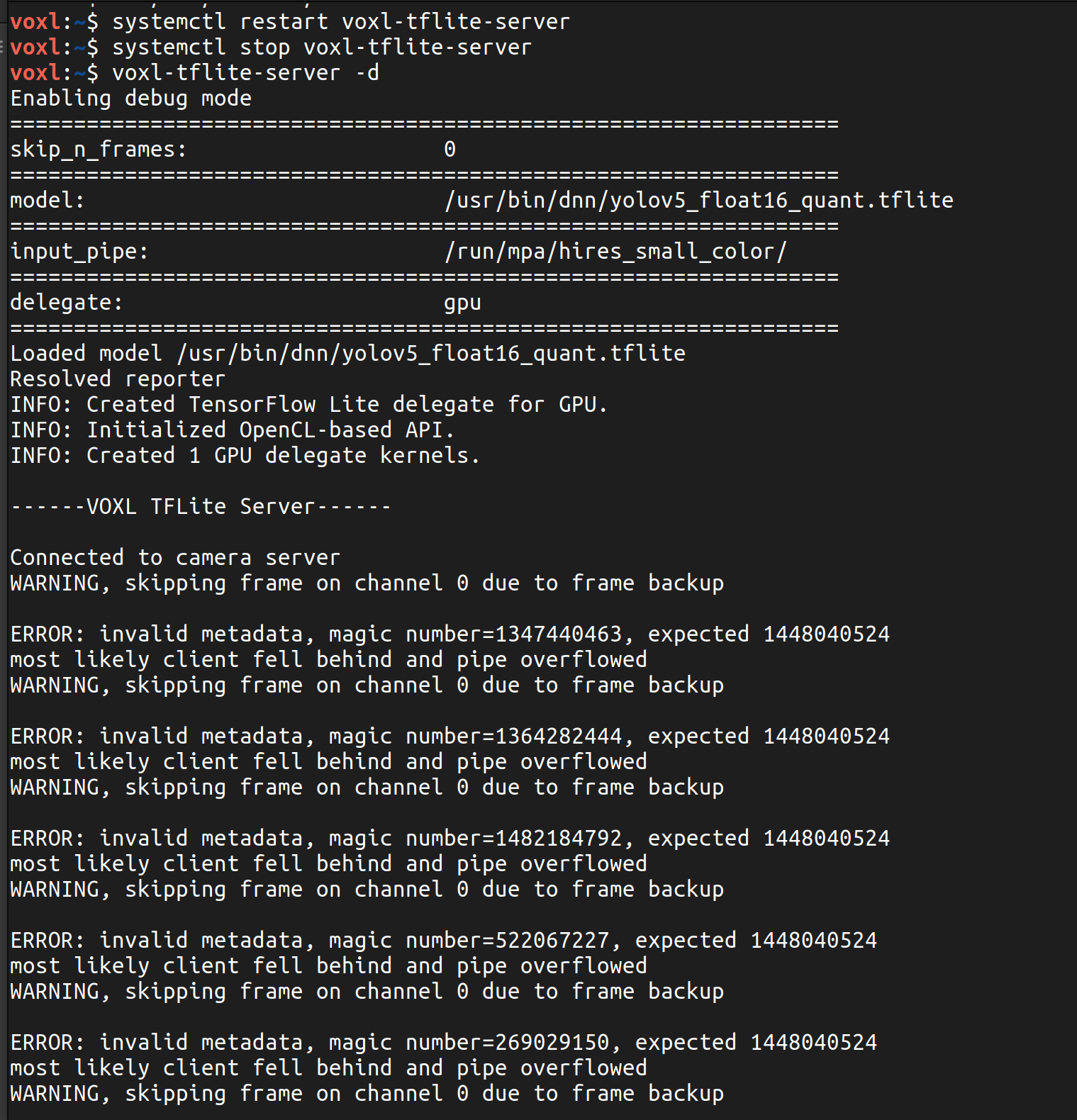

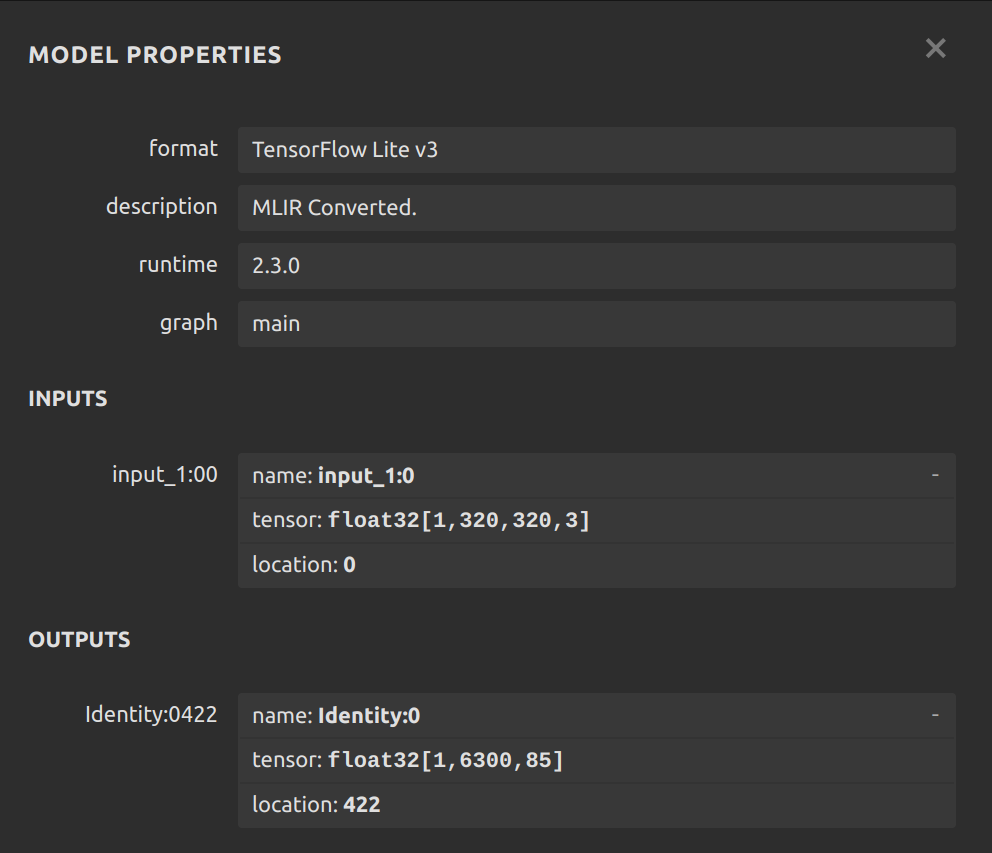

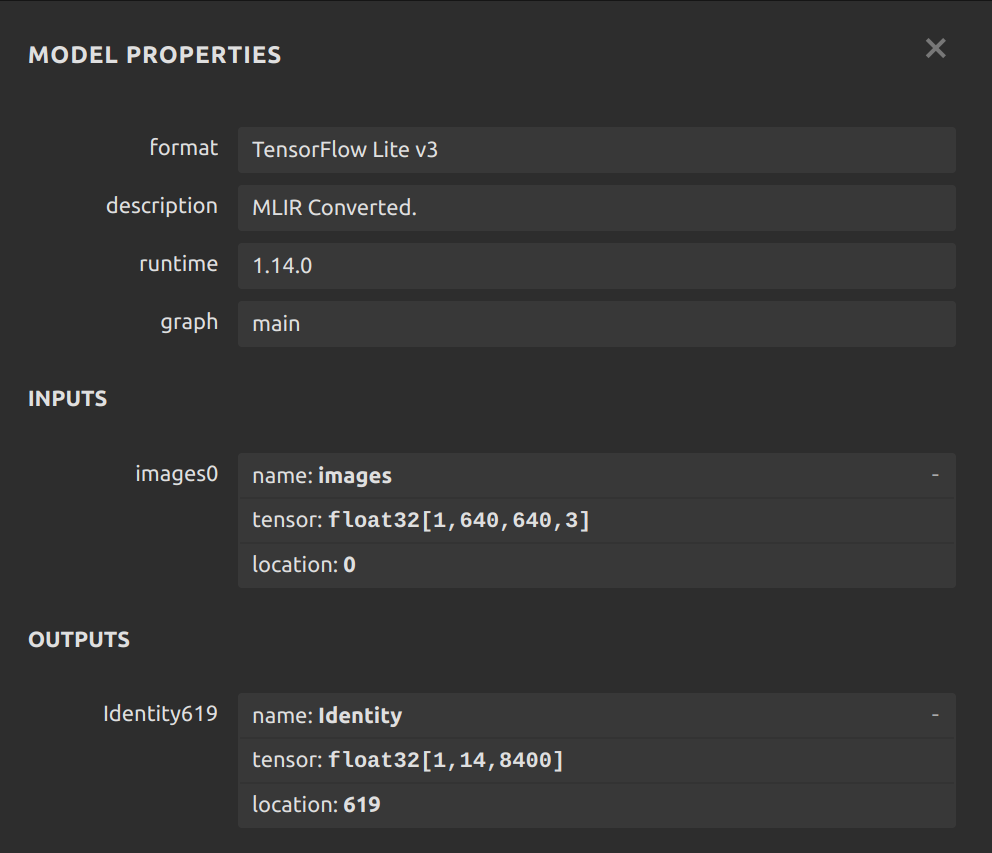

Understandable that it's harder on VOXL 1 than on VOXL 2. I've tested all the other models on board, and they produce results very similar to those described in the Deep Learning documentation guide. The YOLOv5 model already on board gives about 20 FPS with 40-50 ms inference times on the hires small color camera (the other pre-loaded models behave similarly on both the hires and tracking cameras). So it seems the VOXL 1 runs YOLO at a good speed, just not the model I uploaded. However, the one I uploaded is much bigger (180 MB) than the one that came on it (14 MB). I used the float16 quantization as described in the documentation, but should I try a smaller model like YOLOv5s rather than YOLOv5x?
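As a rough sanity check on the size gap, here is a back-of-the-envelope sketch. It assumes the approximate parameter counts listed in the Ultralytics YOLOv5 README (about 7.2M for YOLOv5s and 86.7M for YOLOv5x) and 2 bytes per weight for float16; it also assumes the pre-loaded model is YOLOv5s-sized, which I have not confirmed.

```python
# Approximate parameter counts in millions, taken from the Ultralytics YOLOv5
# README. These are assumptions; verify them against the model actually exported.
PARAMS_M = {"yolov5s": 7.2, "yolov5x": 86.7}

BYTES_PER_FP16_WEIGHT = 2  # float16 stores each weight in 2 bytes


def fp16_size_mb(params_millions: float) -> float:
    """Estimate weight storage in MB (10^6 bytes) for float16 weights."""
    return params_millions * 1e6 * BYTES_PER_FP16_WEIGHT / 1e6


if __name__ == "__main__":
    for name, params in PARAMS_M.items():
        print(f"{name}: ~{fp16_size_mb(params):.1f} MB")
    ratio = PARAMS_M["yolov5x"] / PARAMS_M["yolov5s"]
    print(f"yolov5x has ~{ratio:.0f}x the weights of yolov5s")
```

Under those assumptions the estimates come out close to the 14 MB and 180 MB files I'm seeing, so the uploaded model would have roughly 12x the weights of the pre-loaded one, which would plausibly explain the slowdown.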