@Matt-Turi I wanted to try the resnet architecture because its accuracy/speed ratio is a bit better than that of the mobilenet architecture. But it's not a hard requirement, so I'll just use the mobilenet architectures. Thanks!

Latest posts made by Steve Arias

-

RE: Trying to make my own custom tflite model for voxl-tflite-server (posted in Ask your questions right here!)

-

RE: Trying to make my own custom tflite model for voxl-tflite-server (posted in Ask your questions right here!)

@Matt-Turi Yep, it looks like 'image_tensor' and 'detection_classes' are the input and output names, respectively.

```python
import tensorflow as tf

converter = tf.compat.v1.lite.TFLiteConverter.from_frozen_graph(
    graph_def_file='faster_rcnn_resnet101_coco_2018_01_28/frozen_inference_graph.pb',
    input_arrays=['image_tensor'],
    input_shapes={'image_tensor': [1, 300, 300, 3]},
    output_arrays=['detection_classes'],
)
converter.use_experimental_new_converter = True
converter.allow_custom_ops = True
converter.target_spec.supported_types = [tf.float16]
tflite_model = converter.convert()

# Save the model.
with open('custom_model.tflite', 'wb') as f:
    f.write(tflite_model)
```

When I run the code now, I get the following:

```
(tflite_env) stevearias@Steves-Macbook-Pro tflite_env % python3 convert_to_tflite_model.py
2022-02-14 14:41:58.792670: I tensorflow/core/platform/cpu_feature_guard.cc:143] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA
2022-02-14 14:41:58.827082: I tensorflow/compiler/xla/service/service.cc:168] XLA service 0x7f9606206700 initialized for platform Host (this does not guarantee that XLA will be used). Devices:
2022-02-14 14:41:58.827104: I tensorflow/compiler/xla/service/service.cc:176] StreamExecutor device (0): Host, Default Version
Traceback (most recent call last):
  File "convert_to_tflite_model.py", line 22, in <module>
    main()
  File "convert_to_tflite_model.py", line 14, in main
    tflite_model = converter.convert()
  File "/Users/stevearias/Documents/src/ece-498-repo/python-scripts/tflite_env/tflite_env/lib/python3.7/site-packages/tensorflow/lite/python/lite.py", line 1084, in convert
    **converter_kwargs)
  File "/Users/stevearias/Documents/src/ece-498-repo/python-scripts/tflite_env/tflite_env/lib/python3.7/site-packages/tensorflow/lite/python/convert.py", line 496, in toco_convert_impl
    enable_mlir_converter=enable_mlir_converter)
  File "/Users/stevearias/Documents/src/ece-498-repo/python-scripts/tflite_env/tflite_env/lib/python3.7/site-packages/tensorflow/lite/python/convert.py", line 227, in toco_convert_protos
    raise ConverterError("See console for info.\n%s\n%s\n" % (stdout, stderr))
tensorflow.lite.python.convert.ConverterError: See console for info.
2022-02-14 14:42:07.697347: W tensorflow/compiler/mlir/lite/python/graphdef_to_tfl_flatbuffer.cc:144] Ignored output_format.
2022-02-14 14:42:07.697406: W tensorflow/compiler/mlir/lite/python/graphdef_to_tfl_flatbuffer.cc:147] Ignored drop_control_dependency.
Traceback (most recent call last):
  File "/Users/stevearias/Documents/src/ece-498-repo/python-scripts/tflite_env/tflite_env/bin/toco_from_protos", line 8, in <module>
    sys.exit(main())
  File "/Users/stevearias/Documents/src/ece-498-repo/python-scripts/tflite_env/tflite_env/lib/python3.7/site-packages/tensorflow/lite/toco/python/toco_from_protos.py", line 93, in main
    app.run(main=execute, argv=[sys.argv[0]] + unparsed)
  File "/Users/stevearias/Documents/src/ece-498-repo/python-scripts/tflite_env/tflite_env/lib/python3.7/site-packages/tensorflow/python/platform/app.py", line 40, in run
    _run(main=main, argv=argv, flags_parser=_parse_flags_tolerate_undef)
  File "/Users/stevearias/Documents/src/ece-498-repo/python-scripts/tflite_env/tflite_env/lib/python3.7/site-packages/absl/app.py", line 312, in run
    _run_main(main, args)
  File "/Users/stevearias/Documents/src/ece-498-repo/python-scripts/tflite_env/tflite_env/lib/python3.7/site-packages/absl/app.py", line 258, in _run_main
    sys.exit(main(argv))
  File "/Users/stevearias/Documents/src/ece-498-repo/python-scripts/tflite_env/tflite_env/lib/python3.7/site-packages/tensorflow/lite/toco/python/toco_from_protos.py", line 56, in execute
    enable_mlir_converter)
Exception: Merge of two inputs that differ on more than one predicate {s(SecondStagePostprocessor/BatchMultiClassNonMaxSuppression/map/while/PadOrClipBoxList/Greater:0,else), s(SecondStagePostprocessor/BatchMultiClassNonMaxSuppression/map/while/PadOrClipBoxList/cond/cond/pred_id/_564__cf__570:0,then), s(SecondStagePostprocessor/BatchMultiClassNonMaxSuppression/map/while/PadOrClipBoxList/cond/Greater/_563__cf__569:0,then)} and {s(SecondStagePostprocessor/BatchMultiClassNonMaxSuppression/map/while/PadOrClipBoxList/Greater:0,else), s(SecondStagePostprocessor/BatchMultiClassNonMaxSuppression/map/while/PadOrClipBoxList/cond/cond/pred_id/_564__cf__570:0,else), s(SecondStagePostprocessor/BatchMultiClassNonMaxSuppression/map/while/PadOrClipBoxList/cond/Greater/_563__cf__569:0,else)} for node {{node SecondStagePostprocessor/BatchMultiClassNonMaxSuppression/map/while/PadOrClipBoxList/cond/cond/Merge}}
```

Any idea on what the issue is?
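On the quantization settings in the script above (separate from the `Merge` error): TensorFlow's post-training float16 quantization guide sets `converter.optimizations = [tf.lite.Optimize.DEFAULT]` together with `converter.target_spec.supported_types = [tf.float16]`, and as I understand it the float16 setting alone does not trigger quantization. A minimal sketch that applies both; `configure_float16` is a hypothetical helper name, and the `tf_module` parameter exists only so it can be exercised without TensorFlow installed.

```python
def configure_float16(converter, tf_module=None):
    """Apply post-training float16 quantization settings to a TFLiteConverter.

    `tf_module` defaults to the real `tensorflow` package.
    """
    if tf_module is None:
        import tensorflow as tf_module
    # Assumption (per the TFLite float16 quantization guide): an optimization
    # flag is required for supported_types = [float16] to take effect.
    converter.optimizations = [tf_module.lite.Optimize.DEFAULT]
    converter.target_spec.supported_types = [tf_module.float16]
    return converter
```

It would be called on the converter before `converter.convert()`, e.g. `converter = configure_float16(converter)`.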

-

Trying to make my own custom tflite model for voxl-tflite-server (posted in Ask your questions right here!)

I created a virtualenv with Python 3.7 and TensorFlow 2.2.3. I am trying to convert the faster_rcnn_resnet101_coco model (listed as "faster_rcnn_resnet101_coco" in https://github.com/tensorflow/models/blob/master/research/object_detection/g3doc/tf1_detection_zoo.md) to a tflite model so that I can put it on the board and run it via voxl-tflite-server. I have read the voxl-tflite-server documentation, and I understand that quantization will enable GPU support for the model. Here's what my conversion code looks like so far:

```python
import tensorflow as tf

converter = tf.compat.v1.lite.TFLiteConverter.from_frozen_graph(
    graph_def_file='faster_rcnn_resnet101_coco_2018_01_28/frozen_inference_graph.pb',
    input_arrays=['normalized_input_image_tensor'],
    input_shapes={'normalized_input_image_tensor': [1, 300, 300, 3]},
    output_arrays=[
        'TFLite_Detection_PostProcess',
        'TFLite_Detection_PostProcess:1',
        'TFLite_Detection_PostProcess:2',
        'TFLite_Detection_PostProcess:3',
    ],
)
converter.use_experimental_new_converter = True
converter.allow_custom_ops = True
converter.target_spec.supported_types = [tf.float16]
tflite_model = converter.convert()

# Save the model.
with open('custom_model.tflite', 'wb') as f:
    f.write(tflite_model)
```

I get the following output:

```
(tflite_env) stevearias@Steves-Macbook-Pro tflite_env % python3 convert_to_tflite_model.py
2022-02-12 19:48:02.033093: I tensorflow/core/platform/cpu_feature_guard.cc:143] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA
2022-02-12 19:48:02.071654: I tensorflow/compiler/xla/service/service.cc:168] XLA service 0x7f925aaec760 initialized for platform Host (this does not guarantee that XLA will be used). Devices:
2022-02-12 19:48:02.071679: I tensorflow/compiler/xla/service/service.cc:176] StreamExecutor device (0): Host, Default Version
Traceback (most recent call last):
  File "convert_to_tflite_model.py", line 22, in <module>
    main()
  File "convert_to_tflite_model.py", line 8, in main
    output_arrays = ['TFLite_Detection_PostProcess', 'TFLite_Detection_PostProcess:1', 'TFLite_Detection_PostProcess:2', 'TFLite_Detection_PostProcess:3']
  File "/Users/stevearias/Documents/src/ece-498-repo/python-scripts/tflite_env/tflite_env/lib/python3.7/site-packages/tensorflow/lite/python/lite.py", line 780, in from_frozen_graph
    sess.graph, input_arrays)
  File "/Users/stevearias/Documents/src/ece-498-repo/python-scripts/tflite_env/tflite_env/lib/python3.7/site-packages/tensorflow/lite/python/util.py", line 128, in get_tensors_from_tensor_names
    ",".join(invalid_tensors)))
ValueError: Invalid tensors 'normalized_input_image_tensor' were found.
```

Did you encounter this error when trying to convert the ssdlite_mobilenet_v2_coco model to a tflite model?
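The `ValueError` says that no tensor named `normalized_input_image_tensor` exists in this frozen graph (the follow-up post above found that this model's input is `image_tensor`). A cheap pre-flight check before calling `from_frozen_graph` is to compare the requested names against the graph's node names, keeping in mind that a name like `TFLite_Detection_PostProcess:1` means output 1 of node `TFLite_Detection_PostProcess`. `missing_tensors` is a hypothetical helper, not a TFLite API.

```python
from typing import Iterable, List


def missing_tensors(requested: Iterable[str], node_names: Iterable[str]) -> List[str]:
    """Return the requested tensor names whose producing node is not in the graph.

    Only the part before ':' (the node name) is compared, since ':N'
    selects an output index of that node.
    """
    available = set(node_names)
    return [t for t in requested if t.split(":", 1)[0] not in available]
```

The node names would come from `[node.name for node in graph_def.node]` after parsing the `.pb` file into a `tf.compat.v1.GraphDef`; a non-empty result means `from_frozen_graph` will reject those names.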

-

RE: How to use custom libmodal_pipe in MPA service (posted in Ask your questions right here!)

@James-Strawson thanks for the suggestions!

-

How to use custom libmodal_pipe in MPA service (posted in Ask your questions right here!)

I've forked libmodal_pipe (https://gitlab.com/SteveArias1/libmodal_pipe) and made changes to the fork. I generated and installed an ipk package of this custom libmodal_pipe via `voxl-docker -i voxl-cross` and the usual commands that follow (`./install_build_deps.sh stable`, etc.). I'm able to install this custom version of libmodal_pipe on the VOXL board, and the board is running it as we speak.

I've made a new MPA service that uses libmodal_pipe. I've been using voxl-docker to build this service and install it on the VOXL board. Specifically, I use the voxl-cross docker image and run a custom `./install_build_deps.sh` script, which downloads libmodal_pipe, libmodal_json and voxl-mavlink from the stable opkg repo (http://voxl-packages.modalai.com/stable).

I want to use a custom function from my custom libmodal_pipe library in my MPA service. How can I use the custom libmodal_pipe library that I have? I can't use opkg to get it, since the stable repo only has the stable ipk packages that ModalAI provides by default.

-

using boost c++ library on VOXL (posted in Ask your questions right here!)

I want to use the boost C++ library (https://www.boost.org/) in one of the MPA services (voxl-tflite-server, https://gitlab.com/voxl-public/modal-pipe-architecture/voxl-tflite-server). I read a post where someone was trying to use `apt-get` functionality, and the answer was to look at the documentation on running Ubuntu on VOXL (https://docs.modalai.com/docker-on-voxl/). I have been developing on VOXL with the following dev flow:

- Make changes on my local PC running Ubuntu
- Run `voxl-docker -i voxl-cross` on the PC, which runs the voxl-cross docker image
- In the docker image:
  - Run `./install_build_deps.sh stable`, which installs the dependencies of the MPA service via opkg
  - Build via `./build.sh`
  - Make the ipk package via `./make_package.sh`
  - Exit the docker image
- Run `install_on_voxl.sh`, which pushes the ipk package to the VOXL board via ADB and installs it via opkg

Do I need to create my own custom docker image that has boost installed on it, or can I install boost on my voxl-cross docker image?

-

RE: hires not running (posted in Modal Pipe Architecture (MPA))

This is what I get on voxl-portal.

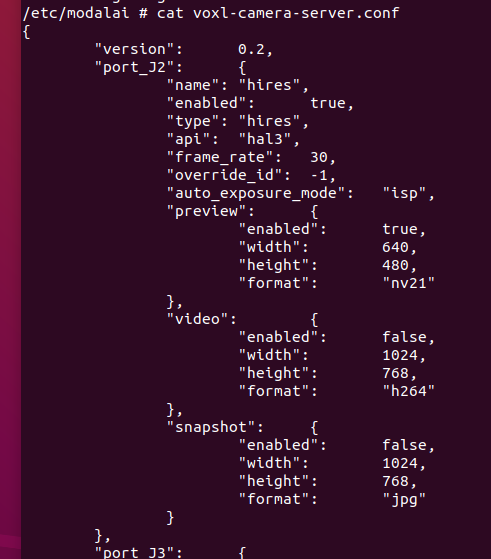

It looks like the preview for hires is enabled.

-

RE: hires not running (posted in Modal Pipe Architecture (MPA))

I'm asking because voxl-tflite-server isn't receiving any camera frames. It used to work fine when hires was up and running, but for some reason hires is gone and I don't know how to start it up again.

-

hires not running (posted in Modal Pipe Architecture (MPA))

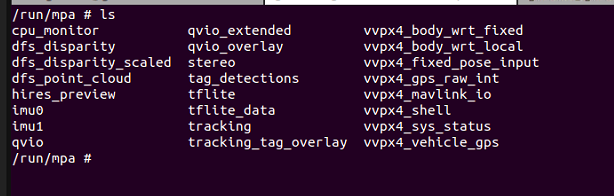

I see that hires_preview is running but not hires. How do I start up hires?
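To check which camera pipes actually exist on the board (for example, whether `hires` is missing while `hires_preview` is present), a small script can look for the pipe directories. This is a sketch under stated assumptions: that MPA pipes are created as directories under `/run/mpa/` (libmodal_pipe's default location, as far as I know) and that a Python 3 interpreter is available on the board; `pipe_status` is a hypothetical helper. Listing the same directory with `ls /run/mpa` from `adb shell` would give the same information.

```python
import os
from typing import Dict, Iterable

# Assumption: MPA pipes live as directories under /run/mpa/ (libmodal_pipe's
# default base directory). Adjust if your setup differs.
MPA_BASE_DIR = "/run/mpa"


def pipe_status(names: Iterable[str], base_dir: str = MPA_BASE_DIR) -> Dict[str, bool]:
    """Map each pipe name to whether a directory for it exists under base_dir."""
    return {name: os.path.isdir(os.path.join(base_dir, name)) for name in names}


if __name__ == "__main__":
    for name, present in pipe_status(["hires", "hires_preview"]).items():
        print(f"{name}: {'present' if present else 'MISSING'}")
```

If `hires` shows up as missing, the producer of that pipe is not publishing it, which would explain voxl-tflite-server receiving no frames.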