Upgrade TensorFlow version in tflite-server

-

Hey,

I am currently trying to make a custom model for tflite-server, and I am running into the following error when trying to run inference on the model:

Resolved reporter
ERROR: Unsupported data type 14 in tensor
ERROR: Unsupported data type 14 in tensor
ERROR: Unsupported data type 14 in tensor
ERROR: Unsupported data type 14 in tensor
ERROR: Unsupported data type 14 in tensor
ERROR: Unsupported data type 14 in tensor

On Stack Overflow there is a suggestion to upgrade the TensorFlow version. My question is: can I just upgrade the TensorFlow version used by tflite-server, or is that not possible?

Stackoverflow Link: https://stackoverflow.com/questions/70297027/valueerror-unsupported-data-type-14-in-tensor

Model: EfficientDet-D0 from the TF2 Model Zoo.

PS: It would be nice if the docs for custom training mentioned two things. First, tflite-server only accepts models named like the ones that ship with it. Second, the input tensor names vary from model to model when converting to TFLite.

Update:

I tried the following TF2 Model Zoo models:

SSD MobileNet v2 320x320

SSD MobileNet V2 FPNLite 320x320

Both give the same output in tflite-server:

Loaded model /usr/bin/dnn/ssdlite_mobilenet_v2_coco.tflite
Resolved reporter
INFO: Created TensorFlow Lite delegate for GPU.
GPU acceleration is SUPPORTED on this platform
ERROR: Attempting to use a delegate that only supports static-sized tensors with a graph that has dynamic-sized tensors.
Failed to apply delegate
------Setting TFLiteThread to ready!!
W: 300 H: 300 C:3
------Popping index 0 frame 872 ...... Queue size: 1
Image resize time: 3.98ms

After that it just hangs.

-

Also, in some thread in the forum (I can't find it anymore), you mentioned that TF1 Model Zoo models work and that some of the TF2 Model Zoo models worked. Can you tell me which TF2 Model Zoo models worked on VOXL?

-

Hi @Philemon-Benner,

Upgrading the tensorflow version has not been possible yet due to the need for a more current gcc compiler. I plan on trying to get around this, but for now the latest version we can run is 2.2.3.

For your unsupported data type error, do you know which data type it is complaining about in your model? This may be an easy conversion to something that is supported.
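As far as I know, the number in that error is an index into the TensorType enum of the TensorFlow Lite flatbuffer schema (schema.fbs). Below is a small lookup sketch. It assumes the numbering used by recent TensorFlow releases. Under that numbering, 14 is VARIANT, a type that an older runtime such as 2.2.3 may not be able to load:

```python
# Sketch: map the numeric code from "Unsupported data type N in tensor" to a name.
# ASSUMPTION: the numbering follows the TensorType enum in the TensorFlow Lite
# flatbuffer schema (schema.fbs) of recent TensorFlow releases.
TFLITE_TENSOR_TYPES = {
    0: "FLOAT32",
    1: "FLOAT16",
    2: "INT32",
    3: "UINT8",
    4: "INT64",
    5: "STRING",
    6: "BOOL",
    7: "INT16",
    8: "COMPLEX64",
    9: "INT8",
    10: "FLOAT64",
    11: "COMPLEX128",
    12: "UINT64",
    13: "RESOURCE",
    14: "VARIANT",
    15: "UINT32",
    16: "UINT16",
}


def tensor_type_name(code: int) -> str:
    """Return the schema name for a TFLite tensor type code, or a placeholder."""
    return TFLITE_TENSOR_TYPES.get(code, f"UNKNOWN({code})")


if __name__ == "__main__":
    print(tensor_type_name(14))
```

RESOURCE and VARIANT tensors usually come from TensorFlow-specific constructs (for example resource variables or tensor lists) that survived conversion. For those, re-exporting through a TFLite-oriented export path may be a more practical fix than a data type conversion.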

For the static-sized tensor error, this is either due to a conversion error with undefined input/output tensors or an unsupported operator in your graph that produces a dynamically shaped output. See this conversation: https://github.com/tensorflow/tensorflow/issues/38036. From our docs on using your own model, I explicitly define the input arrays, input shapes, and output arrays in order to create static tensors for input/output. I will update the docs today and try to make this process clearer, including your suggestions.
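Here is a rough sketch of what explicitly defining the input arrays, input shapes, and output arrays can look like for an SSD graph exported with the Object Detection API's export_tflite_ssd_graph.py. The helper only assembles a TF1 tflite_convert command line and rejects shapes that are not fully static. It does not run the converter. The tensor names are the defaults that export script usually produces, and the file names are placeholders. Check both against your own graph (for example in Netron) before using it:

```python
import shlex
from typing import List, Sequence

# Default tensor names of an SSD graph exported with export_tflite_ssd_graph.py.
# ASSUMPTION: verify these against your own graph, e.g. in Netron.
SSD_INPUT = "normalized_input_image_tensor"
SSD_OUTPUTS = (
    "TFLite_Detection_PostProcess",
    "TFLite_Detection_PostProcess:1",
    "TFLite_Detection_PostProcess:2",
    "TFLite_Detection_PostProcess:3",
)


def build_tflite_convert_cmd(graph_def: str, output: str,
                             input_shape: Sequence[int],
                             input_array: str = SSD_INPUT,
                             output_arrays: Sequence[str] = SSD_OUTPUTS) -> List[str]:
    """Assemble a TF1 tflite_convert call with a fully static input shape."""
    if not input_shape or any(d is None or int(d) <= 0 for d in input_shape):
        # Unknown (-1/None) dimensions leave the input dynamic, which is one
        # cause of the "static-sized tensors" GPU delegate error.
        raise ValueError(f"input shape must be fully static, got {list(input_shape)}")
    return [
        "tflite_convert",
        f"--graph_def_file={graph_def}",
        f"--output_file={output}",
        f"--input_arrays={input_array}",
        f"--input_shapes={','.join(str(int(d)) for d in input_shape)}",
        f"--output_arrays={','.join(output_arrays)}",
        "--allow_custom_ops",  # TFLite_Detection_PostProcess is a custom op
    ]


if __name__ == "__main__":
    cmd = build_tflite_convert_cmd("tflite_graph.pb", "detect.tflite", [1, 300, 300, 3])
    print(shlex.join(cmd))
```

A quantized conversion needs extra flags (inference type, mean and standard deviation values), which are left out of this sketch.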

On the drop-in functionality of tf1/tf2 model zoo variants, I cannot explicitly say which models work or not since I have not tested every variant. In general though, the tf1 model zoo is a safer resource as many of the tf2 models use unsupported architectures for our current tensorflow version.

-

@Matt-Turi Thank you for your fast answer, I will have a look at your suggestions.

-

Update:

So, for anyone who is facing the same problems as me:

1. If you want to train using TF2, you can't really take models from the TF1 Model Zoo. The TF2 feature extractors are not compatible with the TF1 ones. TensorFlow mentions that every TF1 model can be used with later versions, but that information may be outdated.

2. For the TF1 Model Zoo, go with TF 1.15.1; for the TF2 Model Zoo, just take the newest TF version.

3. For TF2, take an SSD model from the TF2 Model Zoo (only SSD models are supported when exporting for TFLite).

4. Then, assuming you have set up the Model Zoo directory and installed all the pip packages it needs:

4.1 Go to models/research/object_detection.

4.2 Use export_tflite_ssd_graph.py.

4.3 Use the code mentioned in the ModalAI docs to make the TFLite model.

4.4 Put the TFLite model on the VOXL.

4.5 cd to the directory where your_model.tflite is and type:

mv your_model.tflite /usr/bin/dnn/ssdlite_mobilenet_v2_coco.tflite

Why rename the model? Somewhere in ModalAI's tflite-server code there is a line that only accepts a model with that name. If you don't rename it, you will get a "Model is not supported" error.

4.6 Replace the classes in the following file with your own classes:

vi /usr/bin/dnn/coco_labels.txt

4.7 Run tflite-server.
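Steps 4.5 and 4.6 can also be scripted. The helper below is hypothetical. It assumes that the older tflite-server expects the fixed model name and the /usr/bin/dnn paths from those steps. It also assumes the labels file holds one class name per line in class-index order, so compare with the shipped coco_labels.txt before overwriting it. The stock files are kept once as .bak copies:

```python
import shutil
from pathlib import Path
from typing import Iterable

# Names taken from steps 4.5 and 4.6; the server of that era looked for this exact file name.
EXPECTED_MODEL_NAME = "ssdlite_mobilenet_v2_coco.tflite"
LABELS_NAME = "coco_labels.txt"


def install_custom_model(model: str, class_names: Iterable[str],
                         dnn_dir: str = "/usr/bin/dnn") -> Path:
    """Hypothetical helper: copy a custom model under the expected name and rewrite the labels.

    ASSUMPTION: the labels file is one class name per line, in class-index order.
    """
    target_dir = Path(dnn_dir)
    model_dst = target_dir / EXPECTED_MODEL_NAME
    labels_dst = target_dir / LABELS_NAME
    # Back up the stock files only once, so repeated runs don't overwrite the originals.
    for existing in (model_dst, labels_dst):
        backup = existing.with_name(existing.name + ".bak")
        if existing.exists() and not backup.exists():
            shutil.copy2(existing, backup)
    shutil.copy2(model, model_dst)
    labels_dst.write_text("".join(f"{name}\n" for name in class_names))
    return model_dst
```

For example, install_custom_model("your_model.tflite", ["class_a", "class_b"]) would do steps 4.5 and 4.6 in one go, if Python 3 is available where you run it; otherwise the mv/vi commands above do the same job.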

For me, that worked with SSD MobileNet v2 320x320 (don't get confused: it's actually 300x300, I don't know why) from:

https://github.com/tensorflow/models/blob/master/research/object_detection/g3doc/tf2_detection_zoo.md

with the base model given there. I'll have to see if it really works after training.

I will probably make a GitHub repository in the future, when I have time, featuring:

+ setting up TF2 and TF1 on your local machine with a GPU

+ training

+ exporting to TFLite and putting the model on the VOXL

because it was such a pain in the ass to set all this up without being familiar with machine learning, and with poor documentation.

-

@Matt-Turi Thank you for updating your tflite-server code/documentation to adapt better to custom models.

-

@Philemon-Benner No problem, I appreciate you sharing some of your problems and solutions as well. There are quite a few quirks that come with using TensorFlow Lite. I strongly recommend setting up two separate virtualenvs on a host PC, one for TF2 and one for TF1. Add some helper scripts for things like post-training conversion, plus some of the handy TensorFlow model retraining scripts. If that is a bit too confusing, tools like Google Colab can make your TensorFlow workflow a bit easier to manage, if you are willing to sacrifice some training speed.

-

@Matt-Turi So I tried using the new tflite-server 0.2.0, first with my own model. It loaded the model successfully but gave the following error:

CUSTOM TFLite_Detection_PostProcess: Operation is not supported.

So I thought my model had the wrong post-processing. But in Netron everything looked fine, and quantization also completed without errors. Next I tried your ssdlite_mobilenet_v2_coco.tflite and got the same error as above. It still worked for about half a minute, but then it suddenly hung and SSH reported a broken pipe. Do you know if I installed the dev tflite-server wrong, or if it could be something else? Full output with the ssdlite_mobilenet_v2_coco.tflite model:

voxl:~$ voxl-tflite-server -t -d
Enabling timing mode
Enabling debug mode

=================================================================
skip_n_frames: 5

=================================================================
model: /usr/bin/dnn/ssdlite_mobilenet_v2_coco.tflite

=================================================================
input_pipe: /run/mpa/uvc/

=================================================================
delegate: gpu

=================================================================
Loaded model /usr/bin/dnn/ssdlite_mobilenet_v2_coco.tflite
Resolved reporter
INFO: Created TensorFlow Lite delegate for GPU.
ERROR: Next operations are not supported by GPU delegate:
CUSTOM TFLite_Detection_PostProcess: Operation is not supported.
First 114 operations will run on the GPU, and the remaining 1 on the CPU.
INFO: Initialized OpenCL-based API.
------VOXL TFLite Server------
Connected to camera server
Detected: 0 person, Confidence: 0.81
Detected: 0 person, Confidence: 0.79
Detected: 0 person, Confidence: 0.78
Detected: 0 person, Confidence: 0.79
Detected: 0 person, Confidence: 0.76
Detected: 0 person, Confidence: 0.75
Detected: 0 person, Confidence: 0.71
Detected: 0 person, Confidence: 0.70
Detected: 0 person, Confidence: 0.72
Detected: 0 person, Confidence: 0.75
Detected: 0 person, Confidence: 0.74
Detected: 0 person, Confidence: 0.79
Detected: 0 person, Confidence: 0.78
Detected: 0 person, Confidence: 0.78
Detected: 0 person, Confidence: 0.78
Detected: 0 person, Confidence: 0.78
Detected: 0 person, Confidence: 0.77
Detected: 0 person, Confidence: 0.78
client_loop: send disconnect: Broken pipe
ubuntu@user:~$

Installation of the dev tflite-server:

voxl-configure-opkg dev
opkg update
opkg install tflite-server

It throws some errors with existing installations, but when I repeat the steps above it works.

-

OK, so it looks like it's crashing when I switch to the voxl-portal tflite camera stream. It works for two or three seconds, and I can also see detections. Then the VOXL completely aborts, but I can still reconnect over SSH afterwards, so it's not shutting down.

Details:

System Image: voxl_platform_3-3-0-0.5.0-a

voxl-suite: stable release

Services Used:

voxl-uvc-server -r 640x512 -f 60 -d : Flir Boson Camera : dev release

voxl-portal : Avg(GPU): 35%, Avg(CPU): 15% : stable release

voxl-tflite-server -t -d : dev release

Help would be really appreciated. For now I will fall back to the stable tflite-server release, but I would really like to use the new one because it's way better for custom models.

When using my new model on the stable tflite-server, I am getting a segmentation fault.

-

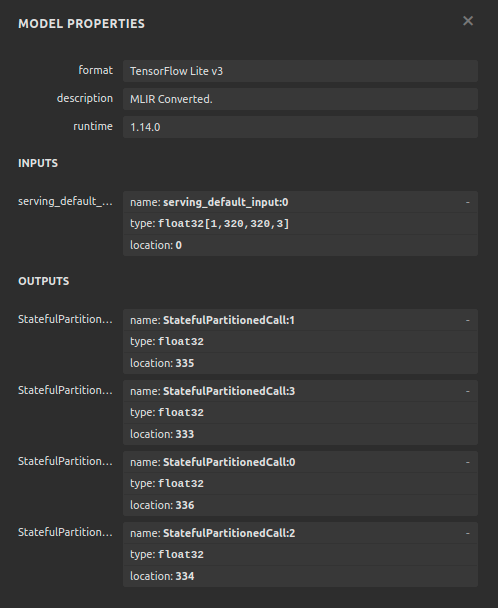

Netron Tflite Model In/Output Formats:

-

Output of installing tflite-server 0.2.0 from the dev branch:

Output:

voxl:~$ opkg install voxl-tflite-server
Upgrading voxl-tflite-server from 0.1.6 to 0.2.0 on root.
Downloading http://voxl-packages.modalai.com/dev/voxl-tflite-server_0.2.0_202202202255.ipk.
Installing libmodal-pipe (2.1.4) on root.
Downloading http://voxl-packages.modalai.com/dev/libmodal-pipe_2.1.4_202202040134.ipk.
Installing libmodal-json (0.4.0) on root.
Downloading http://voxl-packages.modalai.com/dev/libmodal-json_0.4.0_202202080557.ipk.
To remove package debris, try `opkg remove libmodal-json`.
To re-attempt the install, try `opkg install libmodal-json`.
Collected errors:
* check_data_file_clashes: Package libmodal-json wants to install file /usr/lib64/libmodal_json.so
But that file is already provided by package * libmodal_json
* check_data_file_clashes: Package libmodal-json wants to install file /usr/include/cJSON.h
But that file is already provided by package * libmodal_json
* check_data_file_clashes: Package libmodal-json wants to install file /usr/include/modal_json.h
But that file is already provided by package * libmodal_json
* check_data_file_clashes: Package libmodal-json wants to install file /usr/lib/libmodal_json.so
But that file is already provided by package * libmodal_json
* check_data_file_clashes: Package libmodal-json wants to install file /usr/bin/modal-test-json
But that file is already provided by package * libmodal_json
* opkg_install_cmd: Cannot install package voxl-tflite-server.

This does not work:

opkg remove libmodal-json
opkg install libmodal-json

But when trying to install again:

voxl:~$ opkg install voxl-tflite-server
Upgrading voxl-tflite-server from 0.1.6 to 0.2.0 on root.
Installing voxl-opencv (4.5.5) on root.

It installed successfully.

-

I have been trying some other models and got some funny confidences:

Detected: 0 person, Confidence: 10.00
Detected: 0 person, Confidence: 0.68
Detected: 0 person, Confidence: 0.73
Detected: 0 person, Confidence: 2.63
Detected: 0 person, Confidence: 1.24
Detected: 0 person, Confidence: 0.98
Detected: 0 person, Confidence: 2.23
Detected: 0 person, Confidence: 0.87
Detected: 0 person, Confidence: 1.26
Detected: 0 person, Confidence: 0.74
Detected: 0 person, Confidence: 0.72
Detected: 0 person, Confidence: 1.34
Detected: 0 person, Confidence: 1.82
Detected: 0 person, Confidence: 0.67
Detected: 0 person, Confidence: 1.50
Detected: 0 person, Confidence: 0.74
Detected: 0 person, Confidence: 1.37
Detected: 0 person, Confidence: 0.89
Detected: 0 person, Confidence: 1.56
Fault address: 0x20
Unknown reason.
Segmentation fault

I didn't know that you can have a confidence of 1000%.

Model: SSD ResNet101 V1 FPN 640x640

-

Let me try to address these individually!

- The "error" complaining about "CUSTOM TFLite_Detection_PostProcess: Operation is not supported." is simply due to the very last operation of the model not being supported for GPU delegation. Because it is not a standard op, it just gets delegated to the CPU with no issues!

-

The issue with tflite-server hanging is not due to an improper install. I was able to replicate this on my setup using a bad APM, but swapping it out for a new one seemed to alleviate the issue. voxl-tflite-server is a fairly power-hungry application, so I recommend checking for frayed cables and things of that nature. If you can send a picture of your current setup, with cables, batteries, etc. included, that may help us debug. In the meantime, if you have another APM or power supply to quickly swap in and test, please give that a try.

-

I personally have not had much luck with the ResNet architectures, but I would double-check data types and output tensor lengths, because those confidence values seem way off.
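One way to act on the data type and tensor length advice is to run the model on a host and sanity-check the four outputs of TFLite_Detection_PostProcess before deploying. The sketch below assumes the usual output order: boxes [1, N, 4], classes [1, N], scores [1, N], count [1]. It also assumes plain nested lists, such as the result of calling .tolist() on what the interpreter's get_tensor returns. Scores outside [0, 1], like the 10.00 seen earlier in this thread, suggest that the tensors are being read in a different order or as the wrong type:

```python
from typing import List, Sequence


def check_ssd_outputs(boxes: Sequence, classes: Sequence, scores: Sequence,
                      count: Sequence) -> List[str]:
    """Return a list of problems found in SSD post-processing outputs.

    ASSUMPTION: batch-first nested lists in the usual TFLite_Detection_PostProcess
    order: boxes [1, N, 4], classes [1, N], scores [1, N], count [1].
    """
    problems = []
    box_rows, class_row, score_row = boxes[0], classes[0], scores[0]
    n = len(score_row)
    if len(class_row) != n or len(box_rows) != n:
        problems.append(
            f"length mismatch: boxes={len(box_rows)} classes={len(class_row)} scores={n}")
    if any(len(b) != 4 for b in box_rows):
        problems.append("boxes do not have 4 coordinates each")
    bad_scores = [s for s in score_row if not 0.0 <= s <= 1.0]
    if bad_scores:
        problems.append(f"scores outside [0, 1]: {bad_scores}")
    if any(float(c) != int(c) for c in class_row):
        problems.append("class ids are not whole numbers (output order mixed up?)")
    if len(count) != 1 or not 0 <= count[0] <= n:
        problems.append(f"detection count {list(count)} does not fit {n} slots")
    return problems
```

An empty list means the outputs at least look plausible. Any entry points at the tensor to inspect first in Netron.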

-

One last note, the dev release does not directly support all image formats out of voxl-uvc-server, only IMAGE_FORMAT_NV12 as of now. So, if your camera input is coming in as either IMAGE_FORMAT_YUV422 or IMAGE_FORMAT_YUV422_UYVY you may need to add some extra handling for that.
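For reference, here is a minimal sketch of that extra handling. It repacks a packed 4:2:2 frame into NV12: a full-resolution Y plane followed by one interleaved UV plane at half resolution in both directions. It assumes IMAGE_FORMAT_YUV422 means YUYV byte order (Y0 U Y1 V) and IMAGE_FORMAT_YUV422_UYVY means UYVY (U Y0 V Y1). It averages the chroma of each pair of rows. Pure Python is far too slow for live frames, so this only shows the byte layout:

```python
def yuv422_to_nv12(frame: bytes, width: int, height: int, uyvy: bool = False) -> bytes:
    """Repack a packed 4:2:2 frame (YUYV, or UYVY if uyvy=True) into NV12.

    ASSUMPTION: YUYV stores each pixel pair as Y0 U Y1 V, UYVY as U Y0 V Y1.
    """
    if width % 2 or height % 2:
        raise ValueError("width and height must be even for NV12")
    stride = width * 2  # packed 4:2:2 uses 2 bytes per pixel
    if len(frame) != stride * height:
        raise ValueError(f"expected {stride * height} bytes, got {len(frame)}")
    y_off, u_off, v_off = (1, 0, 2) if uyvy else (0, 1, 3)

    # Luma: every second byte of each row.
    y_plane = bytearray(width * height)
    for row in range(height):
        start = row * stride + y_off
        y_plane[row * width:(row + 1) * width] = frame[start:start + stride:2]

    # Chroma: one U,V pair per 2x2 block, averaging the two source rows.
    uv_plane = bytearray(width * height // 2)
    for row in range(0, height, 2):
        top = row * stride
        bottom = top + stride
        out = (row // 2) * width
        for x in range(0, stride, 4):  # one 4-byte group covers 2 pixels
            uv_plane[out + x // 2] = (frame[top + x + u_off] + frame[bottom + x + u_off] + 1) // 2
            uv_plane[out + x // 2 + 1] = (frame[top + x + v_off] + frame[bottom + x + v_off] + 1) // 2
    return bytes(y_plane + uv_plane)
```

The output is width * height * 3 / 2 bytes, which is the NV12 size the dev server expects.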

-

@Matt-Turi Thank you for the fast reply again. I will check the APM and the camera server output format tomorrow, and also send you a picture of the setup.

-

@Matt-Turi I got it working by changing the APM. Thank you for the suggestion. I looked up which formats my camera supports. It should support NV12, and voxl-uvc-server shows the following:

uvc_get_stream_ctrl_format_size succeeded for format 2

After looking at the voxl-uvc-server source:

https://gitlab.com/voxl-public/modal-pipe-architecture/voxl-uvc-server/-/blob/dev/src/main.c

At line 307 there is a for loop that chooses a format, and format 2 should be NV12. But for some reason, when I look at the stream in voxl-portal, I get green stripes in the output. Do you know what the problem could be? The camera is a Boson 640 black-and-white thermal camera.
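A useful clue when debugging this: in YUV, a neutral gray has both chroma bytes at 128. So a black-and-white image whose chroma bytes are 0 turns green after conversion to RGB. That can happen, for example, with a chroma plane that was never filled or was read from the wrong offset. The small sketch below uses the common full-range BT.601 conversion. That choice is an assumption, since the portal may use slightly different coefficients:

```python
from typing import Tuple


def yuv_to_rgb(y: int, u: int, v: int) -> Tuple[int, int, int]:
    """Convert one full-range BT.601 YUV sample to an 8-bit RGB tuple."""
    def clamp(x: float) -> int:
        return max(0, min(255, round(x)))

    r = y + 1.402 * (v - 128)
    g = y - 0.344136 * (u - 128) - 0.714136 * (v - 128)
    b = y + 1.772 * (u - 128)
    return clamp(r), clamp(g), clamp(b)


if __name__ == "__main__":
    print(yuv_to_rgb(128, 128, 128))  # neutral chroma -> (128, 128, 128), gray
    print(yuv_to_rgb(128, 0, 0))      # zeroed chroma  -> (0, 255, 0), green
```

So green areas in a monochrome stream usually mean the consumer is not seeing the neutral chroma it expects, rather than a problem with the luma data.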

-

Here is a Picture of the green stripes:

-

@Philemon-Benner Can you post the output of lsusb, voxl-uvc-server -s, and voxl-uvc-server -d -m -r 640x512?

-

@Eric-Katzfey

lsusb:

Bus 004 Device 001: ID 1d6b:0003 Linux Foundation 3.0 root hub
Bus 002 Device 001: ID 1d6b:0003 Linux Foundation 3.0 root hub
Bus 001 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub
Bus 003 Device 002: ID 09cb:4007
Bus 003 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

voxl-uvc-server -s:

*** START DEVICE LIST ***
Found device 1
Got device descriptor for 09cb:4007 134275
Found device 09cb:4007
DEVICE CONFIGURATION (09cb:4007/134275) ---
Status: idle
VideoControl:
bcdUVC: 0x0100
VideoStreaming(1):
bEndpointAddress: 129
Formats:
UncompressedFormat(1)
bits per pixel: 12
GUID: 4934323000001000800000aa00389b71 (I420)
default frame: 1
aspect ratio: 0x0
interlace flags: 00
copy protect: 00
FrameDescriptor(1)
capabilities: 02
size: 640x512
bit rate: 10592000-235929600
max frame size: 491520
default interval: 1/60
interval[0]: 1/60
interval[1]: 1/30
UncompressedFormat(2)
bits per pixel: 16
GUID: 5931362000001000800000aa00389b71 (Y16 )
default frame: 1
aspect ratio: 0x0
interlace flags: 00
copy protect: 00
FrameDescriptor(1)
capabilities: 02
size: 640x512
bit rate: 10592000-629145600
max frame size: 655360
default interval: 1/60
interval[0]: 1/60
interval[1]: 1/30
UncompressedFormat(3)
bits per pixel: 12
GUID: 4e56313200001000800000aa00389b71 (NV12)
default frame: 1
aspect ratio: 0x0
interlace flags: 00
copy protect: 00
FrameDescriptor(1)
capabilities: 02
size: 640x512
bit rate: 10592000-235929600
max frame size: 491520
default interval: 1/60
interval[0]: 1/60
interval[1]: 1/30
UncompressedFormat(4)
bits per pixel: 12
GUID: 4e56323100001000800000aa00389b71 (NV21)
default frame: 1
aspect ratio: 0x0
interlace flags: 00
copy protect: 00
FrameDescriptor(1)
capabilities: 02
size: 640x512
bit rate: 10592000-235929600
max frame size: 491520
default interval: 1/60
interval[0]: 1/60
interval[1]: 1/30
END DEVICE CONFIGURATION
*** END DEVICE LIST ***

voxl-uvc-server -d -m -r 640x512:

Enabling debug messages Enabling MPA debug messages voxl-uvc-server starting Image resolution 640x512, 30 fps chosen UVC initialized Device found Device opened uvc_get_stream_ctrl_format_size succeeded for format 2 Streaming starting Got frame callback! frame_format = 17, width = 640, height = 512, length = 491520, ptr = (nil) making new fifo /run/mpa/uvc/voxl-tflite-server0 opened new pipe for writing after 3 attempt(s) write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 1 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 
0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 * got image 30 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to 
ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 40 errno: 0 previous client state was 2 write to ch: 0 id: 0 result: 491520 errno: 0

-

I just checked the camera on my laptop; the camera itself is working fine.

It also works fine in NV12 format, without the green lines:

ffplay /dev/video4 -pixel_format nv12

Output:

Input #0, video4linux2,v4l2, from '/dev/video4':
Duration: N/A, start: 33575.082223, bitrate: 235929 kb/s
Stream #0:0: Video: rawvideo (NV12 / 0x3231564E), nv12, 640x512, 235929 kb/s, 60 fps, 60 tbr, 1000k tbn, 1000k tbc

-

@Philemon-Benner It looks like voxl-uvc-server is working fine. The error messages seem to be related to voxl-tflite-server and not voxl-uvc-server. Can you use voxl-streamer to stream the output of voxl-uvc-server to VLC and see if there are still green lines? What are you currently using to stream the output of voxl-uvc-server?
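The frame sizes in the logs above can be cross-checked with a few lines of arithmetic. At 640x512, a 12-bit-per-pixel 4:2:0 frame (NV12, NV21 or I420) is 491520 bytes, matching both the logged length and the max frame size of the 12-bit formats, while Y16 is 655360 bytes. Because I420 and NV12 have the same size, the length alone cannot tell which layout is actually on the wire; only the plane arrangement differs. A small sketch, with the format list taken from the voxl-uvc-server -s output:

```python
# Bits per pixel for the formats listed by `voxl-uvc-server -s`.
BITS_PER_PIXEL = {"I420": 12, "NV12": 12, "NV21": 12, "Y16": 16}


def frame_bytes(fmt: str, width: int, height: int) -> int:
    """Size in bytes of one uncompressed frame."""
    return width * height * BITS_PER_PIXEL[fmt] // 8


def plane_offsets(fmt: str, width: int, height: int) -> dict:
    """Byte offsets of the planes in a 4:2:0 frame (I420 vs NV12/NV21)."""
    luma = width * height
    if fmt == "I420":
        # Y plane, then a quarter-size U plane, then a quarter-size V plane
        return {"Y": 0, "U": luma, "V": luma + luma // 4}
    if fmt in ("NV12", "NV21"):
        # Y plane, then one half-size plane of interleaved chroma pairs (UV or VU)
        return {"Y": 0, "UV": luma}
    raise ValueError(f"not a 4:2:0 format: {fmt}")


if __name__ == "__main__":
    for fmt in BITS_PER_PIXEL:
        print(fmt, frame_bytes(fmt, 640, 512))
```

If one stage assumed NV12 while the frames were actually laid out as I420 (or the other way round), the size would still match, but the chroma would be read from the wrong offsets.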