Starling 2 SLAM ROS2 Foxy

-

Hello,

I wanted to use ROS2 Foxy to run SLAM and create a map of an indoor environment on RViz2. Initially, I tried using /tof_pc to get point cloud data, but it seems this topic doesn’t account for the UAV’s position and orientation (Starling 2). As a result, the point cloud data kept appending to itself incorrectly, and I couldn’t generate a usable map.

Could you clarify:

- What sensors are fused or used in /voa_pc_out?

- Does /voa_pc_out compensate for UAV movement, and is this topic alone sufficient for mapping an area?

- What topic combinations would you recommend for:

i) LiDAR SLAM

ii) Visual SLAM

For example:

a) imu_apps + tof_pc

b) voa_pc_out

c) tracking cameras + imu_apps

d) any other combinationThanks,

George -

-

You can either use stereo cameras (alongside running voxl-dfs-server) or tof sensors

-

It's just a pointcloud representing what the cameras see at the vehicle's current orientation

-

Have you considered using voxl-mapper alongside listening to the topic "/voxl_mapper_aligned_ptcloud"? Voxl-mapper already does the calculations you mentioned to generate a static pointcloud outputted to that pipe. You can debug your setup by going to voxl-portal and checking that voxl-mapper is outputting a 3D map of your room.

Hope that helps,

Ted -

-

@teddy-zaremba thanks for your response.

-

By default, are the stereo cameras being used? Or is it the tof sensor?

-

To clarify, if we try to visualize voa_pc_out on rviz2, I would not be able to see the UAV movement. I would need to use a topic that already fuses imu_apps data with tof_pc data or create such a custom topic. Is my understanding correct?

-

I'd be happy to try it. I've opened the voxl mapper page on the voxl portal and voxl-dfs-server is enabled, but I'm unable to start it with systemctl start voxl-dfs-server. I'm also unable to see /voxl_mapper_aligned_ptcloud under ros2 topic list (I don't think it's being published). How can we address these?

-

What's the best way to get the created map from voxl mapper as a ROS2 foxy interface?

Thanks,

George -

-

-

For "voa_pc_out" the default on voxl-vision-hub allows input from both stereo and tof. You can check which inputs you have enabled by checking the voa_input in "/etc/modalai/voxl-vision-hub.conf".

-

Correct, I would just use "voxl_mapper_aligned_ptcloud"

-

Can you share your output from

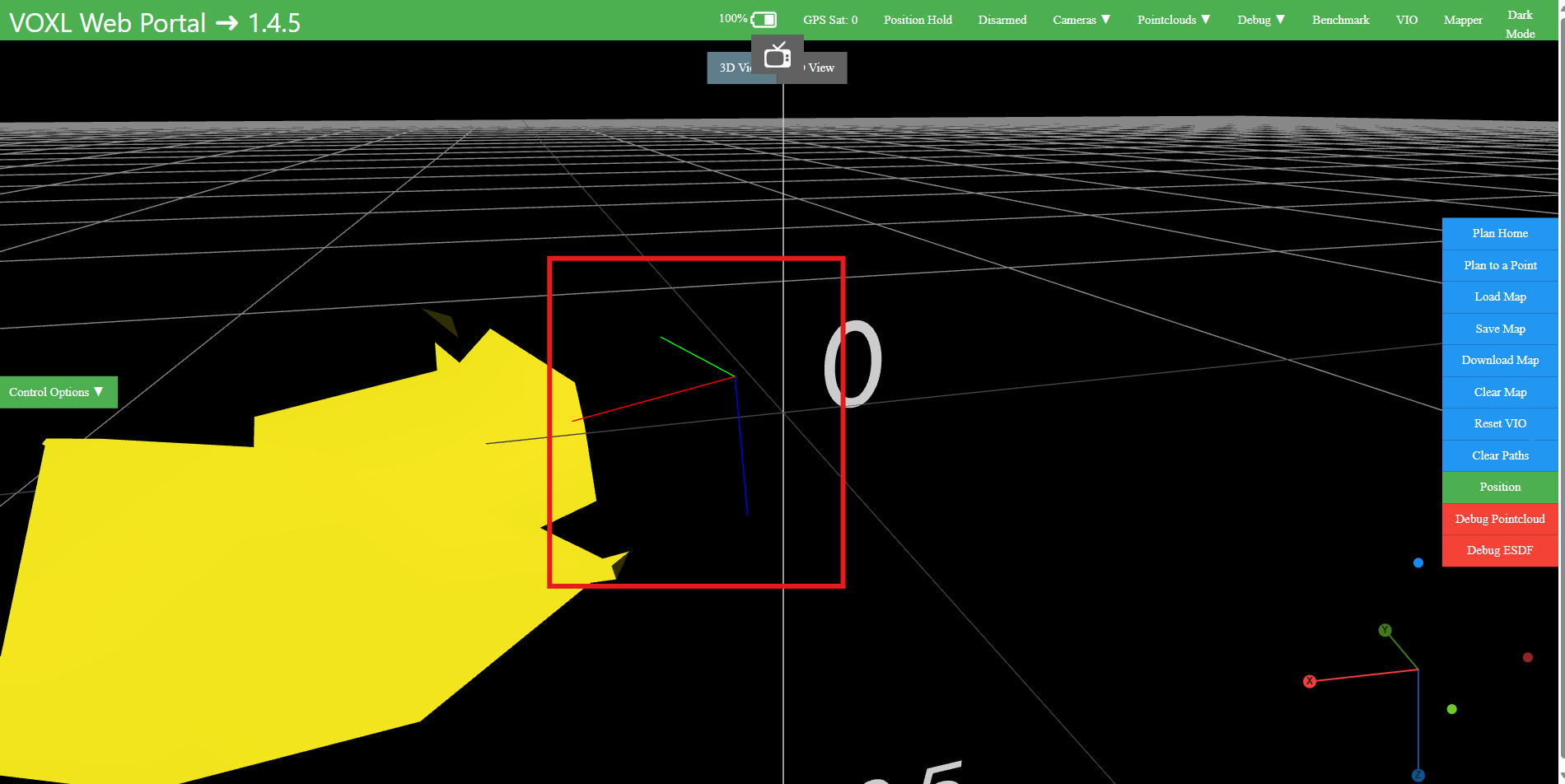

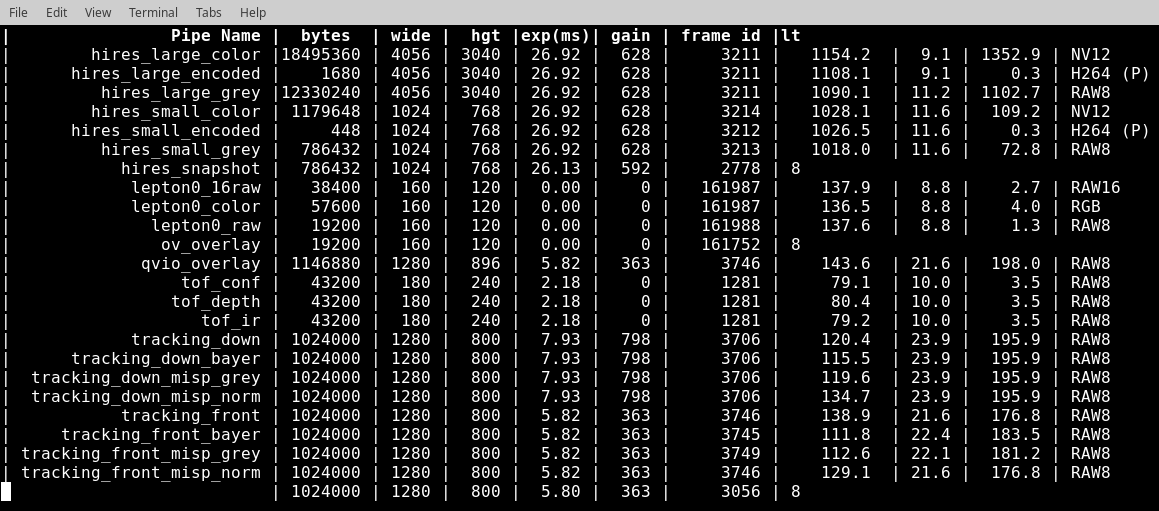

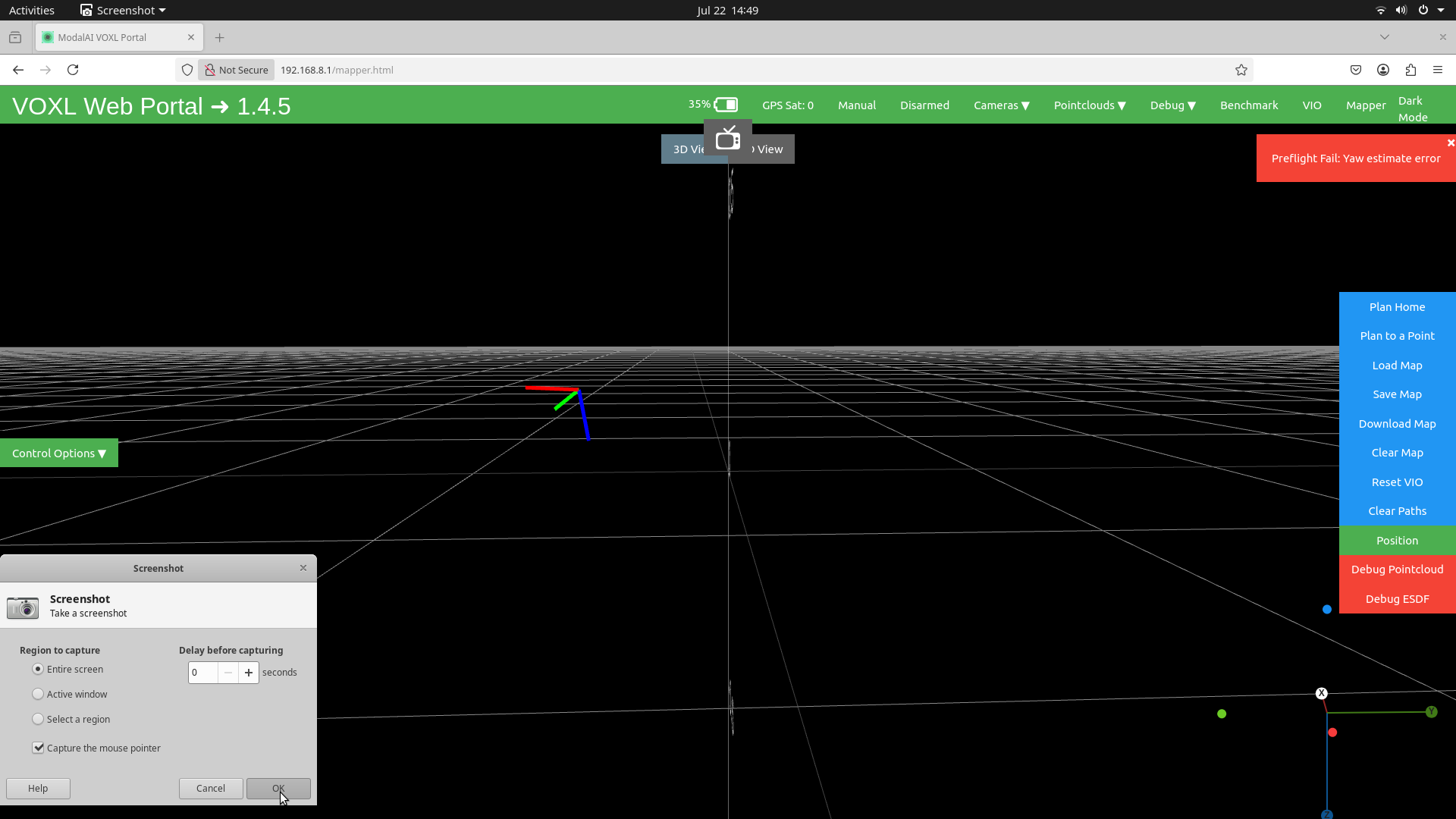

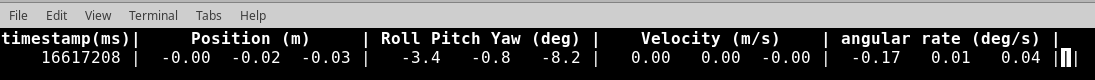

voxl-inspect-cam -ato make sure your stereo cameras are setup correctly? I would debug this on voxl-portal before moving onto the ROS portion. Do you have tracking cameras setup, and will you be running openvins or voxl-qvio-server. Once that's verified check that you're seeing that pose on voxl-mapper. You should see the orientation show up like in the red box in the picture below or by runningvoxl-inspect-pose -l.

- You can use the pointcloud data published to voxl_mapper_aligned_ptcloud once you have voxl-mapper working. I've never plotted it myself, but you can take a look at how voxl-portal plots it for inspiration (here).

-

-

-

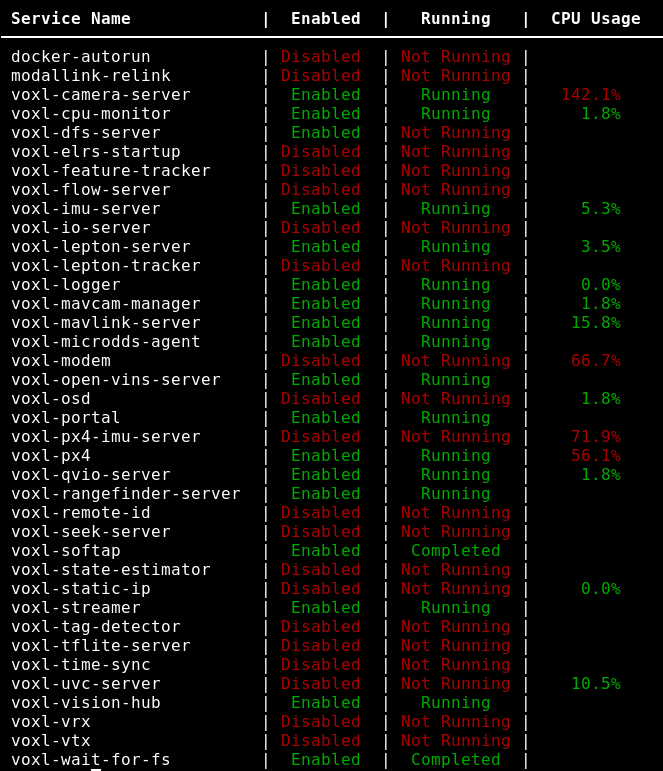

Screenshots are attached below. On the voxl portal, I'm able to see the tracking_down and tracking_front feeds, but I don't see the tracking_rear option (my Starling2 is in C27 config: 1 hires camera, 1 tof sensor, 3 tracking cameras). I haven't changed anything related to the default camera setup, except adding a Lepton 3.5 sensor. I was hoping to use the voxl-qvio-server, but I'm happy to explore openvins if that's more feasible. I don't see a red box, but I'm able to see the axes on the voxl mapper page, and its moving when I move the UAV.

-

How can I access the voxl_mapper_aligned_ptcloud?

Thanks,

George -

-

That looks good except voxl-mapper doesn't look like it's installed or running. You can install it using the command below. Make sure your starling is connected to wifi else you can adb push the voxl-mapper deb.

$ wget http://voxl-packages.modalai.com/dists/qrb5165/sdk-1.5/binary-arm64/voxl-mapper_0.2.3_arm64.deb $ dpkg -i voxl-mapper_0.2.3_arm64.debAfter it's installed and running check that you see the map updating on portal and you should see the voxl_mapper_aligned_ptcloud pipe show up as a ros topic.

-

@teddy-zaremba Thanks for the information.

I am working with George on the same project. We’ve installed the required packages, and voxl-mapper is working properly in the VOXL Portal. We can also see the /voxl_mapper_aligned_ptcloud topic available in ROS 2. However, we have a few questions and would really appreciate your guidance.- Is /voxl_mapper_aligned_ptcloud the accumulated 3D map of the environment? If so, we assume this topic contains the fused and globally aligned point cloud (not just live frame-by-frame sensor data).

- If the answer to (1) is yes, can we use ros2 bag record to save this map and later visualize it in RViz? Specifically:

- Will the recorded data still reflect the accumulated map, or will it only include a stream of point clouds per frame?

- What are the best practices to record and visualize the full map offline?

- RViz does not recognize any transformation (TF) tree, which causes issues in data visualization. We understand we need to publish appropriate transforms to view data correctly in RViz. Here are our observations and questions:

a. /voxl_mapper_aligned_ptcloud uses frame_id: "world" — but this world frame comes from VOXL and is not part of our RViz TF tree.

When we visualize this point cloud in RViz, we have to run:ros2 run tf2_ros static_transform_publisher 0 0 0 0 0 0 map world

This lets us see the point cloud, but only as a live stream — not the full accumulated map.

We’re trying to build the following TF chain to resolve this:

NED → map (static)

map → odom (static or dynamic)

odom → base_link (dynamic, from QVIO)

base_link → tof (static) or voxl_mapper_aligned_ptcloud

tof or voxl_mapper_aligned_ptcloud → world (static)For each of these we take the extrinsic information that we have to find the translation and orientation of these coordinate systems.

Does this seem correct for enabling RViz to visualize the accumulated map properly?

b. Even for /tof_pc, we also need to publish a static transform like:

ros2 run tf2_ros static_transform_publisher 0 0 0 0 0 0 map world

to view data in RViz. But again, this only gives us frame-by-frame output. Should we instead define a more complete set of transforms from base_link to world?c. We’re currently unable to display QVIO odometry data in RViz to track the drone’s path.

VOXL publishes QVIO data on /qvio in two message types: nav_msgs/Odometry and geometry_msgs/PoseStamped. However, neither of these messages includes a valid frame_id, which prevents RViz from interpreting the data.- What frame_id should we assign to these messages?

- What transforms should we publish so that we can correctly display odometry and the drone's trajectory in RViz?

We would be very thankful for your help.

Thanks,

Roya -

Hi @Roya-Salehzadeh,

-

Yep, the points showing up in voxl_mapper_aligned_ptcloud are static (ie. not tied to the vehicle's current pose)

-

I've never tried to do that so I'm not 100% sure but recording the points with a ros2 bag seems like a reasonable approach

3a. I'm not sure why you would need to do any transformation to plot the data on RViz. Voxl-mapper already does transformations to put the data in a static frame of reference. If you're only seeing it as a live stream this is probably because it's playing one message one after the other. I'm not sure but there might be a setting in RViz to ignore the timestamps or you could potentially do something like set all the timestamps to 1.

3b. Voxl-mapper uses the tof data to generate the map. What are you trying to do by visualizing the tof data?

3c. For visualizing the path I'm not sure if you would need to do transformations or not. This is the line that digests the pose data in voxl-portal. After a little investigating it seems like the /world might be the correct frame_id.

If it were me, I'd start by getting the accumulated map on RViz then plot the odometry inside that map with the /world frame_id and then check if you need to do any additional transformations.

@brahim what're your thoughts on the RViz frame_id for odometry?

Let me know if that helps,

Ted -