Need help simulating .tflite yolo models on my linux machine.

-

@thomas

Hi thomas,

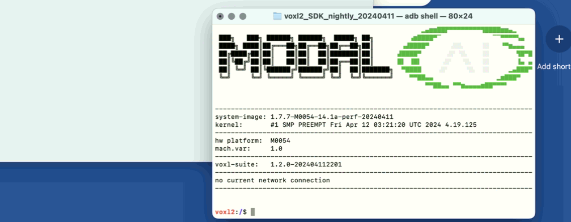

Is this package for voxl2-mini or voxl2?

Regards

-

@thomas

I ask because I think this SDK is meant for the M0054, while our board is an M0104. Can we go ahead and flash this SDK or not?

Thanks

-

@thomas

Also, now that I have flashed the SDK onto my chip, it's showing voxl2 in the shell screen instead of voxl2-mini. And even if I go ahead and do the following steps, I still can't get the desired output.

file:///home/bhavya/Pictures/Screenshots/Screenshot%20from%202024-04-15%2014-29-02.png

-

Oh, my apologies, here's the link for the VOXL2 Mini nightly SDK. I almost never work with these, so sorry for the confusion.

Thomas

-

@thomas

Hey thomas,

So I tried with this new SDK. Unfortunately, I still don't see any output here either.

-

Have you also tried using this method? Or were there issues getting WiFi set up?

@thomas said in Need help simulating .tflite yolo models on my linux machine.:

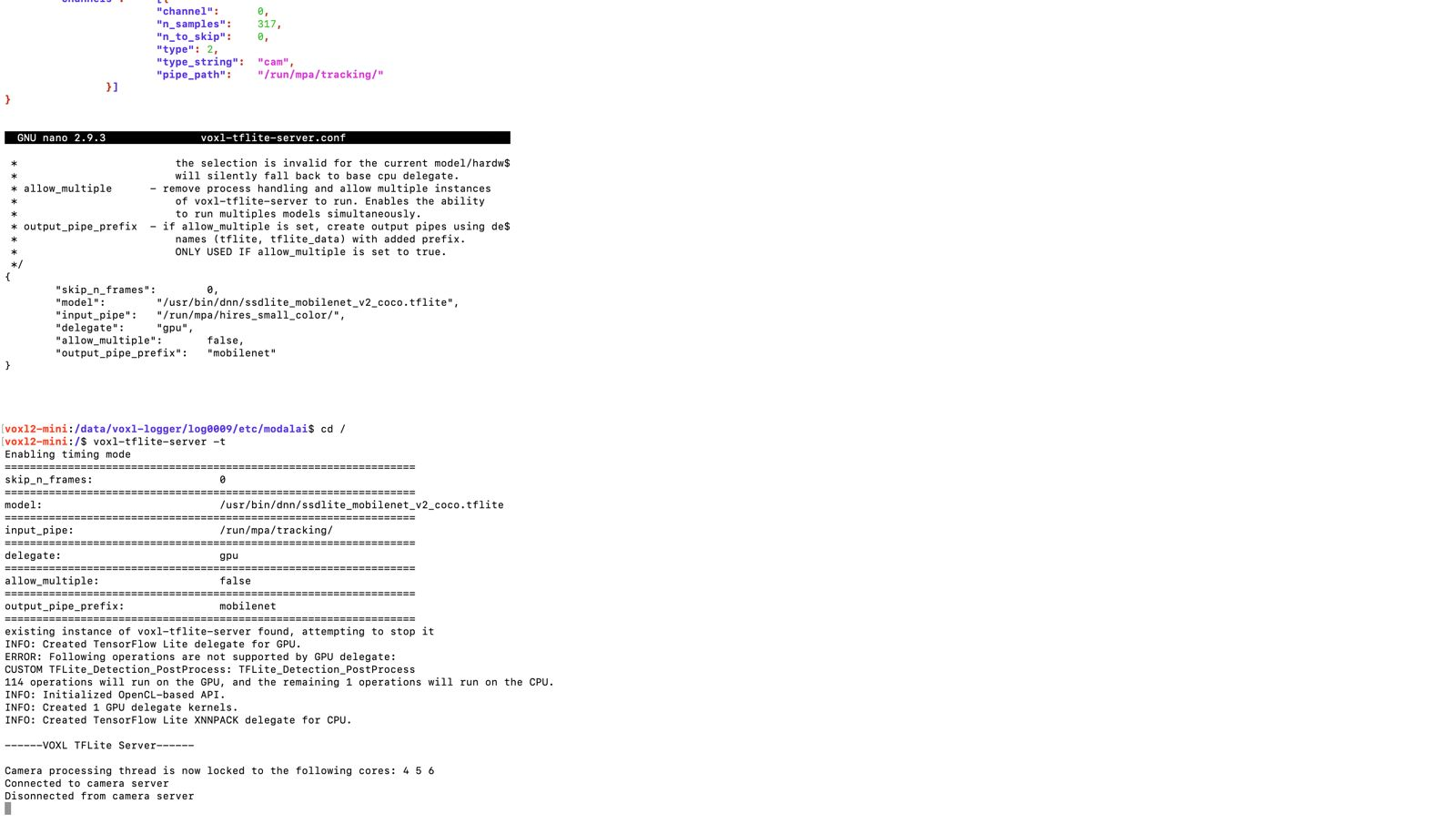

Okay so I've identified a problem in our timing code and am working on a fix. Here's a short-term solution for you:

- Make sure `/etc/modalai/voxl-tflite-server.conf` has "input_pipe" set to "/run/mpa/tracking".
- Get your VOXL's IP address with `voxl-my-ip` and enter this IP into your web browser to pull up voxl-portal.
- Run `voxl-tflite-server` on VOXL.
- Replay your voxl-logger log with `voxl-replay -p /data/voxl-logger/log0009/`, or whatever path the log is saved as.

Now in your web browser, from the "Cameras" dropdown, click tflite. You may need to refresh the page to get this to pop up. The permalink to this page is <YOUR IP>/video.html?cam=tflite (e.g. http://192.168.0.198/video.html?cam=tflite), so you can optionally just go there. This overlay shows the ML model running and shows a framerate in the upper left of the frame. You can use this to estimate timing for the time being.

Am working on the fix now, will let you know when it's released.

Thomas
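The config check in the first step above can be scripted. This is only a minimal sketch: it assumes the `.conf` file is JSON, possibly preceded by a `/** ... */` comment header, and the helper names `input_pipe_of` and `uses_tracking_pipe` are made up for illustration. The sample model path and the `/run/mpa/hires` value are placeholders, not taken from this thread.

```python
import json
import re


def input_pipe_of(conf_text):
    """Return the "input_pipe" value from the config text, or None if absent.

    Assumption: the file is JSON, optionally with /* ... */ comment blocks.
    """
    # Drop C-style comment blocks so json.loads() accepts the remainder.
    stripped = re.sub(r"/\*.*?\*/", "", conf_text, flags=re.DOTALL)
    return json.loads(stripped).get("input_pipe")


def uses_tracking_pipe(conf_text, expected="/run/mpa/tracking"):
    """True if the config points the server at the expected input pipe."""
    return input_pipe_of(conf_text) == expected


if __name__ == "__main__":
    # Hypothetical config contents, for illustration only.
    sample = """
    /**
     * placeholder header comment
     */
    {
        "model": "/usr/bin/dnn/example.tflite",
        "input_pipe": "/run/mpa/hires"
    }
    """
    print("input_pipe:", input_pipe_of(sample))
    print("points at tracking pipe:", uses_tracking_pipe(sample))
```

On the VOXL itself you would read the real file, e.g. `open("/etc/modalai/voxl-tflite-server.conf").read()`, and pass its contents to `uses_tracking_pipe`.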

Yeah, I'm really not sure what's going on here. On that nightly SDK, `voxl-tflite-server -t` is printing out timing information. I don't have a VOXL2 Mini to test on, so if it's a specific issue there I'm really not sure. Let me try some stuff today and I'll get back to you.

Thomas

-

@thomas

Hi thomas,

Yes, so right now we tried figuring out whether the issue is that the model isn't specific to voxl2-mini, or that the logs were generated on a voxl2. But I don't think that's the case.

The thing I'm concerned about is: how do we pinpoint the problem? Are we supposed to see the output after the 'Disconnected from camera server' line or before? And if we are supposed to see it and can't, where exactly is the problem happening in the src/main.cpp file of voxl-tflite-server? Can we debug that somehow?

- Also, did you try running the voxl-replay command directly without any camera set up or camera-server configured, similar to the way we are currently running it on our end?

-

If

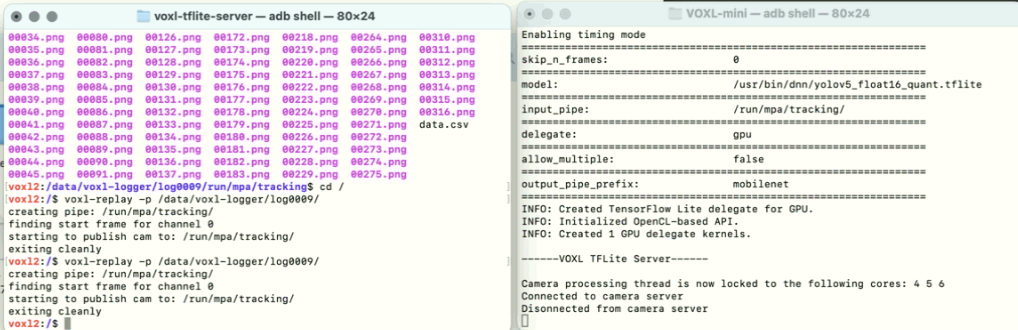

voxl-tflite-serverwas running into an actual error you'd see some sort of an error message printout. We typically debug issues throughvoxl-portalbut if you aren't able to get WiFi set up you won't be able to do so. I again reflashed a nightly SDK to confirm things are working on my end and they are. To reconfirm:- Flash the latest SDK nightly from the link shared above

- Modify

/etc/modalai/voxl-tflite-server.confto have/run/mpa/trackingas its input. This file gets overwritten on flash so make sure you do this. voxl-tflite-server -tin one terminalvoxl-replay -p /data/voxl-logger/log0009/in another

I can tell you that replay doesn't care about camera server being configured, it should work regardless.

-Thomas

-

@thomas

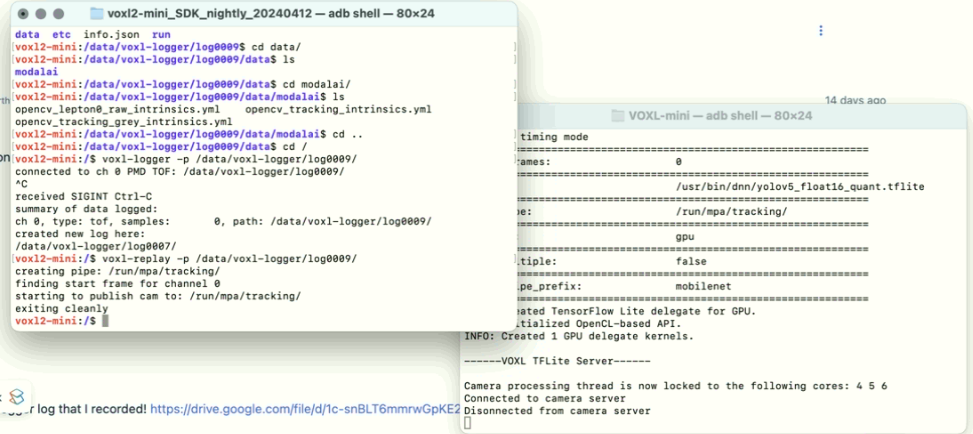

Hi thomas,

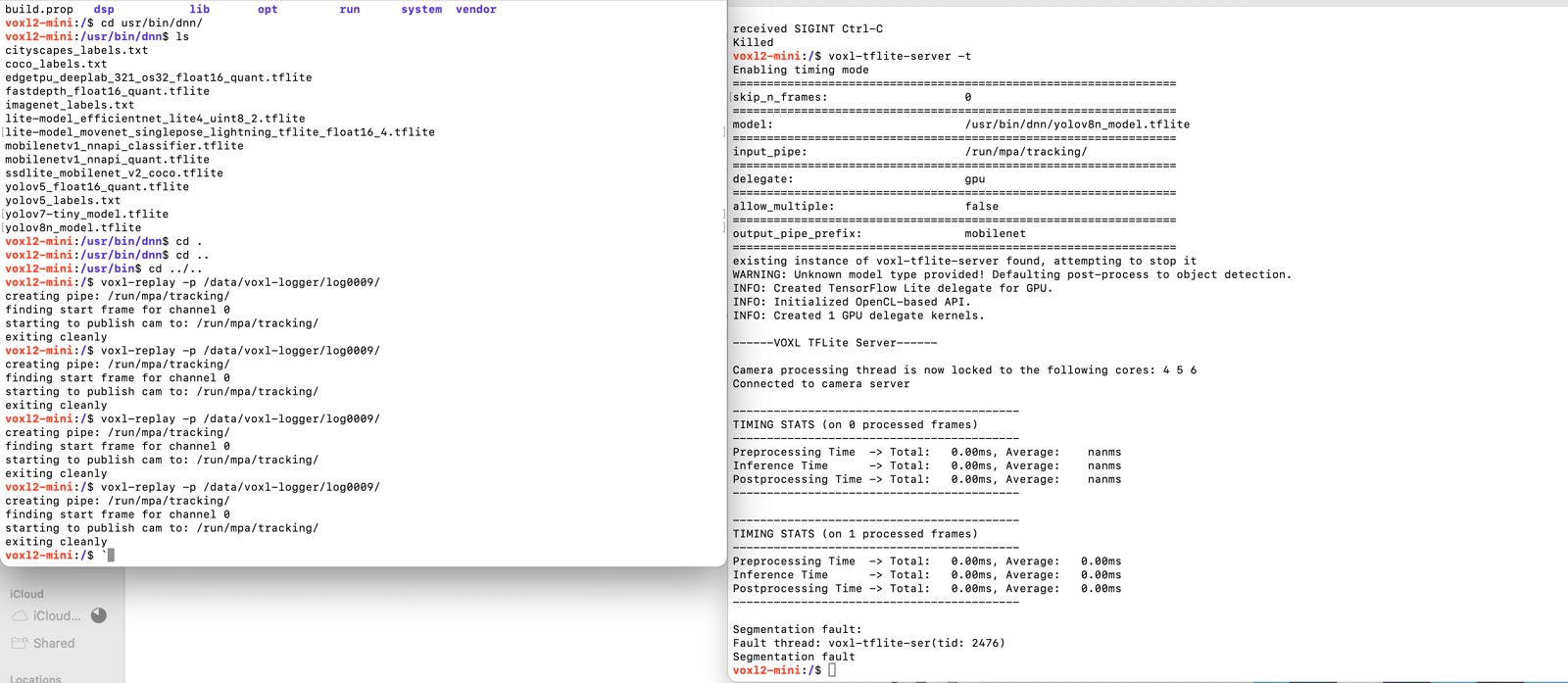

So I followed just the 4 steps you shared and it's working perfectly fine with the default models.

But when I deploy my custom voxl-tflite-server deb package onto the chip, it warns that it is downgrading voxl-tflite-server from 0.3.3 to 0.3.2. So I think the issue is with the voxl-tflite-server deb package that I'm building with my custom tflite models and deploying.

Maybe you can update the GitLab repo so that I can build my custom voxl-tflite-server deb package from it and deploy it.

Let me know if you can do this.

Thanks

file:///home/bhavya/Pictures/Screenshots/Screenshot%20from%202024-04-17%2012-28-53.png

-

Hey Ajay,

The GitLab repo for tflite server actually is updated, just only on the `dev` branch. We're not quite ready to merge this to `master` yet. So you can just switch to that branch and branch your changes off of that and you should be fine.

Thanks,

Thomas

-

@thomas

Hi thomas,

So we were able to run our custom tflite models using the updated tflite-server 0.3.3. But now we are facing a new problem related to our custom tflite files: we are getting segmentation fault errors, as shown.

Could you help us figure out what exactly we could be missing here? I followed the steps in the documentation to create compatible tflite models (using fp16):

https://docs.modalai.com/voxl-tflite-server-0_9/#benchmarks

Is there something we can test externally to check whether our tflite model is compatible or not? Like some code or a tool.

Regards,

-

The first question I usually ask people about custom models is the labels. Are you using the same classes as the original YOLO or different classes? If you have a different labels file, that needs to be reflected in `/usr/bin/dnn` in the corresponding .txt file.

Also, is this YOLOv8?

Thanks,

Thomas

-

@thomas

Hi thomas,

So I was assuming the labels used for yolov5, which are present in the repo by default, would work fine with custom models (yolov7 and yolov8) as well. And yes, the last screenshot is for yolov8.

-

It's dependent on what labels/classes you trained your YOLOv7/8 networks on. If it's on the same labels as what's in `/usr/bin/dnn/yolov5_labels.txt` then you should be fine. If not, you could definitely segfault.

If that's all fine then I'm not immediately aware of what could be causing an issue. As you likely know, we don't officially support YOLOv7/8, so you'll need to debug it yourself to find the issue. If you do find an issue and have a corresponding fix, you're more than welcome to open up a Merge Request in the repo and I can take a look at it. We've wanted to add support for YOLOv7/8 for some time but it just isn't a high enough priority at the moment.

Hope this helps,

Thomas
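One way to check the label/class mismatch described above off the device is to compare the number of lines in the labels file with the class count implied by the model's output tensor shape. This is a rough sketch, not something voxl-tflite-server provides. The two layouts below are assumptions based on typical Ultralytics exports, and the helper names are made up for illustration. The shape itself could be read on a desktop machine with TensorFlow Lite's `Interpreter.get_output_details()`.

```python
def read_labels(labels_text):
    """Return the labels, one per non-empty line of the labels file text."""
    return [line.strip() for line in labels_text.splitlines() if line.strip()]


def classes_from_output_shape(shape, layout):
    """Infer the class count from a detection output tensor shape.

    Assumed layouts (typical exports, not confirmed for voxl-tflite-server):
      "yolov5": (1, num_boxes, 5 + num_classes)  # x, y, w, h, objectness, classes
      "yolov8": (1, 4 + num_classes, num_boxes)  # x, y, w, h, classes
    """
    if layout == "yolov5":
        return shape[2] - 5
    if layout == "yolov8":
        return shape[1] - 4
    raise ValueError(f"unknown layout: {layout!r}")


def labels_match(labels_text, shape, layout):
    """True if the labels file has exactly one entry per model class."""
    return len(read_labels(labels_text)) == classes_from_output_shape(shape, layout)


if __name__ == "__main__":
    # Hypothetical three-class labels file and output shapes.
    labels = "person\nbicycle\ncar\n"
    print(labels_match(labels, (1, 7, 8400), "yolov8"))   # 3 labels, 3 classes
    print(labels_match(labels, (1, 84, 8400), "yolov8"))  # 3 labels, 80 classes
```

A mismatch here would mean the post-processing code can index past the end of the labels list, which is consistent with the segfault risk mentioned above. A match does not by itself prove the model is compatible.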

-

@thomas

Hey thomas,

Hope you're doing well,

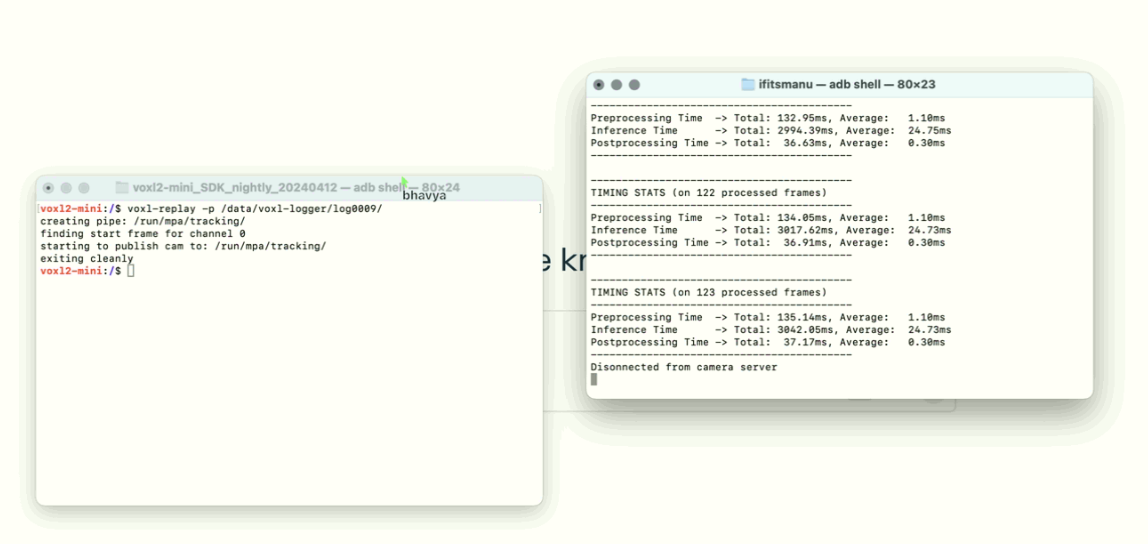

So I have a question about the three lines of results we get after running the models: 1. Preprocessing time, 2. Inference time, 3. Post-processing time.

Are they specific to the CPU only, or do they depend on whether the task is delegated to the GPU or something?

If it's the latter case, can we run the tflite-server while manually delegating the model to the GPU or DPU, instead of having it detect and process automatically?

Regards,

Bhavya

-

Only the inference time should be affected by your choice of hardware. The other two should be the same regardless of your choice.

Thomas
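To see what that means for overall throughput, here is a small arithmetic sketch. It assumes the three stages run one after another for each frame, which is not confirmed in this thread. All numbers are made-up placeholders, and the function names are hypothetical.

```python
def frame_budget(pre_ms, inference_ms, post_ms):
    """Summarize per-frame timing, assuming the stages run sequentially."""
    total_ms = pre_ms + inference_ms + post_ms
    return {
        "total_ms": total_ms,
        "fps": 1000.0 / total_ms,
        # Share of the frame time that depends on the hardware choice.
        "hardware_dependent_share": inference_ms / total_ms,
    }


def with_faster_inference(pre_ms, inference_ms, post_ms, speedup):
    """Same budget after only the inference stage gets `speedup` times faster."""
    return frame_budget(pre_ms, inference_ms / speedup, post_ms)


if __name__ == "__main__":
    # Placeholder timings in milliseconds, not measurements.
    print(frame_budget(5.0, 40.0, 5.0))
    print(with_faster_inference(5.0, 40.0, 5.0, speedup=4.0))
```

Because pre- and post-processing stay fixed, a faster delegate improves the frame rate by less than its inference speedup.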