Voxl-tflite-server error while using DeepLabV3

-

Hello there,

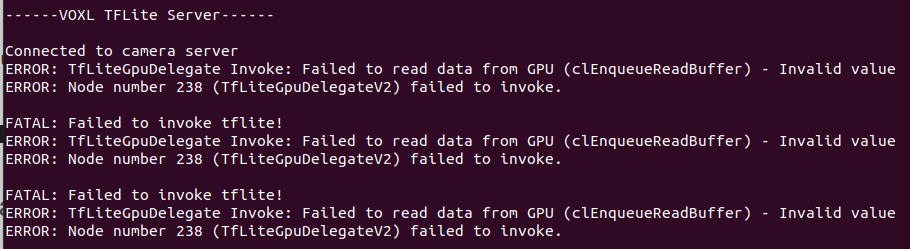

I have been trying to run the voxl-tflite-server (apq8096) on the GPU with the DeepLabV3 model. It worked well on the CPU, without any errors. However, when I try to run it on the GPU, I don't get any frames and I get the following errors:

And then the same repeating lines over and over again:

-

Hey @Maxim-Temp,

The deeplabv3 model is not officially supported for the apq8096 platform because of issues like this that arise with some of the newer, larger models running with an older version of tensorflow lite. The first set of errors is expected, as these Ops are not supported for the gpu delegate in tensorflow 2.2.3, and they should just fall back to the cpu.
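To make the fallback behaviour described above concrete, here is a toy sketch of how a graph can be split between a GPU delegate and the CPU. It is not TensorFlow Lite's actual partitioning code: the `GPU_SUPPORTED` set, the `partition` helper, and the `CUSTOM_OP_A` / `CUSTOM_OP_B` names are all hypothetical and only stand in for whichever ops the delegate rejects.

```python
from itertools import groupby

# Hypothetical set of ops the GPU delegate accepts. The real list depends on
# the TensorFlow Lite version and is not taken from this thread.
GPU_SUPPORTED = {"CONV_2D", "DEPTHWISE_CONV_2D", "ADD", "RELU"}


def partition(ops, supported=GPU_SUPPORTED):
    """Group consecutive ops by the device that would execute them."""
    def device_of(op):
        return "GPU" if op in supported else "CPU"

    return [(device, list(group)) for device, group in groupby(ops, key=device_of)]


# Illustrative op sequence, not the actual DeepLabV3 graph.
graph = ["CONV_2D", "RELU", "CUSTOM_OP_A", "CUSTOM_OP_B", "ADD"]

for device, ops in partition(graph):
    print(device, ops)
```

In this sketch the two unsupported ops end up in a CPU segment between two GPU segments, which is the sense in which unsupported ops "fall back to the cpu" while the rest of the graph stays on the delegate.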

The next set of errors (as per docs here: https://registry.khronos.org/OpenCL/sdk/1.0/docs/man/xhtml/clEnqueueReadBuffer.html) likely means that tensorflow is incorrectly passing an invalid buffer region to OpenCL, which will be difficult to chase down. The error is raised at either line 124 or 149 of `apq8096-tflite/tensorflow/tensorflow/lite/delegates/gpu/cl/cl_command_queue.cc`, if you would like to investigate further.

-

@Matt-Turi Thank you for your quick response. I will investigate further!

-

Hey @Maxim-Temp, quick update.

As of a few minutes ago, the latest voxl-tflite-server (v0.3.0) is now running with tensorflow v2.8.0 for apq8096. This means that you can now run any of the models that were included with qrb5165 (including Deeplabv3) on VOXL without dealing with these headaches. You can pull this from the dev apq8096 packages repo, or directly from here: http://voxl-packages.modalai.com/dists/apq8096/dev/binary-arm64/voxl-tflite-server_0.3.0_202207212215.ipk

-

@Matt-Turi Wow, thank you for updating me. I will try these models now.