Need help simulating .tflite YOLO models on my Linux machine.

-

Hi,

I'm new to VOXL. I have trained a YOLO-like model and have my yolo_custom.tflite model file. How can I simulate it for a VOXL 2 Mini environment?

I tried following the steps at https://docs.modalai.com/voxl-tflite-server/ but I am lost on how to set up and configure it, and on what the prerequisites are.

-

Hey Ajay, happy to try and help! I wrote a custom section just on making and deploying custom models here, can I ask what parts specifically you're struggling with?

Thanks,

Thomas

-

@thomas

Hey Thomas, thanks for the quick reply,

So is it possible to simulate the tests in an environment without having the actual device with us? I'm asking because you mentioned in another thread that flashing the SDK on the device is necessary. I don't have the actual device with me right now and was hoping I could somehow simulate the tflite models on my computer for testing purposes.

-

So it would certainly be best to have a device with you, as we don't currently officially support the ability to run voxl-tflite-server locally or within a Docker container, for example. You could potentially find a way to make this work, but it certainly isn't something that I've tested.

Now you can still create .tflite models on your computer and you can still use them for inference locally; there are some guides in Google's TFLite documentation for how to do this. But inside of voxl-tflite-server we do some pre/post-processing steps on the data, which you would ultimately need to emulate to get a good idea of prediction metrics. This local inference, however, won't be useful for determining things like operational framerates for the model. For that, you'll definitely need a device to see how much the GPU/NPU can hardware accelerate the model you've created.

Hope this helps, happy to answer any more questions you may have!

Thomas Patton
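
As a rough illustration of the local inference mentioned above, here is a minimal Python sketch that loads a .tflite file with TensorFlow's tf.lite.Interpreter and times it on the host CPU using random input. It assumes tensorflow and numpy are installed; the function names (summarize_latencies, time_local_inference) are hypothetical and not part of any VOXL tooling. It does not reproduce the pre/post-processing done inside voxl-tflite-server, and host timings say nothing about framerates on VOXL hardware.

```python
import statistics
import time


def summarize_latencies(latencies_ms):
    """Return mean/median latency in ms and the mean inferences per second."""
    mean_ms = statistics.mean(latencies_ms)
    return {
        "mean_ms": mean_ms,
        "median_ms": statistics.median(latencies_ms),
        "mean_fps": 1000.0 / mean_ms,
    }


def time_local_inference(model_path, n_runs=20):
    """Time a .tflite model on the host CPU with random input (hypothetical helper)."""
    # Imported lazily so summarize_latencies works without TensorFlow installed.
    import numpy as np
    import tensorflow as tf

    interpreter = tf.lite.Interpreter(model_path=model_path)
    interpreter.allocate_tensors()
    inp = interpreter.get_input_details()[0]
    out = interpreter.get_output_details()[0]

    # Random data only checks that the model loads and runs; it is not a real image.
    shape = tuple(int(d) for d in inp["shape"])
    rng = np.random.default_rng(0)
    if np.issubdtype(inp["dtype"], np.floating):
        data = rng.random(shape).astype(inp["dtype"])
    else:
        info = np.iinfo(inp["dtype"])
        data = rng.integers(info.min, info.max, size=shape,
                            dtype=inp["dtype"], endpoint=True)

    latencies_ms = []
    for _ in range(n_runs):
        start = time.perf_counter()
        interpreter.set_tensor(inp["index"], data)
        interpreter.invoke()
        interpreter.get_tensor(out["index"])  # raw output, no post-processing
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    return summarize_latencies(latencies_ms)
```

Calling time_local_inference("yolo_custom.tflite") would return a dict of latency figures for the host machine; treat it only as a check that the model loads and runs.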

-

@thomas

Thank you for providing the necessary details. Once I acquire the device/chip for testing, I would appreciate it if you could also provide me with a comprehensive guide detailing the steps required to simulate and test TensorFlow Lite models in the VOXL environment. I have the tflite files ready with me.

Thanks

-

We have a documentation page on how to do that here, give that a read and then we can talk about next steps!

Thomas Patton

-

@thomas

Hi thomas,

Hope you're doing well,

So I have received my VOXL chip, and have followed the steps to set up, install and test my chip on my MacBook. I am also able to launch the voxl-docker image successfully using voxl-docker -i voxl-cross. But I am completely lost as to how to launch and run my .tflite models from here onwards. I would appreciate it if you could guide me through this.

Thanks

-

Take a look at our developer bootcamp, you don't have to do the whole thing but it will get you used to interfacing with VOXL. Then when you feel ready check out the TFLite Server docs, these detail how to load your custom .tflite models onto VOXL.

Thanks,

Thomas

-

@thomas

Thanks for the above docs. I have gone through them extensively but still don't find the instructions to deploy my custom tflite models on the VOXL 2 Mini chip. If possible, can you please help me with this? I would greatly appreciate it.

-

Here's the docs section on custom models, give it a try and let me know how I can help.

https://docs.modalai.com/voxl-tflite-server/#custom-models

Thomas

-

@thomas

Thanks for this,

If you don't mind, can we connect personally for a few minutes? Also, I wanted to ask: will we be able to simulate and benchmark the YOLO models without a camera sensor attached to the chip? If a camera is needed, is there any way to test it using my MacBook's camera?

Regards

-

You actually may be able to benchmark voxl-tflite-server without an image sensor attached. The way we do this is with a tool called voxl-logger, which lets us fully capture the inputs from all the pipes over a given time period. If you had a log created by voxl-logger, you could then use voxl-replay to replay the log and measure the performance of voxl-tflite-server over that interval. It would be much easier to simply use your VOXL with an image sensor, though. If you want to go down the logger path, I can try to get a log together for you that you can replay. You definitely cannot test it with your MacBook camera.

Thanks,

Thomas

-

@thomas

Yes, thanks for this,

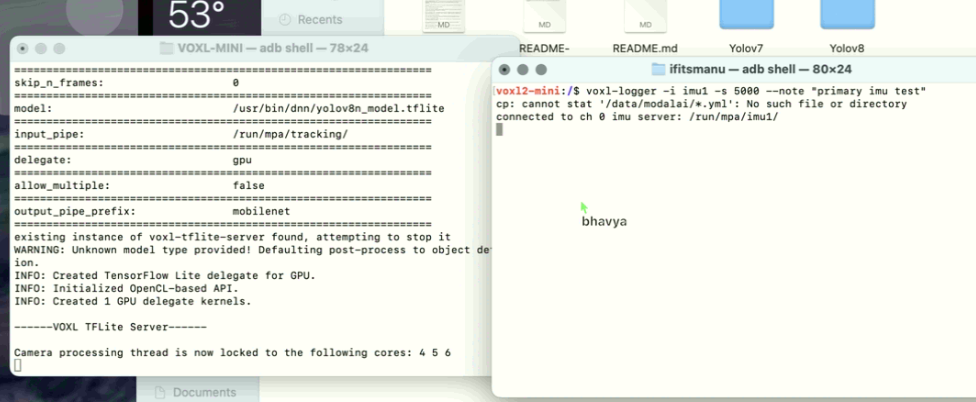

I would appreciate it if you could share how to use the voxl-logger tool. Right now, when I launch the tflite server after configuring it with my custom tflite model, it just says:

=================================================================

skip_n_frames: 0

model: /usr/bin/dnn/yolov8n_model.tflite

input_pipe: /run/mpa/tracking/

delegate: gpu

allow_multiple: false

output_pipe_prefix: mobilenet

existing instance of voxl-tflite-server found, attempting to stop it

WARNING: Unknown model type provided! Defaulting post-process to object detection.

INFO: Created TensorFlow Lite delegate for GPU.

INFO: Initialized OpenCL-based API.

INFO: Created 1 GPU delegate kernels.

------VOXL TFLite Server------

Camera processing thread is now locked to the following cores: 4 5 6

And nothing afterwards. So I believe it's running, but I actually want to see the numbers, i.e. the benchmark performance of the model. How do I do that?

Thanks a lot again,

Bhavya

-

With all services on VOXL you should always run with the -h option if you're curious for more information on ways to use the service. In the case of voxl-tflite-server, we have both debug and timing options that can be specified to see printed outputs or timing benchmarks respectively.

Thomas

-

@thomas

Hi thomas,

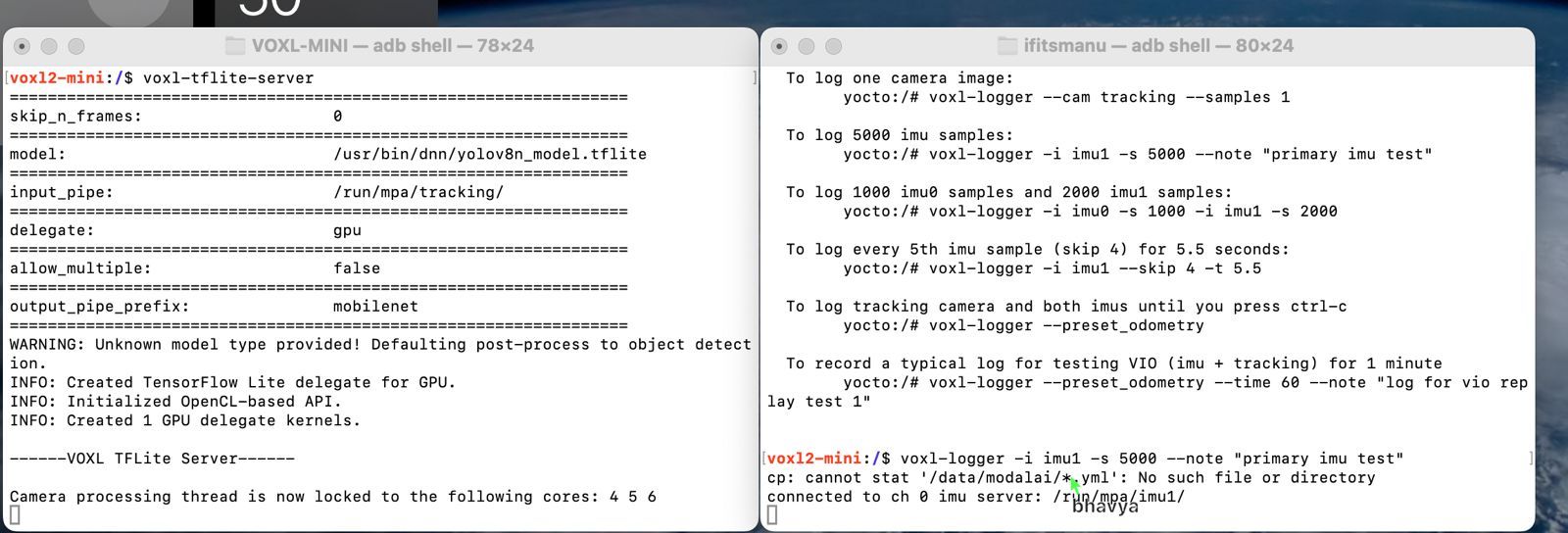

Okay, so I actually did do those steps. Using that info, I learned that to benchmark the model I need to run voxl-tflite-server

and then in another terminal I did:

voxl-logger -i imu1 -s 5000 --note "primary imu test"

but then I get this error:

-

Really strange that you don't have the /data/modalai/ dir, as that's where we store the sku.txt and other important info. Maybe try calibrating the IMU? https://docs.modalai.com/calibrate-imu/

Thomas

-

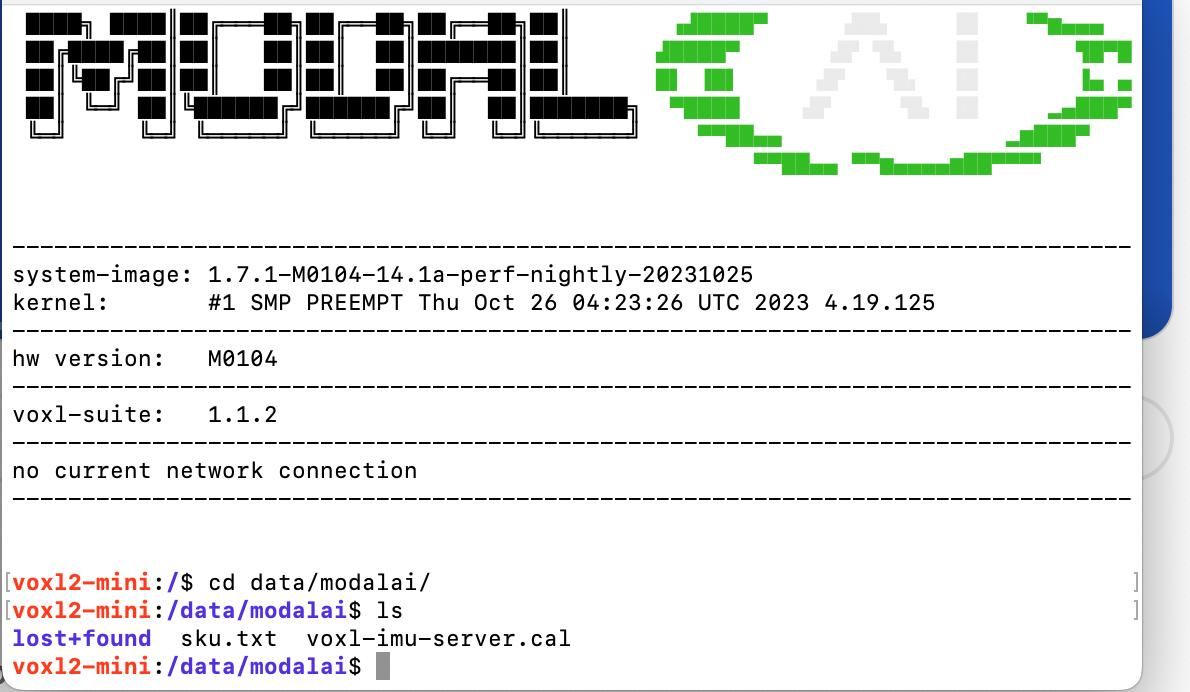

@thomas

Yes, so we have the directory present, but it only has the sku.txt file in it. I think we get the error because some .yml file is missing. So what should we do to get that file into the directory?

-

@thomas

Hi thomas,

So I did try calibrating the IMU sensor using voxl-calibrate-imu, but I still don't see the .yml file in the /data/modalai directory.

So I'll just share a brief overview of the steps I followed to get to the current point:

- I connected VOXL (with only the default sensors; I did not attach any sensor separately) to my computer.

- I created a deb package to deploy to VOXL by placing my custom tflite files in the misc_files/usr/bin/dnn directory of voxl-tflite-server, with corresponding changes in the voxl-configure-tflite file in the scripts/qrb5165 folder. I then followed the build and deploy commands to deploy the package to VOXL.

- I then used adb shell to get into my VOXL and do see all the tflite files present there. I then ran the voxl-configure-tflite command to load my custom tflite model.

- Following that, I simply executed the voxl-tflite-server command.

- In another terminal, I then tried benchmarking with the voxl-logger command as shown and am now getting the error.

Thanks

-

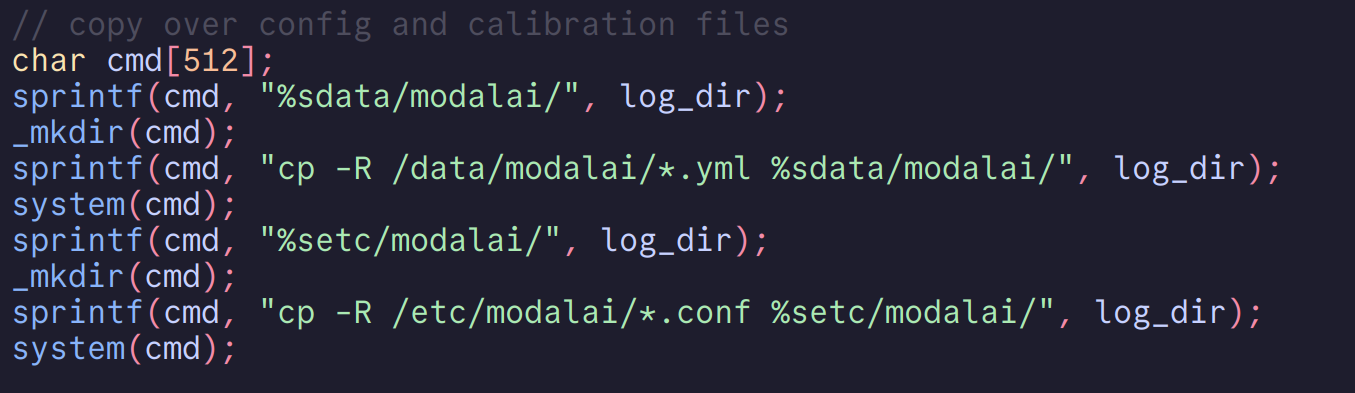

Okay yeah I think I figured it out. Take a look at these lines from the voxl-logger code:

You can see that voxl-logger is just trying to copy over anything that matches *.yml. So while I work on a fix for this, could you just run touch /data/modalai/something.yml inside your VOXL? This should remove that error.

Keep me posted,

Thomas
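
The workaround above can also be written as a small check-then-create script. This is only a sketch, assuming Python 3 is available wherever it runs; ensure_yml_placeholder is a hypothetical helper name, and the default path and file name are simply the ones from the touch command above. It creates the empty file only when the directory has no .yml file yet.

```python
from pathlib import Path


def ensure_yml_placeholder(directory="/data/modalai", name="something.yml"):
    """Create an empty .yml file in `directory` if none exists.

    Returns True if a placeholder was created, False if a .yml was already there.
    """
    d = Path(directory)
    # Mirror the *.yml pattern mentioned above: any match means nothing to do.
    if any(d.glob("*.yml")):
        return False
    (d / name).touch()
    return True


# Example call (run on the VOXL itself, where /data/modalai exists):
# ensure_yml_placeholder()
```

Once a real .yml file lands in the directory, the empty placeholder should no longer be needed.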