ROS Bag File Example

-

Hello,

I'm evaluating the ROS application of the VOXL output, in and via ROS.

I'd really like to see a ROS Bag file that has the VOXL topics for the mapping so I can evaluate. Is this something you could provide or post to Git, please?

Thanks

John

-

Hi John,

We currently publish our data through voxl-mpa-to-ros, which pulls data from our Modal Pipe Architecture services and publishes it to ROS. Currently it publishes cameras (as well as some debug overlays, the stereo disparity map, etc.), IMUs, VIO data, and pointclouds that come from depth from stereo or our TOF sensor. If you can give a bit more insight into what you're trying to use this for, I can point you to additional places that could be helpful for your purposes.

-

@Alex-Gardner

https://youtu.be/FpGJ77xcb9w?t=21

So this looks like it's publishing a 3D map (TOF or Stereo).

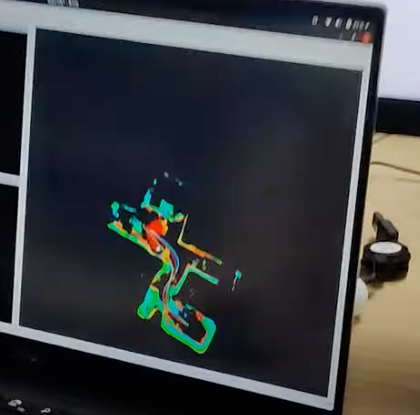

We've previously been using ROS bag files from here: https://fpv.ifi.uzh.ch/datasets/ - running on the Qualcomm dataset - (screen attached).

Our understanding had been that you appear to be using Octomap / VoxBlox - here to display the PCL output? https://youtu.be/v9hG6LZdmHM?t=12

"All of the SLAM algorithms shown in this video are running on board the VOXL. The algorithms employed are a combination of ROS, OMPL, and Qualcomm's VIO."

Our hope was that we would be able to expose that 3D mapping layer (which appears to be at a 5 cm-ish resolution).

From here we wanted to then tinker with this via some of our own systems to go from the Voxel engine into Unity, where we can perform a myriad of other tasks. The basic setup for the POC is:

UAV > Onboard SLAM > ROS Node (UAV) > ROS Node Receiver > Local / Server Receiver side > Unity.

For the evaluation now we want to go:

ROS Bag file (Odom, PCL / Map output) > Local Server > Unity.

Happy to organise a quick conversation if that's unclear, if that's something you could assist with - for our part we're an end consumer of this data.

Thanks

John

-

What I'm trying to ascertain is whether you are already generating an equivalent to this on the UAV, as per the above-linked video (not necessarily the Octomap specifically, but a PCL cloud / occupancy cloud that can be defined)?

E.g. http://wiki.ros.org/octomap_server

octomap_point_cloud_centers (sensor_msgs/PointCloud2)

The centers of all occupied voxels as point cloud, useful for visualization. Note that this will have gaps as the points have no volumetric size and OctoMap voxels can have different resolutions! Use the MarkerArray topic instead.

map (up to fuerte) / projected_map (since fuerte) (nav_msgs/OccupancyGrid)

Downprojected 2D occupancy map from the 3D map. Be sure to remap this topic if you have another 2D map server running. New / changed in octomap_mapping 0.4.4: The topic is now projected_map by default to avoid collisions with static 2D maps.

-

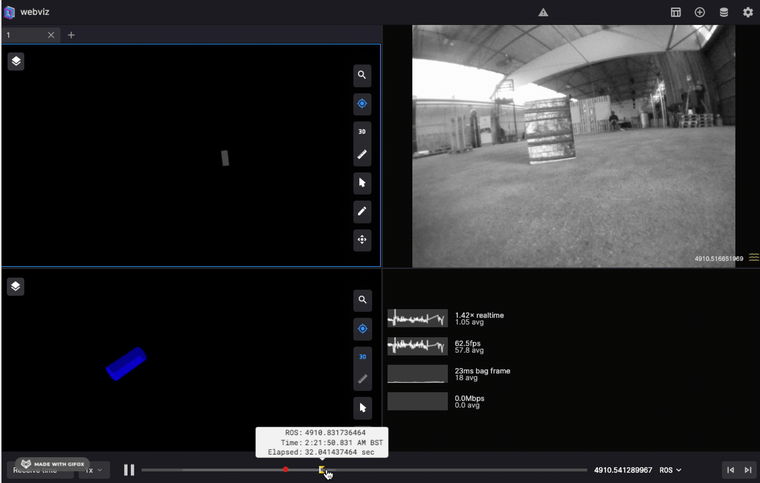

Our current mapping solution (the first picture you posted / the video posted last Friday) uses voxblox, though it is a POC on our end, and the mapping solution that we've been working on and will release (timeline a couple of months out) will be significantly different. We've been modifying voxblox to not use ROS (so that it's more lightweight; this has mostly been designed as a tool for comms-denied autonomous flying). We're still working out how we want to export the map, both in real time and for saving to disk. Our current visualization is going to be through voxl-portal, which will be sending the mesh in a format similar to a GLTF but in a much simpler, binary form where the data is essentially a metadata header followed by a list of vertices with xyz/rgb. We'll also have commands available to save the signed distance field representation of the map (which is how voxblox represents the data), or to export to an occupancy grid, but these likely will not be published over a network by default.

This mapping software is still a few months out but it, like the rest of our software, will be open source with modify/build instructions if you want to play around with any/all of it.

-

@Alex-Gardner

Hi Alex - that's very helpful.

Since we're using Unity (via ROS Bridge > Unity OR ROS > Server Special Sauce > Unity) we're not wedded to ROS - but rather the generated output.

Since you need a tool to convert Odom / PCL data / Vox data into something instantiable, would it be possible to have a little more info, or any confirmed aspects, on the VoxBlox specification/output and what gets generated via VoxBlox currently?

We already have to "treat" the data a bit and apply some systems to it. I'd love to try and steal a march on this with my dev team, so any of the "known knowns" would be helpful. We're targeting October for a POC - even if that's just the output (rather than an E2E system).

If you're moving towards a native ModalAI VoxBlox fork - especially if it's tailored to an embedded system - I'd love to see if we could access even that current VoxBlox bag data, since we're really only concerned with the output (and we get what we're given, as it were), with a view that in the intervening months this work will be released, and I gather the native implementation of the optional sensors is the same.

Given our hop back from Octomap - based on this performance scale: https://voxblox.readthedocs.io/en/latest/pages/Performance.html#runtime-comparison-to-octomap

Have you hit a throttle yet on the size / ray length based on, say, the development drones here: https://www.modalai.com/collections/development-drones

Are there any early indicators around the early "real-time" limitations, given an indoor / scanning-range-constrained use case? It's easy for us to up the Hz rate on the playback - but the sensor processing is where we've had issues with speed before.

It would also be very helpful to know if, based on the above, you recommend a specific model of development UAV. I saw you have a pre-order model - is there a timescale for this too? Also, based on the assumption that TOF / Stereo is still the principal VIO input - will the Vox update be applied backwards, i.e. allow us to upgrade from an existing dev drone? We are looking a lot at acquiring a Seeker: https://www.modalai.com/collections/robots/products/seeker?variant=39435414994995

My CEO has asked me to assemble some whole life costs - so unit + Sensors + Software engineering time. I wonder if you can point me on this.

Thanks for the very helpful answers! Also helping me look smart with my bosses

Happy to take this to another medium if needs be / mNDA etc.

-

Still in the somewhat early stages of development so this may be subject to change, but the I/O format will be through our Modal Pipe Architecture, publishing posix pipes to /run/mpa.

    //server pipes
    #define ALIGNED_PTCLOUD_NAME        "voxl_mapper_aligned_ptcloud"
    #define ALIGNED_PTCLOUD_LOCATION    (MODAL_PIPE_DEFAULT_BASE_DIR ALIGNED_PTCLOUD_NAME "/")
    #define ALIGNED_PTCLOUD_CH          0

    #define TSDF_VOXELS_NAME            "voxl_mapper_tsdf_voxels"
    #define TSDF_VOXELS_LOCATION        (MODAL_PIPE_DEFAULT_BASE_DIR TSDF_VOXELS_NAME "/")
    #define TSDF_VOXELS_CH              1

    #define TSDF_SURFACE_PTS_NAME       "voxl_mapper_tsdf_surface_pts"
    #define TSDF_SURFACE_PTS_LOCATION   (MODAL_PIPE_DEFAULT_BASE_DIR TSDF_SURFACE_PTS_NAME "/")
    #define TSDF_SURFACE_PTS_CH         2

    #define PLAN_NAME                   "voxl_planner"
    #define PLAN_LOCATION               (MODAL_PIPE_DEFAULT_BASE_DIR PLAN_NAME "/")
    #define PLAN_CH                     3

    #define MESH_NAME                   "voxl_mapper_mesh"
    #define MESH_LOCATION               (MODAL_PIPE_DEFAULT_BASE_DIR MESH_NAME "/")
    #define MESH_CH                     4

This is the current list of outputs that will be published and available. We're still working out the format of the TSDF outputs, but I can tell you more about the others.
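For anyone reading along, here is a minimal sketch of how the defines above turn into pipe directories: the `*_LOCATION` macros rely on C's adjacent string literal concatenation. It assumes `MODAL_PIPE_DEFAULT_BASE_DIR` is `"/run/mpa/"` with a trailing slash (the thread only says pipes are published under /run/mpa). The `pipe_entry_t` table and `mapper_pipe_location()` helper are hypothetical names made up for illustration, not part of libmodal_pipe.

```c
#include <stddef.h>

/* Assumption: base dir value, including the trailing slash. */
#ifndef MODAL_PIPE_DEFAULT_BASE_DIR
#define MODAL_PIPE_DEFAULT_BASE_DIR "/run/mpa/"
#endif

/* Two of the posted pipe defines, copied from the thread. */
#define ALIGNED_PTCLOUD_NAME     "voxl_mapper_aligned_ptcloud"
#define ALIGNED_PTCLOUD_LOCATION (MODAL_PIPE_DEFAULT_BASE_DIR ALIGNED_PTCLOUD_NAME "/")
#define ALIGNED_PTCLOUD_CH       0

#define MESH_NAME                "voxl_mapper_mesh"
#define MESH_LOCATION            (MODAL_PIPE_DEFAULT_BASE_DIR MESH_NAME "/")
#define MESH_CH                  4

/* Hypothetical lookup table: channel number -> pipe directory. */
typedef struct {
    int         ch;
    const char *location;
} pipe_entry_t;

static const pipe_entry_t MAPPER_PIPES[] = {
    { ALIGNED_PTCLOUD_CH, ALIGNED_PTCLOUD_LOCATION },
    { MESH_CH,            MESH_LOCATION },
};

/* Hypothetical helper: returns the pipe directory for a channel,
 * or NULL if the channel is not in the table. */
const char *mapper_pipe_location(int ch)
{
    size_t n = sizeof(MAPPER_PIPES) / sizeof(MAPPER_PIPES[0]);
    for (size_t i = 0; i < n; i++) {
        if (MAPPER_PIPES[i].ch == ch) {
            return MAPPER_PIPES[i].location;
        }
    }
    return NULL;
}
```

Under that base-dir assumption, `mapper_pipe_location(MESH_CH)` would give `/run/mpa/voxl_mapper_mesh/`, which is the directory a client would look in for the mesh pipe.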

-

The aligned pointcloud is mostly a visualization tool that transforms the most recent pointcloud that it integrated to match the drone's orientation. It uses a standard libmodal_pipe pointcloud structure.

-

The planner outputs a polynomial path (the format of which can be found here). This header is currently pasted in the vvpx4 and mapper projects, but will most likely be merged into the interfaces file in libmodal_pipe when we release this.

-

The mesh output is using a binary format of our own creation somewhat similar to a GLTF but not human-readable and thus much smaller. It will be published over the web through voxl-portal by default. The mesh is meant to have the ability to publish updates, not the entire mesh each time (though there is a command that can be given to the server to republish the whole mesh). The mesh is divided into "blocks", where the mapper maintains a list of which ones have been changed since the last publish, and then only republishes those blocks. The output will follow the format of:

    {
        Mesh metadata    //(timestamp, how many blocks, size_bytes, etc) in format: mesh_metadata_t
        {
            //Array of metadata.num_blocks of the following:
            Block metadata    //position of block, number of vertices in block, in format: mesh_block_metadata_t
            {
                //Array of block_metadata.num_vertices of the following:
                Vertex Data    //xyz,rgb, in format: mesh_vertex_t
            }
        }
    }

The structs have definitions that are subject to change but are currently:

    #define MESH_MAGIC_NUMBER (0x4d455348) //magic number, spells MESH in ascii

    // TODO: put these in MPA somewhere
    typedef struct mesh_metadata_t {
        uint32_t magic_number;  ///< Unique 32-bit number used to signal the beginning of a VIO packet while parsing a data stream.
        uint32_t num_blocks;    ///< Number of blocks being sent
        int64_t  timestamp_ns;  ///< timestamp at the middle of the frame exposure in monotonic time
        uint64_t size_bytes;    ///< Total size of following data (num_blocks number of blocks, each containing metadata and array of vertices)
        double   block_size;    ///< Length of the edges of a block in meters
        uint64_t reserved[12];  ///< Reserved fields for later use, total size of struct 64 bytes
    } mesh_metadata_t;

    typedef struct mesh_block_metadata_t {
        uint16_t index[3];
        uint16_t num_vertices;
    } block_metadata_t;

    typedef struct mesh_vertex_t {
        uint16_t x;
        uint16_t y;
        uint16_t z;
        uint8_t r;
        uint8_t g;
        uint8_t b;
    } mesh_vertex_t;

As for the limitations that you were asking about: in the ROS demo that we ran, we used 20 cm voxels with a ray length of 5 meters (the reliable range of the PMD TOF sensor that we use). We're hoping to either get the voxel size down a bit or (scary) modify the core algo of voxblox to allow a surface between two unoccupied voxels (i.e. a wall could fall between 2 voxels, and currently voxblox will backfill the voxel behind since it requires an occupied voxel) to allow for slightly higher definition walls.
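Going back to the mesh stream layout, here is a rough consumer-side sketch of how the nested format (metadata, then blocks, then vertices) could be walked. It is not ModalAI's parser. It assumes the sender writes the structs raw in native layout, so `sizeof` matches the wire size, and it keeps local copies of the posted structs. The block typedef is named `mesh_block_metadata_t` here to match the layout description, and `count_mesh_vertices()` is a hypothetical helper. One thing to watch: with the fields as posted, `mesh_metadata_t` works out to 128 bytes on typical 64-bit platforms rather than the 64 the comment mentions, and `mesh_vertex_t` is padded from 9 to 10 bytes.

```c
#include <stdint.h>
#include <stddef.h>
#include <string.h>

#define MESH_MAGIC_NUMBER (0x4d455348) /* spells MESH in ASCII */

/* Local copies of the structs posted in this thread (subject to change). */
typedef struct mesh_metadata_t {
    uint32_t magic_number;
    uint32_t num_blocks;
    int64_t  timestamp_ns;
    uint64_t size_bytes;
    double   block_size;
    uint64_t reserved[12];
} mesh_metadata_t;

typedef struct mesh_block_metadata_t {
    uint16_t index[3];
    uint16_t num_vertices;
} mesh_block_metadata_t;

typedef struct mesh_vertex_t {
    uint16_t x, y, z;
    uint8_t  r, g, b;
} mesh_vertex_t;

/* Hypothetical helper: walks one mesh message held in buf and returns the
 * total number of vertices, or -1 if the magic number is wrong or the
 * buffer is too short for the blocks it claims to contain. */
long count_mesh_vertices(const uint8_t *buf, size_t len)
{
    mesh_metadata_t meta;
    if (len < sizeof(meta)) return -1;
    memcpy(&meta, buf, sizeof(meta)); /* memcpy avoids unaligned reads */
    if (meta.magic_number != MESH_MAGIC_NUMBER) return -1;

    size_t off = sizeof(meta);
    long total = 0;
    for (uint32_t i = 0; i < meta.num_blocks; i++) {
        mesh_block_metadata_t blk;
        if (len - off < sizeof(blk)) return -1;
        memcpy(&blk, buf + off, sizeof(blk));
        off += sizeof(blk);

        /* Skip over this block's vertex array after checking it fits. */
        size_t vert_bytes = (size_t)blk.num_vertices * sizeof(mesh_vertex_t);
        if (len - off < vert_bytes) return -1;
        off += vert_bytes;
        total += blk.num_vertices;
    }
    return total;
}
```

A Unity-side importer would do the same walk but copy each vertex out instead of skipping it. Since only changed blocks are republished, the block `index` would presumably serve as the key for replacing previously received blocks.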

UAV-wise, we have done this once on a M500 drone, but we've been trying to build this with the primary use case being indoor mapping, and thus most of what we do with this is on a seeker. The seeker comes better equipped for this with the ability size-wise to fly through doors and arriving equipped with both the TOF sensor and the stereo pair (though we have so far been primarily using the TOF for mapping, the final mapping product will allow for either/both to be used).

Let me know if you have any other questions!

-


That's absolutely amazing! I like it a lot and makes a good deal of sense - thanks for the input on the UAV selection.

Much appreciated.

-

Hi there - is there somewhere to track the progress of the Voxl work? Or could you provide an update. After much debate finally got sign off on a UAV purchase. The FLIR integration is somewhat key - but wanted to see when this might come?

-

@BrandMetis-Width

Also this might be of interest to you - https://github.com/facontidavide/Bonxai

Not yet ROS integrated - but could be an excellent match.

Core implemented in < 1000 lines of code too.