VOXL 2 tflite GPU delegate fails on custom model


I downloaded the "CenterNet MobileNetV2 FPN Keypoints 512x512" model from the TF2 Object Detection Zoo and customized the voxl-tflite-server software to load this model and run inference on a set of images. A few of the model's operations are not supported by the GPU delegate; 120 operations are delegated to the GPU, and the remaining ones run on the CPU. The model runs inference on the input images with no issues.

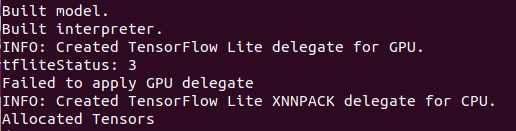

My problem: I have retrained the "CenterNet MobileNetV2 FPN Keypoints 512x512" model to detect a new class's bounding box and keypoints. I modified voxl-tflite-server to use this model and updated the voxl-tflite-server.conf file with the model's name. When I try to load the model, the GPU delegate fails to apply, as shown below.

The NNAPI delegate fails as well, with the same "error" as above. I looked into the meaning of TfLiteStatus = 3, which is kTfLiteApplicationError: "Delegation failed to be applied due to the incompatibility with the TfLite runtime, e.g., the model graph is already immutable when applying the delegate. However, the interpreter could still be invoked."

The only thing I could gather from this error description is that the model graph is immutable but the subgraphs can still be used, and that for some reason this prevents the GPU delegate from being applied.

The model was retrained using the Object Detection API. After performing model analysis (using the Python API), it is apparent that the two networks are slightly different: the retrained model contains layers that do not appear in the original. I'm not sure if or why that would cause the GPU delegate to fail.
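To make the "slightly different" comparison concrete, here is a minimal sketch of how the operator lists of the two .tflite files could be diffed. It assumes the Python `tf.lite.Interpreter` and relies on `_get_ops_details()`, which is a private, undocumented method and may change between TensorFlow releases. The function names are hypothetical helpers, not part of voxl-tflite-server.

```python
from collections import Counter


def list_op_names(model_path):
    """Return the operator name of every node in a .tflite model."""
    # Imported lazily so the diff helper below works without TensorFlow.
    import tensorflow as tf

    interpreter = tf.lite.Interpreter(model_path=model_path)
    # Assumption: _get_ops_details() is a private Interpreter method that
    # returns one dict per node, each with an "op_name" key.
    return [op["op_name"] for op in interpreter._get_ops_details()]


def diff_op_counts(baseline_ops, retrained_ops):
    """Map op name -> (baseline count, retrained count) where the counts differ."""
    base = Counter(baseline_ops)
    new = Counter(retrained_ops)
    return {
        name: (base[name], new[name])
        for name in sorted(set(base) | set(new))
        if base[name] != new[name]
    }
```

Calling `diff_op_counts(list_op_names("zoo_model.tflite"), list_op_names("retrained_model.tflite"))` (both file names are placeholders) would list only the operators whose counts changed, which narrows down where to look. Recent TensorFlow releases also provide `tf.lite.experimental.Analyzer.analyze(model_path=..., gpu_compatibility=True)`, which prints a warning for operators it considers GPU-incompatible.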

Things I've tried:

- Post-training quantization (float16)

- Other models (CenterNet HourGlass104 Keypoints 512x512 from the TF2 Detection Zoo)

- Specifying the input size prior to conversion from TensorFlow to TFLite (the input can be [1,?,?,3]; I tried specifying it as [1,256,256,3] or [1,512,512,3])

- A representative dataset during conversion (each image is a [1,height,width,3] uint8 tensor)

- Other versions of TensorFlow; I tried 2.13, 2.10, and 2.8 for both training and conversion
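Since the input can be [1,?,?,3], one check that might be worth running after conversion is whether any tensor in the .tflite file still has an unknown dimension. The sketch below assumes the Python `tf.lite.Interpreter` and its `get_tensor_details()` output, where `shape_signature` uses -1 for an unknown dimension. The helper names are hypothetical, and whether dynamic shapes are the actual cause of the delegate failure is an assumption, not something established here.

```python
def find_dynamic_tensors(tensor_details):
    """Return (name, shape_signature) for tensors with an unknown (-1) dimension."""
    dynamic = []
    for detail in tensor_details:
        # shape_signature may be a NumPy array; convert to plain ints.
        signature = [int(dim) for dim in detail["shape_signature"]]
        if -1 in signature:
            dynamic.append((detail["name"], signature))
    return dynamic


def report_dynamic_tensors(model_path):
    """Print every tensor in a .tflite model that has an unknown dimension."""
    # Imported lazily so find_dynamic_tensors can be used without TensorFlow.
    import tensorflow as tf

    interpreter = tf.lite.Interpreter(model_path=model_path)
    for name, signature in find_dynamic_tensors(interpreter.get_tensor_details()):
        print(f"{name}: {signature}")
```

If `report_dynamic_tensors("retrained_model.tflite")` (a placeholder file name) prints nothing, the fixed input size was at least recorded in the converted file. This only catches dimensions left unknown at conversion time; it does not detect tensors whose shape is decided while the model runs.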

I'm asking for advice on how to mitigate compatibility issues with the GPU on the VOXL 2. I suspect that the Object Detection API used for retraining is the culprit. Does anyone know how to train in a way that makes the resulting model compatible with the VOXL 2 GPU, or know of a comprehensive guide for retraining a model without the Object Detection API?

Hardware Specs:

- Board: VOXL 2

- OS: Ubuntu 18.04

- SDK: 0.95beta (for dual IMX412 camera capability)