TFLite Server with Custom-Trained YoloV5 Receives SIGINT When Run


My team is tasked with using a custom trained YoloV5 image classifier on the VOXL m500.

Here are the steps I took:

- I used the export command from the YoloV5 Forum to save our YoloV5 with custom weights as a TF SavedModel

- I used the following script, copying most of it from an archived version of the ModalAI Deep Learning Docs (for some reason the current webpage has nothing):

```python
import tensorflow as tf

saved_model_dir = '/directory/best_saved_model/'

# Convert the SavedModel to a TFLite model with float16 as a supported type
converter = tf.compat.v1.lite.TFLiteConverter.from_saved_model(saved_model_dir)
converter.use_experimental_new_converter = True
converter.allow_custom_ops = True
converter.target_spec.supported_types = [tf.float16]
tflite_model = converter.convert()

# Write the converted model to disk
with tf.io.gfile.GFile('model_converted.tflite', 'wb') as f:
    f.write(tflite_model)
```

- I exported the .tflite file to the drone and moved it to `/usr/bin/dnn`

- I renamed my custom model to `yolov5_float16_quant.tflite` because I was getting an error when trying to use a model with a different name

- I ran `voxl-configure-tflite` and selected yolov5 as the model to use

Results

- I can't see Tflite on the VOXL portal, but I can see the hires camera (which is being piped to TFlite)

- If I run `roslaunch voxl_mpa_to_ros voxl_mpa_to_ros`, it advertises both tflite and tflite_data, but gives me the following error: "Interface: tflite's data pipe disconnected, closing until it returns"

Troubleshooting

- I tried rebooting the drone

- I killed the

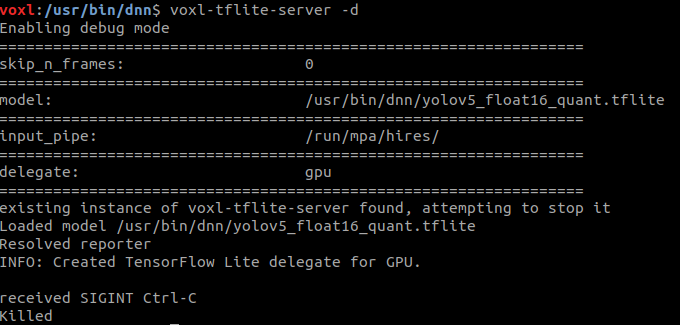

voxl-tflite-serverand tried to run it in debug mode which gave me the following output:

- I restored the stock YoloV5 classifier to the `yolov5_float16_quant.tflite` name and it worked perfectly

I don't know where to go from here. Any input is appreciated, thanks.
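
One check that might narrow this down is comparing the input and output tensors of the stock model with those of the custom one, since the stock file works under the same name and the server may be assuming its shapes and dtypes. Below is a minimal sketch of that comparison, not a confirmed diagnosis. It assumes TensorFlow is installed on the machine used for conversion and that a copy of the stock model was kept. The helper names (`tensor_signature`, `diff_signatures`, `load_signatures`, `compare_models`) and the file name `stock_yolov5.tflite` are hypothetical.

```python
def tensor_signature(details):
    """Reduce entries from Interpreter.get_input_details() or
    get_output_details() to a list of (shape, dtype_name) pairs."""
    signature = []
    for detail in details:
        shape = tuple(int(n) for n in detail["shape"])
        dtype = getattr(detail["dtype"], "__name__", str(detail["dtype"]))
        signature.append((shape, dtype))
    return signature


def diff_signatures(stock, custom):
    """Return a list of human-readable mismatches between two signatures."""
    problems = []
    if len(stock) != len(custom):
        problems.append(
            f"tensor count differs: stock={len(stock)}, custom={len(custom)}"
        )
    for i, (s, c) in enumerate(zip(stock, custom)):
        if s != c:
            problems.append(f"tensor {i}: stock={s}, custom={c}")
    return problems


def load_signatures(model_path):
    """Load a .tflite file and return its (input, output) signatures."""
    import tensorflow as tf  # imported lazily; only needed for real model files

    interpreter = tf.lite.Interpreter(model_path=model_path)
    return (
        tensor_signature(interpreter.get_input_details()),
        tensor_signature(interpreter.get_output_details()),
    )


def compare_models(stock_path, custom_path):
    """Compare two .tflite files; empty lists mean the signatures match."""
    stock_in, stock_out = load_signatures(stock_path)
    custom_in, custom_out = load_signatures(custom_path)
    return {
        "inputs": diff_signatures(stock_in, custom_in),
        "outputs": diff_signatures(stock_out, custom_out),
    }


# Hypothetical usage (file names are placeholders):
# print(compare_models("stock_yolov5.tflite", "model_converted.tflite"))
```

If either list comes back non-empty, for example a different input resolution or a different number of output tensors, that mismatch would be a plausible place to start looking. Matching signatures would not rule out other causes.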